By Super Neuro

July 13th this year marks the second anniversary of the release of Pokémon GO. Although the game has not launched in the Chinese market, that has not hurt the global user base of this classic IP. Pokémon GO is the fourth highest-grossing mobile game in the world, after "Honor of Kings", "QQ Speed" and "Fantasy Westward Journey", which makes it the highest-grossing mobile game from outside China.

A group of engineers with time on their hands has also managed to predict the outcome of battles between different Pokémon in "Pokémon GO" using machine learning models.

Pokémon GO launched in July 2016 and became a huge hit. The game was jointly developed by Nintendo, The Pokémon Company and Niantic, a company that began as an internal startup at Google.

The Pokémon Company is responsible for content support, game design and story content; Niantic is responsible for technical support and the AR technology behind the game; and Nintendo is responsible for game development and global distribution.

The game is built around AR technology: players use their mobile phones to capture Pokémon and battle them in the real world.

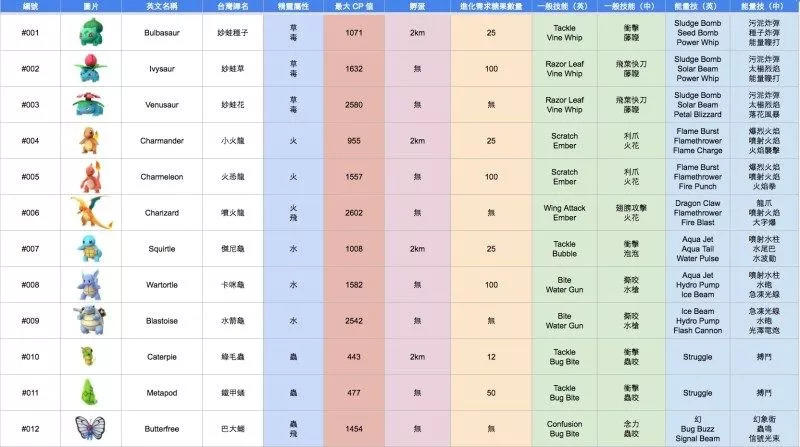

There are more than 800 Pokémon in the game, grouped into different types. Each Pokémon has its own attribute values, including attack, defense, hit points (HP), speed and so on.

Attribute values of some of these Pokémon

Golduck is the strongest dragon in Pokémon

These attribute values form the data set from which the machine learning model makes its predictions. The model is built in three steps: building a classifier, training the classifier, and testing the classifier.

Building a classifier

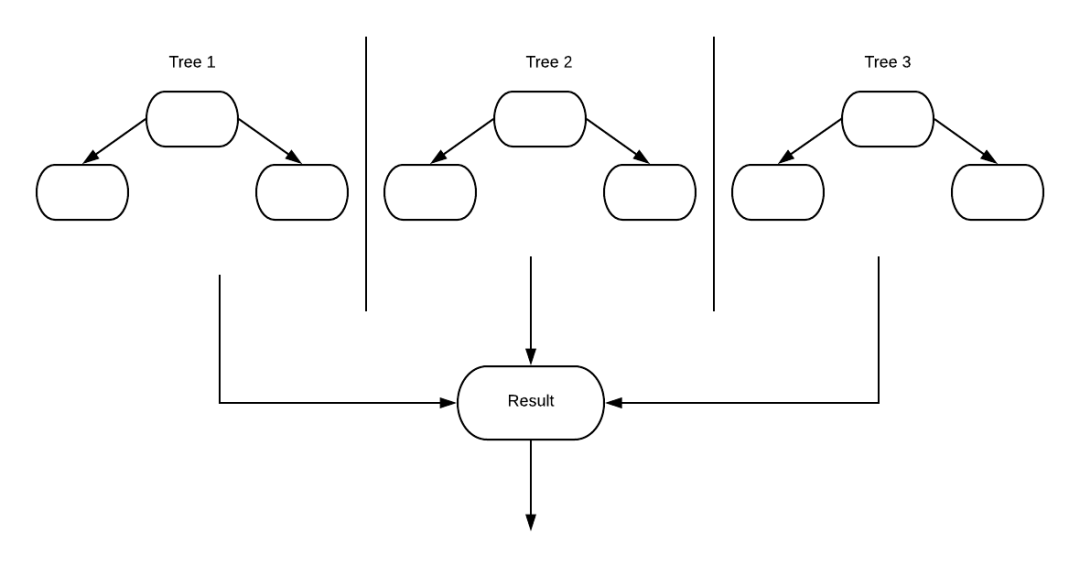

A classifier assigns data to categories, for example deciding whether an image shows a dog or a cat. One of the most commonly used is the random forest classifier, which trains multiple decision trees on sample data and combines their predictions.

Decision Tree Classifier

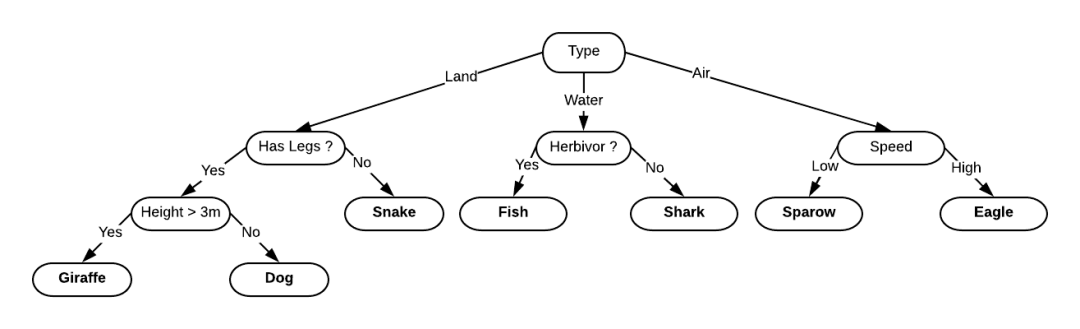

Let's talk briefly about decision trees. Suppose we are given some information about the type, height, weight, speed, etc. of an animal, and we are asked to infer whether the animal is a cat, a dog, or something else. This can be achieved through a decision tree.

As shown in the figure above, each node of the decision tree asks a question. The answer determines which subtree to follow, and the process repeats until we can tell whether the animal is a cat or a dog.

The strength of the decision tree classifier is that, given a data set, it can work out the right question to ask at each node (that is, the question with the highest information gain), so that each split of the tree makes the prediction more accurate.
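To make the traversal concrete, here is a minimal hand-written tree. The questions, thresholds and field names (`weight_kg`, `height_cm`) are invented for illustration and are not taken from the project.

```python
# Each internal node asks one yes/no question; leaves hold the final answer.
# Questions and thresholds are made up purely for illustration.
tree = {
    "question": lambda animal: animal["weight_kg"] > 10,
    "yes": {"answer": "dog"},
    "no": {
        "question": lambda animal: animal["height_cm"] < 30,
        "yes": {"answer": "cat"},
        "no": {"answer": "something else"},
    },
}


def classify(node, animal):
    """Walk from the root to a leaf, following the answer at each node."""
    while "answer" not in node:
        node = node["yes"] if node["question"](animal) else node["no"]
    return node["answer"]


small_animal = {"weight_kg": 4, "height_cm": 25}
large_animal = {"weight_kg": 30, "height_cm": 60}
```

Calling `classify(tree, small_animal)` follows the "no" branch at the root and the "yes" branch at the second node, while `classify(tree, large_animal)` stops after the first question.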
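One common way to score a candidate question is information gain: the drop in label entropy after the split. The sketch below assumes entropy-based gain (other criteria, such as Gini impurity, are also widely used), and the animal measurements are made up.

```python
import math
from collections import Counter


def entropy(labels):
    """Shannon entropy (in bits) of a list of class labels."""
    total = len(labels)
    counts = Counter(labels)
    return -sum((n / total) * math.log2(n / total) for n in counts.values())


def information_gain(rows, labels, question):
    """Entropy reduction obtained by splitting the data with a yes/no question."""
    yes = [lab for row, lab in zip(rows, labels) if question(row)]
    no = [lab for row, lab in zip(rows, labels) if not question(row)]
    if not yes or not no:
        return 0.0  # the question does not split the data at all
    weighted = (len(yes) * entropy(yes) + len(no) * entropy(no)) / len(labels)
    return entropy(labels) - weighted


# Hypothetical animals as (height_cm, weight_kg); values are invented.
animals = [(25, 4), (23, 5), (60, 30), (55, 25)]
species = ["cat", "cat", "dog", "dog"]

gain_weight = information_gain(animals, species, lambda a: a[1] > 10)
gain_height = information_gain(animals, species, lambda a: a[0] > 24)
```

Here the weight question separates cats from dogs perfectly, so it earns a higher gain than the height question, and a tree builder would pick it for this node.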

Random Forest Classifier

The random forest classifier is a collection of multiple decision tree classifiers. Compared with a single decision tree, it usually gives better results and is more practical.

Now let's build the random forest classifier. The n_estimators parameter sets the number of decision trees in the forest, here 100.
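The parameter name `n_estimators` matches scikit-learn's `RandomForestClassifier`, which is presumably what the project used. As a dependency-free sketch of the underlying idea, the toy forest below trains each tree on a bootstrap sample and predicts by majority vote. It is a deliberate simplification: each "tree" is a one-question stump, there is no random feature selection, and the data and the names `fit_stump`, `fit_forest` and `predict` are made up for illustration.

```python
import random
from collections import Counter


def fit_stump(rows, labels):
    """Fit a one-question tree: the feature/threshold with the fewest errors."""
    best = None
    for feature in range(len(rows[0])):
        for threshold in sorted({row[feature] for row in rows}):
            left = [lab for row, lab in zip(rows, labels) if row[feature] <= threshold]
            right = [lab for row, lab in zip(rows, labels) if row[feature] > threshold]
            if not left or not right:
                continue
            left_label = Counter(left).most_common(1)[0][0]
            right_label = Counter(right).most_common(1)[0][0]
            errors = (len(left) - left.count(left_label)) + (len(right) - right.count(right_label))
            if best is None or errors < best[0]:
                best = (errors, feature, threshold, left_label, right_label)
    if best is None:  # every sampled row is identical: always predict the majority
        majority = Counter(labels).most_common(1)[0][0]
        return (0, float("inf"), majority, majority)
    return best[1:]


def predict_stump(stump, row):
    feature, threshold, left_label, right_label = stump
    return left_label if row[feature] <= threshold else right_label


def fit_forest(rows, labels, n_estimators=100, seed=0):
    """Train n_estimators stumps, each on a bootstrap sample of the data."""
    rng = random.Random(seed)
    forest = []
    for _ in range(n_estimators):
        idx = [rng.randrange(len(rows)) for _ in rows]  # sample with replacement
        forest.append(fit_stump([rows[i] for i in idx], [labels[i] for i in idx]))
    return forest


def predict(forest, row):
    """Majority vote over all trees in the forest."""
    votes = Counter(predict_stump(stump, row) for stump in forest)
    return votes.most_common(1)[0][0]


# Made-up two-feature rows; the first feature separates the two classes.
rows = [(1, 5), (2, 3), (3, 8), (8, 4), (9, 7), (10, 2)]
labels = ["lose", "lose", "lose", "win", "win", "win"]
forest = fit_forest(rows, labels, n_estimators=100, seed=0)
```

Individual stumps trained on unlucky bootstrap samples can be wrong, but the vote across 100 of them smooths those errors out, which is the main reason a forest tends to beat a single tree.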

Training the classifier

The attribute values of the Pokémon serve as the training data (x_train). The classifier is trained on this data to minimize the loss between its predicted values and the actual values (y_train) on the training set.
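The article does not spell out how x_train and y_train are laid out. One plausible arrangement, shown here purely as an assumption, is to describe each battle by the attribute values of both Pokémon and to label it with whether the first one won. The names, stats and battle log below are placeholders, not real game data.

```python
# Hypothetical stats table: name -> (attack, defense, hp, speed). Values are made up.
stats = {
    "pokemon_a": (50, 40, 60, 70),
    "pokemon_b": (80, 30, 45, 55),
    "pokemon_c": (35, 90, 70, 20),
}

# Hypothetical battle log: (first, second, winner).
battles = [
    ("pokemon_a", "pokemon_b", "pokemon_b"),
    ("pokemon_c", "pokemon_a", "pokemon_c"),
    ("pokemon_b", "pokemon_c", "pokemon_b"),
]


def make_training_set(stats, battles):
    """Build one feature row and one label per recorded battle."""
    x_train, y_train = [], []
    for first, second, winner in battles:
        # Features: the first Pokémon's stats followed by the second's.
        x_train.append(list(stats[first]) + list(stats[second]))
        # Label: 1 if the first Pokémon won, 0 otherwise.
        y_train.append(1 if winner == first else 0)
    return x_train, y_train


x_train, y_train = make_training_set(stats, battles)
```

With the data in this shape, any classifier that accepts a list of feature rows and a list of labels can be trained on it.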

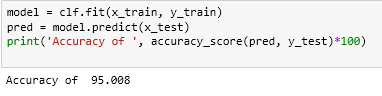

Training works by learning the relationships between the different attribute values. In the end, the random forest classifier reaches an accuracy of more than 95%.
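Accuracy here means the share of battles whose winner the model predicts correctly. A minimal sketch of that calculation, with made-up predictions rather than the project's actual results:

```python
def accuracy(predicted, actual):
    """Fraction of predictions that match the actual outcomes."""
    if len(predicted) != len(actual):
        raise ValueError("predicted and actual must have the same length")
    correct = sum(p == a for p, a in zip(predicted, actual))
    return correct / len(actual)


# Made-up outcomes: 1 = first Pokémon wins, 0 = second Pokémon wins.
predicted = [1, 0, 1, 1, 0, 1, 0, 0]
actual = [1, 0, 1, 0, 0, 1, 0, 0]
score = accuracy(predicted, actual)  # 7 of the 8 predictions match
```

For the figure to be meaningful, it should be measured on battles the classifier did not see during training.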

Testing the classifier

For the actual prediction, the input is still the attribute values of the Pokémon, and the random forest classifier predicts the result of each battle from these values.

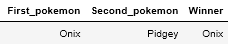

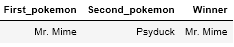

Onix, Pidgeon, Mr. Mime and Golduck are four characters in "Pokémon GO". The model predicts the results of two battles: Onix vs. Pidgeon and Mr. Mime vs. Golduck.

Prediction: Onix wins

Prediction: Mr. Mime wins

Battles like these have never appeared in "Pokémon GO" before. If you play the game, try them with your friends and see whether the results match the model's predictions.

The engineers have published the project on GitHub, where interested readers can take a look.