By Super Neuro

AI has already played Go and DOTA2, and now it is AI's turn to tackle the reasoning questions found in IQ tests.

Inspired by traditional intelligence tests, the DeepMind team recently launched an experiment to test AI’s reasoning ability. The results show that AI can not only understand some abstract concepts, but also infer new concepts.

Oops, AI gets another point~

No more chess, let’s test AI with reasoning questions

At the "International Conference on Machine Learning" held in Stockholm, Sweden in July this year, DeepMind published a paper stating that it is possible to measure the reasoning ability of neural networks through a series of abstract elements, just like testing human IQ.

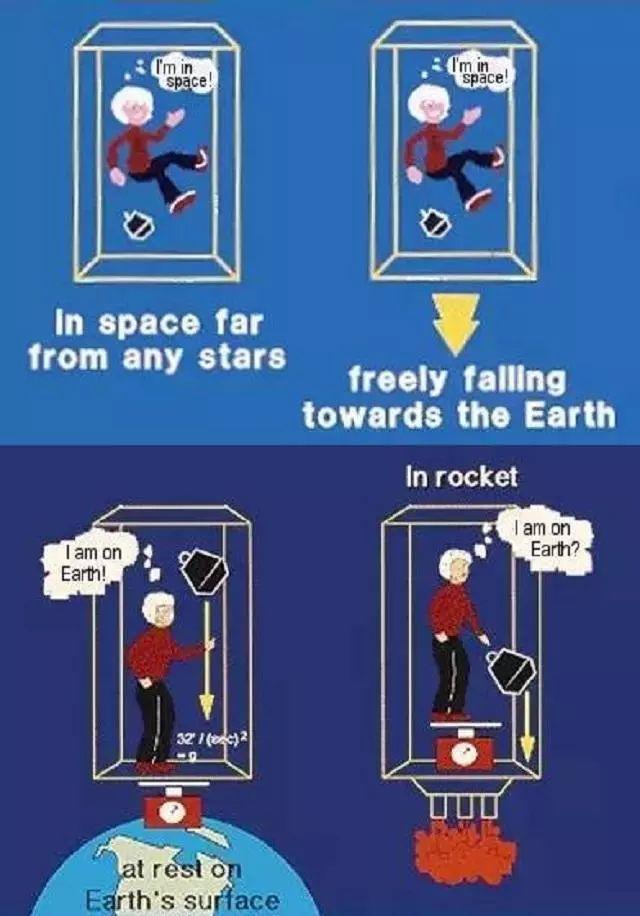

The researchers define this abstract reasoning ability as detecting patterns and solving problems at a conceptual level. Abstract reasoning is one of the hallmarks of human intelligence. A famous example is Einstein's deduction of the theory of general relativity through the elevator thought experiment.

In this experiment, Einstein reasoned that there is an equivalence between an observer falling under uniform acceleration and an observer in a uniform gravitational field.

It was this ability to connect these two abstract concepts that enabled him to derive the theory of general relativity and, based on it, to propose the curvature of space-time. This abstract ability is one of the characteristics of human intelligence.

Note: In "Relativity: The Special and the General Theory", Einstein gave an analogy. There is a person in a closed box who cannot see outside. In the absence of external gravity, an unknown creature pulls the box upward with an acceleration of 9.8 m/s^2. The person in the box feels the same as if the box were stationary on the ground. If he holds a ball in his hand, he cannot tell whether its weight is caused by the gravity of the earth or by the upward acceleration of g, so the gravitational mass is equal to the inertial mass.
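The last step of the note can be written out explicitly. This is only a sketch under Newtonian assumptions, and the symbols are not from the original note: $m_g$ and $m_i$ stand for the ball's gravitational and inertial mass, $F$ for the force the hand must exert to hold the ball, and $g = 9.8\ \mathrm{m/s^2}$.

$$
\begin{aligned}
\text{box at rest on the ground:}\quad & F = m_g\, g \\
\text{box pulled upward with } a = g:\quad & F = m_i\, a = m_i\, g \\
\text{the two cases feel identical:}\quad & m_g\, g = m_i\, g \;\Rightarrow\; m_g = m_i
\end{aligned}
$$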

In addition to processing data, AI also has the ability to abstract

So, does AI also have the ability to infer new concepts through some abstract elements? The DeepMind team's experiments prove that the answer is yes.

The team initially intended to rely on attributes such as shape, position, and line color of the training material to test the AI's reasoning ability, but the results were not ideal and it was difficult to accurately reflect the AI's reasoning ability.

Common intelligence test question types

The main reason is that if the training material is too plentiful or too specific, a neural network can lean on its powerful learning ability to pick up the regularities in the data and skip the reasoning altogether. The same is true for humans.

The research team's solution was to build a question generator that creates questions from a set of abstract elements: relations (such as progression) and attributes (such as color and size). The resulting question set is designed specifically to train and test AI's reasoning ability.
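To make the idea of a question generator concrete, here is a minimal Python sketch. It is not DeepMind's generator: the attribute values, the two relations, and every name in it (`ATTRIBUTES`, `RELATIONS`, `make_row`, `generate_question`) are assumptions made for illustration, and the panels are plain numbers rather than images.

```python
import random

# Hypothetical vocabularies, chosen only for illustration.
ATTRIBUTES = {
    "size": [1, 2, 3, 4, 5, 6],
    "color": [0, 1, 2, 3, 4, 5],  # e.g. grey levels encoded as integers
}
RELATIONS = ("progression", "constant")


def make_row(relation, values, step, rng):
    """Return three attribute values that obey `relation`."""
    if relation == "progression":
        # Values grow by a fixed step from panel to panel.
        starts = [v for v in values if v + 2 * step <= max(values)]
        start = rng.choice(starts)
        return [start, start + step, start + 2 * step]
    # "constant": the same value in all three panels.
    value = rng.choice(values)
    return [value] * 3


def generate_question(seed=None):
    """Build one 3x3 question with the last panel hidden."""
    rng = random.Random(seed)
    relation = rng.choice(RELATIONS)
    attribute = rng.choice(sorted(ATTRIBUTES))
    values = ATTRIBUTES[attribute]
    step = rng.choice([1, 2])
    rows = [make_row(relation, values, step, rng) for _ in range(3)]
    answer = rows[2][2]
    rows[2][2] = None  # the panel the solver must fill in
    # Three wrong options plus the right one, in random order.
    choices = rng.sample([v for v in values if v != answer], 3) + [answer]
    rng.shuffle(choices)
    return {
        "relation": relation,
        "attribute": attribute,
        "panels": rows,
        "choices": choices,
        "answer": answer,
    }
```

Calling `generate_question(seed=0)` returns a dictionary with the hidden relation, the attribute it acts on, the nine panels (the last one set to `None`), and four answer options. Because each question is defined by a (relation, attribute) pair, the mix of pairs in the training and test sets can be controlled directly.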

Most AI models performed well in the test, with some reaching an accuracy of 75%. The researchers found that a model's accuracy is strongly correlated with its ability to infer the abstract concepts behind the questions, and that its reasoning ability can be improved by adjusting the properties of the question set.

Image reasoning questions stump most AIs

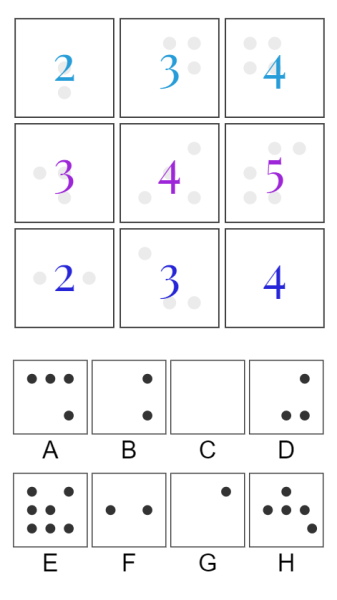

In contrast, visual reasoning is more difficult and requires AI to create a set of questions based on the elements shown in the image. However, the DeepMind team said that some AI models can already perform visual reasoning.

To achieve visual reasoning, these models need to infer and test abstract concepts, like logical operations and arithmetic progressions, from the raw pixels of an image, and apply these concepts to objects they have never observed before.
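The two kinds of concept named here, logical operations and arithmetic progressions, can be illustrated with a small symbolic solver. This is a sketch under strong assumptions, not how the neural models work: each panel is already reduced to an integer instead of raw pixels, and the names `RULES` and `solve` are hypothetical. The solver infers which rule holds on the complete rows, then tests each candidate answer against it.

```python
def is_progression(a, b, c):
    """Arithmetic progression: equal, non-zero step between panels."""
    return b - a == c - b and b != a


def is_xor(a, b, c):
    """Logical XOR: panels are bitmasks of filled cells, third = first ^ second."""
    return (a ^ b) == c


# Candidate rules, tried in this order.
RULES = {"progression": is_progression, "xor": is_xor}


def solve(complete_rows, partial_row, choices):
    """Infer the rule from the complete rows, then pick the choice that fits.

    Returns (rule_name, answer), or (None, None) if no rule gives a unique answer.
    """
    for name, rule in RULES.items():
        # Step 1: infer. Keep only rules that hold on every complete row.
        if not all(rule(*row) for row in complete_rows):
            continue
        # Step 2: test. Apply the inferred rule to the unfinished row.
        matches = [c for c in choices if rule(*partial_row, c)]
        if len(matches) == 1:
            return name, matches[0]
    return None, None


# A progression puzzle: each row increases by 2.
print(solve([[1, 3, 5], [2, 4, 6]], [3, 5], [6, 7, 8, 4]))

# An XOR puzzle: the third bitmask is the XOR of the first two.
print(solve([[0b101, 0b011, 0b110], [0b100, 0b001, 0b101]],
            [0b111, 0b010], [5, 7, 2, 0]))
```

The hard part for a neural network is everything this sketch assumes away: extracting the relevant attributes from pixels and discovering the candidate rules without being handed them.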

Figure 1: A visual reasoning test performed by a machine learning model designed by the DeepMind team

The entire test proves that neural networks can give AI the ability to reason, but this ability currently has major limitations, and even the current best Wild Relation Network (WReN) cannot completely solve this problem.

Limitations of AI Reasoning

This limitation mainly lies in the fact that it is difficult for neural networks to discover elements outside the problem set, which leads to reduced generalization ability during reasoning.

The research team wrote in a blog post: “Neural networks have good reasoning capabilities under certain conditions, but when the conditions change, their reasoning capabilities drop rapidly. In addition, the success of model reasoning is also related to many factors, such as the model’s architecture and whether it has been trained.”

This limitation may be addressed if we can find some ways to improve the generalization ability of the model and explore inductive biases that can be used in future models that are "rich in structure and generally applicable."

But are all engineers masochists?

From Go to DOTA2, humans are defeated by AI again and again. Is it really interesting?

Here’s a famous quote for you↓↓↓