Is Your Girlfriend Angry? Algorithms Understand Her Better Than Straight Men

There are two common ways for AI to judge a person's emotions: from facial expressions or from the voice. The former is relatively mature, while research on recognizing emotions from voice is developing rapidly. Recently, several research teams have proposed new methods for identifying emotions in users' voices more accurately.

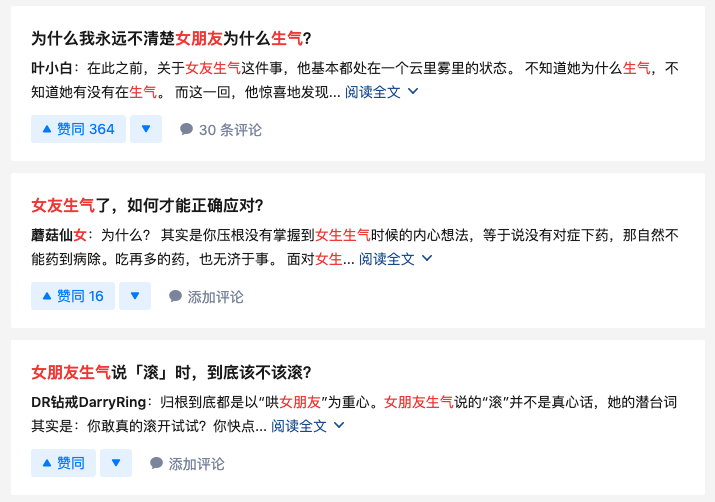

There are many posts on Zhihu about "How to tell if your girlfriend is angry." In reply to questions like this, some people answered: "The fewer the words, the bigger the problem." Others said: "If I'm really angry, I won't contact you for a month; if I'm only pretending to be angry, I'll act coquettishly and say 'I'm angry.'"

So when a girlfriend says "I'm not angry" or "I'm really not angry," it means "I'm very angry"; when she says "I'm angry," it means "I'm just acting spoiled, I'm not really angry, kiss me, hug me and lift me up high." This kind of emotional logic drives straight men crazy.

So how can you tell whether your girlfriend is angry? AI has reportedly made progress in identifying emotions by listening to voices, and its verdict may be more accurate than what a boyfriend comes up with after scratching his head for a long time.

Alexa voice assistant: cultivating a warm and caring personality

Amazon's voice assistant Alexa may be smarter than your boyfriend when it comes to sensing emotions.

After its latest upgrade this year, Alexa can analyze the pitch and volume of user commands, identify emotions such as happiness, joy, anger, sadness, irritability, fear, disgust, boredom and even stress, and respond accordingly.
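The article doesn't describe how Alexa measures these cues. As a hedged illustration of what "pitch" and "volume" can mean as numbers, here is a minimal NumPy sketch for a single short audio frame: loudness as RMS level in dB relative to an amplitude of 1.0, and pitch as the strongest autocorrelation peak within an assumed 60 to 400 Hz search range. The function names, the search range and the method itself are illustrative assumptions, not Amazon's implementation.

```python
import numpy as np


def rms_db(frame: np.ndarray) -> float:
    """Loudness proxy: RMS level in dB relative to an RMS of 1.0."""
    rms = np.sqrt(np.mean(frame ** 2))
    return float(20.0 * np.log10(rms + 1e-12))  # epsilon avoids log(0) on silence


def autocorr_pitch(frame: np.ndarray, sr: int, fmin: float = 60.0, fmax: float = 400.0) -> float:
    """Naive fundamental-frequency estimate (Hz): pick the autocorrelation peak
    among lags that correspond to frequencies between fmin and fmax."""
    x = frame - frame.mean()
    ac = np.correlate(x, x, mode="full")[len(x) - 1:]  # keep lags 0..N-1
    lag_min = int(sr / fmax)
    lag_max = min(int(sr / fmin), len(x) - 1)
    best_lag = lag_min + int(np.argmax(ac[lag_min:lag_max + 1]))
    return sr / best_lag


# Usage: a 40 ms frame (640 samples at 16 kHz) of a 200 Hz tone with amplitude 0.5.
sr = 16_000
n = 640
t = np.arange(n) / sr
tone = 0.5 * np.sin(2 * np.pi * 200 * t)
print(f"pitch ~ {autocorr_pitch(tone, sr):.1f} Hz, level ~ {rms_db(tone):.1f} dB")
```

Real systems use more robust pitch trackers; plain autocorrelation is chosen here only because it is short and easy to follow.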

For example, if a girl blows her nose and coughs while telling Alexa that she is a little hungry, Alexa will analyze the tone of her voice (weak, low) and the background noise (coughing, nose-blowing) and conclude that she is probably sick. Alexa will then offer some caring advice from a machine: Would you like a bowl of chicken soup, or some takeout? Or should it even order a bottle of cough syrup online and have it delivered to your door within an hour?

Isn’t this behavior more considerate than that of a straight boyfriend?

AI for emotion classification is nothing new, but the Amazon Alexa Speech team recently broke with traditional methods and published new research results.

Traditional methods are supervised: their training data is labeled according to the speaker's emotional state. Scientists from Amazon's Alexa Speech team took a different approach, which they introduced in a paper at the International Conference on Acoustics, Speech and Signal Processing (ICASSP): "Improving Emotion Classification through Variational Inference of Latent Variables" (http://t.cn/Ai0se57g).

Instead of training the system on a corpus of fully annotated emotion data, they fed an adversarial autoencoder (AAE) a public dataset of 10,000 individual utterances from 10 different speakers.

Their results showed that, in judging the valence (emotional value) of people's voices, the neural network improved accuracy by 4%. Thanks to the team's work, a user's mood or emotional state can be determined more reliably from their voice.

Viktor Rozgic, a co-author of the paper and senior applied scientist in the Alexa Speech group, explained that an adversarial autoencoder is a two-part model. The encoder learns to produce a compact (or latent) representation of the input speech that encodes all the properties of the training examples; the decoder reconstructs the input from that compact representation.
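To make the encoder/decoder split concrete, here is a minimal NumPy sketch of the autoencoder half only (the adversarial discriminator is omitted). It assumes linear encoder and decoder layers, synthetic 20-dimensional feature vectors, a 3-dimensional latent code, and plain gradient descent on mean-squared reconstruction error; none of these choices come from the paper.

```python
import numpy as np

rng = np.random.default_rng(0)

# Synthetic stand-in for per-utterance acoustic feature vectors:
# 256 utterances x 20 features driven by 3 hidden factors plus a little noise.
factors = rng.normal(size=(256, 3))
mixing = rng.normal(size=(3, 20))
X = factors @ mixing + 0.05 * rng.normal(size=(256, 20))

latent_dim = 3
W_enc = 0.1 * rng.normal(size=(20, latent_dim))  # encoder weights: 20 -> 3
W_dec = 0.1 * rng.normal(size=(latent_dim, 20))  # decoder weights: 3 -> 20


def encode(x: np.ndarray) -> np.ndarray:
    """Map inputs to the compact (latent) representation."""
    return x @ W_enc


def decode(z: np.ndarray) -> np.ndarray:
    """Reconstruct inputs from the latent representation."""
    return z @ W_dec


def recon_loss(x: np.ndarray) -> float:
    """Mean squared reconstruction error over all elements."""
    return float(np.mean((decode(encode(x)) - x) ** 2))


initial_loss = recon_loss(X)
lr = 0.05
for _ in range(2000):
    Z = encode(X)
    grad_out = 2.0 * (decode(Z) - X) / X.size  # dLoss / dReconstruction
    grad_dec = Z.T @ grad_out                   # dLoss / dW_dec
    grad_enc = X.T @ (grad_out @ W_dec.T)       # dLoss / dW_enc (uses W_dec before its update)
    W_dec -= lr * grad_dec
    W_enc -= lr * grad_enc
final_loss = recon_loss(X)
print(f"reconstruction MSE: {initial_loss:.3f} -> {final_loss:.3f}")
```

The 3-dimensional bottleneck forces the encoder to keep only the information the decoder needs to rebuild the input, which is the sense in which the latent code is "compact."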

The researchers' emotion representation consists of three network nodes, one for each of three emotion measures: valence; activation (whether the speaker is alert and engaged or passive); and dominance (whether the speaker feels controlled by the surrounding situation).

Training proceeds in three stages. In the first stage, the encoder and decoder are trained on unlabeled data. The second stage is adversarial training: an adversarial discriminator tries to distinguish the real representations produced by the encoder from artificial representations, and this stage is used to tune the encoder. In the third stage, the encoder is adjusted so that the latent emotion representation can predict the emotion labels of the training data.
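The article describes the three stages only in words. As a hedged sketch, the NumPy code below writes down one plausible objective per stage for tiny single-layer stand-ins of the encoder, decoder, discriminator and three-node emotion head. The layer shapes, the standard-normal prior used for the "artificial" representations (the usual choice in adversarial autoencoders), the loss functions and the synthetic data are all assumptions; the code computes each loss once and leaves out the optimization loop.

```python
import numpy as np

rng = np.random.default_rng(1)
n, d, k = 64, 20, 3  # utterances, input features, latent size (all assumed)

# Synthetic stand-ins: feature vectors X and emotion labels Y with one column
# per emotion node (valence, activation, dominance).
X = rng.normal(size=(n, d))
Y = rng.normal(size=(n, 3))

# Hypothetical single-layer parameters for each component.
W_enc = 0.1 * rng.normal(size=(d, k))   # encoder
W_dec = 0.1 * rng.normal(size=(k, d))   # decoder
w_disc = 0.1 * rng.normal(size=k)       # discriminator: logistic regression on codes
W_head = 0.1 * rng.normal(size=(k, 3))  # latent code -> 3 emotion nodes


def sigmoid(t):
    return 1.0 / (1.0 + np.exp(-t))


def bce(p, target):
    """Binary cross-entropy between predicted probabilities p and a 0/1 target."""
    eps = 1e-9
    return float(-np.mean(target * np.log(p + eps) + (1 - target) * np.log(1 - p + eps)))


Z = X @ W_enc  # latent codes produced by the encoder

# Stage 1 (unlabeled data): encoder + decoder minimize reconstruction error.
stage1_recon = float(np.mean((Z @ W_dec - X) ** 2))

# Stage 2 (adversarial): following the article's wording, encoder codes are the
# "real" class (1) and samples from an assumed standard-normal prior are the
# "artificial" class (0). The discriminator minimizes stage2_disc; the encoder is
# tuned to minimize stage2_enc, i.e. to make its codes indistinguishable from prior samples.
Z_prior = rng.normal(size=(n, k))
stage2_disc = bce(sigmoid(Z @ w_disc), 1.0) + bce(sigmoid(Z_prior @ w_disc), 0.0)
stage2_enc = bce(sigmoid(Z @ w_disc), 0.0)

# Stage 3 (labeled data): encoder + head minimize emotion-prediction error.
stage3_sup = float(np.mean((Z @ W_head - Y) ** 2))

for name, value in [("stage 1", stage1_recon), ("stage 2 disc", stage2_disc),
                    ("stage 2 enc", stage2_enc), ("stage 3", stage3_sup)]:
    print(f"{name}: {value:.3f}")
```

Only stage 3 needs labels, which is why most of the training signal in this kind of setup can come from unannotated speech.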

In experiments with hand-engineered, sentence-level feature representations that capture information about the speech signal, their AI system was 3% more accurate at assessing valence than a conventionally trained network.
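The article doesn't list the hand-engineered features used. A common way to build a sentence-level representation, assumed here purely for illustration, is to compute frame-level descriptors (such as energy or pitch) and then summarize each one over the whole utterance with statistics like mean, standard deviation, minimum and maximum. A minimal NumPy sketch, with a hypothetical function name:

```python
import numpy as np


def utterance_functionals(frame_feats: np.ndarray) -> np.ndarray:
    """Collapse a (num_frames, num_features) matrix into one fixed-length vector
    by summarizing each feature column with four statistics."""
    return np.concatenate([
        frame_feats.mean(axis=0),
        frame_feats.std(axis=0),  # population standard deviation (ddof=0)
        frame_feats.min(axis=0),
        frame_feats.max(axis=0),
    ])


# Hypothetical frame-level descriptors: 3 frames x 2 features (e.g. energy, pitch).
frames = np.array([[1.0, 10.0],
                   [3.0, 30.0],
                   [5.0, 20.0]])
vec = utterance_functionals(frames)
print(vec)  # layout: [mean_0, mean_1, std_0, std_1, min_0, min_1, max_0, max_1]
```

Because the output length depends only on the number of features, utterances of any duration map to vectors of the same size, which is what lets a conventional classifier consume them.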

They also showed that when the network was fed a sequence of acoustic properties representing 20-millisecond frames (short audio clips), performance improved by 4%.
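Feeding a network "a sequence of acoustic properties" over 20-millisecond frames starts with cutting the waveform into frames. The sketch below assumes 16 kHz audio, non-overlapping 20 ms frames by default, and per-frame log energy as the example property; the paper's actual features and hop size are not given here, and `frame_signal` and `log_energy` are hypothetical helpers.

```python
import numpy as np


def frame_signal(signal: np.ndarray, sr: int, frame_ms: float = 20.0, hop_ms: float = 20.0) -> np.ndarray:
    """Split a 1-D signal into frames of frame_ms, starting every hop_ms.
    Returns an array of shape (num_frames, frame_len); a trailing partial frame is dropped."""
    frame_len = int(round(sr * frame_ms / 1000))
    hop = int(round(sr * hop_ms / 1000))
    if len(signal) < frame_len:
        return np.empty((0, frame_len), dtype=signal.dtype)
    n_frames = 1 + (len(signal) - frame_len) // hop
    # Row i holds samples [i*hop, i*hop + frame_len).
    idx = hop * np.arange(n_frames)[:, None] + np.arange(frame_len)[None, :]
    return signal[idx]


def log_energy(frames: np.ndarray) -> np.ndarray:
    """One acoustic property per frame: log of the mean squared amplitude."""
    return np.log(np.mean(frames ** 2, axis=1) + 1e-10)


sr = 16_000
audio = np.random.default_rng(0).normal(scale=0.1, size=sr)  # 1 s of synthetic noise
frames = frame_signal(audio, sr)
sequence = log_energy(frames)  # one value per 20 ms frame, fed to the network in order
print(frames.shape, sequence.shape)  # prints (50, 320) (50,)
```

Passing `hop_ms=10.0` gives the overlapping frames that many speech front ends use; the sequence then has 99 entries for the same one-second clip.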

MIT lab builds neural network that can sense anger in 1.2 seconds

Amazon isn't the only company working on better voice-based emotion detection. Affectiva, a company spun out of the MIT Media Lab, recently demonstrated a neural network called SoundNet that can classify anger from audio data in as little as 1.2 seconds (just over the time it takes humans to perceive anger), regardless of the speaker's language.

Affectiva researchers describe the system in a new paper, "Transfer Learning From Sound Representations For Anger Detection in Speech" (https://arxiv.org/pdf/1902.02120.pdf). It builds on voice and facial data to create emotional profiles.
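The article doesn't spell out the transfer-learning recipe. The simplest form of the idea is sketched below under loudly stated assumptions: a frozen, "pretrained" feature extractor (here just a fixed random projection plus ReLU, a hypothetical stand-in for SoundNet's layers) produces features, and only a new logistic-regression head for angry vs. not angry is trained, on synthetic data. Affectiva's actual architecture, fine-tuning strategy and data are not reproduced here.

```python
import numpy as np

rng = np.random.default_rng(42)

# Hypothetical "pretrained" feature extractor: a fixed random projection + ReLU
# standing in for the lower layers of a pretrained audio network. It stays frozen.
W_frozen = rng.normal(size=(50, 16)) / np.sqrt(50)
W_frozen_before = W_frozen.copy()


def extract(x: np.ndarray) -> np.ndarray:
    return np.maximum(x @ W_frozen, 0.0)


# Synthetic clips (50 raw features each) with binary labels: 1 = angry, 0 = not angry.
X = rng.normal(size=(400, 50))
feats = extract(X)
scores = feats @ rng.normal(size=16)
y = (scores > np.median(scores)).astype(float)


def sigmoid(t):
    return 1.0 / (1.0 + np.exp(-t))


def log_loss(w, b):
    p = sigmoid(feats @ w + b)
    eps = 1e-9
    return float(-np.mean(y * np.log(p + eps) + (1 - y) * np.log(1 - p + eps)))


# Only the new classification head (w, b) is trained; W_frozen is never updated.
w, b = np.zeros(16), 0.0
initial_loss = log_loss(w, b)
lr = 0.5
for _ in range(500):
    p = sigmoid(feats @ w + b)
    w -= lr * feats.T @ (p - y) / len(y)
    b -= lr * float(np.mean(p - y))
final_loss = log_loss(w, b)
accuracy = float(np.mean((sigmoid(feats @ w + b) > 0.5) == (y == 1.0)))
print(f"log loss {initial_loss:.3f} -> {final_loss:.3f}, training accuracy {accuracy:.2f}")
```

Freezing the extractor means a small labeled anger dataset only has to fit a handful of parameters, which is the practical appeal of transfer learning when emotion-labeled speech is scarce.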

To test the model's generalizability, the team evaluated the English-trained model on Mandarin speech emotion data (the Mandarin Affective Speech Corpus, or MASC). The model not only generalized well to English speech data but also worked well on the Chinese data, albeit with a slight drop in performance.

“Anger recognition has a wide range of applications, including conversational interfaces and social robots, interactive voice response (IVR) systems, market research, customer agent assessment and training, and virtual and augmented reality,” the team said.

Future work will draw on other large public corpora to train AI systems for related speech-based tasks, such as recognizing other types of emotions and affective states.

Israeli app recognizes emotions: accuracy rate 80%

Israeli startup Beyond Verbal has developed an app called Moodies, which records the speaker's voice through a microphone and determines the speaker's emotional characteristics after about 20 seconds of analysis.

Although speech analysis experts acknowledge that speech and emotion are correlated, many question the accuracy of such real-time measurements: the voice samples these tools collect are very limited, and a real analysis might require collecting samples over several years.

“With the current state of cognitive neuroscience, we simply don’t have the technology to truly understand a person’s thoughts or emotions,” said Andrew Baron, assistant professor of psychology at Columbia University.

However, Dan Emodi, vice president of marketing at Beyond Verbal, said that Moodies is the product of more than three years of research and that, based on user feedback, the app's analysis is about 80% accurate.

Beyond Verbal says Moodies can be used for emotional self-diagnosis, by customer service centers to manage customer relations, and even to detect whether job applicants are lying. Of course, you can also take it on a date to see whether the other person is really interested in you.

Voice emotion recognition still faces challenges

Although many technology companies have researched this area for years and achieved good results, the technology still faces many challenges, as Andrew Baron's doubts above suggest.

Just as a girlfriend's calm "I'm not angry" doesn't mean she really isn't angry, a single utterance can carry several emotions at once. The boundaries between emotions are also hard to draw: which one is dominant at any given moment?

Not all tones are obvious and intense; expressing emotions is a highly personalized matter that varies greatly depending on the individual, environment, and even culture.

In addition, a mood may last a long time, yet shift rapidly within that period. Should an emotion recognition system detect long-term emotions or short-term ones? For example, someone who has lost his job may feel briefly happy when friends show concern, but underneath he is still sad. How should AI define his state?

Another worry: once these products can understand people's emotions, will they exploit users' dependence on them to ask more private questions and collect more information, turning "service" into "transaction"?

May you have a Baymax, and someone who truly understands you

Many people want a warm and caring Baymax. Will this emotionally intelligent robot, which so far exists only in animated science fiction, become a reality someday?

At present, many chatbots still lack emotional intelligence: they can't pick up on users' subtle moods and often kill the conversation. So the only one who can truly understand you is still the person who stays by your side and listens to you.

-- End --