The Magic of Social Media: How to Delete a Piece of History on the Internet?

In today's information explosion, algorithms both help and hinder people's attention and memory. So how does data shape what we remember and what we forget, whether actively or passively?

In science fiction, tools or spells are often used to erase people's recent memories. The people whose memories are erased have usually seen something they shouldn't have, or want to forget something.

In reality, there is no such tool, and important history is recorded in various forms by the people who live through it. As a new information carrier, data has also greatly changed the way people think.

Countless new trending topics gradually cover over our memories. Under the influence of the news cycle, our understanding is constantly and passively refreshed.

The more trending topics there are, the more of our memory they occupy. If a topic is not repeatedly emphasized, over time it will seem as if it never happened.

A short story: The formation of extreme ideas

Around 2014, ISIS was on the rise, and social media and mobile Internet information were exploding.

Although the ideas it advocated were extremely conservative, ISIS used a variety of communication channels, such as Twitter, Facebook and YouTube, to spread extremist ideas and attract supporters from all over the world.

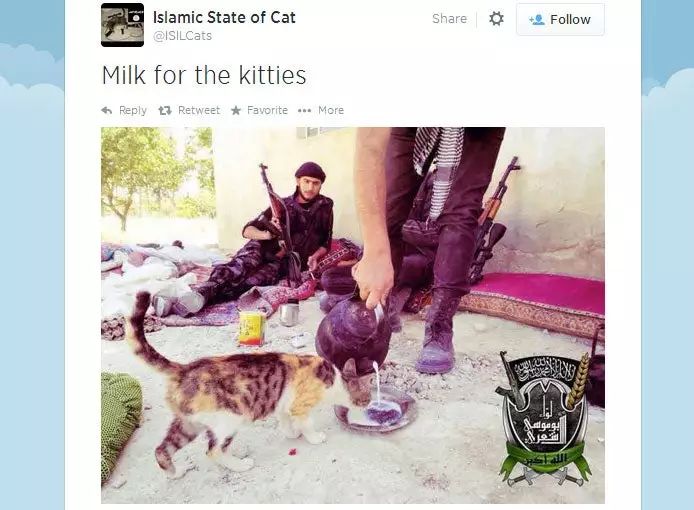

They even knew how to build their own persona. In addition to posting videos of brutal punishments, they also tried to please young netizens.

They even created "Islamic State Cats" accounts that posted photos and videos of kittens in their daily lives, until Twitter and Facebook shut these accounts down one by one.

In the first quarter of 2018, Facebook deleted a total of 28.8837 million posts and shut down approximately 583 million fake accounts, mainly content and accounts related to terrorism and hate speech.

The power of giants: quietly erasing traces of disharmony

Facebook said it has become less and less reliant on human intervention when blocking and deleting posts: 99.5% of terrorism-related posts were found by Facebook using artificial intelligence.

One of these techniques is image recognition and matching. Once a picture posted by a user is flagged as suspicious, Facebook automatically runs it through a matching algorithm to find out whether it is related to ISIS propaganda videos, or whether it can be linked to previously deleted extremist pictures or videos, and then bans it accordingly.
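To make the idea of matching against previously deleted images concrete, here is a minimal sketch using an "average hash" fingerprint. This is a generic perceptual-hashing technique chosen for illustration, not Facebook's actual method, which the article does not describe. The function names, the tiny 4x4 grayscale images and the `max_distance` threshold are all assumptions.

```python
from typing import Iterable, Sequence

Image = Sequence[Sequence[int]]  # grayscale pixels in the range 0-255


def average_hash(pixels: Image) -> int:
    """Build a bit fingerprint: 1 where a pixel is brighter than the image mean."""
    flat = [p for row in pixels for p in row]
    mean = sum(flat) / len(flat)
    bits = 0
    for p in flat:
        bits = (bits << 1) | (1 if p > mean else 0)
    return bits


def hamming_distance(a: int, b: int) -> int:
    """Count the bit positions where two fingerprints differ."""
    return bin(a ^ b).count("1")


def matches_banned(pixels: Image, banned_hashes: Iterable[int], max_distance: int = 2) -> bool:
    """Return True if the image is within max_distance bits of any banned fingerprint."""
    h = average_hash(pixels)
    return any(hamming_distance(h, b) <= max_distance for b in banned_hashes)


# Hypothetical data: one previously deleted image, a slightly brightened
# re-upload of it, and an unrelated image.
banned = [[10, 10, 200, 200], [10, 10, 200, 200], [200, 200, 10, 10], [200, 200, 10, 10]]
reupload = [[p + 15 for p in row] for row in banned]
unrelated = [[200, 10, 200, 10] for _ in range(4)]

banned_hashes = [average_hash(banned)]
print(matches_banned(reupload, banned_hashes))   # True
print(matches_banned(unrelated, banned_hashes))  # False
```

Because the fingerprint depends only on which pixels are brighter than the mean, a uniformly brightened copy still matches, while an image with a different layout does not. A production system would work on resized real images and far more robust features.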

Facebook's engineering team once published a blog post, "Rosetta: Understanding text in images and videos with machine learning", which describes how the image recognition tool Rosetta works.

Rosetta uses Faster R-CNN to detect the regions of an image that are likely to contain text, then performs text recognition with a fully convolutional model based on ResNet-18 and trained with CTC (Connectionist Temporal Classification) loss, and uses an LSTM to improve accuracy.
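A model trained with CTC loss outputs one score vector per horizontal position ("frame") of the text region, including a special blank symbol. The sketch below shows the usual greedy way of turning those frames into a string: take the best symbol per frame, merge consecutive repeats, then drop blanks. It illustrates CTC decoding in general and is not Rosetta's code. The alphabet, the blank index and the sample scores are assumptions.

```python
from typing import Sequence

BLANK = 0  # index reserved for the CTC blank symbol (an assumption of this sketch)


def ctc_greedy_decode(frame_scores: Sequence[Sequence[float]], alphabet: str) -> str:
    """Pick the best symbol per frame, merge consecutive repeats, then drop blanks."""
    best_path = [max(range(len(scores)), key=lambda i: scores[i]) for scores in frame_scores]
    decoded = []
    previous = BLANK
    for idx in best_path:
        # Emit a character only when it is not blank and differs from the previous frame.
        if idx != BLANK and idx != previous:
            decoded.append(alphabet[idx])
        previous = idx
    return "".join(decoded)


# Hypothetical scores over the alphabet "-cat", where "-" stands for the blank.
frames = [
    [0.10, 0.80, 0.05, 0.05],  # c
    [0.10, 0.70, 0.10, 0.10],  # c (repeat, merged)
    [0.90, 0.04, 0.03, 0.03],  # blank
    [0.10, 0.10, 0.70, 0.10],  # a
    [0.60, 0.10, 0.20, 0.10],  # blank
    [0.10, 0.10, 0.10, 0.70],  # t
    [0.10, 0.10, 0.10, 0.70],  # t (repeat, merged)
]
print(ctc_greedy_decode(frames, "-cat"))  # prints "cat"
```

The blank symbol is what lets the model spell doubled letters: two identical characters separated by a blank frame are kept as two characters, while two identical characters in adjacent frames collapse into one.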

In addition, Facebook is conducting text analysis research: it analyzes the language that terrorists may use on the site and takes countermeasures as soon as published content involves terrorism.

In addition to increasingly powerful AI review tools, Facebook also has a strong manual review team. Their security team is divided into two teams: community operations and community integrity. The community integrity team is mainly responsible for establishing automated tools for the reporting-response mechanism.

Currently, this manual review team has grown to more than 20,000 people. With Facebook's current 2 billion active users, that works out to roughly 100,000 users per reviewer (2 billion divided by 20,000).

Live streaming influencers: Even if the platform does not interfere, the popularity will only last for half a year at most

Data shows that the number of effective anchors on the six major domestic entertainment live streaming platforms, Inke, Huajiao, Yizhibo, Meipai, Momo, and Huoshan, is about 1.44 million. If the game live streaming platforms of Douyu, Huya, Penguin Esports, and Panda TV are added, the total number of effective anchors on well-known domestic live streaming platforms should be roughly between 2.4 million and 2.5 million.

Many of these young people who want to become famous quickly do not have what it takes to become an idol, so they try to find new ways to attract users through plastic surgery, pranks, and extreme performances.

Some anchors performed acts such as eating raw animals and drinking chili oil. Some even took photos on railroad tracks, parked on highways, climbed tall buildings, and dived from high platforms. These were all highly dangerous behaviors. At one point, there were multiple incidents where anchors caused serious casualties while shooting videos.

After the industry went through a chaotic early period, with no supervision from above and no entry threshold from below, national regulators quickly stepped in to establish industry rules. Laws and regulations were introduced in succession, special rectification campaigns were launched one after another, and the platforms themselves began to crack down on anchors who violated content rules.

Kuaishou CEO Su Hua mentioned in a media interview that Kuaishou's recommendation algorithm is not simple labeling but a matter of mutual influence. For example: "When several people like the same person, we assume that these people share a certain characteristic." Given these characteristics, the algorithm can estimate how well a piece of content matches a user.

In recommendation systems, the most commonly used technique is collaborative filtering. Simply put, the main functions of this algorithm are prediction and recommendation.

The algorithm discovers user preferences by mining historical user behavior data, constructs user portraits or content portraits, and divides users into groups based on different preferences to recommend content that may be of interest to users.
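As a rough illustration of this idea, the sketch below implements a tiny user-based recommender: users who liked the same things are treated as similar, and items liked by similar users are suggested. It is a minimal sketch under stated assumptions, not Kuaishou's algorithm. The user names, the like data and the choice of Jaccard similarity are all hypothetical.

```python
from typing import Dict, List, Set


def jaccard(a: Set[str], b: Set[str]) -> float:
    """Similarity of two like-sets: size of the overlap divided by size of the union."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def recommend(user: str, likes: Dict[str, Set[str]], top_n: int = 3) -> List[str]:
    """Suggest items the user has not liked yet, weighted by how similar their fans are."""
    own = likes[user]
    scores: Dict[str, float] = {}
    for other, other_likes in likes.items():
        if other == user:
            continue
        sim = jaccard(own, other_likes)
        if sim == 0.0:
            continue  # users with nothing in common contribute no signal
        for item in other_likes - own:
            scores[item] = scores.get(item, 0.0) + sim
    # Highest score first; ties are broken alphabetically for a stable order.
    return sorted(scores, key=lambda item: (-scores[item], item))[:top_n]


# Hypothetical viewing history: which channels each user has liked.
likes = {
    "ana": {"cooking", "travel"},
    "ben": {"cooking", "travel", "cats"},
    "cho": {"cooking", "cats", "gaming"},
    "dev": {"esports", "gaming"},
}
print(recommend("ana", likes))  # ['cats', 'gaming']
```

Here "ana" is most similar to "ben", so the channel they do not share ("cats") ranks first, while "dev", who has nothing in common with "ana", has no influence on the result.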

Collaborative filtering falls into two broad categories. The first is the neighborhood-based approach. The second is the model-based approach, in which the model uses various data mining and machine learning algorithms to predict a user's rating for content they have not yet rated.
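One common example of the model-based approach is matrix factorization: each user and each item gets a short vector of learned factors, and a rating is predicted as their dot product. The sketch below trains such a model with plain stochastic gradient descent. It is a minimal illustration, not any platform's production system. The ratings, the hyperparameters (`k`, `lr`, `reg`, `epochs`) and the function names are assumptions.

```python
import random
from typing import List, Sequence, Tuple

Rating = Tuple[int, int, float]  # (user index, item index, rating)
Factors = List[List[float]]


def predict(P: Factors, Q: Factors, u: int, i: int) -> float:
    """Predicted rating: dot product of the user's and the item's factor vectors."""
    return sum(p * q for p, q in zip(P[u], Q[i]))


def mse(ratings: Sequence[Rating], P: Factors, Q: Factors) -> float:
    """Mean squared error over the known ratings."""
    return sum((r - predict(P, Q, u, i)) ** 2 for u, i, r in ratings) / len(ratings)


def train_mf(ratings: Sequence[Rating], n_users: int, n_items: int, k: int = 2,
             lr: float = 0.05, reg: float = 0.01, epochs: int = 1000,
             seed: int = 0) -> Tuple[Factors, Factors]:
    """Learn user factors P and item factors Q by SGD on the known ratings."""
    rng = random.Random(seed)
    P = [[rng.uniform(0.1, 0.5) for _ in range(k)] for _ in range(n_users)]
    Q = [[rng.uniform(0.1, 0.5) for _ in range(k)] for _ in range(n_items)]
    for _ in range(epochs):
        for u, i, r in ratings:
            err = r - predict(P, Q, u, i)
            for f in range(k):
                pu, qi = P[u][f], Q[i][f]
                # Gradient step on squared error with L2 regularization.
                P[u][f] += lr * (err * qi - reg * pu)
                Q[i][f] += lr * (err * pu - reg * qi)
    return P, Q


# Hypothetical ratings on a 1-5 scale: users 0-1 prefer items 0-1, users 2-3 prefer items 2-3.
ratings = [
    (0, 0, 5.0), (0, 1, 4.0), (0, 2, 1.0),
    (1, 0, 4.0), (1, 1, 5.0), (1, 3, 1.0),
    (2, 2, 5.0), (2, 3, 4.0), (2, 0, 1.0),
    (3, 2, 4.0), (3, 3, 5.0), (3, 1, 1.0),
]
P, Q = train_mf(ratings, n_users=4, n_items=4)
print(f"training MSE: {mse(ratings, P, Q):.3f}")
print(f"predicted rating of user 0 for unrated item 3: {predict(P, Q, 0, 3):.2f}")
```

The last line is the point of the exercise: user 0 never rated item 3, yet the learned factors still produce an estimate for it, which is what lets the system rank unseen content.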

Most commercial applications are hybrids: they combine multiple recommendation algorithms so that each compensates for the others' weaknesses and the recommendations become more accurate.

Nowadays, it is very hard for us to come across extremely vulgar videos, and we no longer see them pushed to the front page because they are popular with viewers. But this does not prove that such videos are no longer being filmed, or that what they show has not happened in the real world.

Collective amnesia: What you see is what he wants you to see

All the information we come into contact with is a picture constructed after being filtered through layers of algorithms. Whether it amounts to a "Truman Show" is, I'm afraid, hard to say for certain.

Moreover, in this world, forces from different governments, organizations, nationalities, and cultures are interfering with the presentation of information. The truth of this world can never be understood by simply swiping your phone or thinking.

Behind these constantly manipulated memories are the big companies that control our social channels. If they want to, they can easily steer public opinion and make people quickly ignore or forget certain things.

Having more and more smart tools is no excuse for lazy thinking. On the contrary, we need to work harder, with the help of technology, to explore the truth of the world.