HyperAI Introduction

Forget the bad news: some people are still doing solid work for the browser.

Recently, a group of engineers built face-api.js, a face recognition API that runs in the browser on top of the tensorflow.js core framework. It can recognize multiple faces at the same time, and it lets engineers who are not AI specialists use facial recognition technology at low cost.

Face recognition principle

face-api.js is a JavaScript framework built on the TensorFlow.js core. It uses three types of CNN for face detection, facial landmark detection, and face recognition, in order to identify the people in an image.

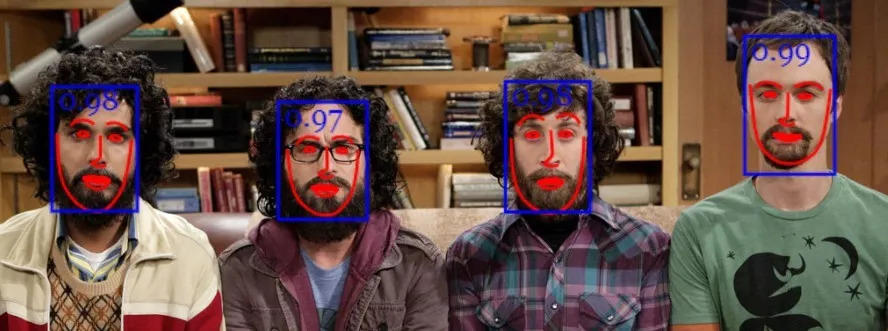

Like most image recognition technologies, it works by matching against a database, finding the image with the highest similarity, and outputting the result. In addition, face-api.js can recognize multiple faces in one image at the same time.

The general working principle of face recognition is as follows: engineers first feed the system a large number of images labeled with information such as names to build a training data set, and then treat the faces to be recognized as a test set, comparing each one with the images in the training set.

If the similarity between two images reaches the threshold, the matching result is output; otherwise, "unknown" is output.

The implementation principle of face-api.js

The first step is face detection, that is, drawing a box around every face in the image.

face-api.js uses the SSD (Single Shot Multibox Detector) algorithm to detect faces. SSD is a multi-object detection method that predicts object categories and bounding boxes (often shortened to "bboxes") directly in a single pass, which improves recognition accuracy while also increasing speed.

SSD can be understood as a CNN based on MobileNetV1 with additional box prediction layers. The system first draws a bounding box around each candidate face and scores it. The more the boxed region looks like a face, the higher the score, so non-face content can be filtered out.
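The score-based filtering described above can be sketched in a few lines of JavaScript. This is only an illustration, not the detector's actual post-processing: the field names (`x`, `y`, `width`, `height`, `score`), the sample values, and the 0.5 cutoff are all assumptions made for the example.

```javascript
// Hypothetical detector output: each candidate box carries a score in [0, 1].
// Keep only the boxes whose score reaches minConfidence, best first.
function filterDetections(candidates, minConfidence = 0.5) {
  return candidates
    .filter((c) => c.score >= minConfidence)
    .sort((a, b) => b.score - a.score);
}

// Made-up sample data: two likely faces and one low-scoring background region.
const candidates = [
  { x: 210, y: 25, width: 110, height: 140, score: 0.88 },
  { x: 300, y: 80, width: 60, height: 60, score: 0.12 },
  { x: 40, y: 30, width: 120, height: 150, score: 0.97 },
];

const faces = filterDetections(candidates, 0.5);
console.log(faces);
```

Raising `minConfidence` trades missed faces for fewer false positives, so the right value depends on the images being processed.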

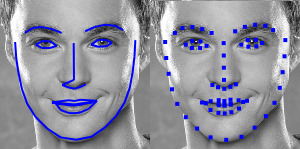

To ensure accuracy, the images fed to the recognition step should be centered on the face, so the face box needs to be aligned. To this end, face-api.js uses a simple CNN to locate 68 facial landmark points, which pin down the face region and prepare it for the next step, face recognition.

Example

Through the landmark points, the system can further determine the facial image. The following figures show the effects before (left) and after (right) face alignment.

Face alignment effect diagram

After alignment, the crop contains less content that is unrelated to the face, which helps improve the recognition accuracy of the system.
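One simple way to picture the alignment step is to recompute the face box from the landmark points themselves. The sketch below is a simplification and not face-api.js's own alignment code: the helper `boxFromLandmarks`, the `{ x, y }` point format, and the padding value are assumptions for illustration.

```javascript
// Compute the smallest axis-aligned box containing all landmark points,
// then grow it by a relative padding on every side.
function boxFromLandmarks(points, padding = 0.25) {
  const xs = points.map((p) => p.x);
  const ys = points.map((p) => p.y);
  const minX = Math.min(...xs);
  const minY = Math.min(...ys);
  const width = Math.max(...xs) - minX;
  const height = Math.max(...ys) - minY;
  return {
    x: minX - width * padding,
    y: minY - height * padding,
    width: width * (1 + 2 * padding),
    height: height * (1 + 2 * padding),
  };
}

// Three made-up points standing in for the 68 landmarks.
const landmarks = [
  { x: 10, y: 20 },
  { x: 110, y: 20 },
  { x: 60, y: 220 },
];
console.log(boxFromLandmarks(landmarks));
```

Because the box is derived from the landmarks rather than from the raw detection, it follows the face more tightly and leaves out more of the background.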

Implementation of face recognition

Once the faces have been boxed and aligned, recognition begins.

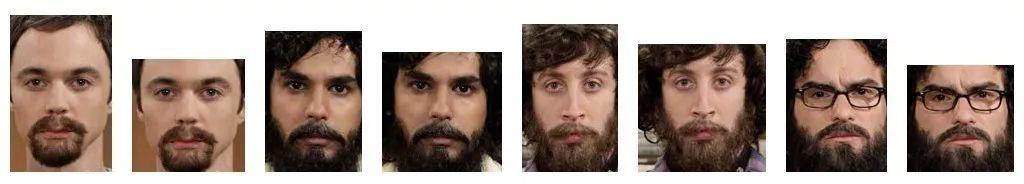

The program feeds the aligned faces into a face recognition deep learning network based on the ResNet-34 architecture, similar to the one implemented in the Dlib library. This network maps the features of a face to a face descriptor (a feature vector with 128 values), a process often referred to as face embedding.

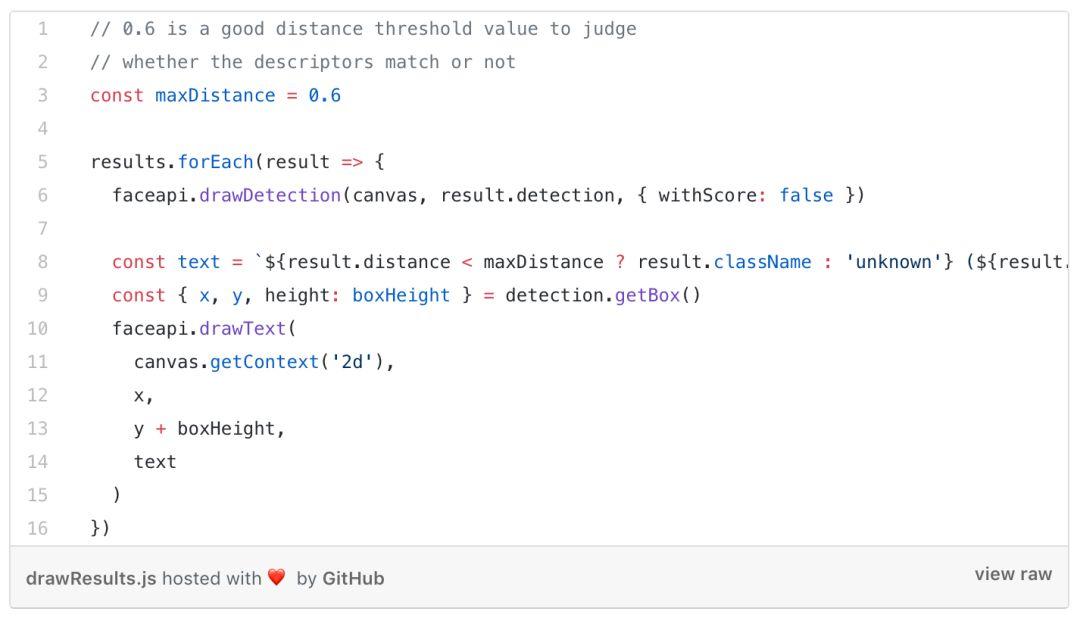

After that, the program compares the face descriptor of each input face with the face descriptors in the training set, and decides whether two faces are similar based on a threshold (for a 150×150 pixel face image, a threshold of 0.6 works well).

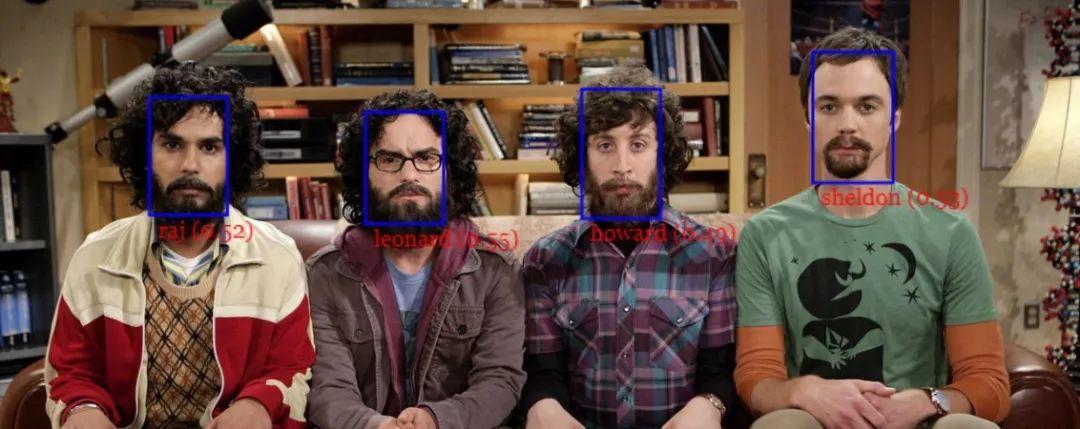

The Euclidean distance (also called the Euclidean metric) can be used to measure similarity, and it works very well: the smaller the distance between two descriptors, the more similar the faces. The actual effect can be observed in the GIF below.
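To make the comparison concrete, here is a minimal sketch of the Euclidean distance between two descriptors and the 0.6 threshold check. The function names are chosen for this example, and the descriptors are shortened to four made-up values instead of 128 to keep it readable.

```javascript
// Euclidean distance between two descriptors of equal length.
function euclideanDistance(a, b) {
  if (a.length !== b.length) {
    throw new Error('descriptors must have the same length');
  }
  let sum = 0;
  for (let i = 0; i < a.length; i++) {
    const diff = a[i] - b[i];
    sum += diff * diff;
  }
  return Math.sqrt(sum);
}

// Two faces count as the same person when their distance is below the threshold.
function isSameFace(a, b, threshold = 0.6) {
  return euclideanDistance(a, b) < threshold;
}

// Made-up 4-value descriptors; real ones have 128 values.
const faceA = [0.10, -0.20, 0.05, 0.30];
const faceB = [0.12, -0.18, 0.07, 0.28];
const faceC = [0.90, 0.40, -0.60, -0.50];

console.log(isSameFace(faceA, faceB));
console.log(isSameFace(faceA, faceC));
```

Note that a distance works in the opposite direction to a similarity score: a match means the value is below the threshold, not above it.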

Talk is cheap, show me the code!

With the theory covered, it is time to walk through the practical steps. The following image is used as the input image.

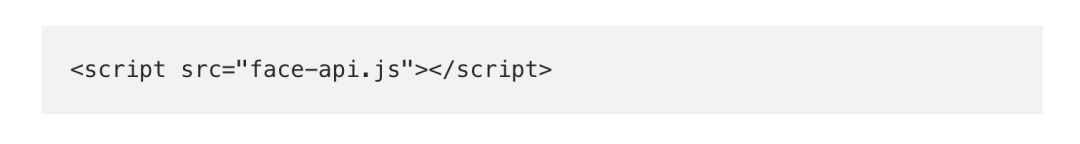

Step 1: Get the script

You can get the latest script from dist/face-api.js:

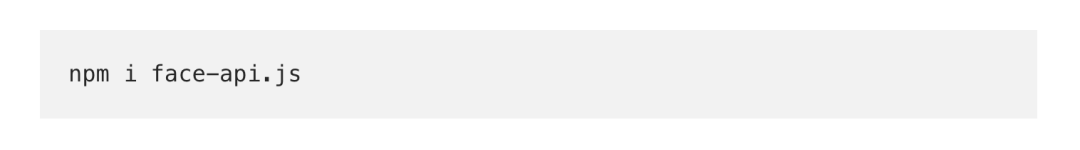

It can also be installed via npm:

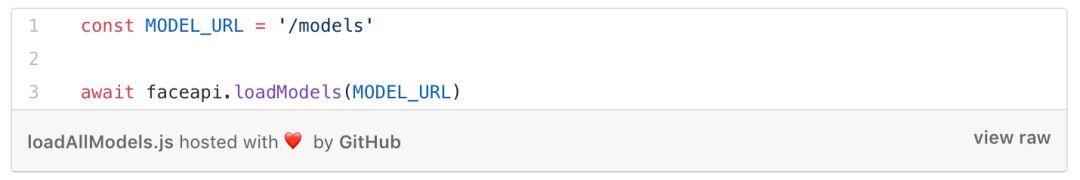

Step 2: Load the data model

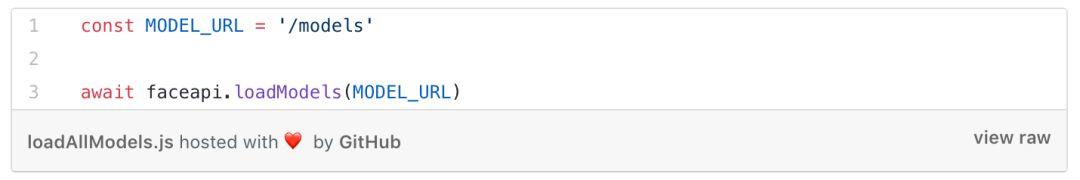

Model files can be used as static resources of web applications or mounted to other locations. Models can be loaded by specifying file paths or URLs.

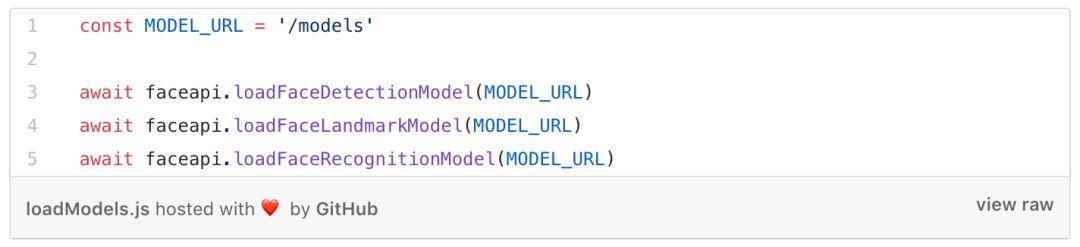

Assuming the models are in the public/models directory, you can either load all of them at once or load only the specific model you need.
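As a rough sketch of this step, the helper below loads the three networks through the `faceapi.nets.<name>.loadFromUri` calls found in recent face-api.js releases; older releases exposed different loader functions, so check the version you use. The helper name `loadModels`, the `/models` URL (assuming public/models is served at that path), and passing `faceapi` in as a parameter are assumptions made for this example.

```javascript
// Networks used in this walkthrough: detection, landmarks, recognition.
const ALL_NETS = ['ssdMobilenetv1', 'faceLandmark68Net', 'faceRecognitionNet'];

// Load the requested networks in parallel from modelUrl.
// `faceapi` is passed in so the sketch does not depend on how the script was loaded.
async function loadModels(faceapi, modelUrl = '/models', netNames = ALL_NETS) {
  await Promise.all(
    netNames.map((name) => faceapi.nets[name].loadFromUri(modelUrl))
  );
}

// In a page where face-api.js is available as the global `faceapi`:
//   await loadModels(faceapi);                                 // all three models
//   await loadModels(faceapi, '/models', ['ssdMobilenetv1']);  // one specific model
```

Loading only the networks a page actually needs keeps the initial download smaller.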

Step 3: Get a full description

Any HTML image, canvas, or video element can be used as input to the network. Here is how to get the full description of the input image, that is, of all the faces in it:

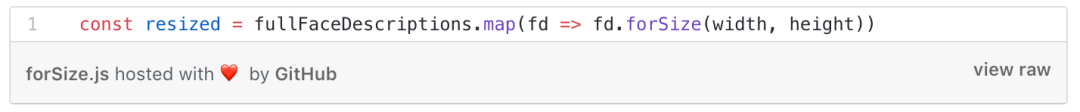
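For readers on a recent face-api.js release, the full description can also be sketched with the chained `detectAllFaces(...).withFaceLandmarks().withFaceDescriptors()` call. This may differ from the call shown in the original screenshot, since the API has changed between versions. The wrapper name `describeAllFaces` and the shape of its return value are assumptions for this example, and the models are assumed to be loaded already.

```javascript
// Detect every face in `input` (an image, canvas, or video element) and
// return its bounding box, detection score, landmarks, and 128-value descriptor.
// `faceapi` is passed in so the sketch does not depend on how the script was loaded.
async function describeAllFaces(faceapi, input) {
  const results = await faceapi
    .detectAllFaces(input)
    .withFaceLandmarks()
    .withFaceDescriptors();

  return results.map((r) => ({
    box: r.detection.box,
    score: r.detection.score,
    landmarks: r.landmarks,
    descriptor: r.descriptor,
  }));
}

// In a page, with the models loaded and an <img id="input"> present:
//   const faces = await describeAllFaces(faceapi, document.getElementById('input'));
```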

You can also obtain the face locations and landmarks yourself, step by step.

You can also visualize the results by drawing the bounding boxes on an HTML canvas.

The facial features are shown below:

Now we can calculate the location and descriptors of each face in the input image, which will serve as reference data.

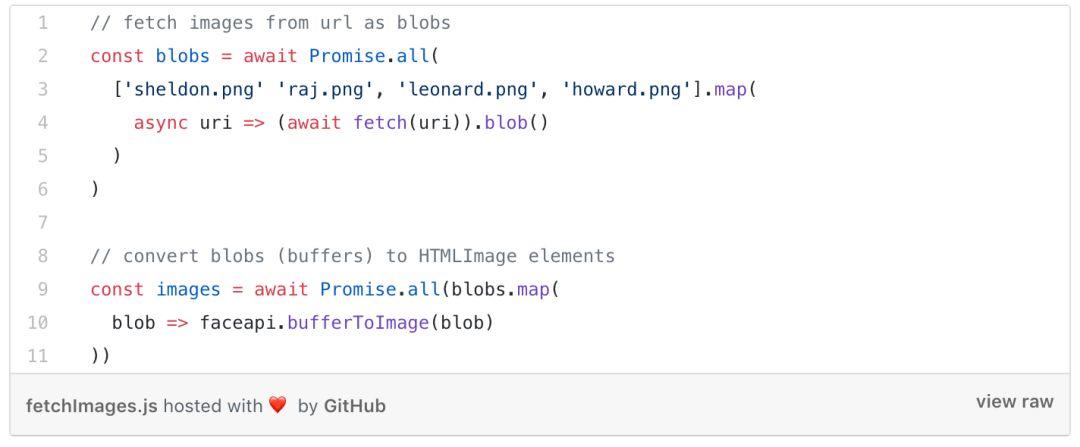

The next step is to fetch each image from its URL and use faceapi.bufferToImage to create an HTML image element:

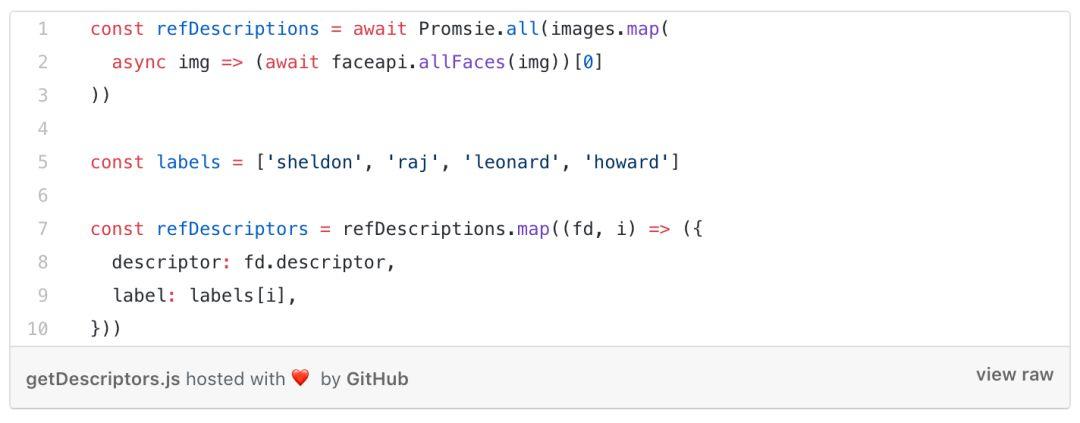

For each image, locate the face and compute the descriptor.

Then iterate over the face descriptors of the input image and find the most similar descriptor in the reference data.
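The matching loop can be sketched in plain JavaScript as follows. The reference data layout (`{ label, descriptors }`), the helper names, and the labels are hypothetical, and the descriptors are shortened to three made-up values; the 0.6 threshold is the one mentioned earlier.

```javascript
// Euclidean distance between two descriptors (plain arrays or typed arrays).
function descriptorDistance(a, b) {
  return Math.hypot(...Array.from(a, (value, i) => value - b[i]));
}

// Return the closest reference label, or "unknown" if nothing is close enough.
function findBestMatch(queryDescriptor, reference, threshold = 0.6) {
  let best = { label: 'unknown', distance: Infinity };
  for (const { label, descriptors } of reference) {
    for (const descriptor of descriptors) {
      const distance = descriptorDistance(queryDescriptor, descriptor);
      if (distance < best.distance) {
        best = { label, distance };
      }
    }
  }
  return best.distance < threshold
    ? best
    : { label: 'unknown', distance: best.distance };
}

// Hypothetical reference data: one or more descriptors per known person.
const reference = [
  { label: 'alice', descriptors: [[0.0, 0.0, 0.0], [0.1, 0.1, 0.0]] },
  { label: 'bob', descriptors: [[1.0, 1.0, 1.0]] },
];

// Descriptors computed from the input image (made-up values).
const inputDescriptors = [[0.1, 0.0, 0.0], [5.0, 5.0, 5.0]];
for (const descriptor of inputDescriptors) {
  console.log(findBestMatch(descriptor, reference).label);
}
```

Keeping several descriptors per person, taken from different photos, usually makes the match more robust than relying on a single reference image.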

Get the best match for each face in the input image using the Euclidean metric, and display the bounding box and its label on an HTML canvas.
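Drawing the result needs nothing beyond the standard canvas 2D API. The helper below is a sketch, not the drawing utility that ships with face-api.js: the name `drawLabeledBox`, the colors, and the font are assumptions, and `ctx` is expected to be a `CanvasRenderingContext2D`.

```javascript
// Draw one bounding box with its label on a 2D canvas context.
function drawLabeledBox(ctx, box, label, color = 'blue') {
  ctx.strokeStyle = color;
  ctx.lineWidth = 2;
  ctx.strokeRect(box.x, box.y, box.width, box.height);

  // Put the label just above the box, or inside it if the box touches the top edge.
  const textY = box.y >= 20 ? box.y - 6 : box.y + 18;
  ctx.fillStyle = color;
  ctx.font = '16px sans-serif';
  ctx.fillText(label, box.x, textY);
}

// In a page, for each matched face:
//   const ctx = document.getElementById('overlay').getContext('2d');
//   drawLabeledBox(ctx, face.box, match.label);
```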

This is the whole face recognition process with face-api.js. Simple, isn't it? If you are interested, give it a try, and feel free to send us your results and experiences.