
Moore's Law: Past and Present

HyperAI Introduction

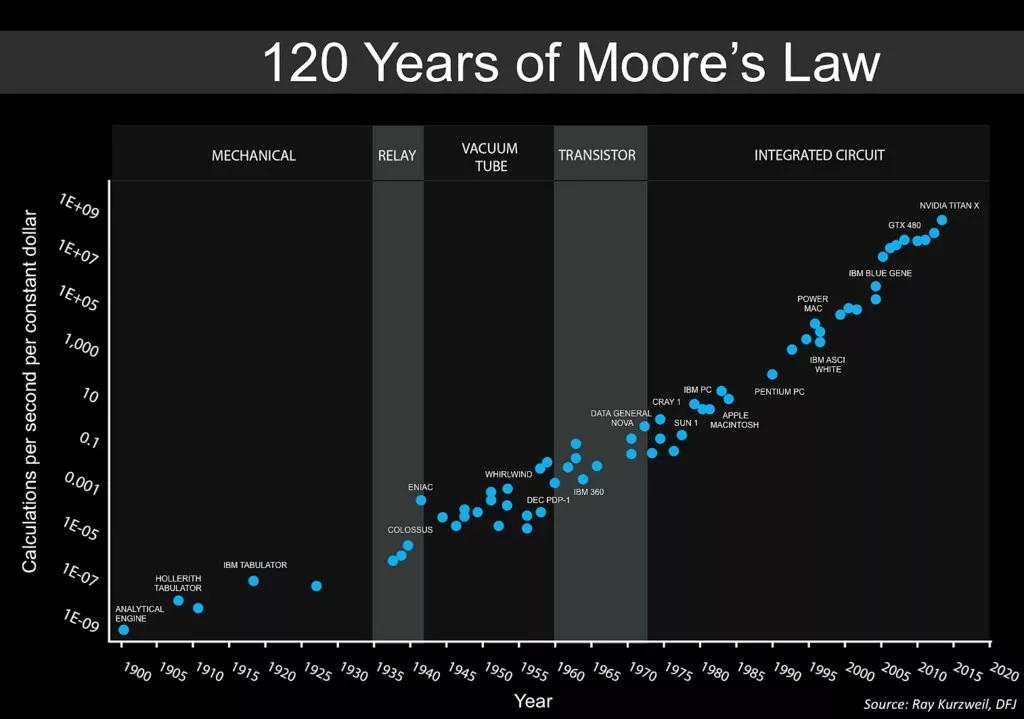

Moore's Law is one of the best-known trends in computing, describing the rapid pace at which hardware improves. For decades it has spurred hardware manufacturers to keep upgrading their products.

In recent years, however, the chip industry appears to have hit a technical bottleneck, and the pace of upgrades has been slowing. Many people therefore believe that Moore's Law is breaking down. Yet the emergence of AI has brought Moore's Law "back to life."

What is Moore's Law?

In 1965, Gordon Moore, who later co-founded Intel, first proposed Moore's Law in an article titled "Cramming More Components onto Integrated Circuits."

Moore’s Law:

When the price stays constant, the number of components that can fit on an integrated circuit (i.e., a chip) doubles roughly every 18-24 months, and performance doubles along with it.

In other words, the computer performance that can be purchased per dollar will more than double every 18-24 months.
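To make the 18-24 month range concrete, the short sketch below converts a doubling period of T months into an equivalent compound annual growth rate, 2^(12/T) - 1. The function name `annual_growth_rate` is illustrative, and the model assumes smooth exponential growth, which real chip generations only approximate.

```python
def annual_growth_rate(doubling_months: float) -> float:
    """Return the compound annual growth rate implied by a doubling period.

    Assumes smooth exponential growth: the quantity doubles every
    `doubling_months` months, so after 12 months it has grown by a factor
    of 2 ** (12 / doubling_months).
    """
    if doubling_months <= 0:
        raise ValueError("doubling period must be positive")
    return 2 ** (12 / doubling_months) - 1


if __name__ == "__main__":
    # The two ends of the commonly quoted 18-24 month range.
    for months in (18, 24):
        rate = annual_growth_rate(months)
        print(f"doubling every {months} months ~ {rate:.1%} growth per year")
```

Under this idealized model, an 18-month doubling works out to roughly 59% growth per year and a 24-month doubling to roughly 41%.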

Moore subsequently updated his predictions for the chip industry roughly once a decade. In 1975, he predicted that chip complexity would double every two years over the following ten years.

Although this trend has held for more than half a century, Moore's Law is best understood as an observation or conjecture rather than a physical or natural law.

In 2010, an update to the International Technology Roadmap for Semiconductors (ITRS) projected that growth would slow, with transistor density expected to double only about every three years thereafter.
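The practical cost of moving from a two-year to a three-year doubling period compounds quickly. The sketch below, with an illustrative function name and an idealized exponential model, compares the density gain over one decade under each cadence: exactly 32x for a two-year period versus about 10x for a three-year period.

```python
def growth_factor(years: float, doubling_years: float) -> float:
    """Factor by which a quantity grows over `years` if it doubles every
    `doubling_years` years (idealized exponential model)."""
    if doubling_years <= 0:
        raise ValueError("doubling period must be positive")
    return 2 ** (years / doubling_years)


if __name__ == "__main__":
    decade = 10
    # Classic two-year cadence vs. the slower three-year cadence.
    for period in (2, 3):
        factor = growth_factor(decade, period)
        print(f"doubling every {period} years -> x{factor:.1f} in {decade} years")
```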

Another view holds that Moore's Law is, first and foremost, an economic law that sets the pace of progress in information technology, which is why the electronics industry can persuade consumers to buy a new product every few years.

Around the same time, in 1974, IBM engineer Robert Dennard proposed Moore's Law's close companion, Dennard scaling.

Dennard scaling law:

Shrinking the transistors on a chip lets more components fit in the same area, so the chip runs faster while production cost and energy consumption per component go down.
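In Dennard's original constant-field formulation, every transistor dimension and the supply voltage are divided by the same factor k. Each transistor then switches about k times faster, its power drops to 1/k² of the original, and power density stays constant. The sketch below works through those first-order relations with exact fractions. The function name is illustrative, it uses textbook long-channel MOSFET approximations, and it ignores leakage, which is precisely the effect that later broke the rule.

```python
from fractions import Fraction


def dennard_scaling(k: Fraction) -> dict[str, Fraction]:
    """Multipliers for ideal constant-field (Dennard) scaling by k > 1.

    Every linear dimension (channel length L, width W, oxide thickness t_ox)
    and the supply voltage V are divided by k. Each returned value is the
    new quantity divided by the old one.
    """
    if k <= 1:
        raise ValueError("scaling factor k must be greater than 1")
    dim = 1 / k                    # L, W, t_ox
    voltage = 1 / k                # V
    # Long-channel drain current ~ (1 / t_ox) * (W / L) * V**2
    current = (1 / dim) * (dim / dim) * voltage ** 2
    # Gate capacitance ~ W * L / t_ox
    capacitance = dim * dim / dim
    delay = capacitance * voltage / current     # t ~ C * V / I
    power = voltage * current                   # P ~ V * I
    area = dim * dim                            # A ~ W * L
    return {
        "delay": delay,
        "power_per_transistor": power,
        "area_per_transistor": area,
        "transistors_per_area": 1 / area,
        "power_density": power / area,
    }


if __name__ == "__main__":
    for name, factor in dennard_scaling(Fraction(2)).items():
        print(f"{name}: x{factor}")
```

Halving every dimension (k = 2) in this model quarters the area and the power per transistor, so four times as many transistors fit in the same space without raising power density.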

Together, Moore's Law and Dennard scaling pushed chip manufacturers to add more components, raise performance, and shrink chip size, fueling more than 30 years of rapid growth in the chip industry.

Why did Moore's Law fail?

Since 2005, following the path set by Moore's Law, chip development has entered the nanoscale. As components become ever more numerous and smaller, quantum tunneling (the quantum behavior by which microscopic particles such as electrons can penetrate or pass through potential barriers) has increasingly come into play.

Because of this effect, transistors begin to leak current, so chips built on smaller processes consume more power rather than less, which also creates serious heat-dissipation problems.
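Why thinner insulating layers leak so much more can be seen from the textbook estimate for tunneling through a rectangular barrier, T ≈ exp(-2κd) with κ = √(2m(V - E))/ħ. The probability falls off exponentially with barrier thickness d, so removing even one nanometer raises it dramatically. The sketch below evaluates that approximation. The function name, the 3.1 eV barrier height (roughly the silicon/silicon dioxide conduction-band offset), and the use of the free-electron mass are simplifying assumptions, not a device model.

```python
import math

# Physical constants (SI units)
HBAR = 1.054571817e-34            # reduced Planck constant, J*s
ELECTRON_MASS = 9.1093837015e-31  # free-electron mass, kg
EV = 1.602176634e-19              # joules per electron-volt


def tunneling_probability(thickness_nm: float, barrier_ev: float = 3.1,
                          mass_ratio: float = 1.0) -> float:
    """Approximate transmission through a rectangular barrier, T ~ exp(-2*kappa*d).

    Illustrative assumptions: the electron energy is negligible compared
    with the barrier height `barrier_ev`, the effective mass is
    `mass_ratio` times the free-electron mass, and the barrier is thick
    enough for the exponential approximation to hold.
    """
    mass = mass_ratio * ELECTRON_MASS
    kappa = math.sqrt(2 * mass * barrier_ev * EV) / HBAR  # decay constant, 1/m
    return math.exp(-2 * kappa * thickness_nm * 1e-9)


if __name__ == "__main__":
    for d in (3.0, 2.0, 1.0):
        print(f"barrier {d} nm -> T ~ {tunneling_probability(d):.1e}")
```

With these assumptions, thinning the barrier from 2 nm to 1 nm raises the estimated tunneling probability by a factor of tens of millions, which is the qualitative reason leakage grows so quickly as transistors shrink.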

To work around the leakage problem, the industry stopped chasing ever-faster single-core chips and turned to multi-core designs, which run several processor cores in parallel in computers and mobile phones. Even so, the problem has not been effectively solved to date.

Transistor leakage effectively broke Dennard scaling and cast doubt on Moore's Law. Under current conditions, adding more transistors is difficult, so the main remaining option is to refine existing chips, even as the cost of chip production keeps rising.

Chien-Ping Lu, a senior director at MediaTek, pointed out in a 2005 paper that although the number of transistors keeps doubling, overall processor performance has barely improved, while R&D costs and energy consumption keep rising.

The cost of manufacturing chips is rising year by year

Intel also pointed out that it now costs about $10 billion to set up a chip manufacturing plant, which is a huge sum of money for any company.

Dario Gil, head of research and development at IBM, stated bluntly that Moore's Law will struggle to keep pace with the future development of computer science.

Bob Colwell, a former chip designer at Intel, likewise believes that the chip industry may be able to manufacture chips on a 5-nanometer process around 2020, but that this is likely to be the limit of current chip manufacturing technology.

Since the start of the 21st century, chips have grown steadily smaller and more powerful. But as manufacturing processes approach their limits, Moore's Law has, to some extent, lost its guiding role for traditional chips.

But in the field of AI, Moore's Law is likely to come back into effect.

How can AI save Moore's Law?

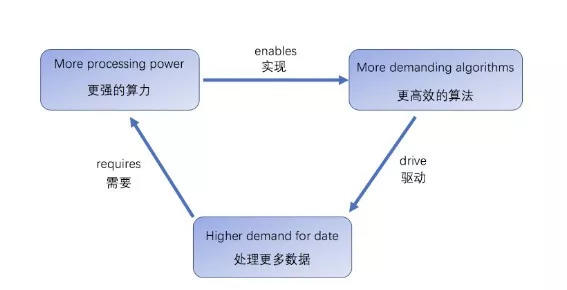

The rise of AI places new demands on core computer hardware: to keep up with deep learning training, hardware must process massive amounts of data in less time while holding energy consumption at current levels or even lowering it.

The relationship between computing power, algorithms, and data

It is estimated that by 2020, the computing power required for AI will grow 12-fold from current levels. That much compute would let AI models cut work that once took several days down to just a few hours. Even so, existing chips alone will struggle to deliver it.
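The "several days to a few hours" figure follows from simple arithmetic if run time shrinks in direct proportion to available compute. The sketch below, with an illustrative function name, makes that ideal-scaling assumption explicit: a 3-day job becomes 6 hours with 12 times the compute.

```python
def accelerated_hours(baseline_days: float, speedup: float = 12.0) -> float:
    """Wall-clock hours for a job that originally took `baseline_days` days.

    Assumes the job speeds up in direct proportion to the available compute
    (an idealized assumption; real workloads rarely scale perfectly).
    """
    if speedup <= 0:
        raise ValueError("speedup must be positive")
    return baseline_days * 24 / speedup


if __name__ == "__main__":
    for days in (2, 3, 5):
        print(f"{days} days -> {accelerated_hours(days):.0f} hours with 12x compute")
```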

To this end, many companies are developing AI-specific processors to raise chip computing power and cut usage costs. Of the hardware available today, GPUs from major vendors such as Nvidia and AMD are among the few processors that can currently meet AI's computing demands.

Netizens' parody of an Nvidia GPU shaped like a gas stove

As the world's largest CPU manufacturer, Intel hopes to develop AI processors that outperform today's GPUs. In 2016, Intel acquired the AI startup Nervana for $408 million.

Nervana has released an AI-specific ASIC line designed to run its core algorithms more efficiently. The chip is reportedly 10 times faster than Nvidia's top-of-the-line Maxwell-architecture GPUs.

Google, meanwhile, is developing its own AI processor, the TPU, which is likewise designed specifically for AI. On benchmark workloads, the TPU runs 15 to 30 times faster than contemporary CPUs and GPUs.

In China, many companies also plan to make major advances in chips, both to drive the AI industry as a whole and to close the gap in domestic chip production.

From CPUs to GPUs to TPUs, AI is driving new technologies that produce ever more powerful chips. Seen this way, even as Moore's Law falters for traditional computer hardware, it may be reborn in the field of AI.