Meituan's open-source video generation model, LongCat-Video, combines text-to-video, image-to-video, and video continuation capabilities, rivaling top-tier open-source and closed-source models.

World models aim to understand, simulate, and predict complex real-world environments, serving as a crucial foundation for applying artificial intelligence effectively in real-world scenarios. Within this framework, video generation models progressively compress and learn various forms of knowledge through the generation process, including geometric, semantic, and physical knowledge. Video generation is therefore regarded as a key path to building a world model, and is expected to eventually achieve effective simulation and prediction of the dynamics of the real physical world. Within video generation, efficient long-video generation is particularly important.

Against this background, Meituan has open-sourced its latest video generation model, LongCat-Video, which aims to handle various video generation tasks through a unified architecture, including text-to-video, image-to-video, and video continuation. Given its strong performance on general video generation tasks, the research team regards LongCat-Video as a solid step towards building a true "world model".

The main features of LongCat-Video include:

* Unified architecture for multiple tasks. LongCat-Video unifies the text-to-video, image-to-video, and video continuation tasks within a single video generation framework, distinguishing them by the number of conditioning frames.

* Long video generation capability. LongCat-Video is pre-trained on video continuation tasks, enabling it to generate videos that are several minutes long while avoiding color distortion and other forms of image quality degradation during generation.

* Efficient inference. LongCat-Video employs a "coarse-to-fine" strategy to generate 720p, 30fps video in just a few minutes, improving both the quality and the efficiency of video generation.

* Strong performance from a multi-reward reinforcement learning framework (RLHF). LongCat-Video employs Group Relative Policy Optimization (GRPO) with multiple rewards to further improve the model, achieving performance comparable to leading open-source video generation models and the latest commercial solutions.
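To make the unified-architecture point concrete, here is a minimal Python sketch of how one framework could tell the three tasks apart from the number of conditioning frames alone. The article only says that the tasks are distinguished by that count; the specific mapping used here (zero frames for text-to-video, one for image-to-video, more than one for video continuation) and the names `Task` and `infer_task` are illustrative assumptions, not LongCat-Video's actual API.

```python
from enum import Enum


class Task(Enum):
    """The three generation tasks named in the article."""
    TEXT_TO_VIDEO = "text-to-video"
    IMAGE_TO_VIDEO = "image-to-video"
    VIDEO_CONTINUATION = "video-continuation"


def infer_task(num_condition_frames: int) -> Task:
    """Pick a task from the number of conditioning frames.

    Assumed mapping (not confirmed by the article):
    0 frames -> text-to-video, 1 frame -> image-to-video,
    2 or more frames -> video continuation.
    """
    if num_condition_frames < 0:
        raise ValueError("num_condition_frames must be non-negative")
    if num_condition_frames == 0:
        return Task.TEXT_TO_VIDEO
    if num_condition_frames == 1:
        return Task.IMAGE_TO_VIDEO
    return Task.VIDEO_CONTINUATION


if __name__ == "__main__":
    for n in (0, 1, 16):
        print(n, "conditioning frame(s):", infer_task(n).value)
```

Under this assumption, the same model call serves all three tasks, and only the conditioning input changes.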

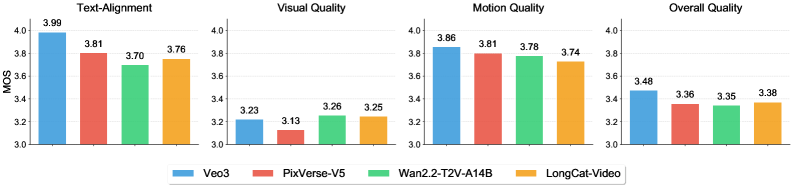

According to internal benchmark evaluations, LongCat-Video performs well on the text-to-video task. It does exceptionally well in both visual quality and motion quality, scoring almost on par with the top model, Wan2.2. The model also achieves robust results in text alignment and overall quality, giving users a consistently high-quality experience across multiple dimensions.

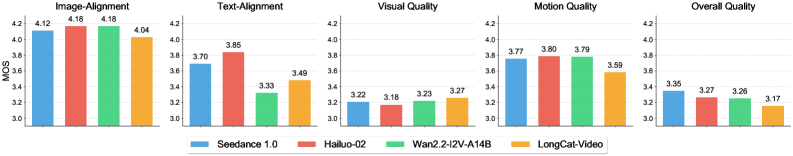

In the image-to-video task, LongCat-Video stands out for its visual quality, outperforming other models such as Wan2.2 and demonstrating its significant advantage in generating high-quality images. However, there is still room for improvement in areas such as image alignment and overall quality.

Recently, Cloudflare experienced an outage, causing connection failures for a wide range of internet applications, including X, ChatGPT, and Canva. Let's take a look at how LongCat-Video simulated people's reactions to the outage 👇

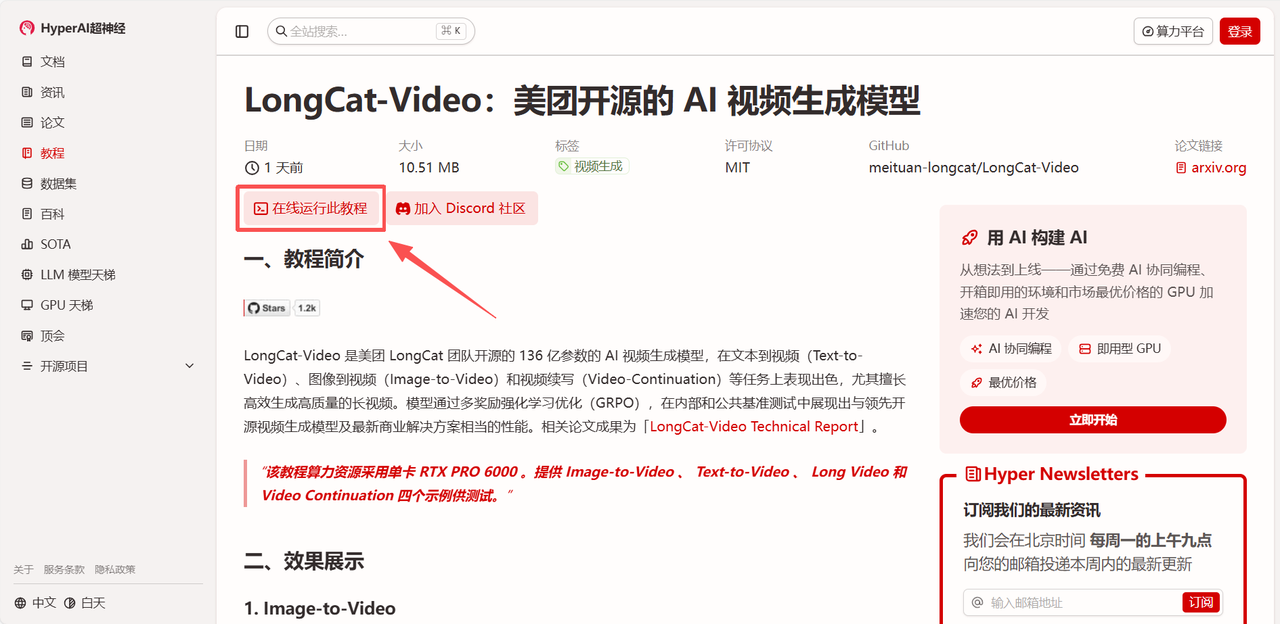

Currently, "LongCat-Video: Meituan's Open-Source AI Video Generation Model" is available on the HyperAI website's "Tutorials" section. Click the link below to experience the one-click deployment tutorial ⬇️

Tutorial Link:

Demo Run

1. After entering the hyper.ai homepage, select "LongCat-Video: Meituan's open-source AI video generation model", or go to the "Tutorials" page and select it, then click "Run this tutorial online".

2. After the page redirects, click "Clone" in the upper right corner to clone the tutorial into your own container.

Note: You can switch languages in the upper right corner of the page. Currently, Chinese and English are available. This tutorial will show the steps in English.

3. Select "NVIDIA RTX PRO 6000 Blackwell" as the compute resource and "PyTorch" as the image, choose "Pay As You Go" or "Daily Plan/Weekly Plan/Monthly Plan" as needed, then click "Continue job execution".

4. Wait for resource allocation. The first clone will take approximately 3 minutes. Once the status changes to "Running", click the jump arrow next to "API Address" to go to the Demo page.

Effect Demonstration

After entering the demo interface, you can choose from four examples for testing: Image-to-Video, Text-to-Video, Long Video, and Video Continuation. This article uses Image-to-Video as the example.

After uploading the sample image, enter a prompt in the "Prompt" field. Under "Advanced Options", you can adjust further parameters, such as the negative prompt, the resolution, and the random seed (the starting point of randomness in the generation process), to get a result closer to what you want.
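The "starting point of randomness" setting typically works like a random seed: the same seed reproduces the same initial noise, and therefore the same output when all other settings are unchanged. The sketch below illustrates that general behavior with Python's standard library only. The function `sample_noise` is a hypothetical stand-in, not the demo's or LongCat-Video's actual sampling code.

```python
import random


def sample_noise(seed: int, n: int = 4) -> list[float]:
    """Draw n Gaussian values from a generator initialized with `seed`.

    Hypothetical stand-in for the initial noise of a video generator.
    """
    rng = random.Random(seed)  # private generator; global state is untouched
    return [rng.gauss(0.0, 1.0) for _ in range(n)]


if __name__ == "__main__":
    same_a = sample_noise(seed=42)
    same_b = sample_noise(seed=42)
    other = sample_noise(seed=43)
    print("same seed, same noise:", same_a == same_b)
    print("different seed, different noise:", same_a != other)
```

In practice, this means you can keep the seed fixed while refining the prompt to compare results fairly, or change the seed to get a different variation of the same prompt.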

Recently, Cloudflare experienced an outage, causing connection failures for a wide range of internet applications, including X, ChatGPT, and Canva. Let's take a look at LongCat-Video's simulation of how people reacted to the outage 👇

That wraps up the tutorial HyperAI recommends this time. You are welcome to try it out!

Tutorial Link: