A Breakthrough in Image Geolocation! The University of Maine, Google, OpenAI, and Others Propose the LocDiff Framework, Achieving Precise Global Positioning Without Grids or Reference Libraries

Location decoding, which infers geographic location from contextual information, is widely used in trajectory synthesis, building outline segmentation, and image geolocation. Of these, image geolocation, which associates visual content with geographic coordinates, has become a key research focus. It predicts latitude and longitude by analyzing image features and applies to data such as wildlife monitoring imagery and urban street views.

However, unlike mature image classification tasks, image geolocation involves a complex nonlinear mapping that is difficult to model accurately. Early studies used regression models to map image features directly to latitude and longitude, but these models were unstable, with prediction errors often reaching hundreds of kilometers on a global scale. To overcome this problem, researchers proposed the "discretization transduction" method, which recasts geolocation as a classification problem over predefined spherical partitions or a retrieval problem over a reference image library. These methods, however, remain limited in spatial resolution and geographic coverage.

In recent years, generative techniques, represented by diffusion models, have opened up new avenues for geolocation research thanks to their ability to model continuous data distributions. Building on this, a joint team from the University of Maine, the University of Texas, the University of Georgia, the University of Maryland, Google, OpenAI, and Harvard University has proposed a new approach. They found that conventional generative methods fail for two fundamental reasons. First, geographic coordinates lie on an embedded Riemannian manifold rather than in Euclidean space, so adding noise to them directly causes projection distortion. Second, raw coordinates carry no multi-scale spatial information, which makes it hard to model complex distributions. To address these two issues, the team proposed the "Spherical Harmonics Dirac Delta (SHDD)" encoding and the integrated framework LocDiff. By constructing an encoding method and a diffusion architecture adapted to spherical geometry, they achieved accurate positioning without relying on preset grids or external image libraries, opening a new technical path for this field.

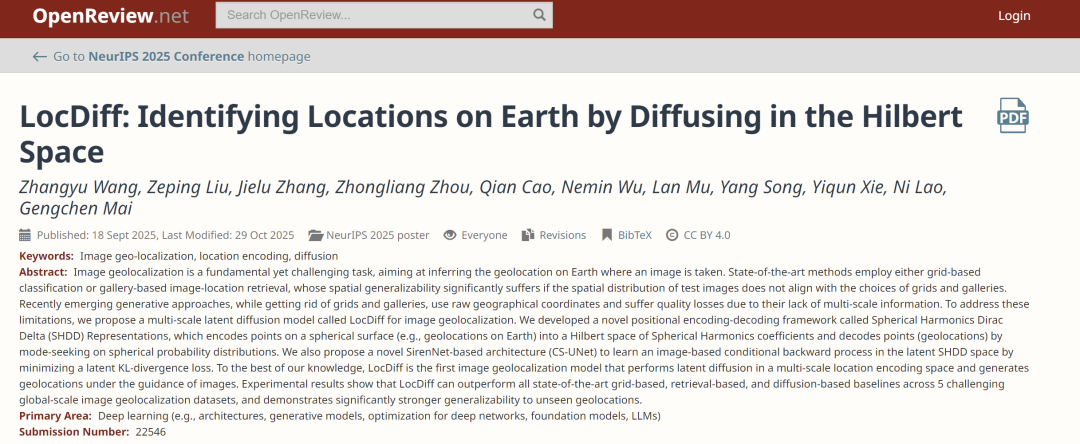

The related research findings, titled "LocDiff: Identifying Locations on Earth by Diffusing in the Hilbert Space," have been included in NeurIPS 2025.

Paper address:

https://openreview.net/forum?id=ghybX0Qlls

Datasets: Following the GeoCLIP benchmark, with evaluation on three representative global-scale image geolocation datasets

To ensure the comparability and reliability of the results, the researchers followed the benchmark settings of GeoCLIP, a model widely used in image geolocation. Training used the MP16 dataset (MediaEval Placing Tasks 2016), which contains 4.72 million images with precise geographic annotations. Testing used three representative global-scale image geolocation datasets: Im2GPS3k, YFCC26k, and GWS15k.

It should be noted that the test sets Im2GPS3k and YFCC26k are quite similar to the training set MP16 in data distribution, and some images may overlap. This gives retrieval-based methods such as GeoCLIP a certain advantage in matching and helps improve their retrieval accuracy. During inference, the researchers adopted the strategy used by mainstream models such as GeoCLIP and SimCLR: they generated 16 augmented versions of each test image and used the geographic center of the resulting predictions as the final predicted location. This strategy significantly improves model performance. For example, in comparative experiments, removing the image augmentation and result averaging steps drops GeoCLIP's 1-kilometer accuracy on Im2GPS3k from 14% to below 10%.
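The averaging step can be illustrated with a minimal sketch. It assumes that "geographic center" means the spherical centroid of the per-augmentation predictions (the mean of their 3D unit vectors projected back onto the sphere); the function name `geographic_center` is hypothetical and not taken from the paper.

```python
import numpy as np

def geographic_center(lats_deg, lons_deg):
    """Spherical centroid of several (lat, lon) predictions, in degrees.

    Assumption of this sketch: the "geographic center" is the normalized
    mean of the predictions' 3D unit vectors.
    """
    lat = np.radians(np.asarray(lats_deg, dtype=float))
    lon = np.radians(np.asarray(lons_deg, dtype=float))
    # Work with unit vectors so the +/-180 degree longitude wrap-around
    # does not corrupt the average.
    xyz = np.stack([np.cos(lat) * np.cos(lon),
                    np.cos(lat) * np.sin(lon),
                    np.sin(lat)], axis=-1)
    mean = xyz.mean(axis=0)
    norm = np.linalg.norm(mean)
    if norm < 1e-12:
        raise ValueError("centroid undefined: predictions cancel out")
    x, y, z = mean / norm
    return float(np.degrees(np.arcsin(z))), float(np.degrees(np.arctan2(y, x)))

# Two predictions straddling the antimeridian: the centroid lies near
# longitude 180, whereas a naive mean of the longitudes would give 0.
center_lat, center_lon = geographic_center([10.0, 10.0], [179.0, -179.0])
```

Averaging in 3D rather than in latitude/longitude is a design choice that keeps the result meaningful near the poles and the antimeridian.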

To evaluate the model's positioning ability at different spatial scales, the study set five evaluation levels: street level (1 km), city level (25 km), regional level (200 km), national level (750 km), and continental level (2,500 km). Performance is quantified as the proportion of test samples whose predicted location falls within the given distance of the true location.
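As a rough illustration of this metric, the sketch below computes accuracy at the five thresholds using the haversine great-circle distance. The Earth radius value, the function names, and the toy coordinates are assumptions made for this example, not details from the paper.

```python
import numpy as np

EARTH_RADIUS_KM = 6371.0               # mean Earth radius (assumed here)
THRESHOLDS_KM = (1, 25, 200, 750, 2500)

def haversine_km(lat1, lon1, lat2, lon2):
    """Great-circle distance in kilometers between points given in degrees."""
    lat1, lon1, lat2, lon2 = map(np.radians, (lat1, lon1, lat2, lon2))
    a = (np.sin((lat2 - lat1) / 2) ** 2
         + np.cos(lat1) * np.cos(lat2) * np.sin((lon2 - lon1) / 2) ** 2)
    return 2 * EARTH_RADIUS_KM * np.arcsin(np.sqrt(np.clip(a, 0.0, 1.0)))

def accuracy_at_thresholds(pred, truth, thresholds=THRESHOLDS_KM):
    """Fraction of predictions within each distance threshold of the truth."""
    pred = np.asarray(pred, dtype=float)
    truth = np.asarray(truth, dtype=float)
    dist = haversine_km(pred[:, 0], pred[:, 1], truth[:, 0], truth[:, 1])
    return {t: float(np.mean(dist <= t)) for t in thresholds}

# Toy data: one prediction a few dozen meters off (Paris), one that
# confuses New York with Chicago (over 1,000 km off).
truth = [(48.8566, 2.3522), (40.7128, -74.0060)]
pred = [(48.8570, 2.3530), (41.8781, -87.6298)]
acc = accuracy_at_thresholds(pred, truth)
```

In this toy case only the first prediction counts at the 1 km to 750 km levels, while both count at the 2,500 km level.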

LocDiff: Latent diffusion model for generating spherical positions

LocDiff aims to build a latent diffusion framework suited to generating positions on a sphere. Its central idea is to construct a position encoding space that avoids both sparsity and nonlinearity. Specifically, this is achieved by tightly integrating the Spherical Harmonics Dirac Delta (SHDD) encoding-decoding framework, the conditional Siren-UNet (CS-UNet) architecture, and efficient computational strategies.

To clarify the technical direction, this research first mathematically defines the core properties that an ideal position encoding space should possess: Let the coordinate space C be a unit sphere embedded in three-dimensional Euclidean space, parameterized using angular coordinates (θ, φ); the ideal position encoder PE must be an injective function from C to the high-dimensional space ℝ^d (ensuring encoding uniqueness), while the decoder PD must be a surjective function mapping back from ℝ^d to C (ensuring decoding integrity). More importantly, the encoding space needs to be densely filled through a continuous difference metric ℰ, and the decoder must meet the stability requirement that "small perturbations in the encoding space only cause small changes in the spherical coordinates"—these two properties are key to overcoming existing technical bottlenecks.

However, existing methods face a dilemma in meeting these requirements. If the location encoding space is sparse, the diffusion model struggles to run a stable diffusion process in it, which leads directly to poor training convergence and low decoding accuracy. If a dense location embedding space is used instead, the diffusion process runs smoothly, but the highly nonlinear mapping between the embedding and the coordinate space makes it hard to infer the correct geographic coordinates from an embedding: minimizing distance in the embedding space often does not correspond to minimizing distance in geographic space.

To overcome this challenge, the researchers proposed the SHDD encoding scheme. It first represents a point (θ₀, φ₀) on the sphere as a spherical Dirac delta function δ_(θ₀, φ₀), then encodes this function as its vector of spherical harmonic coefficients, which forms the SHDD representation. In practice, setting a maximum degree L for the spherical harmonics truncates the theoretically infinite-dimensional coefficient vector to a compact (L+1)²-dimensional representation. The larger the value of L, the finer the spatial information the representation captures, which gives flexible support for multi-scale positioning.
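The dimension count can be made explicit with the standard spherical harmonic expansion of a Dirac delta. The block below assumes real, orthonormal spherical harmonics $Y_l^m$; the paper's exact normalization and ordering conventions may differ, and the symbol $\mathrm{SHDD}_L$ is introduced here only for illustration.

```latex
\delta_{(\theta_0,\phi_0)}(\theta,\phi)
  = \sum_{l=0}^{\infty}\sum_{m=-l}^{l}
    Y_l^m(\theta_0,\phi_0)\, Y_l^m(\theta,\phi),
\qquad
\mathrm{SHDD}_L(\theta_0,\phi_0)
  = \bigl( Y_l^m(\theta_0,\phi_0) \bigr)_{0 \le l \le L,\; -l \le m \le l},
\qquad
\sum_{l=0}^{L} (2l+1) = (L+1)^2 .
```

Each degree $l$ contributes $2l+1$ coefficients, so truncating at degree $L$ leaves exactly $(L+1)^2$ of them, and higher degrees add progressively finer angular detail.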

The SHDD encoding space is inherently dense: each point e in it corresponds to a unique spherical function Fₑ. The difference between this function and the spherical Dirac delta δ_(θ₀, φ₀) of the true location is quantified by the reverse KL divergence, and this difference measure ℰ is the continuous metric required above. More importantly, the SHDD KL divergence and the Wasserstein-2 distance are linked by an explicit bound, which mathematically guarantees that differences in the encoding space are consistent with differences between probability distributions on the sphere and lays the foundation for decoding stability. SHDD encoding also addresses the nonlinearity problem of traditional methods. A heatmap comparison shows that, compared with traditional embedding methods, the spherical distance measured by SHDD varies more smoothly. This smoothness greatly reduces the risk of error propagation during decoding and supports accurate positioning.

Building on the SHDD representation, the researchers designed a mode-seeking decoder for efficient decoding. It exploits the mode-seeking property of the reverse KL divergence and recovers coordinates by finding the region of the sphere where the probability mass of the spherical function is most concentrated. The hyperparameter ρ balances decoding resolution against stability: a larger ρ makes the result less sensitive to local peaks but coarser, while a smaller ρ improves precision but makes the result more susceptible to local noise. Because the decoder has no learnable parameters, it offers two advantages: it introduces no additional loss at the decoding stage, and it removes any dependence on predefined spherical partitions or external reference image libraries, which lifts the application limits of traditional methods.
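The encoding and decoding steps described above can be sketched in a few lines of NumPy. This is a simplified illustration under stated assumptions, not the authors' implementation: it uses real orthonormal spherical harmonics, a Fibonacci lattice as the anchor set, and a plain argmax over anchors that ignores the neighborhood parameter ρ. All function names are hypothetical.

```python
import math
import numpy as np

def real_sph_harm(theta, phi, L):
    """Real orthonormal spherical harmonics up to degree L.

    theta: polar angle(s) in [0, pi]; phi: azimuth(s) in radians.
    Returns an array of shape (N, (L + 1) ** 2).
    """
    theta = np.atleast_1d(np.asarray(theta, dtype=float))
    phi = np.atleast_1d(np.asarray(phi, dtype=float))
    x, s = np.cos(theta), np.sin(theta)
    # Associated Legendre values P[l, m] via the standard recurrences.
    P = np.zeros((L + 1, L + 1, theta.size))
    P[0, 0] = 1.0
    for m in range(1, L + 1):
        P[m, m] = -(2 * m - 1) * s * P[m - 1, m - 1]
    for m in range(L):
        P[m + 1, m] = (2 * m + 1) * x * P[m, m]
        for l in range(m + 2, L + 1):
            P[l, m] = ((2 * l - 1) * x * P[l - 1, m]
                       - (l + m - 1) * P[l - 2, m]) / (l - m)
    cols = []
    for l in range(L + 1):
        for m in range(-l, l + 1):
            a = abs(m)
            norm = math.sqrt((2 * l + 1) / (4 * math.pi)
                             * math.factorial(l - a) / math.factorial(l + a))
            if m > 0:
                ang = math.sqrt(2) * np.cos(m * phi)
            elif m < 0:
                ang = math.sqrt(2) * np.sin(a * phi)
            else:
                ang = np.ones_like(phi)
            cols.append(norm * P[l, a] * ang)
    return np.stack(cols, axis=1)

def shdd_encode(theta0, phi0, L):
    """Truncated coefficients of the Dirac delta at (theta0, phi0)."""
    return real_sph_harm(theta0, phi0, L)[0]

def fibonacci_anchors(n):
    """Roughly uniform anchor points on the sphere (an assumed anchor set)."""
    i = np.arange(n) + 0.5
    theta = np.arccos(1 - 2 * i / n)
    phi = (np.pi * (1 + 5 ** 0.5) * i) % (2 * np.pi)
    return theta, phi

def decode_argmax(coeffs, anchor_theta, anchor_phi, L):
    """Evaluate the encoded function at every anchor and take the peak."""
    basis = real_sph_harm(anchor_theta, anchor_phi, L)  # (N, (L+1)^2)
    k = int(np.argmax(basis @ coeffs))                  # matmul + argmax
    return float(anchor_theta[k]), float(anchor_phi[k])

L = 8
theta0, phi0 = np.radians(90 - 44.88), np.radians(-68.67 % 360)
code = shdd_encode(theta0, phi0, L)
anchor_theta, anchor_phi = fibonacci_anchors(2000)
theta_hat, phi_hat = decode_argmax(code, anchor_theta, anchor_phi, L)
```

With a clean (noise-free) code the peak falls on an anchor close to the encoded point, so decoding precision in this sketch is limited by anchor density rather than by the encoding itself.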

The CS-UNet architecture, shown in the figure below, is the conditional generation backbone of LocDiff and uses SirenNet as its base module. This choice stems from the fact that spherical harmonic coefficients are essentially superpositions of sine and cosine functions, and SirenNet's sinusoidal activation maintains gradient flow well, which suits the propagation of spherical harmonic features. The core unit of CS-UNet, C-Siren, performs conditional denoising through a feature fusion mechanism. It takes the latent vector x, the image condition embedding e_I, and the diffusion step t as input. First, x and e_I are projected into hidden vectors. Next, the discrete diffusion time step t is transformed into scale and offset vectors to complete unconditional denoising. Finally, the image condition and the denoised features are fused, and the adjusted features are passed to the next module, forming a complete conditional guidance chain.
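The data flow of a C-Siren unit, as described in the paragraph above, might look roughly like the following NumPy sketch. Everything beyond that description is an assumption: the random weights stand in for trained ones, the sinusoidal time embedding is borrowed from standard DDPM practice, fusion is done by concatenation followed by a linear layer, and the dimensions (81, 512, 64) are illustrative only.

```python
import numpy as np

rng = np.random.default_rng(0)

def linear(in_dim, out_dim):
    """Hypothetical helper: random weights standing in for trained ones."""
    weight = rng.uniform(-1, 1, size=(in_dim, out_dim)) / np.sqrt(in_dim)
    return weight, np.zeros(out_dim)

def timestep_embedding(t, dim):
    """Sinusoidal embedding of an integer diffusion step (DDPM-style)."""
    half = dim // 2
    freqs = np.exp(-np.log(10000.0) * np.arange(half) / half)
    return np.concatenate([np.sin(t * freqs), np.cos(t * freqs)])

class CSirenBlock:
    """Illustrative conditional Siren block; not the authors' code."""

    def __init__(self, x_dim, cond_dim, hidden_dim, t_dim=32, w0=1.0):
        self.w0, self.t_dim = w0, t_dim
        self.proj_x = linear(x_dim, hidden_dim)
        self.proj_c = linear(cond_dim, hidden_dim)
        self.proj_t = linear(t_dim, 2 * hidden_dim)   # -> (scale, shift)
        self.fuse = linear(2 * hidden_dim, hidden_dim)

    def __call__(self, x, e_img, t):
        # 1) Project latent and image condition, with sine activations.
        h = np.sin(self.w0 * (x @ self.proj_x[0] + self.proj_x[1]))
        c = np.sin(self.w0 * (e_img @ self.proj_c[0] + self.proj_c[1]))
        # 2) Turn the time step into scale and shift vectors.
        t_emb = timestep_embedding(t, self.t_dim)
        scale, shift = np.split(t_emb @ self.proj_t[0] + self.proj_t[1], 2)
        h = h * (1.0 + scale) + shift
        # 3) Fuse with the image condition (concatenation is assumed here).
        fused = np.concatenate([h, c]) @ self.fuse[0] + self.fuse[1]
        return np.sin(self.w0 * fused)

block = CSirenBlock(x_dim=81, cond_dim=512, hidden_dim=64)
x = rng.standard_normal(81)        # noisy SHDD vector for L = 8
e_img = rng.standard_normal(512)   # stand-in for an image embedding
out_a = block(x, e_img, t=10)
out_b = block(x, e_img, t=500)
```

Stacking such blocks with skip connections would give a UNet-like network; that wiring is omitted here because the article does not specify it.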

LocDiff is trained within the standard DDPM framework on pairs of images and spherical positions. First, a frozen CLIP encoder converts the image into a fixed-dimensional embedding e_I, and the corresponding spherical position (θ, φ) is encoded as an SHDD representation and stored for later use. In the forward diffusion process, noise is gradually added to the SHDD representation until it becomes a pure Gaussian noise vector. In the reverse (denoising) process, CS-UNet, guided by the image embedding e_I, gradually recovers the original SHDD representation from the noise vector. The training loss is the SHDD KL divergence, which, compared with the traditional spherical MSE loss, is more numerically stable and preserves multi-scale spatial information, helping the model learn both global and local features.
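The forward noising step of standard DDPM has a closed form, sketched below. The linear beta schedule with 1,000 steps is the common default from the DDPM literature and is an assumption here; the article does not state which schedule LocDiff uses. The vector `x0` is a placeholder for an SHDD representation.

```python
import numpy as np

def make_alpha_bar(num_steps=1000, beta_start=1e-4, beta_end=0.02):
    """Cumulative product of (1 - beta_t) for a linear beta schedule."""
    betas = np.linspace(beta_start, beta_end, num_steps)
    return np.cumprod(1.0 - betas)

def q_sample(x0, t, alpha_bar, rng):
    """Sample x_t ~ q(x_t | x_0) = N(sqrt(a_bar_t) x_0, (1 - a_bar_t) I)."""
    eps = rng.standard_normal(x0.shape)
    x_t = np.sqrt(alpha_bar[t]) * x0 + np.sqrt(1.0 - alpha_bar[t]) * eps
    return x_t, eps

rng = np.random.default_rng(0)
alpha_bar = make_alpha_bar()
x0 = rng.standard_normal(81)   # placeholder for an (L+1)^2 = 81-dim SHDD vector
t = 500
x_t, eps = q_sample(x0, t, alpha_bar, rng)
```

At small t the sample stays close to `x0`, and at the final step almost all of the signal has been replaced by Gaussian noise, which is the starting point for inference.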

During inference, the model starts from random Gaussian noise and, guided by the embedding of the input image, gradually generates an SHDD coefficient vector through CS-UNet. The mode-seeking decoder then converts this vector into spherical coordinates (θ, φ). In the practical implementation, both the SHDD KL divergence and the integration in the mode-seeking step are approximated by sums over a discrete set of anchor points on the sphere. During training, anchor points are sampled randomly across the globe to avoid overfitting.

Focusing on three key dimensions, LocDiff performs exceptionally well in most test scenarios.

To systematically evaluate the performance of the LocDiff model, this study conducted experiments across three dimensions: localization accuracy, generalization ability, and computational efficiency. All experiments adhered to domain-standard settings to ensure fair comparisons.

As shown in the table below, LocDiff performs well in most test scenarios. To further improve fine-grained performance, the researchers designed a hybrid model, LocDiff-H, which combines the strengths of both methods by restricting GeoCLIP's retrieval to within a 200-kilometer radius of the location generated by LocDiff. LocDiff-H performs strongly on Im2GPS3k and YFCC26k but lags behind the original LocDiff on GWS15k, especially at fine-grained scales. This is mainly because GWS15k differs substantially in distribution from the training set, which undermines GeoCLIP's inductive bias.
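The hybrid rule can be sketched as a radius filter followed by a best-score pick. The sketch assumes that GeoCLIP supplies one similarity score per candidate location and that the LocDiff prediction is kept when no candidate lies within the radius; both the fallback and the function names are assumptions, and the scores below are made up for illustration.

```python
import numpy as np

EARTH_RADIUS_KM = 6371.0  # mean Earth radius (assumed here)

def haversine_km(lat1, lon1, lat2, lon2):
    """Great-circle distance in kilometers between points given in degrees."""
    lat1, lon1, lat2, lon2 = map(np.radians, (lat1, lon1, lat2, lon2))
    a = (np.sin((lat2 - lat1) / 2) ** 2
         + np.cos(lat1) * np.cos(lat2) * np.sin((lon2 - lon1) / 2) ** 2)
    return 2 * EARTH_RADIUS_KM * np.arcsin(np.sqrt(np.clip(a, 0.0, 1.0)))

def hybrid_predict(locdiff_latlon, cand_latlon, cand_scores, radius_km=200.0):
    """Pick the best-scoring candidate within radius_km of the LocDiff output."""
    cand_latlon = np.asarray(cand_latlon, dtype=float)
    cand_scores = np.asarray(cand_scores, dtype=float)
    dist = haversine_km(locdiff_latlon[0], locdiff_latlon[1],
                        cand_latlon[:, 0], cand_latlon[:, 1])
    inside = dist <= radius_km
    if not inside.any():
        # Assumed fallback: keep the generative prediction unchanged.
        return float(locdiff_latlon[0]), float(locdiff_latlon[1])
    best = int(np.argmax(np.where(inside, cand_scores, -np.inf)))
    return float(cand_latlon[best, 0]), float(cand_latlon[best, 1])

# LocDiff predicts a point near Paris; London has the highest raw score
# but lies outside the 200 km radius, so it is never considered.
candidates = [(48.8566, 2.3522), (48.8049, 2.1204), (51.5074, -0.1278)]
scores = [0.6, 0.4, 0.9]
result = hybrid_predict((48.80, 2.30), candidates, scores)
```

The coarse generative estimate acts as a spatial prior, and retrieval only refines the answer locally.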

As shown in the table below, in comparison with similar generative models, LocDiff outperforms DiffR³, FMR³, and other comparable models on both the OSM-5M and YFCC-4k datasets, validating the advantages of the multi-scale latent diffusion method.

Generalization analysis reveals the unique value of generative methods. The retrieval-based GeoCLIP relies heavily on the spatial coverage of its reference library: its performance degrades significantly when the test set distribution does not match the training set, and even with millions of uniform grid points as candidate locations, its performance at scales of 200 kilometers and above is far worse than with the original reference library. This reflects the method's limited adaptability to unseen locations.

In contrast, LocDiff generalizes robustly. As shown in the table below, its performance remains stable whether the anchor points are MP16 library locations or uniform grid points, and as the number of anchor points increases from 21,000 to 1 million, further confirming its robustness.

In terms of computational efficiency, LocDiff performs exceptionally well. SHDD encoding/decoding, as a deterministic closed-form operation, has a near-constant time complexity and linear space complexity. During training, SHDD encoding can be pre-computed as an embedding lookup table, and decoding is implemented through efficient matrix multiplication and argmax operations. In particular, multi-scale SHDD representations significantly accelerate the convergence of the diffusion process—LocDiff converges on the YFCC dataset in only about 2 million steps, while the best-in-class model requires 10 million steps.

Academic Breakthroughs and Industrial Emergence in Image Geolocation Technology

Image geolocation technology, as an important bridge connecting visual information and the physical world, has made significant progress in both academic research and practical application in recent years.

In academia, a research team at MIT's Computer Science and Artificial Intelligence Laboratory (CSAIL) has achieved a significant breakthrough in spherical position encoding. Addressing the challenge of nonlinear mapping in traditional methods, they proposed an improved scheme based on manifold diffusion, combining spherical harmonic functions with manifold learning. This innovation significantly improves the model's positioning performance in data-sparse regions such as polar regions and oceans, increasing accuracy by 23% at the 100 km scale. The research also introduces an adaptive scale adjustment mechanism, effectively improving the model's generalization ability across cross-regional scenarios.

Paper Title: LocDiffusion: Identifying Locations on Earth by Diffusing in the Spherical Harmonics Dirac Delta Space

Paper link: https://arxiv.org/abs/2503.18142

Meanwhile, the UAE Digital University proposed the GeoCoT framework—a novel multi-step reasoning paradigm designed to enhance the geolocation reasoning capabilities of large vision models. GeoCoT significantly improves positioning performance by gradually integrating contextual information and spatial cues through simulation of the human cognitive process of geolocation. Experiments based on the GeoEval metric show that this framework improves geolocation accuracy by up to 25% while maintaining good interpretability.

Paper Title: Geolocation with Real Human Gameplay Data: A Large-Scale Dataset and Human-Like Reasoning Framework

Paper link: https://arxiv.org/pdf/2502.13759

These academic concepts are rapidly being translated into practical productivity, driving innovative practices in industry. The geospatial intelligence platform developed by PRISM Intelligence, the winner of the 2023 NASA Startup Challenge, is a prime example. This platform uses radiation field technology to transform two-dimensional remote sensing images into high-fidelity three-dimensional digital environments, and combines AI-driven semantic segmentation and dynamic optimization algorithms to achieve natural language interaction with geospatial data.

The Google Earth team used a generative model trained on massive amounts of global street view data to achieve accurate location prediction guided by images, and to automatically fill in image information missing due to weather, construction, and other factors. This technology has increased the efficiency of Google Earth's street view updates by 3 times and expanded its coverage to more remote areas.

These industry practices not only validate the applied value of academic research, but also provide new directions for theoretical innovation through feedback from real-world scenarios, continuously driving image geolocation technology toward greater accuracy, efficiency, and accessibility.

Reference Links:

1. https://science.nasa.gov/science-research/science-enabling-technology/technology-highlights/entrepreneurs-challenge-winner-prism-is-using-ai-to-enable-insights-from-geospatial-data/

2. https://ai.google.dev/competition/projects/prism