Online Tutorial: HuMo-1.7B, a Multimodal Collaborative Video Generation Framework, Enables an Integrated Video Creation Experience With Images, Text, and Audio

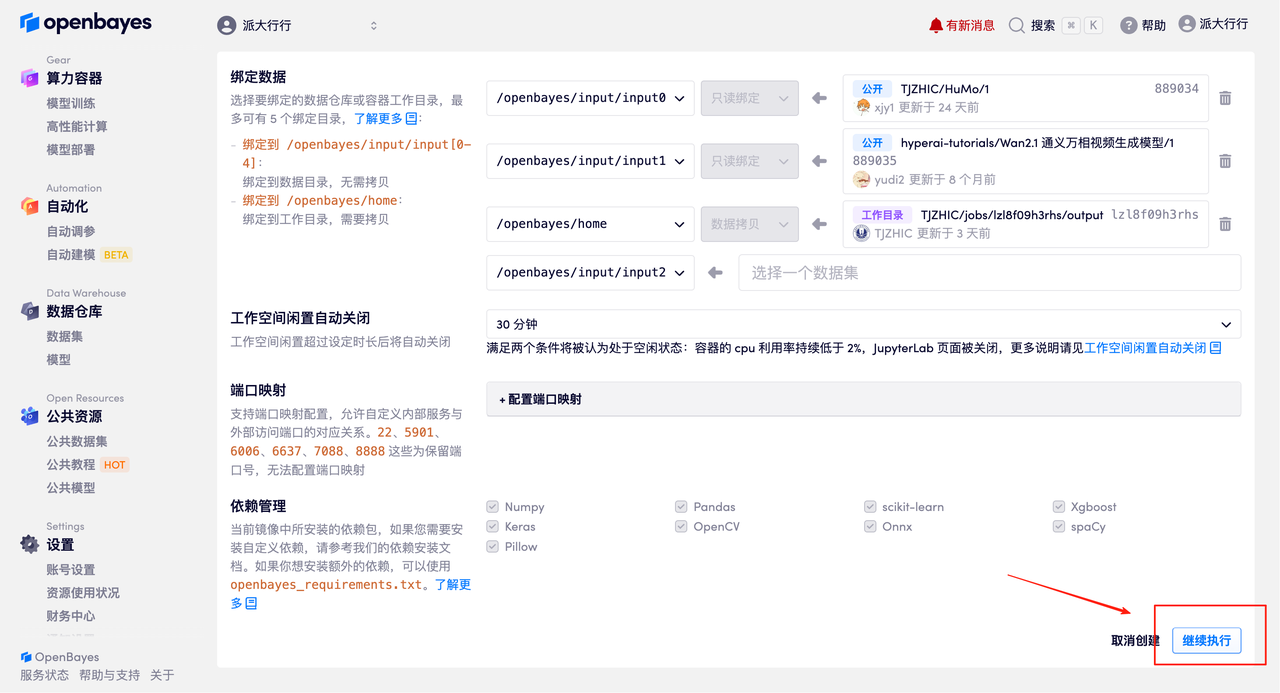

AI-generated videos are becoming increasingly realistic. They are often stunning at first glance, but on closer inspection something feels off. This "uncanny valley" effect, suspended between the real and the fake, is both striking and hard to escape.

Consider a common scenario in creative work. If a client offers only a vague idea, the final product often falls short of expectations; only when the requirements are spelled out along dimensions such as style, characters, tone, and atmosphere does the result come close to the ideal. Video generation works the same way. Unlike images and text, videos carry several kinds of information at once, such as sound, characters, and actions. This means the model must not only "understand" the semantics of the text but also "coordinate" visual and auditory expression.

However, most current models rely on a single modality for input. Recent attempts at multimodal control often struggle to achieve effective collaboration between voice, expression, and movement. Enabling these different modalities to truly collaborate and generate natural and believable human-like videos remains a daunting challenge.

To address this, Tsinghua University and ByteDance's Intelligent Creation Lab jointly released the HuMo framework. HuMo introduces the concept of "collaborative multimodal conditional generation", feeding text, reference images, and audio into a single generative model. It combines a progressive training strategy with a time-adaptive guidance mechanism that dynamically adjusts the guidance weights across denoising steps. This improves both the consistency of character appearance and the synchronization of audio and video, and moves video generation from "multi-stage splicing" toward "one-stop generation."
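To make "adjusting guidance weights across denoising steps" more concrete, here is a minimal sketch. It is not HuMo's implementation: it assumes a nested classifier-free guidance (CFG) formulation, in the style popularized by InstructPix2Pix, with three model predictions per step (unconditional, text + image, and text + image + audio), plus a hypothetical linear schedule that shifts emphasis from the text/image term toward the audio term as denoising proceeds. The function names `weight_schedule` and `guided_prediction`, the weight values, and the direction of the schedule are all illustrative assumptions; HuMo's actual schedule should be checked in the paper. Plain Python lists stand in for the model's noise predictions.

```python
from typing import List, Sequence, Tuple


def weight_schedule(
    step: int,
    num_steps: int,
    early: Tuple[float, float] = (7.5, 2.0),  # hypothetical (w_text_image, w_audio) at the first step
    late: Tuple[float, float] = (3.0, 5.0),   # hypothetical (w_text_image, w_audio) at the last step
) -> Tuple[float, float]:
    """Hypothetical time-adaptive schedule: linearly interpolate the two
    guidance weights from `early` (step 0, noisiest) to `late` (final step)."""
    if num_steps < 2:
        return late
    frac = step / (num_steps - 1)  # 0.0 at the first step, 1.0 at the last
    w_ti = early[0] + frac * (late[0] - early[0])
    w_a = early[1] + frac * (late[1] - early[1])
    return w_ti, w_a


def guided_prediction(
    eps_null: Sequence[float],
    eps_text_image: Sequence[float],
    eps_all: Sequence[float],
    w_ti: float,
    w_a: float,
) -> List[float]:
    """Nested classifier-free guidance over three predictions:
    eps = eps_null + w_ti * (eps_text_image - eps_null) + w_a * (eps_all - eps_text_image)
    """
    if not (len(eps_null) == len(eps_text_image) == len(eps_all)):
        raise ValueError("all predictions must have the same length")
    return [
        n + w_ti * (ti - n) + w_a * (a - ti)
        for n, ti, a in zip(eps_null, eps_text_image, eps_all)
    ]


if __name__ == "__main__":
    num_steps = 10  # mirrors the "Sampling Steps = 10" setting used in the demo
    # Toy stand-ins for the three noise predictions a real model would produce.
    eps_null, eps_ti, eps_all = [0.0, 0.1], [0.2, 0.3], [0.5, 0.4]
    for step in range(num_steps):
        w_ti, w_a = weight_schedule(step, num_steps)
        guided = guided_prediction(eps_null, eps_ti, eps_all, w_ti, w_a)
        print(f"step {step}: w_ti={w_ti:.2f} w_a={w_a:.2f} guided={guided}")
```

Because each guidance term is the difference between two predictions, setting both weights to 1 recovers the fully conditioned prediction, and raising one weight amplifies only the condition that term isolates. Expressing the weights as a function of the step index is what makes the guidance "time-adaptive": changing the schedule does not touch the guidance formula itself.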

Paper:

https://arxiv.org/abs/2509.08519

Original repository:

https://github.com/phantom-video/humo

HuMo also achieves state-of-the-art (SOTA) results on several subtasks, including text following and consistency with the reference image. The project provides two models, 1.7B and 17B: the lighter one is easy to experiment with, while the larger one suits professional and research use, covering the different needs of creators and developers. Below is an example generated by the 17B model:

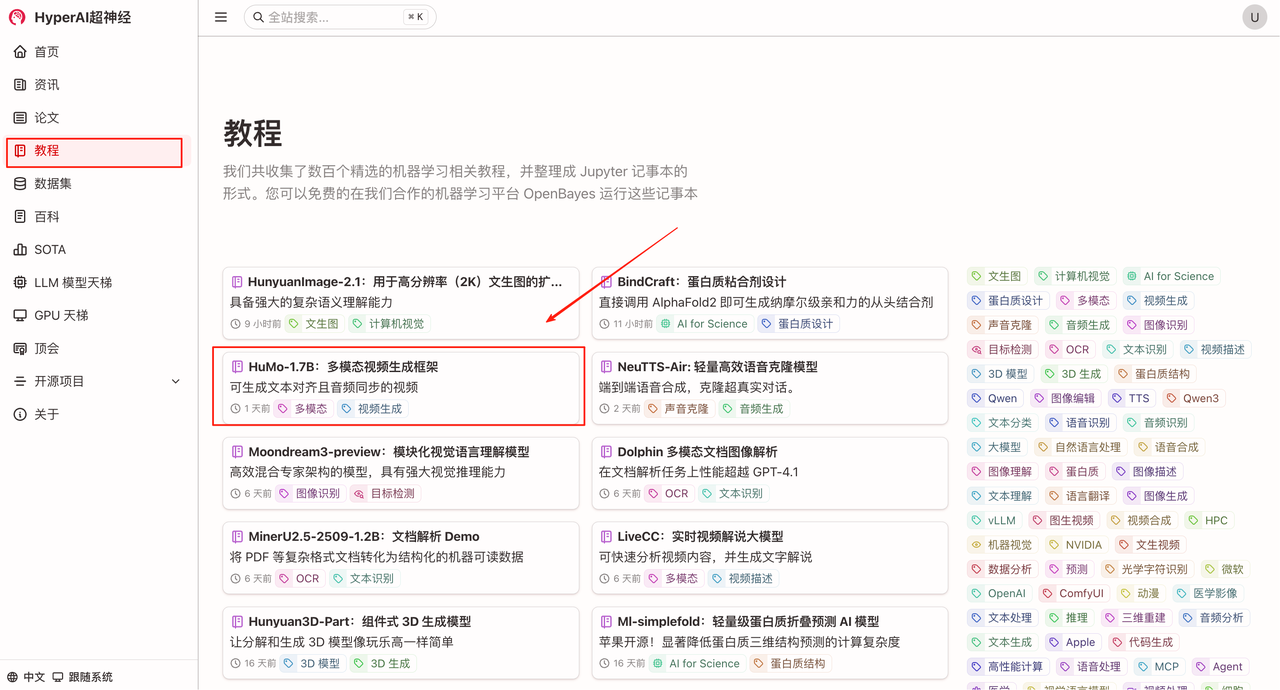

"HuMo-1.7B: Multimodal Video Generation Framework" + "HuMo-17B: Trimodal Collaborative Creation" are now available in the "Tutorials" section of HyperAI's official website (hyper.ai). Why not give it a try? When you give it more information, can the model produce a video that satisfies you?

Tutorial links:

HuMo-1.7B: https://go.hyper.ai/BGQT1

HuMo-17B: https://go.hyper.ai/RSYA

Demo Run

1. On the hyper.ai homepage, open the "Tutorials" page, choose "HuMo-1.7B: Multimodal Video Generation Framework", and click "Run this tutorial online".

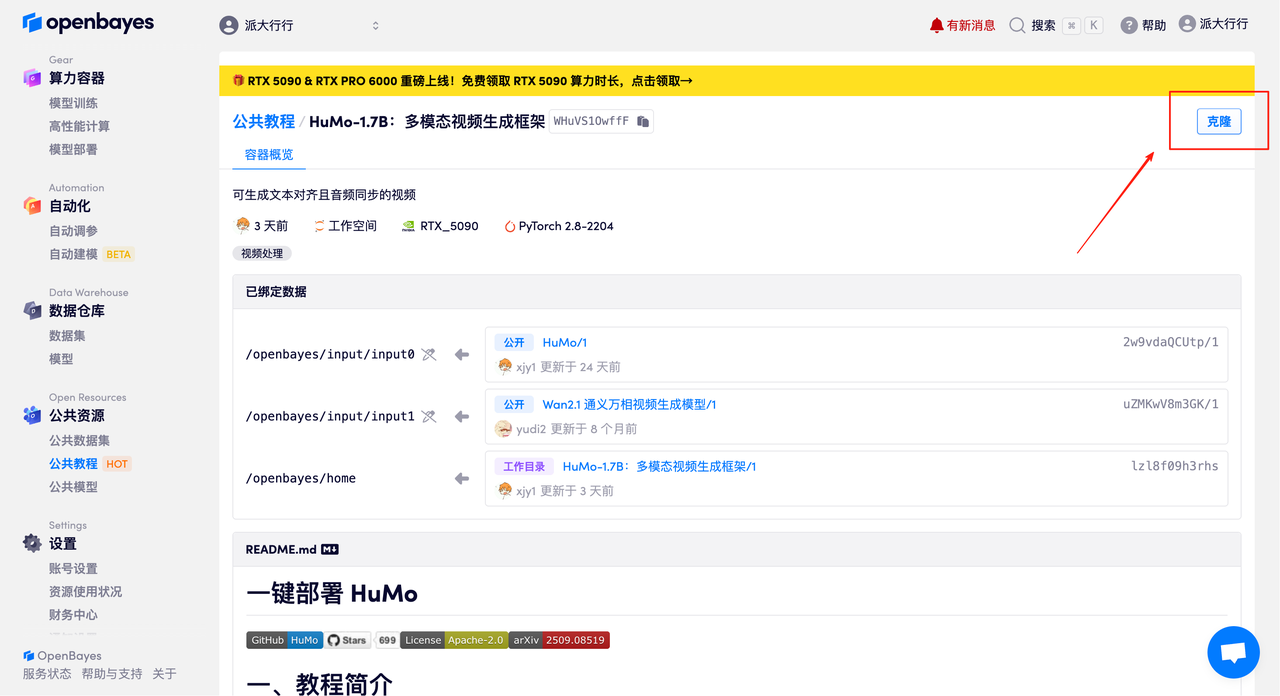

2. After the page jumps, click "Clone" in the upper right corner to clone the tutorial into your own container.

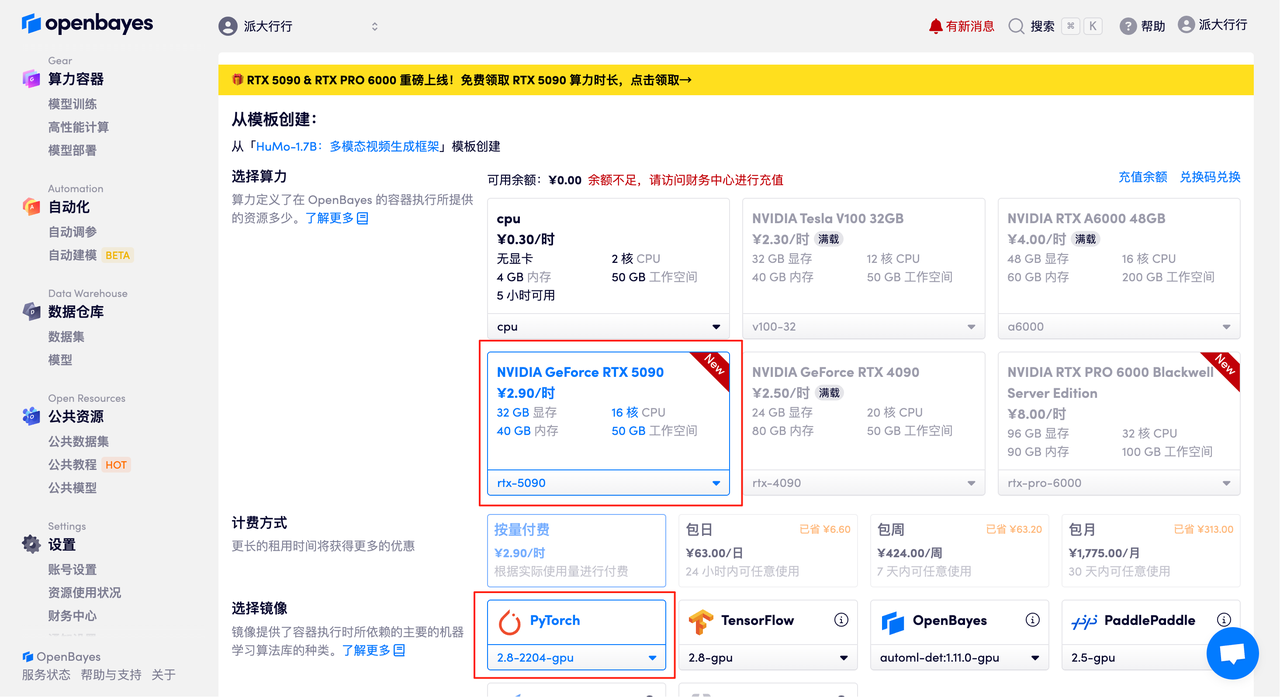

3. Select the NVIDIA GeForce RTX 5090 GPU and the PyTorch image, then click "Continue". The OpenBayes platform offers four billing options: pay-as-you-go, or daily, weekly, or monthly plans. New users who register through the invitation link below receive 4 hours of free RTX 5090 time and 5 hours of free CPU time!

HyperAI exclusive invitation link (copy and open in browser):

https://openbayes.com/console/signup?r=Ada0322_NR0n

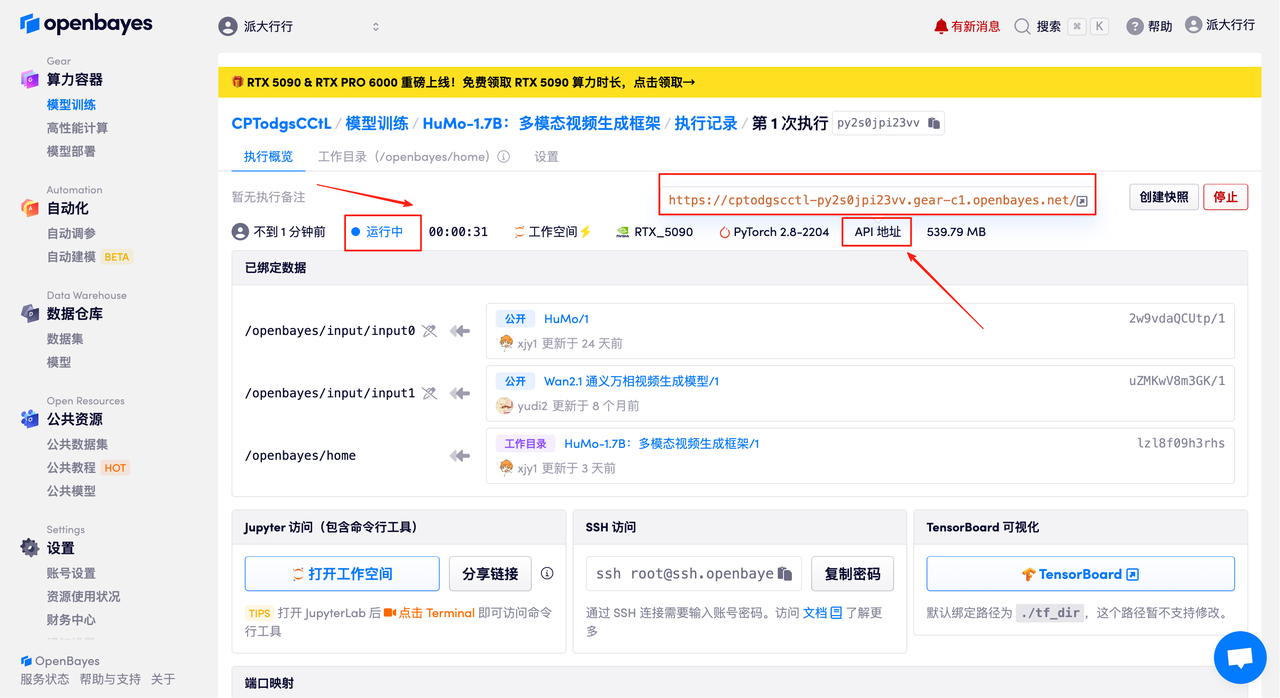

4. Wait for resources to be allocated. The first clone will take about 2 minutes. When the status changes to "Running", click "Open Workspace" to jump to the Demo page.

Effect Demonstration

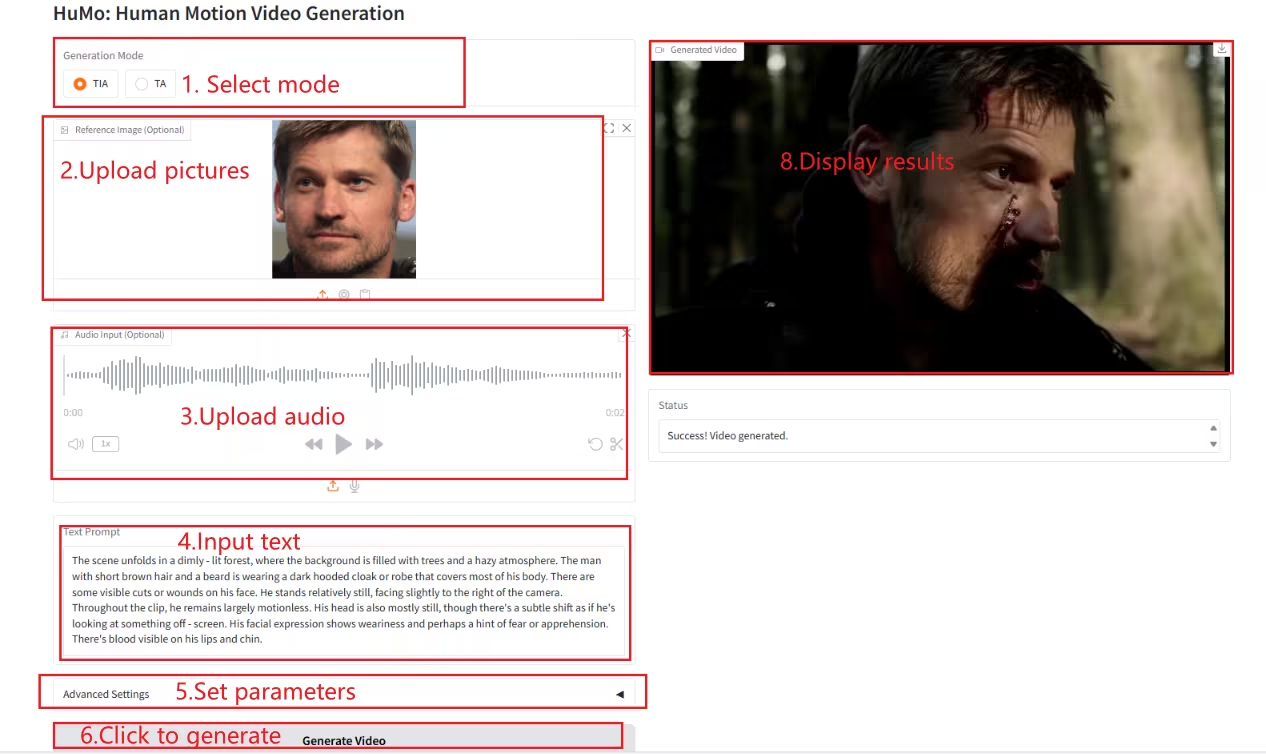

On the Demo page, enter a text description, upload images and audio, adjust the parameters as needed, and click "Generate Video". (Note: with Sampling Steps set to 10, generation takes approximately 3-5 minutes.)

Generation example

That's all for this HyperAI tutorial recommendation. Everyone is welcome to try it out!

Tutorial links:

HuMo-1.7B: https://go.hyper.ai/BGQT1

HuMo-17B: https://go.hyper.ai/RSYAi