AI Predicts Plasma Runaway; MIT and Others Use Machine Learning to Achieve High-Precision Predictions of Plasma Dynamics With Small Samples

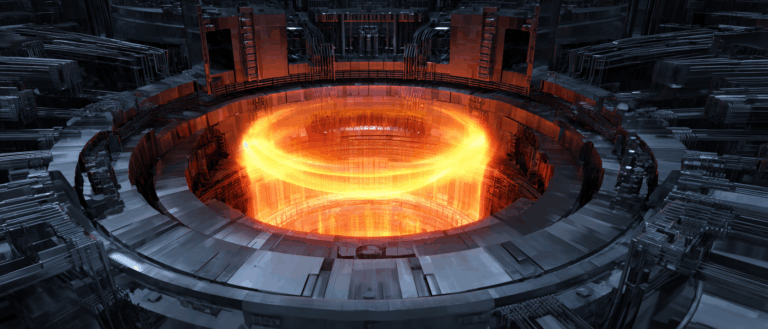

The word "tokamak" may sound unfamiliar on its own. Introduced another way, it may ring a bell: the tokamak is one of the key technologies on the path to what is often called the most ideal energy source, nuclear fusion energy. The "nuclear energy" here does not refer to the nuclear fission used in today's nuclear power plants, but to nuclear fusion, which releases far more energy per unit of fuel, is cleaner and safer, and produces virtually no long-lived radioactive waste.

Nuclear fusion reproduces the energy-generating process inside the sun, releasing energy by fusing light nuclei (such as deuterium and tritium) at extremely high temperatures. Doing this means "building a small sun" on Earth. A tokamak holds plasma hotter than the sun's core inside a ring-shaped vacuum chamber and confines it with a strong magnetic field, keeping the fusion reaction stable.

The ideal is full of hope, but the reality is extremely "sensitive." For a tokamak, the current ramp-down at the end of a discharge is a highly dangerous phase: the device has to handle plasma flowing at up to 100 kilometers per second at temperatures exceeding 100 million degrees Celsius. During this period the plasma undergoes intense transient changes, and even a slight control error can trigger a disruption that may damage the device.

In this context, a research team led by MIT used scientific machine learning (SciML) to combine physical laws with experimental data. The team developed a neural state space model (NSSM) that, from a small amount of data, predicts the plasma dynamics and potential instabilities during ramp-down on the Tokamak à Configuration Variable (TCV), adding another safeguard for the controlled shutdown of the "artificial sun."

The related research, titled "Learning plasma dynamics and robust rampdown trajectories with predict-first experiments at TCV," was published in Nature Communications.

Research highlights:

* A neural state space model (NSSM) combining physical constraints and data-driven methods was proposed to achieve high-precision dynamic prediction and fast parallel simulation during the tokamak discharge ramp-down phase;

* "Predict-first" extrapolation tests were completed in TCV experiments; this closed loop of predicting first and experimenting afterwards provides a genuine validation of data-driven control.

Paper address:

https://www.nature.com/articles/s41467-025-63917-x

Dataset: Efficient Learning with Small Samples

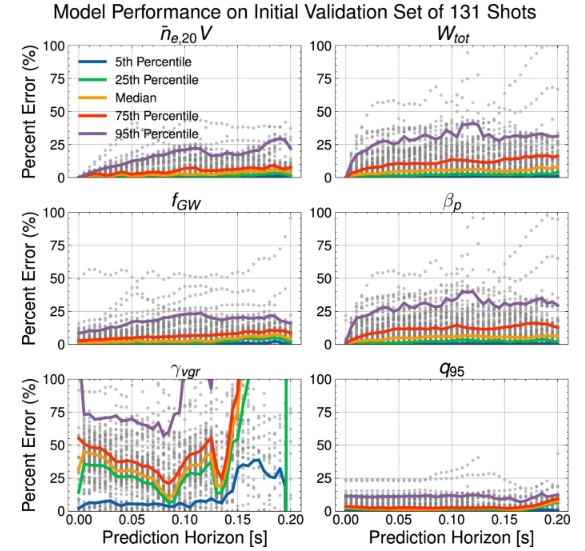

The dataset used to train the model consists of 442 recent discharge records from the TCV device: 311 were used for training (only 5 of which were in the high-performance regime) and 131 for validation. By machine learning standards, this is a tiny dataset.

With just this small dataset, the model learned to predict complex plasma dynamics and can simulate tens of thousands of ramp-down trajectories per second in parallel on a single A100 GPU, demonstrating strong learning and prediction capabilities.
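To make the idea of simulating many trajectories in parallel concrete, here is a minimal JAX sketch. It is not the authors' code: the one-variable decay model, the constants `DT` and `TAU_E`, and the random heating-power traces are all illustrative assumptions. Only the general pattern is shown, namely writing a single-trajectory rollout once and letting `jax.vmap` batch it.

```python
import jax
import jax.numpy as jnp

DT = 0.01     # time step in seconds (assumed)
TAU_E = 0.1   # fixed confinement time in seconds (assumed placeholder)

def rollout(w0, powers):
    """Integrate dW/dt = P - W / TAU_E with explicit Euler for one trajectory."""
    def step(w, p):
        w_next = w + DT * (p - w / TAU_E)
        return w_next, w_next
    _, traj = jax.lax.scan(step, w0, powers)
    return traj

# vmap maps the rollout over a batch of power traces; jit compiles the batch.
batched_rollout = jax.jit(jax.vmap(rollout, in_axes=(None, 0)))

key = jax.random.PRNGKey(0)
# 10,000 hypothetical heating-power traces, 100 time steps each.
powers = jax.random.uniform(key, (10_000, 100), minval=0.0, maxval=2.0)
trajs = batched_rollout(jnp.array(1.0), powers)  # shape (10000, 100)
```

On an accelerator the whole batch runs as one compiled kernel sequence, which is the kind of design that makes very large numbers of candidate trajectories cheap to evaluate.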

Model validation metrics

The Neural State Space Model: Physics as the skeleton and neural networks as the soul

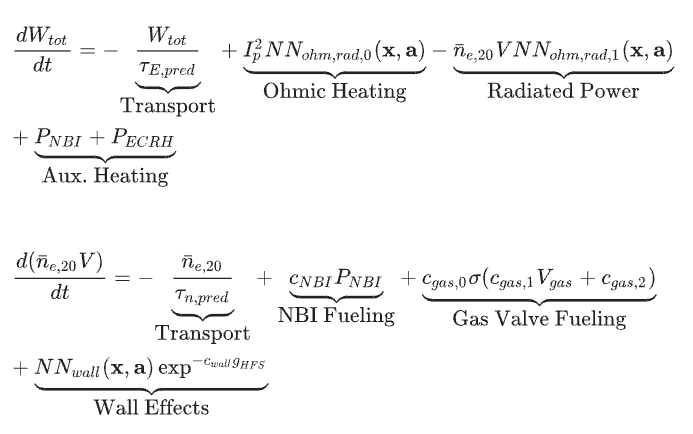

The core of the research is to build a model that can accurately predict the complex dynamics of plasma during the shutdown phase. To this end, the research team designed a "neural state space model" that integrates physics and data.

The skeleton of this model is a set of zero-dimensional physical equations, which mainly describe the energy balance and particle balance of the plasma. However, some key terms (such as confinement time and radiation losses) are difficult to model accurately from first principles. The research team therefore embedded neural networks in these core parts, allowing the model to learn these hard-to-simulate physical effects from experimental data. It is like a self-driving car built on a standard vehicle chassis whose "driving experience" is trained on real-world road data.
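The "physics skeleton plus learned terms" idea can be sketched in a few lines of JAX. This is a simplified illustration, not the paper's model: it keeps only a single energy-balance equation, dW/dt = P_heat - W / tau_E, and the network sizes, feature choice, and helper names (`init_mlp`, `mlp`, `confinement_time`, `dynamics`) are assumptions made for this example.

```python
import jax
import jax.numpy as jnp

def init_mlp(key, sizes):
    """Random weights for a small fully connected network."""
    params = []
    for n_in, n_out in zip(sizes[:-1], sizes[1:]):
        key, sub = jax.random.split(key)
        w = jax.random.normal(sub, (n_in, n_out)) / jnp.sqrt(n_in)
        params.append((w, jnp.zeros(n_out)))
    return params

def mlp(params, x):
    """tanh hidden layers followed by a linear output layer."""
    for w, b in params[:-1]:
        x = jnp.tanh(x @ w + b)
    w, b = params[-1]
    return x @ w + b

def confinement_time(params, state, action):
    """Learned closure: the network outputs tau_E, kept positive by softplus."""
    features = jnp.concatenate([state, action])
    return jax.nn.softplus(mlp(params, features)[0]) + 1e-3

def dynamics(params, state, action):
    """Physics skeleton: dW/dt = P_heat - W / tau_E, with tau_E learned."""
    stored_energy = state[0]
    p_heat = action[0]
    tau_e = confinement_time(params, state, action)
    return jnp.array([p_heat - stored_energy / tau_e])

params = init_mlp(jax.random.PRNGKey(0), [2, 8, 1])
dw_dt = dynamics(params, jnp.array([1.0]), jnp.array([0.5]))
```

The structure of the equation is fixed by physics, so the network only has to learn the term that first principles cannot supply, which is one plausible reason such a model can work with few discharges.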

Specifically, the model takes as input a series of controllable "actions," such as the rate of change of the plasma current and the neutral beam injection power. By solving the hybrid system of differential equations made up of "physics equations + neural networks," the model predicts how the plasma state evolves step by step.
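The step-by-step prediction described above can be sketched as an action-driven rollout. The example below is a toy under stated assumptions: the state is just plasma current and stored energy, the fixed `TAU_E` stands in for the learned terms, the units are arbitrary, and explicit Euler replaces whatever solver the authors actually use.

```python
import jax
import jax.numpy as jnp

TAU_E = 0.05  # seconds; fixed placeholder for the learned confinement time

def dynamics(state, action):
    """State = [plasma current, stored energy]; action = [dIp/dt, NBI power]."""
    dip_dt, p_nbi = action[0], action[1]
    stored_energy = state[1]
    return jnp.array([dip_dt, p_nbi - stored_energy / TAU_E])

def rollout(state0, actions, dt):
    """Apply one action per time step and return the predicted state history."""
    def step(state, action):
        new_state = state + dt * dynamics(state, action)
        return new_state, new_state
    _, trajectory = jax.lax.scan(step, state0, actions)
    return trajectory

# Hypothetical rampdown: current ramped down at a constant rate, beams off.
state0 = jnp.array([140.0, 10.0])
actions = jnp.tile(jnp.array([-100.0, 0.0]), (100, 1))
trajectory = rollout(state0, actions, dt=0.01)  # shape (100, 2)
```

Because the actions are explicit inputs, the same rollout can be reused to score any candidate rampdown trajectory before it is tried on the machine.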

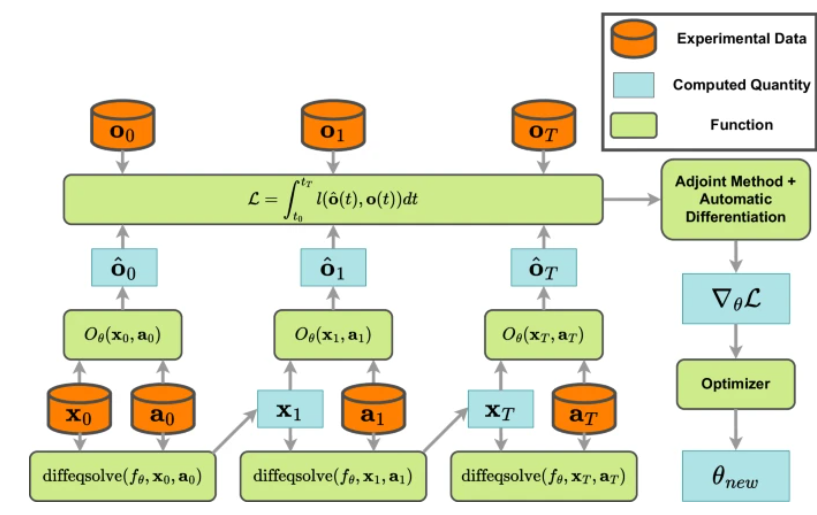

Training the neural state space model (NSSM) follows an efficient, automated workflow. The model consists of a dynamics function fθ and an observation function Oθ. The model is defined, forward simulations generate predictions, and the predictions are compared with the experimental observations to compute the loss. The model parameters are then optimized using automatic differentiation and the adjoint method, implemented with diffrax and JAX.
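The simulate, compare, and update loop can be illustrated with plain JAX. This sketch makes several simplifying assumptions: a single learnable parameter `log_tau` replaces the neural networks, the observation function is the identity, the "experimental" data are synthetic, and gradients come from differentiating through a hand-written Euler loop with `jax.value_and_grad` rather than through diffrax's adjoint machinery.

```python
import jax
import jax.numpy as jnp

DT = 0.01             # time step in seconds (assumed)
LEARNING_RATE = 1.0   # plain gradient descent step size (assumed)

def simulate(log_tau, w0, powers):
    """Forward simulation of dW/dt = P - W / tau with explicit Euler."""
    tau = jnp.exp(log_tau)
    def step(w, p):
        w_next = w + DT * (p - w / tau)
        return w_next, w_next
    _, traj = jax.lax.scan(step, w0, powers)
    return traj

def loss_fn(log_tau, w0, powers, observed):
    """Mean squared error between predicted and observed stored energy."""
    pred = simulate(log_tau, w0, powers)
    return jnp.mean((pred - observed) ** 2)

@jax.jit
def train_step(log_tau, w0, powers, observed):
    loss, grad = jax.value_and_grad(loss_fn)(log_tau, w0, powers, observed)
    return log_tau - LEARNING_RATE * grad, loss

# Synthetic "experiment" generated with a true confinement time of 0.2 s.
powers = jnp.full((200,), 2.0)
w0 = jnp.array(1.0)
observed = simulate(jnp.log(0.2), w0, powers)

log_tau = jnp.log(0.05)  # deliberately wrong initial guess
for _ in range(300):
    log_tau, loss = train_step(log_tau, w0, powers, observed)
fitted_tau = float(jnp.exp(log_tau))
```

In this toy setup the fitted value should approach the 0.2 s used to generate the data; with real discharges the same loop would run over batches of recorded shots and many more parameters.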

Interesting and inspiring experiments

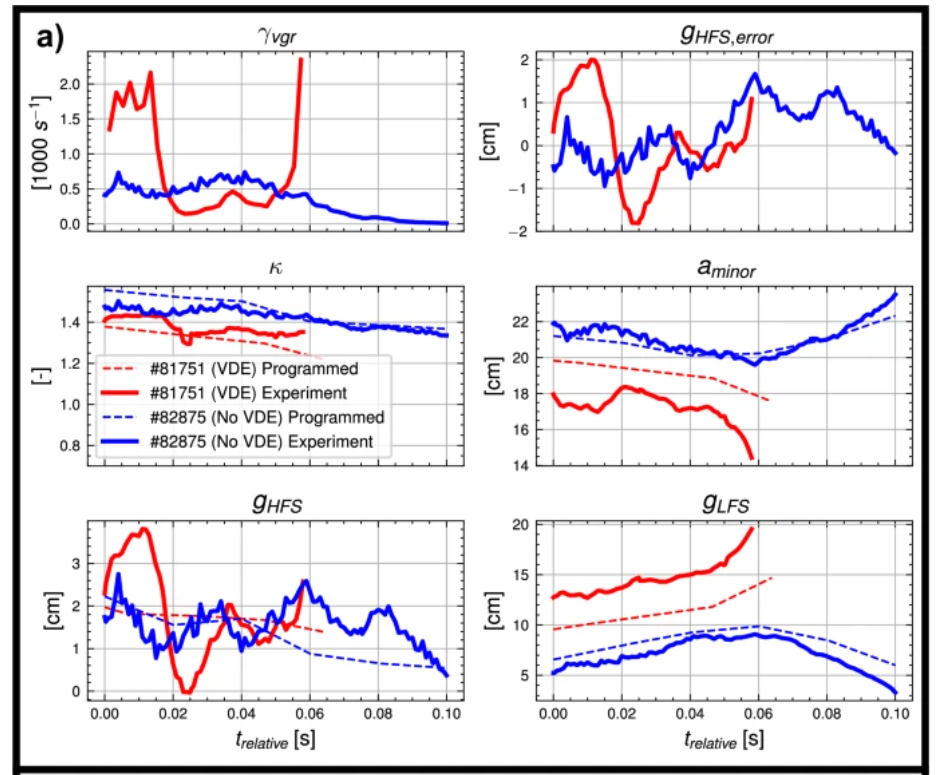

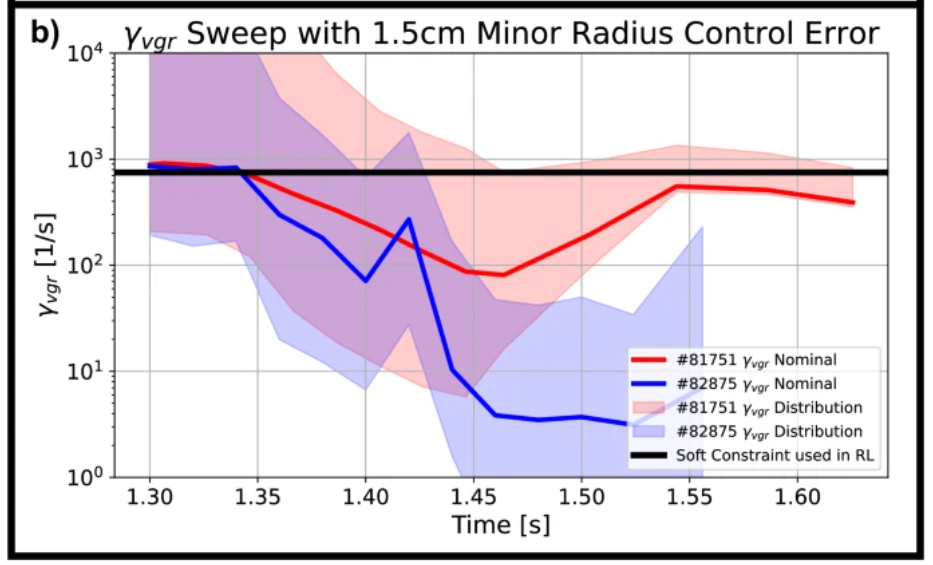

Among all the experiments, the two most instructive results came from the robustness test on sensitivity to control errors and the "predict-first" extrapolation test. The former revealed a vulnerability in the ramp-down phase: a slight deviation in the gap on the high-field side can amplify the vertical instability growth rate by orders of magnitude, triggering a vertical displacement event (VDE).

In discharge #81751, this phenomenon caused the plasma to deviate suddenly and terminate. In response, the research team introduced a distribution over the gap error into the reinforcement learning (RL) environment, so that trajectories adapt to this uncertainty during training. The results show that the re-optimized trajectory (#82875) remained stable under similar error conditions. This improvement demonstrates that the approach can learn robustness from real errors, and indicates that data-driven optimization can improve the fault tolerance of device operation under safety constraints.
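The effect of training against a distribution of gap errors, rather than against the nominal case only, can be shown with a deliberately abstract toy. Everything here is hypothetical: the scalar "margin" decision, the cost function, the Gaussian error model with standard deviation 0.5 in arbitrary units, and the grid search that stands in for the RL optimizer. None of it is taken from the paper.

```python
import jax
import jax.numpy as jnp

def episode_cost(margin, gap_error):
    """Toy cost: more margin means a slower rampdown, but an error larger
    than the margin incurs a heavy instability penalty."""
    slowness = 0.1 * margin
    violation = jax.nn.relu(jnp.abs(gap_error) - margin)
    return slowness + 10.0 * violation

def nominal_cost(margin):
    """Cost when the environment assumes perfect gap control."""
    return episode_cost(margin, 0.0)

def robust_cost(margin, gap_errors):
    """Average cost over sampled gap errors (randomized environment)."""
    return jnp.mean(jax.vmap(lambda e: episode_cost(margin, e))(gap_errors))

key = jax.random.PRNGKey(0)
gap_errors = 0.5 * jax.random.normal(key, (4096,))  # assumed error distribution
margins = jnp.linspace(0.0, 3.0, 61)                # candidate decisions

nominal_costs = jax.vmap(nominal_cost)(margins)
robust_costs = jax.vmap(lambda m: robust_cost(m, gap_errors))(margins)
nominal_best = margins[jnp.argmin(nominal_costs)]
robust_best = margins[jnp.argmin(robust_costs)]
```

The nominal optimum keeps no margin at all, while the optimum under sampled errors keeps a clear buffer. That is the qualitative behavior the article describes: a trajectory optimized with uncertainty in the loop tolerates errors that break the nominal one.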

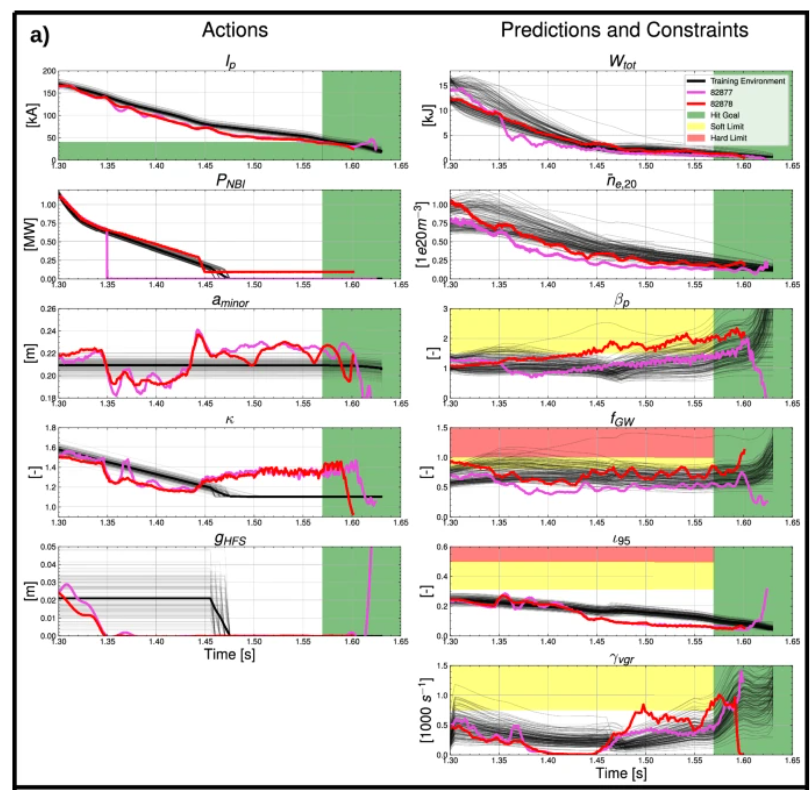

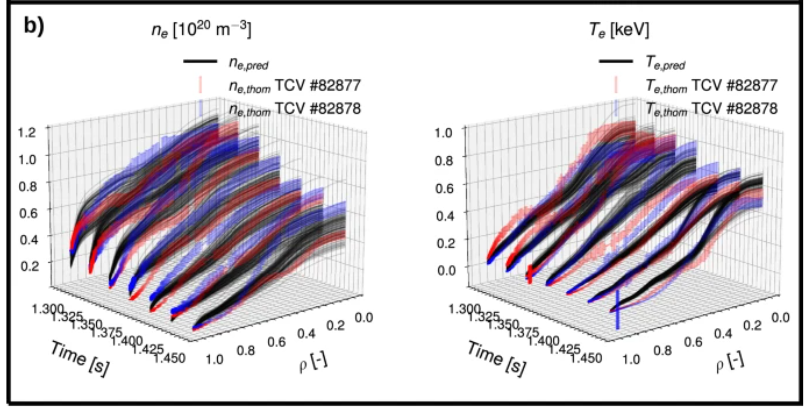

The other experiment, the "predict-first" extrapolation test, examined the model's ability to generalize to a previously unexplored parameter range. The researchers raised the upper current limit from 140 kA to 170 kA and relied entirely on the predictions of the neural state space model (NSSM) to generate trajectories before the experiment. The model's predictions of key physical quantities agreed closely with the measured results, and the discharge was terminated successfully without a disruption.

The journey of making "optimal energy" a reality

The research team is reportedly collaborating with Commonwealth Fusion Systems (CFS) to explore how to use new predictive models and similar tools to better predict plasma behavior, avoid machine disruptions, and achieve safe fusion power generation. Team member Allen Wang stated, "We are committed to overcoming the scientific challenges to achieve routine application of nuclear fusion. While this is just the beginning of a long journey, I believe we have made some good progress." Furthermore, a wealth of new research is emerging in this interdisciplinary field.

The Princeton Plasma Physics Laboratory (PPPL) in the United States, in collaboration with several universities, has developed the Diag2Diag model. By learning the correlations between diagnostic signals from multiple sources, this model can virtually reconstruct key plasma parameters when some sensors fail or observations are limited, significantly improving the monitoring and early warning capabilities of fusion devices. The related research, titled "Diag2Diag: AI-enabled virtual diagnostics for fusion plasma," was published on the arXiv platform.

Paper address:

https://arxiv.org/abs/2405.05908v2

In addition, a study titled "FusionMAE: Large-Scale Pretrained Model to Optimize and Simplify Diagnostic and Control of Fusion Plasma," published on the arXiv platform, proposed FusionMAE, a large-scale self-supervised pretrained model for fusion control systems. This model integrates over 80 diagnostic signals into a unified embedding space. Using a masked autoencoder (MAE) architecture, it learns the underlying correlations between different channels, achieving efficient alignment of diagnostic and control data streams. This pioneers the integration of large-scale AI models in the fusion energy field.
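The masked-autoencoder idea mentioned above can be sketched generically: hide a random subset of diagnostic channels, reconstruct all channels from the visible ones, and score only the hidden ones. This is not FusionMAE's architecture. The toy single-layer encoder and decoder, the latent size of 16, the 75% mask ratio, and the random stand-in signals are all assumptions for illustration.

```python
import jax
import jax.numpy as jnp

def random_channel_mask(key, n_channels, mask_ratio):
    """Boolean mask over channels; True means hidden from the encoder."""
    n_masked = int(n_channels * mask_ratio)
    perm = jax.random.permutation(key, n_channels)
    return jnp.zeros(n_channels, dtype=bool).at[perm[:n_masked]].set(True)

def masked_reconstruction_loss(params, signals, mask):
    """Mean squared reconstruction error, counted on masked channels only."""
    visible = jnp.where(mask, 0.0, signals)      # zero out hidden channels
    latent = jnp.tanh(visible @ params["enc"])   # toy encoder
    recon = latent @ params["dec"]               # toy decoder
    err = (recon - signals) ** 2
    n_scored = signals.shape[0] * jnp.sum(mask)
    return jnp.sum(jnp.where(mask, err, 0.0)) / n_scored

n_channels, n_latent = 80, 16
k_enc, k_dec, k_data, k_mask = jax.random.split(jax.random.PRNGKey(0), 4)
params = {
    "enc": 0.1 * jax.random.normal(k_enc, (n_channels, n_latent)),
    "dec": 0.1 * jax.random.normal(k_dec, (n_latent, n_channels)),
}
signals = jax.random.normal(k_data, (32, n_channels))  # stand-in diagnostics
mask = random_channel_mask(k_mask, n_channels, 0.75)
loss, grads = jax.value_and_grad(masked_reconstruction_loss)(params, signals, mask)
```

Minimizing this loss forces the network to infer hidden channels from visible ones, which is how such a model can pick up correlations between diagnostics without labels.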

Paper address:

https://arxiv.org/abs/2509.12945

There is no doubt that artificial intelligence is becoming an indispensable force in the journey of making "the most ideal energy source - nuclear fusion energy" a reality.

References:

1. https://news.mit.edu/2025/new-prediction-model-could-improve-reliability-fusion-power-plants-1007