Command Palette

Search for a command to run...

NeurIPS 2025: Huazhong University of Science and Technology and Others Release OCRBench v2. Gemini Wins First Place in the Chinese Language Rankings, but Only Scores a Passing grade.

Over the past few decades, the positioning and value of optical character recognition (OCR) technology has undergone a tremendous evolution, from a traditional image recognition tool to a core capability of intelligent information systems. Initially, it primarily extracted printed or handwritten text from images and converted it into computer-understandable text data. Today, with the development of deep learning and multimodal models, driven by diverse demands, the boundaries of OCR technology are constantly expanding. It not only recognizes characters but also understands the structure and semantics of documents.Accurately parse tables, layouts, and mixed text and graphics in complex scenarios.

Furthermore, in large-scale multimodal pre-training, the model is exposed to a large amount of image data containing text, such as web page screenshots, UI interfaces, posters, documents, etc., and OCR capabilities naturally emerge in the unsupervised learning process. This makes the large model no longer dependent on external OCR modules.Instead, it can directly complete recognition, understanding and answering in the end-to-end reasoning process.More importantly, OCR technology is becoming a prerequisite for higher-level intelligent tasks. Only when the model can accurately recognize text in images can it further complete chart parsing, document question answering, knowledge extraction, and even code understanding.

It can be said that performance in OCR tasks is also one of the important indicators for evaluating the capabilities of large multimodal models. Current needs have long gone beyond "reading out text". Tables, charts, handwritten notes, complex layouts in documents, text positioning of text images, and text-based reasoning are all difficult challenges that models need to overcome.However, most traditional OCR evaluation benchmarks have single tasks and limited scenarios, which leads to rapid saturation of model scores and makes it difficult to truly reflect their capabilities in complex applications.

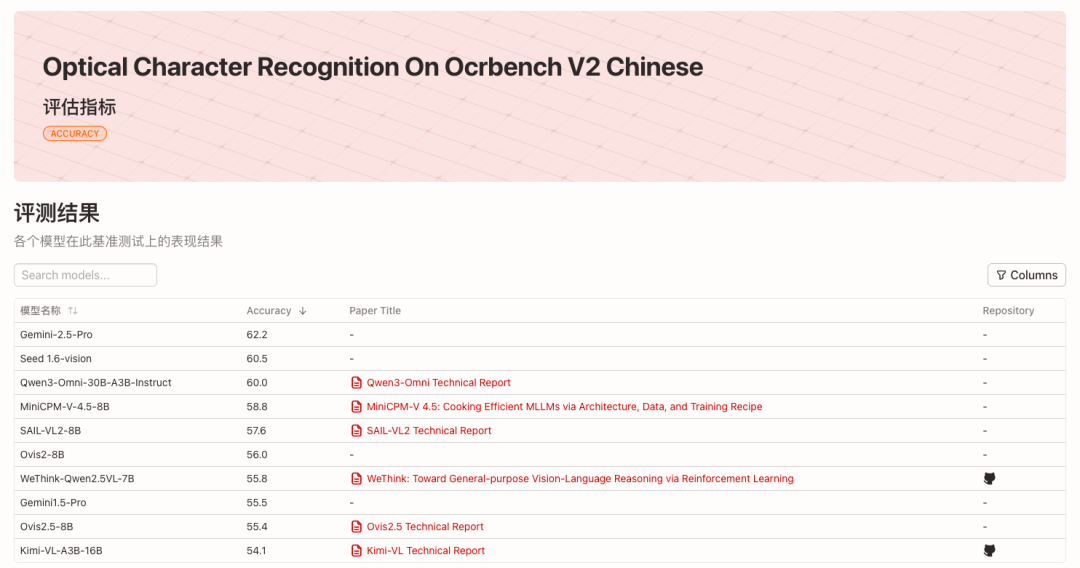

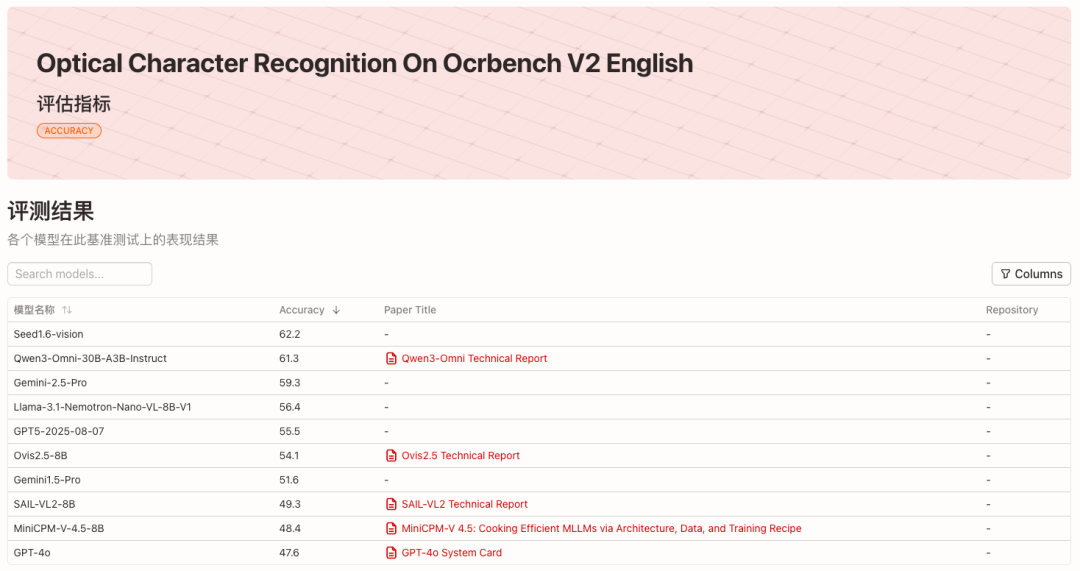

In view of this,Bai Xiang's team at Huazhong University of Science and Technology, in collaboration with South China University of Technology, the University of Adelaide, and ByteDance, launched the next-generation OCR benchmark OCRBench v2.We evaluated 58 mainstream multimodal models from 2023 to 2025 in both Chinese and English. The top 10 models in each list are shown in the figure below:

* View the English rankings:

* View the Chinese list:

* Project open source address:

https://github.com/Yuliang-Liu/MultimodalOCR

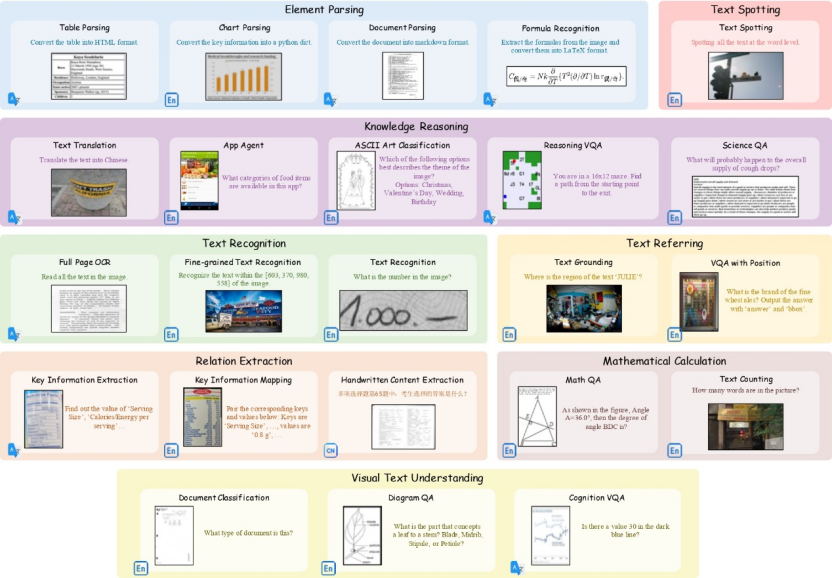

23 sub-tasks covering diverse scenarios

OCRBench v2 covers 23 sub-tasks and 8 core capability dimensions.——Text recognition, text positioning, text detection and recognition, relationship extraction, element parsing, mathematical calculation, visual text understanding and knowledge reasoning.

OCRBench v2's public dataset includes 10,000 high-quality QAs from over 80 academic datasets and some proprietary data. These have been manually reviewed to ensure coverage of diverse scenarios in real-world OCR applications. Additionally, OCRBench v2 includes independent private data, consisting of 1,500 manually collected and annotated QAs. The task settings and scenario coverage are consistent with the public dataset.

* Dataset download address:

https://go.hyper.ai/VNHSX

The team's experiments found that the rankings of public data and private data are highly consistent.This proves the rationality of OCRBench v2 task design, data construction and evaluation indicators.This demonstrates its important value in measuring the existing limitations of large multimodal models.

The related research paper, titled "OCRBench v2: An Improved Benchmark for Evaluating Large Multimodal Models on Visual Text Localization and Reasoning," has been included in the NeurIPS 2025 (Datasets and Benchmarks Track).

* Paper address:

https://go.hyper.ai/VNHSX

Mainstream models are generally biased, with the highest score being just passing.

In the latest evaluation list released by OCRBench v2,Gemini-2.5-Pro won the first place in the Chinese list and the third place in the English list, while Seed1.6-vision won the first place in the English list and the second place in the Chinese list.In the open source camp, Qwen3-Omni-30B-A3B-Instruct ranked second on the English list and third on the Chinese list respectively.

By analyzing the performance of the core capabilities of the models, we can find that these large multimodal models generally have a "biased" phenomenon. Few models can perform well in all core capabilities.Even the top-ranked models only achieved an average score of around 60 out of 100 on both English and Chinese tasks.In addition, each model has slightly different strengths. For example, commercial models such as Gemini-2.5-Pro have a clear advantage in computational questions, demonstrating their strong logical reasoning capabilities. Llama-3.1-Nemotron-Nano-VL-8B-V1, with its powerful text localization capabilities, achieved fourth place on the English list.

While most models perform reasonably well in basic text recognition, they generally score low in tasks requiring fine-grained spatial perception and structured understanding, such as referring, spotting, and parsing. For example, even the top-ranked Seed1.6-vision model scored only 38.0 in spotting, limiting its effectiveness in real-world scenarios like text-based scenes and documents with mixed text and images.

In addition, by comparing the Chinese and English lists,It can be found that the multilingual capabilities of many models are uneven.For example, Llama-3.1-Nemotron-Nano-VL-8B-V1 ranks fourth on the English list (average score 56.4), but only 31st on the Chinese list (average score 40.1), indicating that it has a greater advantage in English scenarios, which may be related to data distribution or training strategy.

At the same time, although closed-source models maintain their lead, excellent open-source models have become highly competitive. From the list, closed-source models such as the Gemini series, GPT5, and Seed1.6-vision have better overall performance, but open-source models such as Qwen-Omni, InternVL, SAIL-VL, and Ovis have become highly competitive.Five of the top 10 models on the English list are open source models, while seven of the top 10 models on the Chinese list are open source models.Open source models can also achieve state-of-the-art performance in tasks such as text localization, element extraction, and visual text understanding.

The OCRBench v2 rankings will be updated quarterly, and HyperAI will continue to track the latest evaluation results.