Online Tutorial: Wan2.2-S2V-14B, a Film-Quality Video Generation Model, Generates Minute-Long Digital Human Videos Using Only a Static Image and Audio

HyperAI recently shared an online tutorial for Wan2.2, the open-source AI video generation model from Alibaba's Tongyi Wanxiang Lab. Billed as the world's first video model built on a Mixture-of-Experts (MoE) architecture, Wan2.2 impressed us with its cinematic quality and high computational efficiency. Have you tried it yet? Feel free to share your thoughts in the comments section.

In August of this year, the Tongyi Wanxiang team made further progress. Building on the Wan2.2 text-to-video foundation model, they developed Wan-14B and, on top of it, released Wan2.2-S2V-14B, an open-source audio-driven video generation model. Given only a static image and an audio clip, the model can generate minute-long digital human videos with cinematic texture, and it supports a variety of image types and framings. In comparative experiments against existing state-of-the-art models, the research team found that Wan2.2-S2V-14B delivers significant improvements in both expressiveness and the realism of the generated content.

To maintain generation quality in complex scenarios, the research team took a two-pronged approach to building a more comprehensive training dataset. On the one hand, the team automatically screened data from large-scale open-source datasets such as OpenHumanVid. On the other hand, the researchers manually selected and customized high-quality samples. Both sets of data were then filtered uniformly using several methods, including pose tracking, clarity and aesthetics assessment, and audio-visual synchronization detection, ultimately producing a talking-head dataset. By adopting a hybrid parallel training strategy, the team was able to train the model efficiently and make full use of its capacity.
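To make the uniform filtering step more concrete, here is a minimal sketch of how two candidate pools (automatically screened and manually curated) could be passed through the same set of checks. The `Clip` fields, score scales, and threshold values are all hypothetical placeholders chosen for illustration; the source does not describe the team's actual scoring models or cutoffs.

```python
from dataclasses import dataclass


@dataclass
class Clip:
    clip_id: str
    source: str          # "open_source" (auto-screened) or "curated" (manual)
    pose_tracked: bool   # hypothetical: did pose tracking find a stable subject?
    sharpness: float     # hypothetical clarity score in [0, 1]
    aesthetics: float    # hypothetical aesthetics score in [0, 1]
    av_offset_ms: float  # hypothetical estimated audio-video offset, in ms


# Hypothetical thresholds, for illustration only.
MIN_SHARPNESS = 0.5
MIN_AESTHETICS = 0.5
MAX_AV_OFFSET_MS = 80.0


def passes_filters(clip: Clip) -> bool:
    """Apply the same checks to every clip, regardless of which pool it came from."""
    return (
        clip.pose_tracked
        and clip.sharpness >= MIN_SHARPNESS
        and clip.aesthetics >= MIN_AESTHETICS
        and abs(clip.av_offset_ms) <= MAX_AV_OFFSET_MS
    )


def build_dataset(auto_screened, curated):
    """Merge both pools, then keep only clips that pass the uniform filter."""
    return [c for c in list(auto_screened) + list(curated) if passes_filters(c)]


auto = [
    Clip("a1", "open_source", True, 0.8, 0.7, 20.0),
    Clip("a2", "open_source", False, 0.9, 0.9, 10.0),   # fails pose tracking
]
curated = [
    Clip("c1", "curated", True, 0.6, 0.55, -120.0),     # fails A/V sync check
    Clip("c2", "curated", True, 0.95, 0.9, 0.0),
]
kept = build_dataset(auto, curated)
print([c.clip_id for c in kept])  # ['a1', 'c2']
```

The point of the design is that curated samples get no special treatment: every clip, whatever its origin, must clear the same quality gates before entering the training set.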

"Wan2.2-S2V-14B: Cinematic Audio-Driven Video Generation" is now available in the "Tutorials" section of HyperAI's official website (hyper.ai). Come and create your own digital human!

Tutorial Link:

Demo Run

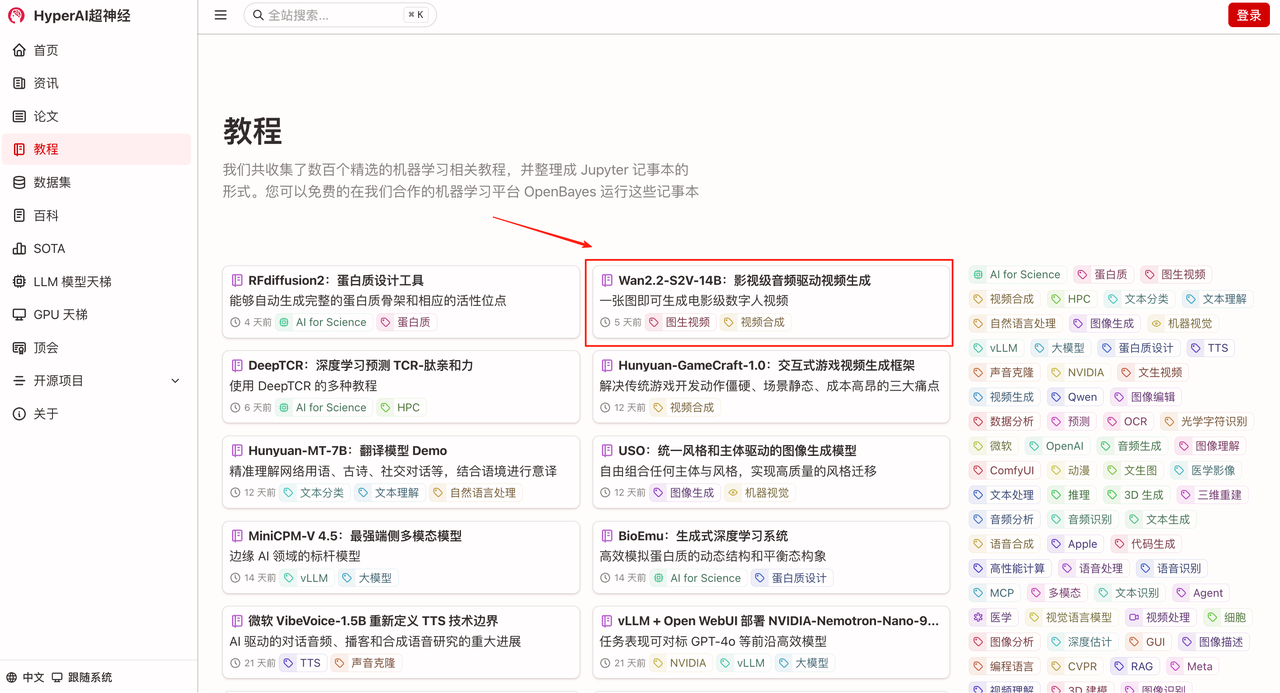

1. On the hyper.ai homepage, open the Tutorials page, select "Wan2.2-S2V-14B: Film-Quality Audio-Driven Video Generation", and click "Run this tutorial online".

2. After the page redirects, click "Clone" in the upper-right corner to clone the tutorial into your own container.

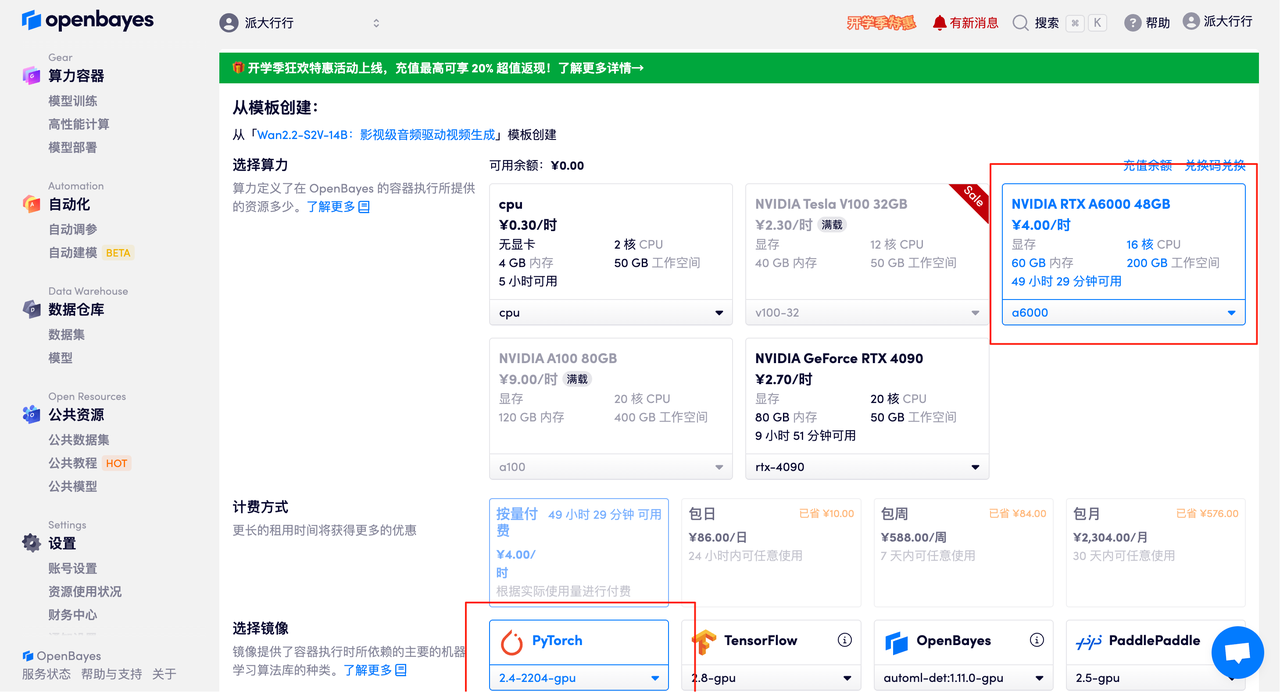

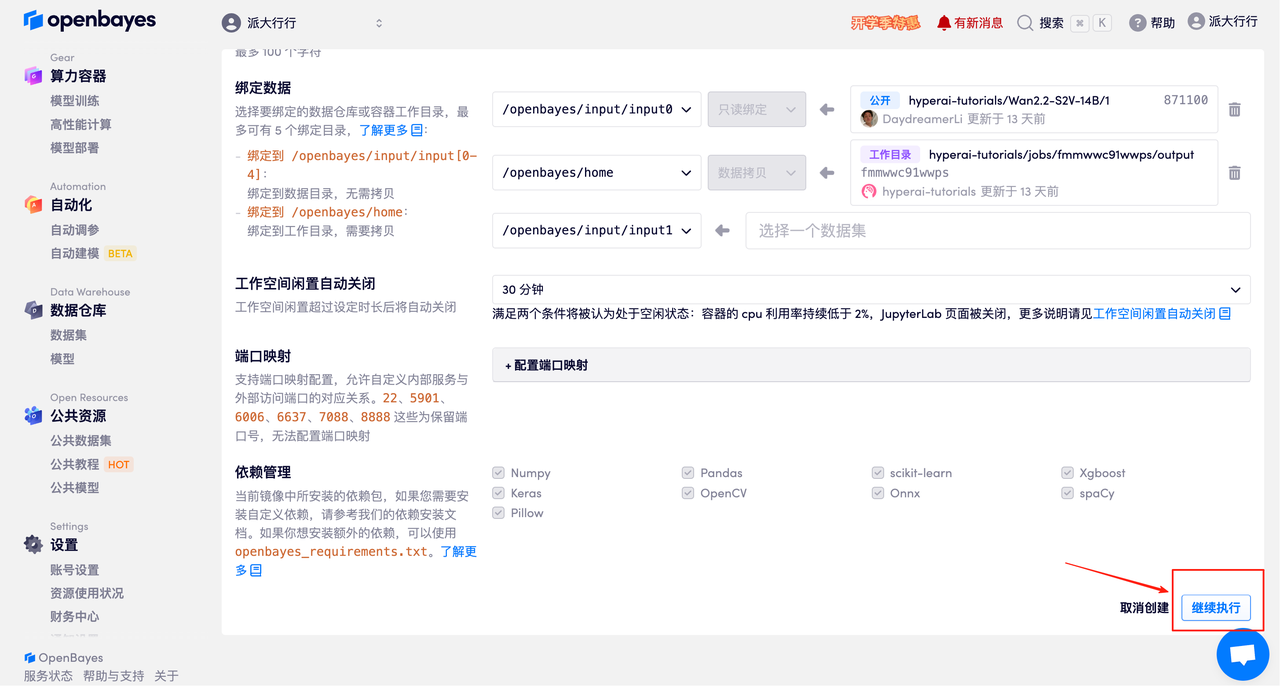

3. Select the NVIDIA RTX A6000 48GB GPU and the PyTorch image, then click "Continue". The OpenBayes platform offers four billing options: pay-as-you-go, or daily, weekly, or monthly plans. New users who register through the invitation link below receive 4 hours of free RTX 4090 time and 5 hours of free CPU time!

HyperAI exclusive invitation link (copy and open in browser):

https://openbayes.com/console/signup?r=Ada0322_NR0n

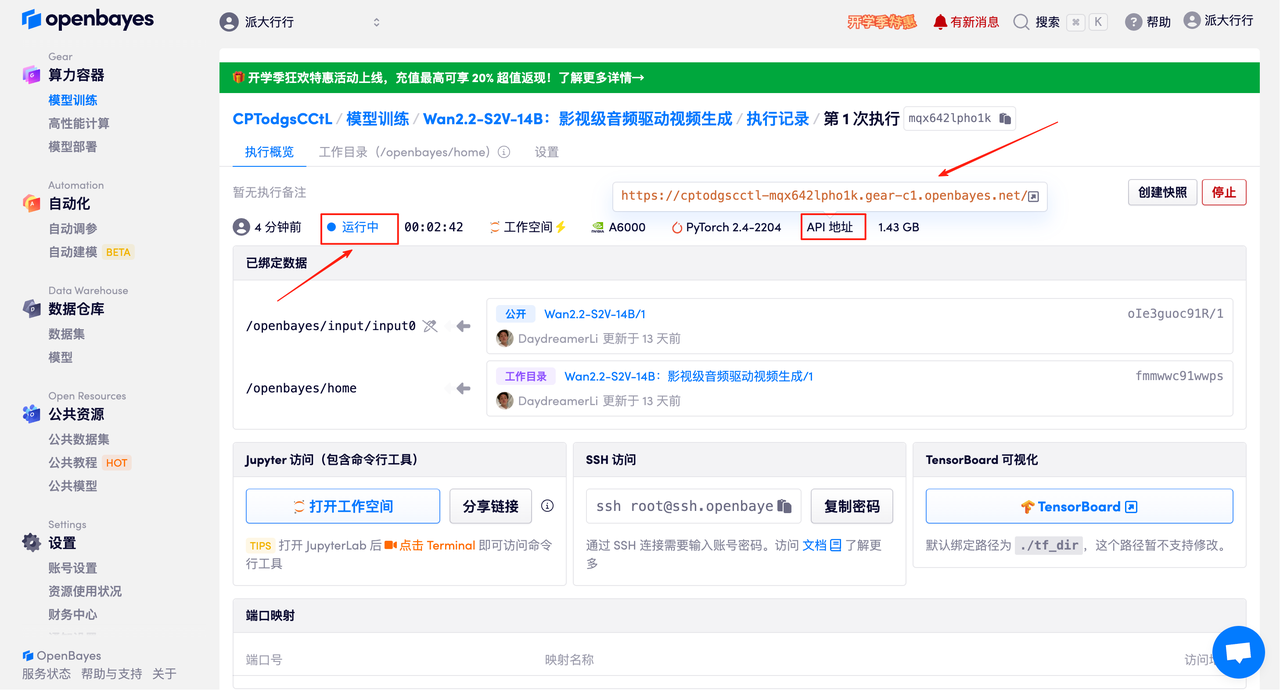

4. Wait for resources to be allocated; the first clone takes about 3 minutes. When the status changes to "Running", click the arrow next to "API Address" to open the Demo page. Note that you must complete real-name authentication before you can use the API address.

Demonstration

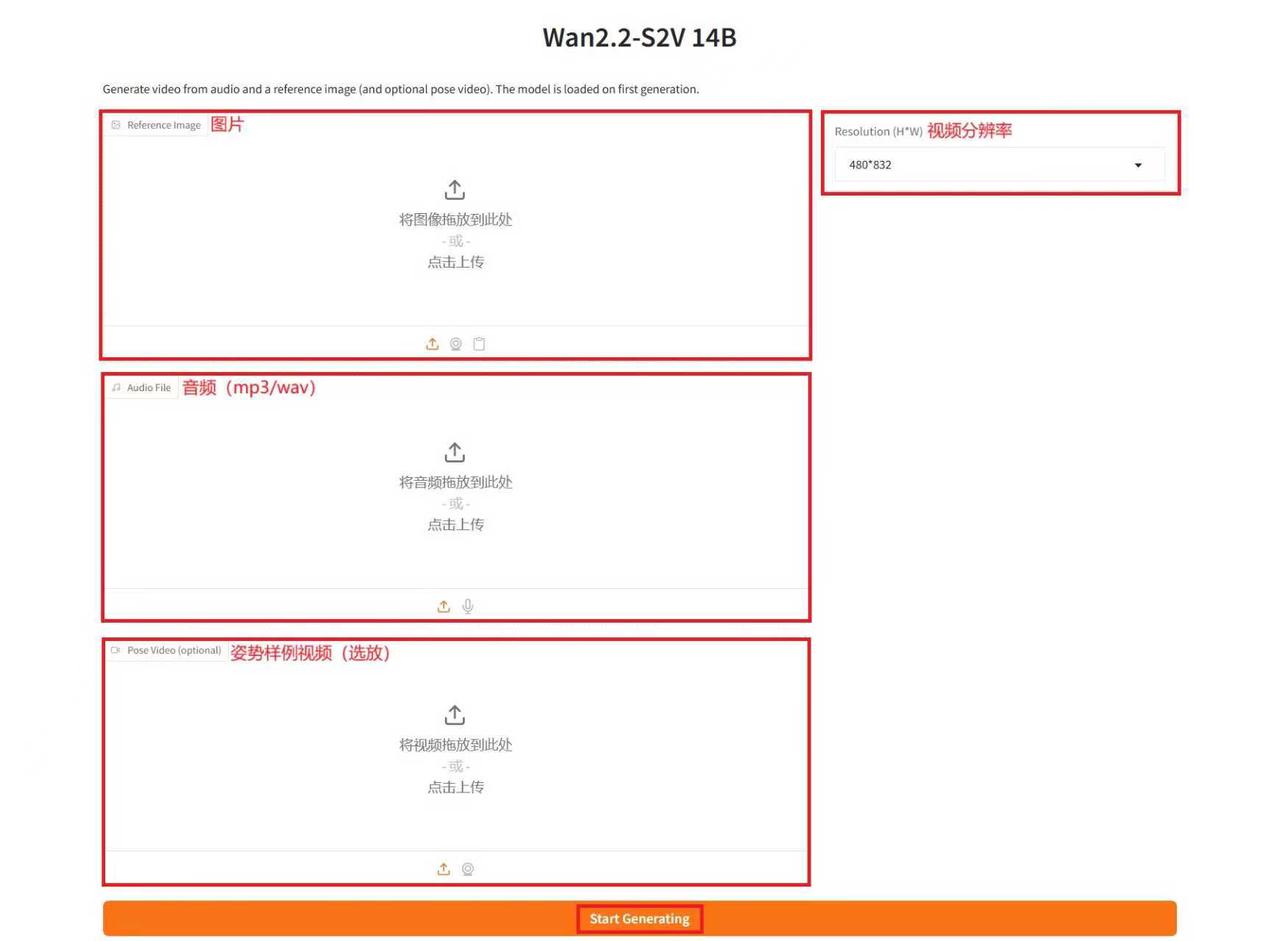

On the Demo page, enter a description in the text box, upload an image and an audio clip, adjust the parameters as needed, and click "Start Generating" to generate the video. (Note: More inference steps generally produce higher-quality output but take longer, so choose the number of steps with care. For example, with 10 inference steps, generating one video takes approximately 15 minutes.)
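As a rough planning aid, the reference timing given for the demo (10 steps take about 15 minutes) can be scaled to other step counts. This sketch assumes generation time grows linearly with the number of inference steps and ignores fixed overhead such as model loading; real times will also depend on resolution, audio length, and hardware, so treat the results as estimates only. `estimate_minutes` is a hypothetical helper, not part of the tutorial.

```python
def estimate_minutes(steps: int, ref_steps: int = 10, ref_minutes: float = 15.0) -> float:
    """Linearly scale the reference timing (10 steps, about 15 min on the A6000 setup).

    Assumption: time per step is constant and there is no fixed overhead.
    """
    if steps <= 0:
        raise ValueError("steps must be a positive integer")
    return ref_minutes * steps / ref_steps


for s in (5, 10, 20, 40):
    print(f"{s:>2} steps -> ~{estimate_minutes(s):.1f} min")
```

Under this linear assumption, each step costs about 1.5 minutes, so doubling the step count roughly doubles the wait.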

Generated example

That wraps up this tutorial recommended by HyperAI. Everyone is welcome to give it a try!

Tutorial Link: