Nature Journal | Tsinghua-MIT Joint Team Proposes Smart City Planning Framework Driven by Large Language Models

Faced with increasingly complex urban systems and diverse social needs, traditional urban planning methods are reaching a bottleneck. Today, artificial intelligence (AI) is bringing disruptive innovation to this ancient and important field.

Recently, an interdisciplinary team of scholars from Tsinghua University (the Center for Urban Science and Computational Research in the Department of Electronic Engineering, and the School of Architecture), the Massachusetts Institute of Technology (MIT) Senseable City Lab, and Northeastern University in the United States published a viewpoint article in the journal Nature Computational Science. The article systematically proposes, for the first time, a smart city planning framework driven by large language models (LLMs). The framework combines the computing, reasoning, and generation capabilities of AI with the professional experience and creativity of human planners. It aims to make AI an "intelligent planning assistant" that works with humans on the complex challenges of modern urban planning, opening up a new paradigm of human-machine collaboration for a more efficient, innovative, and responsive urban design process.

The evolution and bottlenecks of urban planning

The theory and practice of urban planning are constantly evolving, from its early focus on physical space and aesthetic form as "artistic design" to the post-World War II approach to "scientific planning," which views urban planning as a complex system and employs scientific models for analysis. However, these approaches today face new challenges: On the one hand, the planning process remains planner-centric, with limited public participation. On the other hand, the evaluation of planning proposals is often qualitative, subjective, and delayed, making it difficult to make scientific, quantitative decisions and rapidly iterate.

In recent years, traditional AI models, such as generative adversarial networks (GANs) and reinforcement learning (RL), have begun to be applied to urban planning, demonstrating potential in generating street networks and functional zoning. However, these models are typically designed for specific tasks and have a narrow scope, so they cannot cope with the growing interdisciplinary complexity of modern urban planning. The emergence of large language models (LLMs), with their strong knowledge integration, logical reasoning, and multimodal generation capabilities, offers an opportunity to break through this bottleneck.

A new process for urban planning driven by LLMs

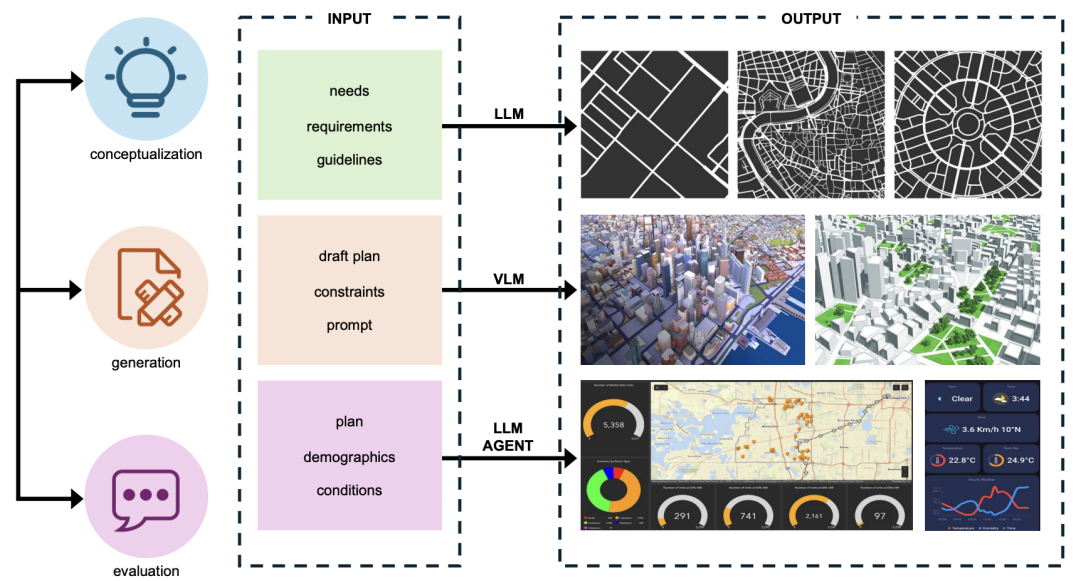

To address the shortcomings of traditional methods, the research team proposes a closed-loop framework consisting of three core stages: conceptual design (Conceptualization), solution generation (Generation), and effect evaluation (Evaluation). The framework is driven jointly by large language models (LLMs), large vision-language models (VLMs), and LLM agents, giving human planners intelligent assistance throughout the entire process.
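The closed loop of the three stages can be pictured as a simple control flow. The following is a minimal sketch and not the authors' implementation: the names `Plan`, `run_planning_loop`, `target_score`, and `max_rounds` are hypothetical, and the three stage functions are plain-Python stubs standing in for an LLM, a VLM, and an LLM-agent simulation.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Plan:
    description: str  # text produced by the conceptualization stage
    layout: str       # design produced by the generation stage
    score: float      # quality score produced by the evaluation stage


def run_planning_loop(
    requirements: str,
    conceptualize: Callable[[str, str], str],
    generate: Callable[[str], str],
    evaluate: Callable[[str], tuple[float, str]],
    target_score: float = 0.8,
    max_rounds: int = 5,
) -> Plan:
    """Iterate conceptualize -> generate -> evaluate until the score is good enough."""
    if max_rounds < 1:
        raise ValueError("max_rounds must be at least 1")
    feedback = ""
    best = None
    for _ in range(max_rounds):
        description = conceptualize(requirements, feedback)
        layout = generate(description)
        score, feedback = evaluate(layout)
        if best is None or score > best.score:
            best = Plan(description, layout, score)
        if score >= target_score:
            break
    return best


# Hypothetical stubs; in the framework these roles belong to the models.
def stub_conceptualize(requirements: str, feedback: str) -> str:
    return f"{requirements}; {feedback}" if feedback else requirements


def stub_generate(description: str) -> str:
    return f"layout<{description}>"


def stub_evaluate(layout: str) -> tuple[float, str]:
    if "parks" in layout:
        return 0.9, ""
    return 0.5, "add more parks"


result = run_planning_loop(
    "mixed-use district", stub_conceptualize, stub_generate, stub_evaluate
)
```

With these stubs the first round scores below the target and returns feedback, which the second round folds into the concept. In practice the human planner would sit inside this loop, reviewing and steering each stage.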

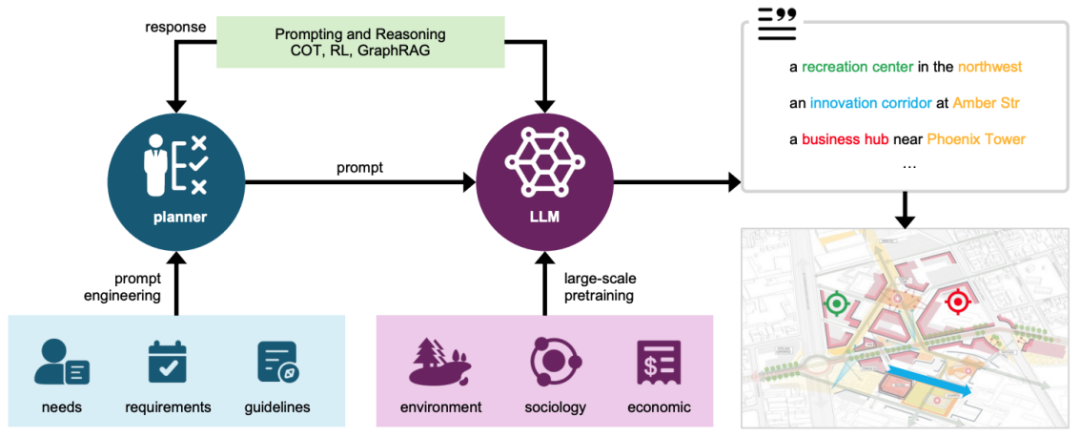

Conceptual Design: LLM Becomes a "Planning Consultant" with Interdisciplinary Knowledge

At the initial planning stage, planners input textual information such as requirements, constraints, and guidelines. An LLM pre-trained on massive amounts of data can integrate knowledge from multiple fields, including geography, society, and economics, and engage in multiple rounds of "dialogue" with planners. It can not only propose innovative conceptual ideas, but also reason over complex contexts to generate detailed planning descriptions and preliminary spatial concept sketches. This improves both the efficiency and the depth of the conceptual design stage.

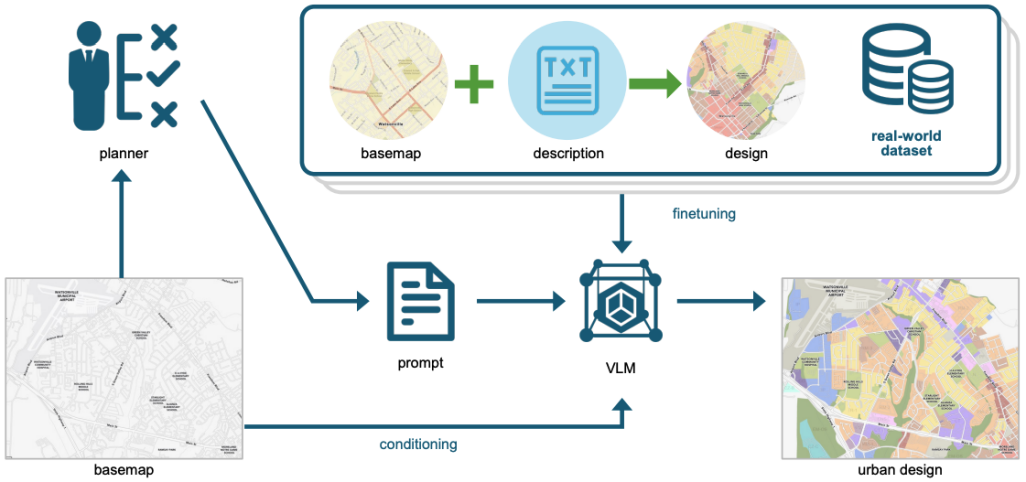

Solution Generation: VLM becomes a "visual designer" and turns text into blueprints

The framework uses large vision-language models (VLMs) to transform abstract textual concepts into concrete, visual urban design solutions. Planners describe planning concepts and constraints precisely through text instructions (prompts). A VLM fine-tuned on urban design data can then generate detailed visual outputs such as land use layouts, building outlines, and even realistic three-dimensional urban scenes, while ensuring that the design complies with real-world constraints such as geography.

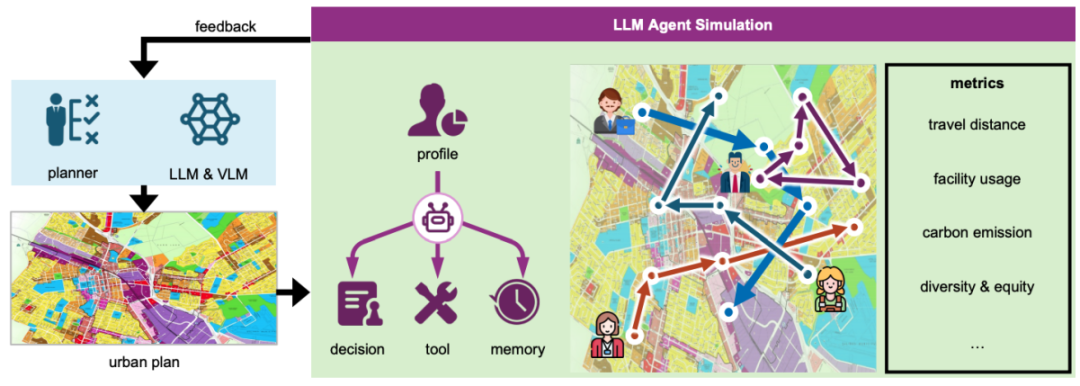

Evaluation: LLM agents build a "virtual city" to preview future life

To evaluate planning schemes scientifically, the framework introduces LLM agents that perform urban dynamic simulations. The agents are assigned different demographic characteristics (such as age and occupation) and simulate residents' daily travel and facility usage within the generated virtual city. Analyzing these simulated behaviors yields quantitative evaluation metrics across multiple dimensions, including travel distance, facility utilization, carbon emissions, and social equity. This provides scientific and forward-looking feedback for the iterative optimization of planning solutions.
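To make the idea concrete, here is a minimal sketch of how simulated trips can be turned into two of the metrics mentioned above, travel distance and facility utilization. It rests on loud assumptions: the facility coordinates and agent profiles are invented for illustration, and `choose_facility` is a simple rule-based stand-in for the decision an LLM agent would make, not the method used in the paper.

```python
import math
import random
from collections import Counter
from dataclasses import dataclass


@dataclass
class Agent:
    age: int
    occupation: str
    home: tuple[float, float]  # (x, y) position in km


# Hypothetical facility layout of a generated plan, coordinates in km.
FACILITIES = {
    "school": (1.0, 1.0),
    "office": (4.0, 0.0),
    "park": (0.0, 3.0),
    "clinic": (2.0, 2.0),
}


def choose_facility(agent: Agent, rng: random.Random) -> str:
    """Rule-based stand-in for the LLM agent's daily destination choice."""
    if agent.age < 18:
        options = ["school", "park"]
    elif agent.age >= 65:
        options = ["clinic", "park"]
    else:
        options = ["office", "park"]
    return rng.choice(options)


def simulate(agents: list[Agent], days: int, seed: int = 0) -> dict:
    """Simulate one trip per agent per day and aggregate simple metrics."""
    rng = random.Random(seed)
    visits = Counter()
    total_km = 0.0
    for _ in range(days):
        for agent in agents:
            name = choose_facility(agent, rng)
            visits[name] += 1
            # Round trip: home -> facility -> home, straight-line distance.
            total_km += 2 * math.dist(agent.home, FACILITIES[name])
    trips = days * len(agents)
    return {"mean_round_trip_km": total_km / trips, "visits": dict(visits)}


agents = [
    Agent(age=10, occupation="student", home=(0.0, 0.0)),
    Agent(age=40, occupation="engineer", home=(3.0, 1.0)),
    Agent(age=70, occupation="retired", home=(1.0, 2.0)),
]
metrics = simulate(agents, days=7)
```

Metrics such as carbon emissions or equity could be derived from the same trip log, for example by weighting distances by travel mode or comparing distances across demographic groups.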

Early success: AI shows potential to surpass human experts

To verify the feasibility of the framework's core capabilities, the Center for Urban Science and Computational Research of the Department of Electronic Engineering at Tsinghua University has released a series of language and vision cross-modal urban models such as CityGPT, CityBench, and UrbanLLaVA, as well as urban embodied simulation platforms and social simulation systems such as UrbanWord, EmbodiedCity, and AgentSociety, laying a technical foundation for urban planning and social governance in the era of large models. For urban planning in the LLM era, the research team conducted a series of proof-of-concept experiments. In one test, the researchers asked LLMs to answer questions from the professional qualification examination for urban planners. The results showed that the largest-scale LLM outperformed the top 10% of human planners in answering complex planning concept questions, demonstrating its potential in the conceptualization stage.

For the evaluation stage, the team used LLM agents to simulate residents' facility visits in two communities, one in New York and one in Chicago. The hotspots visited by the simulated agents closely aligned with real-world resident mobility data, indicating that LLM agents can be accurate and effective in predicting the actual impact of planning proposals.
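One simple way to quantify how well simulated hotspots align with observed mobility is to compare visit shares per facility. The sketch below uses cosine similarity as an assumed metric; the article does not state which measure the team used, and the function names and the visit counts are hypothetical placeholders, not results from the paper.

```python
import math


def visit_shares(counts: dict[str, int]) -> dict[str, float]:
    """Convert raw visit counts into shares that sum to 1."""
    total = sum(counts.values())
    return {name: n / total for name, n in counts.items()}


def cosine_similarity(a: dict[str, float], b: dict[str, float]) -> float:
    """Cosine similarity between two sparse vectors keyed by facility name."""
    keys = sorted(set(a) | set(b))
    va = [a.get(k, 0.0) for k in keys]
    vb = [b.get(k, 0.0) for k in keys]
    dot = sum(x * y for x, y in zip(va, vb))
    norm = math.sqrt(sum(x * x for x in va)) * math.sqrt(sum(y * y for y in vb))
    return dot / norm if norm else 0.0


# Placeholder counts for illustration only.
simulated = visit_shares({"park": 40, "office": 35, "clinic": 25})
observed = visit_shares({"park": 45, "office": 30, "clinic": 20, "school": 5})
alignment = cosine_similarity(simulated, observed)
```

A value near 1 means the simulated and observed visit patterns have nearly the same shape, while a value near 0 means the agents concentrate on different facilities than real residents do.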

Challenges and Prospects: Building a Future City of Human-Machine Collaboration

Finally, the research team emphasized that this framework is not intended to replace human planners, but rather to establish a new workflow for human-machine collaboration. In this model, planners are freed from tedious data processing and drawing tasks and can focus more on innovation, ethical considerations, and communication with stakeholders, while AI handles concept integration, solution generation, and simulation-based evaluation efficiently.

The article also identifies challenges facing this technical approach, including the scarcity of high-quality urban design data, the enormous computational resource requirements, and potential geographic and social biases in the models. Future research will require establishing open data platforms, developing more efficient specialized models, and designing fairness algorithms to ensure that AI technology can serve all urban environments in a fair and inclusive manner.

We can expect that in the near future, urban planners will be able to design efficient, livable, and sustainable cities faster and better with the help of powerful AI assistants, fully unleashing human creativity to shape our common urban home.

Paper link:

https://www.nature.com/articles/s43588-025-00846-1

About the Author

The paper's first author is Zheng Yu, a doctoral student in the Department of Electronic Engineering at Tsinghua University. Corresponding authors are Professor Li Yong of the Department of Electronic Engineering, Assistant Professor Lin Yuming of the School of Architecture at Tsinghua University, and Associate Professor Qi R. Wang of the Department of Environmental Engineering at Northeastern University. Collaborators include Assistant Professor Xu Fengli of the Department of Electronic Engineering at Tsinghua University, and Researcher Paolo Santi and Professor Carlo Ratti of the MIT Senseable City Lab.