One-Click Deployment of gpt-oss-20b: Testing an Open-Source Reasoning Model With State-of-the-Art Performance Approaching o3‑mini

Six years after GPT-2, OpenAI has finally released open-source large models again, launching gpt-oss-120b and gpt-oss-20b. The former, with more than 100 billion parameters, is designed for complex reasoning and knowledge-intensive scenarios, while the latter is better suited to low-latency, local, or specialized vertical applications and runs smoothly on consumer-grade hardware such as laptops and edge devices. This pairing of a versatile large model with a specialized small model offers differentiated positioning and deployment flexibility for a range of user needs.

On the technical side, gpt-oss uses a Mixture-of-Experts (MoE) architecture to maintain strong performance while significantly reducing compute and memory requirements. gpt-oss-120b can run efficiently on a single 80GB GPU, while gpt-oss-20b can run on edge devices with only 16GB of memory.
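To make these memory figures more concrete, here is a rough back-of-the-envelope sketch. It assumes total parameter counts of about 117 billion and 21 billion (figures not stated in this article) and treats every weight as stored at roughly 4.25 bits, which approximates a 4-bit block format with one shared 8-bit scale per 32 weights. Real checkpoints keep some tensors at higher precision and also need room for the KV cache and activations, so the result is a lower bound, not a measurement.

```python
# Rough estimate of weight storage for the two gpt-oss models.
# Assumptions (not from the article): the parameter counts below and
# an average of ~4.25 bits per weight.
BITS_PER_WEIGHT = 4.25  # assumed: 4-bit value + shared 8-bit scale per 32 weights

ASSUMED_PARAMS = {
    "gpt-oss-120b": 117e9,  # assumed total parameter count
    "gpt-oss-20b": 21e9,    # assumed total parameter count
}


def weight_memory_gb(num_params: float, bits_per_weight: float = BITS_PER_WEIGHT) -> float:
    """Return approximate weight storage in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * bits_per_weight / 8 / 1e9


if __name__ == "__main__":
    for name, params in ASSUMED_PARAMS.items():
        print(f"{name}: ~{weight_memory_gb(params):.1f} GB of weights")
```

Under these assumptions the estimates come out to roughly 62 GB and 11 GB, which is consistent with the 80GB and 16GB figures quoted above once runtime overhead is added.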

In benchmark evaluations, gpt-oss-120b outperformed o3-mini and matched or exceeded o4-mini on Codeforces, MMLU, HLE, and the tool-calling benchmark TauBench. It also outperforms o4-mini on HealthBench and on AIME 2024 and 2025. Despite its smaller parameter count, gpt-oss-20b performs nearly as well as o3‑mini on these same evaluations.

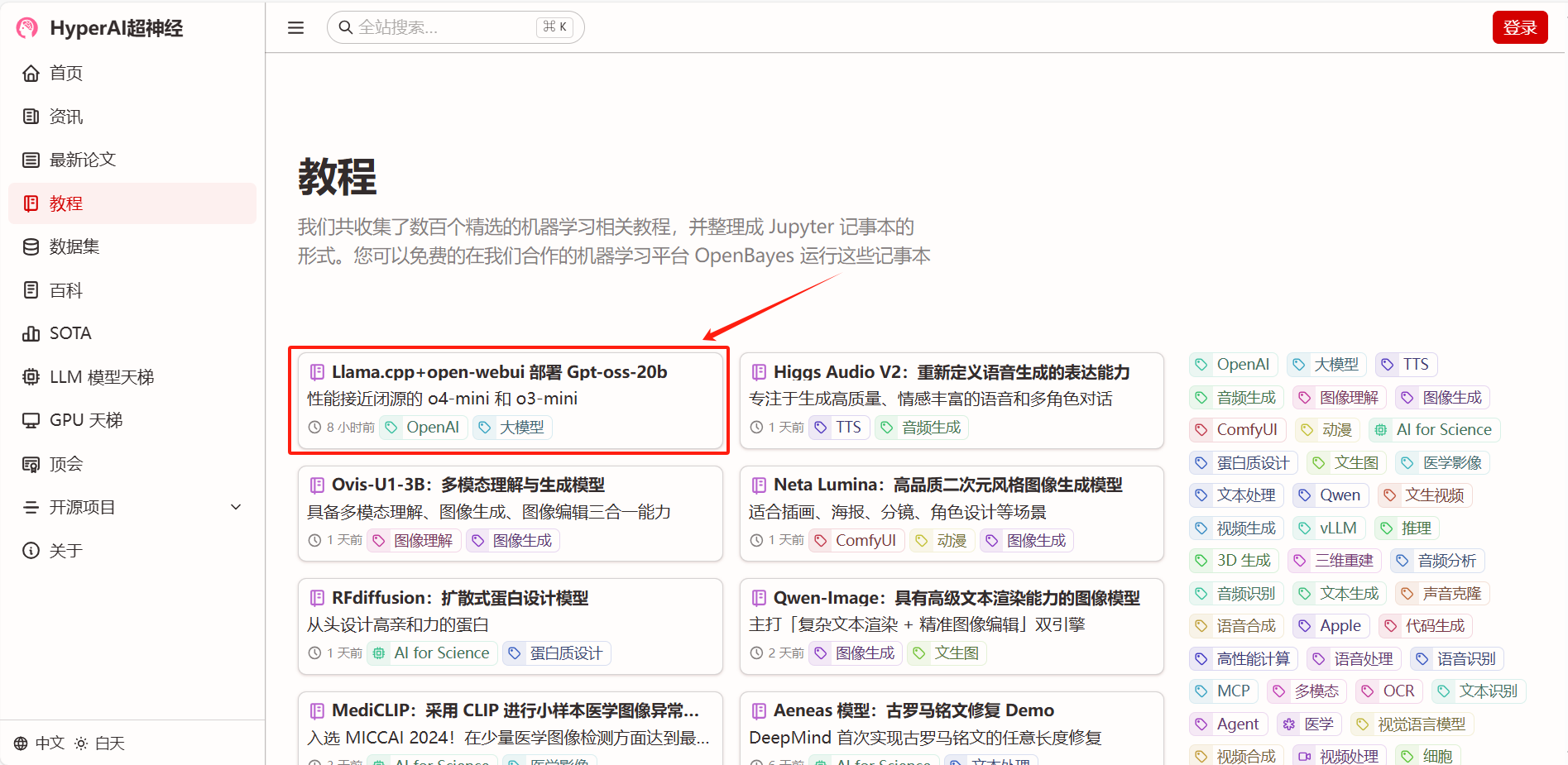

To help everyone try gpt-oss more easily, the tutorial "Llama.cpp+open-webui deployment of Gpt-oss-20b" is now available in the "Tutorials" section of HyperAI's official website (hyper.ai). Launch it with one click and try out this open-source SOTA model on a single NVIDIA RTX 4090 card.

In addition, a tutorial for gpt-oss-120b is currently in production, so stay tuned!

Tutorial Link:

Demo Run

1. On the hyper.ai homepage, open the "Tutorials" page, select "Llama.cpp+open-webui deployment of Gpt-oss-20b", and click "Run this tutorial online".

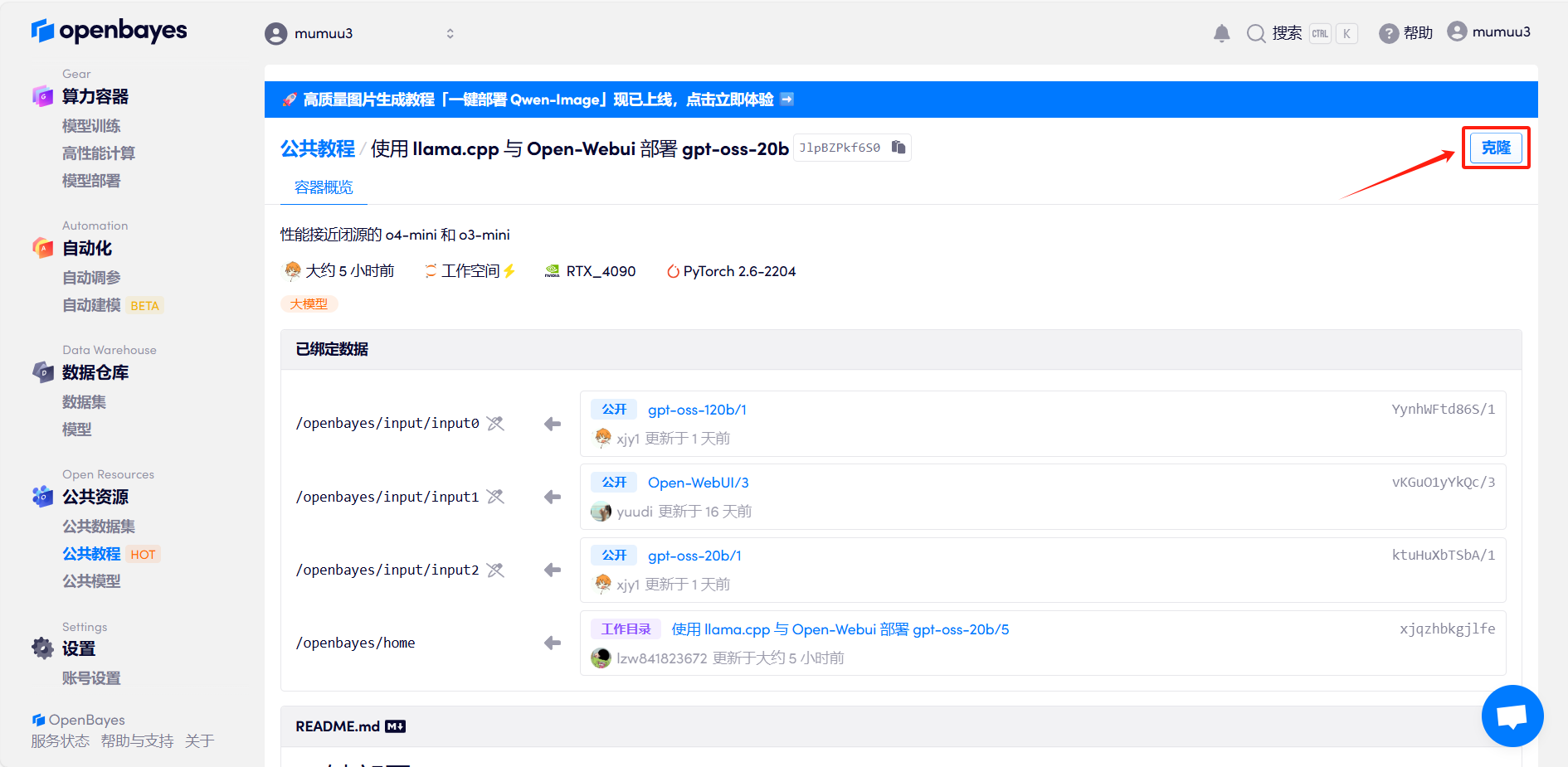

2. After the page redirects, click "Clone" in the upper right corner to clone the tutorial into your own container.

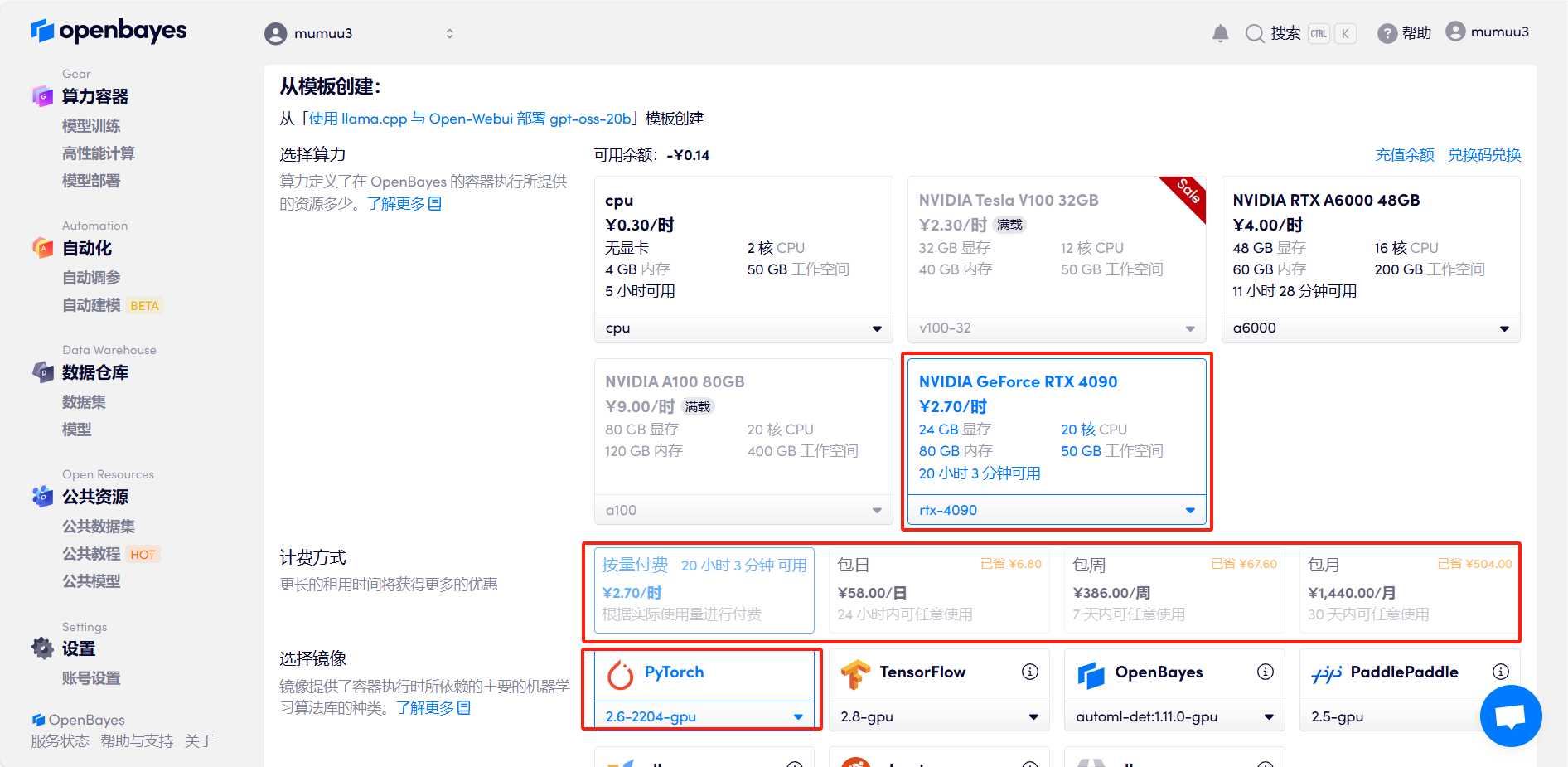

3. Select the "NVIDIA GeForce RTX 4090" compute resource and the "PyTorch" image, choose "Pay as you go" or a "Daily/Weekly/Monthly Package" according to your needs, and click "Continue". New users who register through the invitation link below receive 4 hours of free RTX 4090 time plus 5 hours of free CPU time.

HyperAI exclusive invitation link (copy and open in browser):

https://openbayes.com/console/signup?r=Ada0322_NR0n

4. Wait for resources to be allocated. The first clone takes approximately 3 minutes. When the status changes to "Running", click the arrow next to "API Address" to open the demo page. Note that users must complete real-name authentication before using the API address.

Demo Results

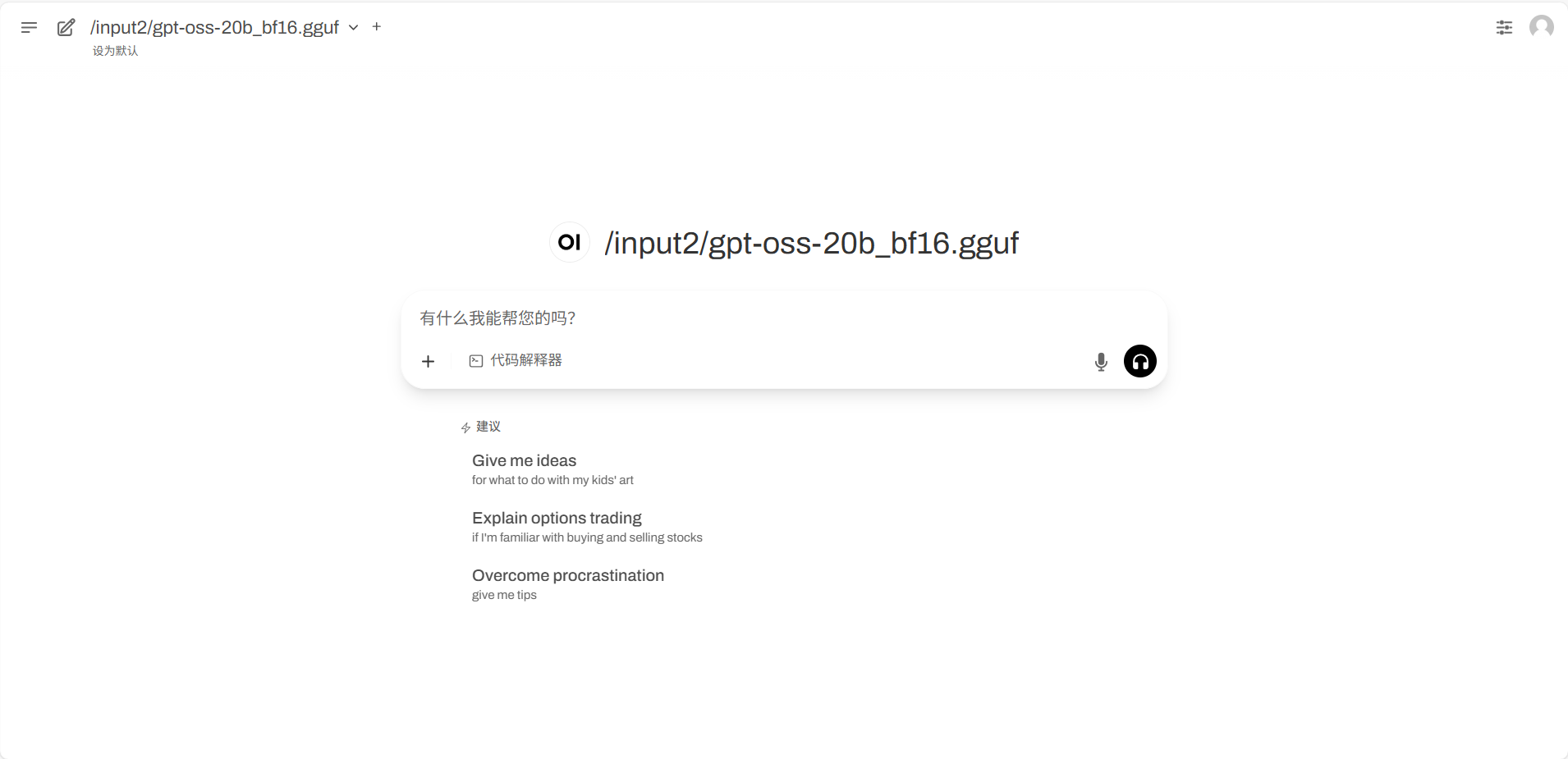

On the demo page, type a prompt into the dialog box and click "Run".
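Besides the web dialog, a prompt could in principle be sent from a script. The sketch below assumes the container also exposes an OpenAI-compatible `/v1/chat/completions` endpoint of the kind llama.cpp's server provides. The tutorial does not confirm this, and `BASE_URL` and `MODEL_NAME` are hypothetical placeholders, not values taken from the tutorial.

```python
import json
import urllib.request

# Hypothetical placeholders: replace with the address shown for your container.
BASE_URL = "http://localhost:8080"
MODEL_NAME = "gpt-oss-20b"  # assumed label; your server may expect a different name


def build_chat_request(prompt: str, max_tokens: int = 512) -> dict:
    """Build an OpenAI-style chat completion payload for a single user prompt."""
    return {
        "model": MODEL_NAME,
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": max_tokens,
    }


def send_chat_request(payload: dict, base_url: str = BASE_URL) -> str:
    """POST the payload to the assumed endpoint and return the reply text."""
    request = urllib.request.Request(
        f"{base_url}/v1/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=120) as response:
        body = json.load(response)
    return body["choices"][0]["message"]["content"]


# Example call (needs a reachable server, so it is left commented out):
# print(send_chat_request(build_chat_request("Hello, gpt-oss!")))
```

Keeping the payload builder separate from the network call makes it easy to inspect or log the request before sending it.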

The author ran a simple test of the model's content creation, mathematical problem solving, and logical reasoning, and gpt-oss's answers were all quite good.

Prompt: Please write a comedy script about KFC's Crazy Thursdays, about 300 words.

Prompt: What are the first and second digits after the decimal point of (square root of 2 + square root of 3) to the power of 2006?

Prompt: Determine whether the logic is correct: Because the murderer is not a backpacker, and you are not a backpacker, you are the murderer.
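For readers who want a reference when judging the model's answer to the math prompt above, the value can be checked independently. The sketch below is not part of the tutorial; it uses Python's `decimal` module. The number equals (5 + 2√6)^1003, and adding the tiny conjugate term (5 − 2√6)^1003 gives an integer, so the value sits just below a whole number. Because the integer part has about 1,000 digits and the gap below the next integer is around 10^-999, the working precision of 2,200 digits (a choice made here, not a requirement from the source) is set to cover both.

```python
from decimal import Decimal, localcontext, ROUND_FLOOR


def first_two_decimals(exponent: int = 2006, precision: int = 2200) -> str:
    """Return the first two digits after the decimal point of
    (sqrt(2) + sqrt(3)) ** exponent, computed at the given precision."""
    with localcontext() as ctx:
        # Precision must cover the integer part plus the distance to the
        # nearest integer; 2200 digits is a comfortable margin for 2006.
        ctx.prec = precision
        base = Decimal(2).sqrt() + Decimal(3).sqrt()
        value = base ** exponent
        fraction = value - value.to_integral_value(rounding=ROUND_FLOOR)
        return f"{int(fraction * 100):02d}"


if __name__ == "__main__":
    print(first_two_decimals())
```

Running the script prints `99`, so both digits are 9. Note that a precision only slightly above 1,000 digits would not be enough here, since the computed value could round to the integer itself.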

That wraps up this tutorial recommendation from HyperAI. Everyone is welcome to give it a try!

Tutorial Link: