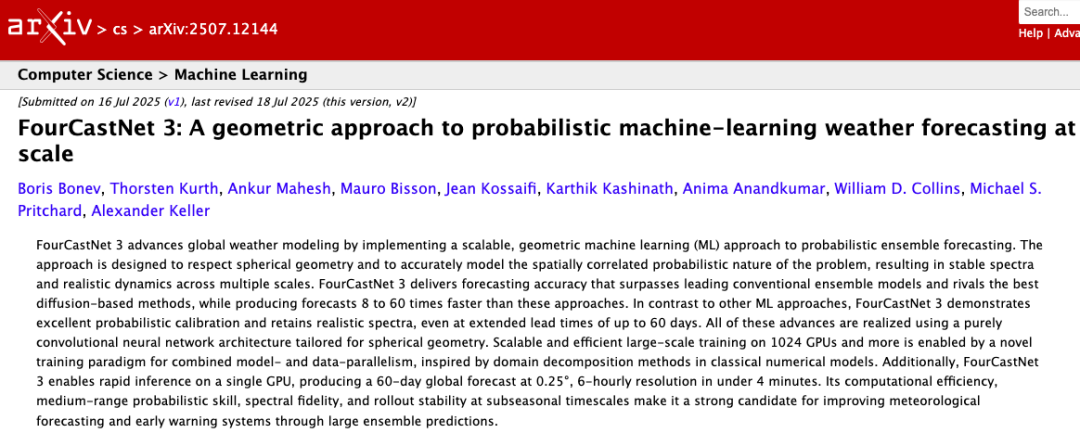

NVIDIA, UC Berkeley, and Others Propose FCN3, a Machine Learning Weather Forecasting System That Completes a 15-Day Forecast in 1 Minute and Supports Ultra-Fast Single-GPU Inference

Since its conception and gradual development in the 20th century, Numerical Weather Prediction (NWP) has revolutionized our understanding and prediction of atmospheric phenomena. Early progress in NWP was slowed by limitations in computer performance. It wasn't until breakthroughs in computer technology in the 1950s that NWP experiments achieved initial success. In the 1970s, with the increasing power of supercomputers, NWP began to be widely used in operational settings. Today, continuous optimization of mathematical modeling, dramatic increases in computing power, and continuous improvements in data assimilation technology have made NWP an indispensable tool in numerous areas, including weather forecasting, disaster prevention, energy management, and climate research.

However, traditional NWP models have always faced severe challenges. Because they numerically solve the equations of fluid mechanics and thermodynamics, the amount of computation is extremely large. For high-resolution forecasts and large ensemble forecasts, the computational cost increases dramatically, making it difficult to deliver fast, accurate, large-scale probabilistic ensemble forecasts. This seriously limits the further expansion of traditional NWP in practical applications.

To solve these problems, a joint research team from NVIDIA, Lawrence Berkeley National Laboratory, the University of California, Berkeley, and the California Institute of Technology introduced FourCastNet 3 (FCN3), a probabilistic machine learning weather forecasting system that combines spherical signal processing with a hidden Markov ensemble framework.

The model's forecast skill surpasses IFS-ENS, the gold standard of traditional NWP, and is comparable to the leading probabilistic ML model GenCast in medium-range forecasts, at double the temporal resolution. On a single NVIDIA H100 GPU, a 15-day weather forecast can be completed in 60 seconds, which is 8 times faster than GenCast and 60 times faster than IFS-ENS. FCN3 supports ultra-fast single-GPU inference and can generate a 60-day global forecast at 0.25° resolution and 6-hour intervals within 4 minutes.

The related research results were published on arXiv under the title "FourCastNet 3: A geometric approach to probabilistic machine-learning weather forecasting at scale."

Paper address:

https://arxiv.org/pdf/2507.12144

The ERA5 dataset, the core support for FourCastNet 3 training

The core training data of FourCastNet 3 (FCN3) comes from the ERA5 dataset. This is a decades-long hourly reanalysis of Earth's atmospheric state, produced by the European Centre for Medium-Range Weather Forecasts (ECMWF). ERA5 uses a four-dimensional variational assimilation system to combine observations from many sources since 1979 (including radiosondes, satellites, aircraft, ground stations, and buoys) with IFS model states. This produces a global atmospheric field with a spatial resolution of 0.25° × 0.25° (represented on a 721 × 1440 latitude-longitude grid), totaling approximately 39.5 TB of data.

A significant advantage of ERA5 is that its reanalysis process is always based on the same IFS cycle (such as CY41R2 and subsequent fixed configurations). This keeps the record dynamically consistent along the time axis and avoids the climate drift that model upgrades introduce into operational analyses. It therefore provides a repeatable and traceable "real atmosphere" benchmark for machine learning models. Furthermore, by integrating multiple data sources and fully accounting for their respective uncertainty estimates, ERA5 characterizes Earth's atmospheric history consistently, making it an ideal target for machine learning models that approximate planetary-scale atmospheric dynamics.

To train FCN3, the researchers selected 72 variables from ERA5: seven surface variables and five atmospheric variables at each of 13 pressure levels. Although the model was ultimately trained at a six-hour interval, hourly samples from 1980–2018 were used to maximize the dataset size and improve the model's generalization ability.
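The grid size and variable count quoted above follow from simple bookkeeping. The short sketch below reproduces that arithmetic; the constant names are illustrative and are not taken from the FCN3 code base.

```python
# Bookkeeping for the ERA5 subset described in the text.
# All names here are illustrative, not identifiers from the FCN3 code.
RESOLUTION_DEG = 0.25

# Latitude spans -90..90 and includes both poles, hence the extra row.
# Longitude is periodic, so 0 and 360 degrees share one column.
n_lat = int(180 / RESOLUTION_DEG) + 1
n_lon = int(360 / RESOLUTION_DEG)

N_SURFACE_VARS = 7
N_ATMOSPHERIC_VARS = 5
N_PRESSURE_LEVELS = 13

# Each atmospheric variable contributes one channel per pressure level.
n_channels = N_SURFACE_VARS + N_ATMOSPHERIC_VARS * N_PRESSURE_LEVELS

print(f"grid: {n_lat} x {n_lon}, channels: {n_channels}")
```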

The dataset is divided into three parts: 1980–2016 is the training set, 2017 is the test set, and 2018–2021 is the independent validation set (all reported metrics are computed on the 2020 validation data). Splitting by year effectively prevents temporal leakage.
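A year-based split like the one above can be expressed in a few lines. This is a minimal sketch; the function name `split_for` and the constants are hypothetical and only mirror the year ranges stated in the text.

```python
from datetime import datetime

# Year ranges as stated in the text (range() excludes the upper bound).
TRAIN_YEARS = range(1980, 2017)       # 1980-2016
TEST_YEARS = range(2017, 2018)        # 2017
VALIDATION_YEARS = range(2018, 2022)  # 2018-2021


def split_for(timestamp: datetime) -> str:
    """Return the split a sample belongs to, based only on its year."""
    year = timestamp.year
    if year in TRAIN_YEARS:
        return "train"
    if year in TEST_YEARS:
        return "test"
    if year in VALIDATION_YEARS:
        return "validation"
    raise ValueError(f"year {year} is outside the 1980-2021 record used here")


print(split_for(datetime(2020, 2, 11, 0)))
```

Because whole years are assigned to a single split, no forecast window in the training set can overlap a window used for evaluation.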

Before training, the data must be normalized. Both inputs and outputs are z-score or min-max normalized, with statistics averaged over the sphere. Water vapor-related variables are scaled to [0, 1] using min-max normalization to satisfy the non-negativity constraint. Wind fields are assumed to have zero mean and are normalized by the standard deviation of the total wind speed, which preserves vector direction. The normalization constants are calculated by first averaging spatially over the sphere and then averaging temporally over the entire training set.
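The three normalization schemes described above might look roughly as follows in NumPy. This is a sketch under stated assumptions: the sphere average is approximated with cosine-latitude weights rather than exact quadrature weights, the wind scale is interpreted as the root-mean-square wind speed (which equals the standard deviation when the mean is assumed to be zero), and all function names are hypothetical.

```python
import numpy as np


def area_weights(n_lat: int, n_lon: int) -> np.ndarray:
    """Approximate sphere-average weights (assumption: cos(latitude) weighting)."""
    lat = np.linspace(90.0, -90.0, n_lat)
    w = np.clip(np.cos(np.deg2rad(lat)), 0.0, None)
    w2d = np.tile(w[:, None], (1, n_lon))
    return w2d / w2d.sum()


def zscore_constants(x: np.ndarray, w: np.ndarray) -> tuple[float, float]:
    """Average over the sphere first, then over time. x has shape (time, lat, lon)."""
    mean = (x * w).sum(axis=(-2, -1)).mean()
    var = (((x - mean) ** 2) * w).sum(axis=(-2, -1)).mean()
    return float(mean), float(np.sqrt(var))


def minmax_normalize(x: np.ndarray) -> np.ndarray:
    """Scale to [0, 1]; used here for the non-negative water vapor fields."""
    lo, hi = x.min(), x.max()
    return (x - lo) / (hi - lo)


def wind_scale(u: np.ndarray, v: np.ndarray, w: np.ndarray) -> float:
    """One shared scale for both components, so the vector direction is unchanged."""
    return float(np.sqrt(((u**2 + v**2) * w).sum(axis=(-2, -1)).mean()))


# Synthetic demo data on a coarse grid (8 time steps, 19 x 36 points).
rng = np.random.default_rng(0)
w = area_weights(19, 36)

t2m = 280.0 + 10.0 * rng.standard_normal((8, 19, 36))
mean, std = zscore_constants(t2m, w)
t2m_norm = (t2m - mean) / std

q = 0.02 * rng.random((8, 19, 36))
q_norm = minmax_normalize(q)

u = 5.0 * rng.standard_normal((8, 19, 36))
v = 5.0 * rng.standard_normal((8, 19, 36))
scale = wind_scale(u, v, w)
u_norm, v_norm = u / scale, v / scale
```

Dividing both wind components by the same scalar is what keeps the direction of each wind vector intact.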

It is this carefully selected, divided, and processed dataset that provides a solid foundation for FCN3 to learn the probabilistic evolution of the global atmosphere end-to-end on 1,000+ GPUs, ensuring effective model training and accurate forecasting.

Probabilistic Machine Learning Weather Forecasting System FourCastNet 3

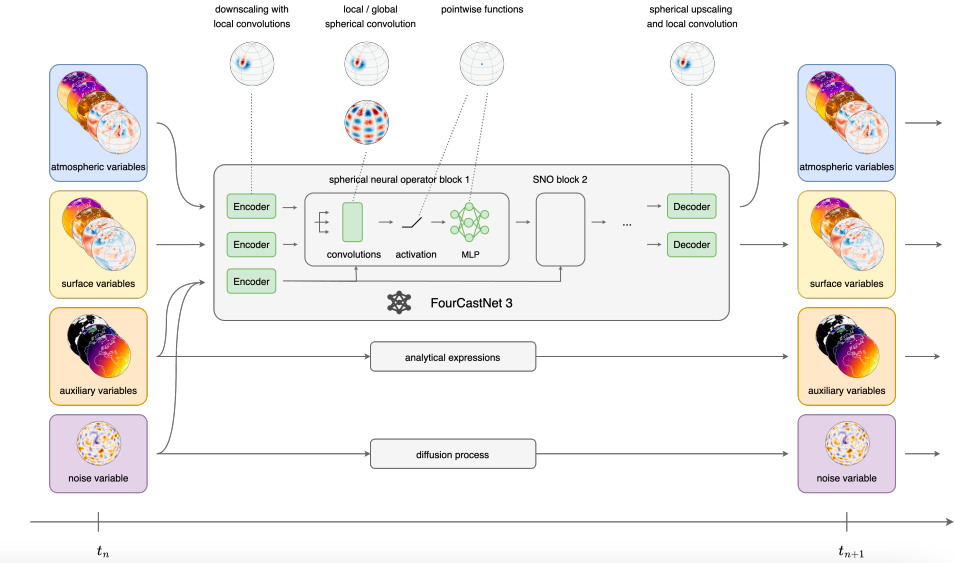

FourCastNet 3 (FCN3) is a probabilistic model consisting of an encoder, a decoder, and 8 neural operator blocks. It is built on a hidden Markov model framework: given the atmospheric state uₙ on the 0.25° grid at time tₙ, the model predicts the state 6 hours later as uₙ₊₁ = F_θ(uₙ, tₙ, zₙ). Randomness enters through the noise vector zₙ, which is drawn from multiple spherical diffusion processes with different spatial and temporal scales and thereby captures the uncertainty of atmospheric evolution.
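The recurrence uₙ₊₁ = F_θ(uₙ, tₙ, zₙ) can be illustrated with a toy autoregressive ensemble rollout. Everything below is a stand-in: `toy_step` is a hypothetical placeholder for the trained network F_θ, and the noise is a simple AR(1) process rather than the spherical diffusion processes used by FCN3.

```python
import numpy as np

STEP_HOURS = 6  # FCN3 advances the state by 6 hours per step


def toy_step(u: np.ndarray, t_hours: int, z: np.ndarray) -> np.ndarray:
    """Hypothetical stand-in for F_theta: damp the state and inject noise.

    t_hours is accepted only to mirror the signature in the text.
    """
    return 0.99 * u + 0.1 * z


def next_noise(z: np.ndarray, rng: np.random.Generator, phi: float = 0.9) -> np.ndarray:
    """AR(1) update: temporally correlated noise with unit stationary variance."""
    return phi * z + np.sqrt(1.0 - phi**2) * rng.standard_normal(z.shape)


def rollout(u0: np.ndarray, lead_days: int, n_members: int, seed: int = 0) -> np.ndarray:
    """Roll every ensemble member forward; returns (steps + 1, members, lat, lon)."""
    rng = np.random.default_rng(seed)
    n_steps = lead_days * 24 // STEP_HOURS
    u = np.repeat(u0[None], n_members, axis=0)  # all members share the initial state
    z = rng.standard_normal(u.shape)            # but each gets its own noise
    trajectory = [u]
    for n in range(n_steps):
        u = toy_step(u, n * STEP_HOURS, z)
        z = next_noise(z, rng)
        trajectory.append(u)
    return np.stack(trajectory)


forecast = rollout(np.zeros((4, 8)), lead_days=15, n_members=5)
print(forecast.shape)
```

Each member is produced by a single forward pass per step, so the cost of an ensemble grows linearly with the number of members and lead times.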

In terms of model architecture, FCN3 adopts a spherical neural operator design whose core is local and global spherical group convolution, that is, convolution that remains equivariant under the action of the rotation group SO(3). The global convolution kernels are parameterized in the spectral domain using the spherical convolution theorem and the spherical harmonic transform, similar to the classical pseudo-spectral method. The local convolutions are based on the discrete-continuous (DISCO) convolution framework, which approximates continuous-domain convolution by numerical integration, supports anisotropic filters, and better matches the geometric characteristics of atmospheric phenomena.

The overall architecture is divided into an encoder, a processor, and a decoder. The encoder downsamples the 721×1440 input signal to a 360×720 Gaussian grid through one layer of local spherical convolution, with an embedding dimension of 641. The processor consists of several spherical neural operator blocks that follow the ConvNeXt structure. Experiments show that forecast skill is best when 4 local blocks are paired with 1 global block, and layer normalization is omitted to preserve the absolute magnitudes of physical quantities. The decoder combines bilinear spherical interpolation with local spherical convolution to upsample back to the original resolution while suppressing aliasing.

It is worth noting that, unlike most machine learning weather models, which predict the "tendency" (the difference between the prediction and the input), FCN3 directly predicts the state at the next time step, which effectively suppresses high-frequency artifacts. In addition, neither the encoder nor the decoder mixes information across channels, and the water vapor channels pass through a smooth spline output activation function that ensures positive values and reduces high-frequency noise.

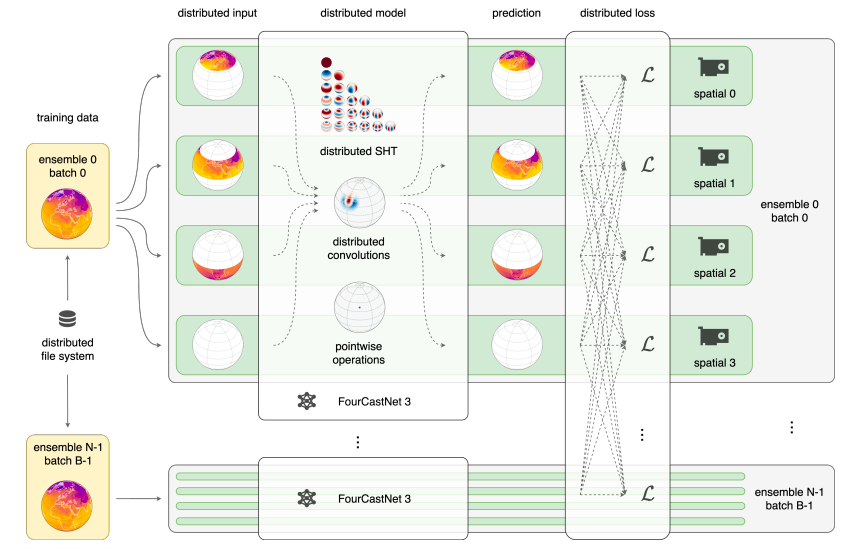

Because the internal representation of FCN3 is very large, it is difficult to fit in the memory of a single GPU, and autoregressive rollouts must keep several intermediate results in memory at once, which creates significant memory pressure. For this reason, as shown in the figure below, the research team adopted a hybrid parallel strategy to achieve scalable training. On the one hand, the team borrows spatial model parallelism (domain parallelism) from traditional numerical methods: spatial domain decomposition partitions both the model and the data across GPUs, which requires all spatial algorithms to be rewritten as distributed versions. On the other hand, the team adds data parallelism in the form of both ensemble and batch parallelism. Because ensemble members are independent of one another until the loss is computed, communication is needed only in the loss phase, which makes this form of parallelism extremely efficient. These features are implemented in the Makani framework, which scales to thousands of GPUs.

The training process is divided into three stages. The initial pre-training stage focused on 6-hour forecast skill. It used hourly samples from the ERA5 training set (1980–2016) to construct 6-hour input-target pairs starting at every UTC hour. It ran on 1,024 H100 GPUs on the NVIDIA Eos supercomputer with a batch size of 16 and an ensemble size of 16 for 208,320 steps, taking 78 hours.

The second pre-training stage used 6-hourly initial conditions with 4-step autoregressive rollouts. It ran for 5,040 steps (reducing the learning rate every 840 steps) on 512 A100 GPUs of the NERSC Perlmutter system, which took 15 hours.

The fine-tuning stage took eight hours, used 6-hourly samples from 2012–2016, and ran on 256 H100 GPUs on the Eos system. It corrected for potential distribution drift and improved performance on recent data. Because a single GPU has only 80 GB of memory, training split the data and the model across GPUs using spatial parallelism: pre-training used a 4-way split, and fine-tuning used a 16-way split because of the higher memory demands of autoregressive rollouts. This ultimately made it possible to train the large model efficiently.
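The GPU counts above are consistent with a simple factorization, assuming that the total number of GPUs equals batch size × ensemble size × spatial split. That factorization is an interpretation of the reported numbers, not something the article states explicitly. The sketch below checks it and also gives a rough estimate of how many passes over the hourly training record the first stage corresponds to.

```python
from datetime import datetime

# Stage 1 (initial pre-training), values as reported in the text.
BATCH_SIZE = 16
ENSEMBLE_SIZE = 16
SPATIAL_SPLIT_PRETRAIN = 4
STAGE1_STEPS = 208_320

# Assumption: every (sample, member) pair is split spatially across GPUs.
stage1_gpus = BATCH_SIZE * ENSEMBLE_SIZE * SPATIAL_SPLIT_PRETRAIN

# Fine-tuning: 256 GPUs with a 16-way spatial split.
FINETUNE_GPUS = 256
SPATIAL_SPLIT_FINETUNE = 16
finetune_replicas = FINETUNE_GPUS // SPATIAL_SPLIT_FINETUNE

# Hourly initial conditions available in 1980-2016 (leap years included).
hours_in_training_set = int(
    (datetime(2017, 1, 1) - datetime(1980, 1, 1)).total_seconds() // 3600
)

# Rough estimate only: ignores samples dropped at the end of the record.
samples_seen = STAGE1_STEPS * BATCH_SIZE
approx_epochs = samples_seen / hours_in_training_set

print(stage1_gpus, finetune_replicas, round(approx_epochs, 1))
```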

Performance evaluation: FCN3 comprehensively surpasses traditional NWP and catches up with the most advanced diffusion models at a very low cost.

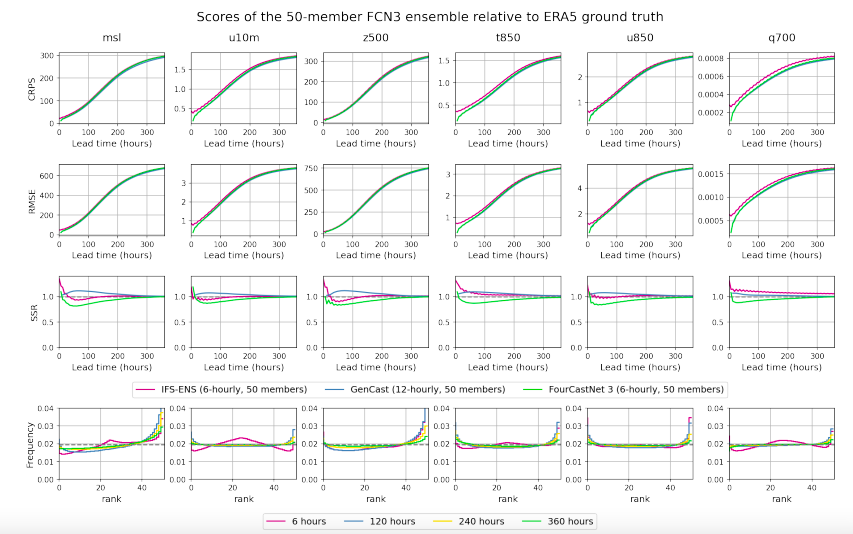

To comprehensively evaluate the performance of FourCastNet 3 (FCN3), the research team designed experiments along several key dimensions: forecast accuracy, computational efficiency, probabilistic calibration, and physical fidelity. As shown in the figure below, the core metrics are averaged over forecasts initialized every 12 hours throughout 2020 (a year excluded from the training set). On both the continuous ranked probability score (CRPS) and the ensemble-mean root mean square error (RMSE), FCN3 not only surpasses IFS-ENS, the gold standard of traditional physics-based numerical weather prediction, but also comes within an almost negligible gap of GenCast, currently the best data-driven model.
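For readers unfamiliar with CRPS, the score for an ensemble forecast is commonly estimated as the mean absolute error of the members minus half the mean absolute difference between members. The sketch below implements this standard estimator pointwise, with an optional "fair" variant that corrects for finite ensemble size. It is a generic illustration, not the exact evaluation code or weighting used for FCN3.

```python
import numpy as np


def ensemble_crps(members, observation, fair: bool = False) -> np.ndarray:
    """Pointwise ensemble CRPS estimate (lower is better).

    members:     array of shape (M, ...) holding M ensemble members
    observation: array broadcastable to shape (...)
    fair:        use the M * (M - 1) normalization instead of M * M
    """
    members = np.asarray(members, dtype=float)
    m = members.shape[0]
    if fair and m < 2:
        raise ValueError("the fair estimator needs at least two members")

    # Accuracy term: average distance between members and the observation.
    skill = np.abs(members - observation).mean(axis=0)

    # Spread term: summed distance between all ordered pairs of members.
    pairwise = np.abs(members[:, None] - members[None, :]).sum(axis=(0, 1))

    denominator = 2 * m * (m - 1) if fair else 2 * m * m
    return skill - pairwise / denominator


# Two members straddling the observation.
print(ensemble_crps([0.0, 2.0], 1.0))
print(ensemble_crps([0.0, 2.0], 1.0, fair=True))
```

With a single member the score reduces to the absolute error, which is why CRPS is often described as a probabilistic generalization of MAE.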

In terms of computational efficiency, because FCN3 generates each ensemble member directly in a single step, it can complete a 15-day forecast at 6-hour intervals and 0.25° spatial resolution in about 60 seconds on a single NVIDIA H100 GPU. In comparison, GenCast takes 8 minutes to complete a forecast of the same length (at only half the temporal resolution of FCN3) on a Cloud TPU v5 instance, while IFS takes about 1 hour to run at its 9 km operational resolution on 96 AMD Epyc Rome CPUs. Ignoring the differences in hardware and resolution, FCN3 is about 8 times faster than GenCast and about 60 times faster than IFS-ENS.
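The speedup figures follow directly from the quoted wall-clock times. The sketch below reproduces the arithmetic and extrapolates the per-step cost to a 60-day forecast, assuming cost scales linearly with the number of steps. Like the comparison in the text, it ignores differences in hardware and resolution.

```python
# Wall-clock times for one 15-day forecast, as quoted in the text.
FCN3_SECONDS = 60
GENCAST_SECONDS = 8 * 60
IFS_SECONDS = 60 * 60

LEAD_DAYS = 15
fcn3_steps = LEAD_DAYS * 24 // 6      # 6-hour steps
gencast_steps = LEAD_DAYS * 24 // 12  # 12-hour steps

speedup_vs_gencast = GENCAST_SECONDS / FCN3_SECONDS
speedup_vs_ifs = IFS_SECONDS / FCN3_SECONDS

# Assumption: cost is linear in the number of autoregressive steps.
seconds_per_step = FCN3_SECONDS / fcn3_steps
sixty_day_minutes = (60 * 24 // 6) * seconds_per_step / 60

print(fcn3_steps, gencast_steps, speedup_vs_gencast, speedup_vs_ifs, sixty_day_minutes)
```

The extrapolated 60-day figure is consistent with the "within 4 minutes" quoted earlier in the article.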

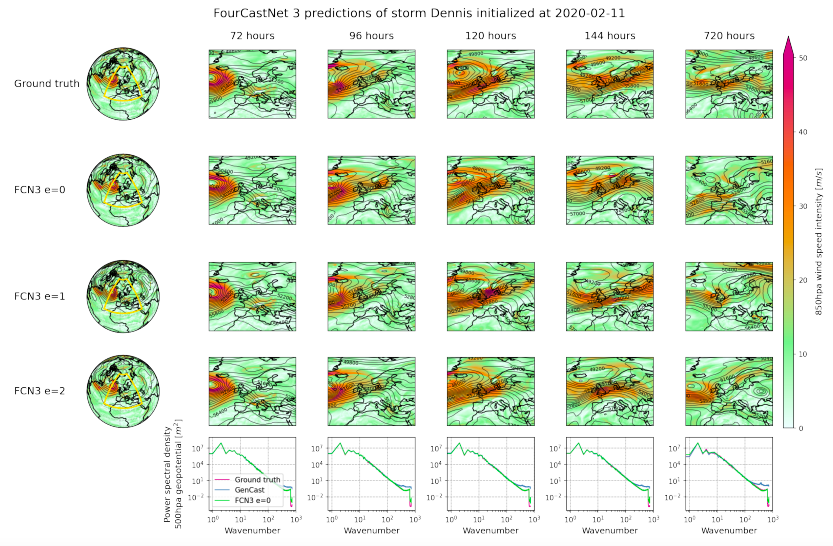

Because CRPS and RMSE are evaluated point by point and cannot measure spatiotemporal correlations, the research team complemented these scores with case studies of the model's physical fidelity. As shown in the figure below, the team examined extratropical storm Dennis, using a forecast initialized at 00 UTC on February 11, 2020. The 850 hPa wind speed and 500 hPa geopotential height forecasts 48 hours before the storm made landfall in Ireland and the British Isles show that FCN3 can reproduce this weather event and that the covariance between the wind speed and pressure fields is plausible. The angular power spectral density (PSD) of the 500 hPa geopotential height maintains the correct slope. Even when the lead time is extended to 30 days, the angular power spectrum does not decay, and the forecast stays sharp.

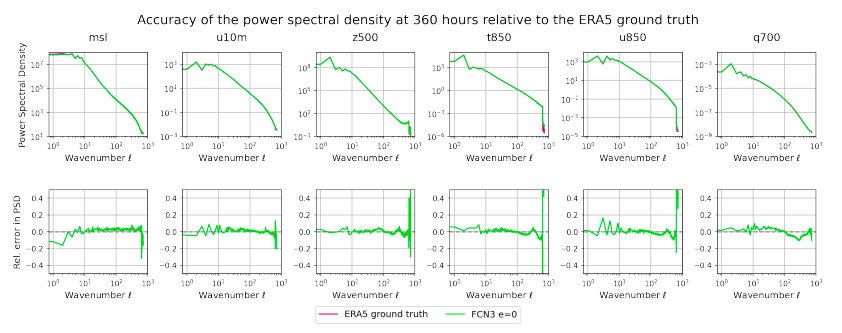

Analysis of the power spectral density for the entire year of 2020, and of its error relative to the ERA5 ground truth, shows that the error at high wavenumbers always stays bounded (between -0.2 and 0.2). This is thanks to the geometric and signal processing principles followed by the model architecture, and to a CRPS loss that accounts for both local and global distributions and so encourages the model to learn the correct spatial correlations.
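The idea of comparing spectra can be illustrated with a one-dimensional analogue. The sketch below computes a zonal power spectrum along a single latitude circle with an FFT and measures the relative error of a "blurred" forecast against a reference. It is a simplified stand-in: the angular PSD discussed above requires a spherical harmonic transform, and the function names here are hypothetical.

```python
import numpy as np


def zonal_psd(row: np.ndarray) -> np.ndarray:
    """One-sided power spectrum along longitude; sums to the mean square of row."""
    n = row.shape[-1]
    power = np.abs(np.fft.rfft(row, axis=-1) / n) ** 2
    power[..., 1:] *= 2.0        # fold in the negative wavenumbers
    if n % 2 == 0:
        power[..., -1] /= 2.0    # the Nyquist mode has no negative partner
    return power


def relative_psd_error(pred: np.ndarray, ref: np.ndarray, wavenumbers) -> np.ndarray:
    """PSD(pred) / PSD(ref) - 1 at the selected zonal wavenumbers."""
    return zonal_psd(pred)[..., wavenumbers] / zonal_psd(ref)[..., wavenumbers] - 1.0


# Synthetic latitude circle with one large-scale and one small-scale wave.
lon = np.linspace(0.0, 2.0 * np.pi, 1440, endpoint=False)
reference = np.sin(3 * lon) + 0.5 * np.sin(40 * lon)

# A "blurry" forecast that keeps the large scale but halves the small-scale amplitude.
blurred = np.sin(3 * lon) + 0.25 * np.sin(40 * lon)

errors = relative_psd_error(blurred, reference, [3, 40])
print(errors)
```

A negative relative error at high wavenumbers is the signature of blurring, while a positive one indicates a build-up of small-scale energy.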

In contrast, most deterministic machine learning weather models suffer from significant attenuation of high-frequency information and produce blurry forecasts. Even the hybrid model NeuralGCM, which is trained with CRPS, exhibits significant blurring in high-frequency modes. The latest probabilistic models GenCast and AIFS-CRPS are unable to fully retain the correct spectral shape and may even accumulate energy in high-frequency modes, which in traditional numerical weather prediction is often a precursor to model blow-up.

Comprehensive tests of angular spectra, zonal spectra, and physical consistency confirm that FCN3 combines probabilistic skill, computational efficiency, and global coverage with unprecedented spectral fidelity and physical realism. Its forecasts remain stable on the 60-day subseasonal scale, which paves the way for subseasonal forecasting and large-scale ensemble forecasting.

Power spectral density for the entire year of 2020 and its error relative to the ERA5 ground truth

Breakthroughs and Prospects of Probabilistic Machine Learning Weather Forecasting Systems

In fact, global industry, academia and research institutions have conducted in-depth research in the field of probabilistic machine learning weather forecasting systems, and a series of influential results have emerged.

GenCast, launched by Google DeepMind, is a benchmark in the field. This probabilistic weather model, based on a conditional diffusion model, can generate an ensemble of stochastic 15-day global forecasts within 8 minutes, with a time step of 12 hours and a resolution of 0.25°, covering more than 80 surface and atmospheric variables.

In a comparison with the ensemble forecast (ENS) of the European Centre for Medium-Range Weather Forecasts (ECMWF), the world's top medium-range forecasting system, GenCast outperforms ENS on 97.2% of the 1,320 evaluation metrics, and the marginal and joint forecast distributions it generates are more accurate.

Microsoft's Aurora AI weather forecast model integrates deep learning with large-scale heterogeneous data processing. It can not only predict the weather accurately but, after fine-tuning, can also be applied to other areas of environmental monitoring such as ocean currents and air quality. Its training data is massive, covering more than 1 million hours of meteorological and environmental data from multiple sources including satellites, radars, weather stations, and computer simulations.

According to test data from the Microsoft research team, in global tropical cyclone forecasting for 2022 to 2023, Aurora's track prediction performance was comprehensively superior to industry competitors and traditional observation and inference algorithms. It also demonstrated high accuracy in complex environmental scenarios such as ocean wave forecasting and air quality forecasting.

Academic research has also yielded fruitful results. Many universities have conducted in-depth research on probabilistic machine learning weather forecasting systems and achieved breakthroughs. Research teams at the University of Cambridge and the Alan Turing Institute have taken a different approach, developing the Aardvark Weather system. It is the first system that can be trained and run on a desktop computer and that replaces every step of the weather forecasting pipeline with a single AI model. Its processing speed is thousands of times faster than traditional methods.

The system can efficiently process multimodal complex data from satellites, weather stations, and weather balloons to generate a 10-day global forecast. On four NVIDIA A100 GPUs, it only takes about one second to generate a complete forecast from observation data.

The FuXi Weather system, proposed by the FuXi team at Fudan University, is the first end-to-end machine learning global weather forecasting framework that can independently perform data assimilation (DA) and cycled forecasting. It generates reliable 10-day forecasts at a resolution of 0.25° by fusing multi-source satellite observations. Even in sparsely observed areas such as central Africa, its performance surpasses the ECMWF High Resolution Forecast (HRES).

These explorations and breakthroughs not only propel probabilistic machine learning weather forecasting systems toward higher accuracy, higher efficiency, and a wider range of applications, but also provide strong technical support for addressing global issues such as climate change, mitigating the impact of meteorological disasters, and optimizing energy utilization. With the continued iteration of technology and deepening cross-disciplinary collaboration, future probabilistic machine learning weather forecasting systems will more accurately capture the complex dynamics of the atmosphere, building a more robust meteorological defense.
