A Leap in Reasoning Ability! GLM-4.1V-Thinking Promotes the Evolution of Cognitive Intelligence; 5 Million step-by-step Thinking Data Examples! MathX-5M Unlocks a New Realm of Mathematical Reasoning

Multimodal large models are currently evolving from "perceptual intelligence" to "cognitive intelligence". Previous studies have tried to enhance the reasoning ability of visual language models, but most are limited to specific domains, and a general-purpose multimodal reasoning model is still lacking.

In this context, Zhipu AI and Tsinghua University jointly proposed GLM-4.1V-Thinking, a visual-language model (VLM) designed to advance general multimodal understanding and reasoning. Its core innovation is the "Reinforcement Learning with Curriculum Sampling (RLCS)" strategy. The model not only achieves the strongest performance among visual language models at the 10B-parameter scale, but on 18 benchmark tasks it also matches or even surpasses Qwen-2.5-VL-72B, which has more than 8 times as many parameters. It also marks a leap in the dynamic cognitive capabilities of multimodal models, moving from passive "image recognition" to active "thinking" and addressing reasoning pain points while keeping the advantages of lightweight deployment.

The HyperAI official website has now launched the "GLM-4.1V-Thinking: Versatile Multimodal Reasoning via Scalable Reinforcement Learning" tutorial. Come and try it~

GLM-4.1V-Thinking: Versatile Multimodal Reasoning via Scalable Reinforcement Learning

Online use: https://go.hyper.ai/B3Vzs

From July 7 to July 11, hyper.ai official website updates:

* High-quality public datasets: 10

* High-quality tutorial selection: 7

* This week's recommended papers: 5

* Community article interpretation: 5 articles

* Popular encyclopedia entries: 5

* Top conferences with deadline in July: 4

Visit the official website: hyper.ai

Selected public datasets

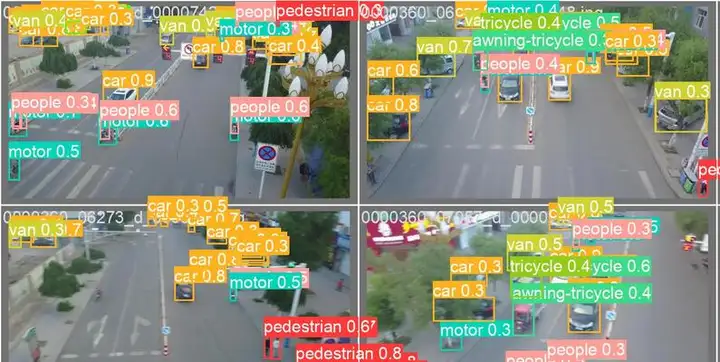

1. VisDrone drone detection dataset

VisDrone is a large-scale benchmark dataset for drone-based visual object detection and tracking, designed to help develop and evaluate computer vision tasks such as object detection, object tracking, and image segmentation. The dataset contains high-resolution images and videos collected by drones in urban and suburban environments across various cities in China, covering 6 categories (such as people, vehicles, buildings, and animals).

Direct use: https://go.hyper.ai/hQ5lh

2. MathX-5M Mathematical Reasoning Dataset

MathX is a mathematical reasoning dataset designed for instruction tuning and for fine-tuning existing models to strengthen their reasoning capabilities. It is described as the largest and most comprehensive public mathematical reasoning corpus to date, with 5 million carefully selected step-by-step examples, each containing a problem statement, a detailed reasoning process, and a verified correct solution.

Direct use: https://go.hyper.ai/h0eLq
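As a rough illustration of how a record with a problem statement, a reasoning process, and a verified solution might be turned into an instruction-tuning pair, here is a minimal Python sketch. The field names (`problem`, `reasoning`, `solution`), the `to_training_pair` helper, and the toy record are all hypothetical and are not taken from the dataset itself; the actual column names may differ.

```python
from dataclasses import dataclass


@dataclass
class MathExample:
    # Hypothetical field names; the real dataset columns may be named differently.
    problem: str
    reasoning: str
    solution: str


def to_training_pair(ex: MathExample) -> dict:
    """Build a prompt/completion pair that keeps the step-by-step reasoning
    in the target text, followed by the final answer."""
    completion = f"{ex.reasoning.strip()}\n\nFinal answer: {ex.solution.strip()}"
    return {"prompt": ex.problem.strip(), "completion": completion}


# Toy record made up for illustration only.
example = MathExample(
    problem="What is 12 * 7?",
    reasoning="12 * 7 = 10 * 7 + 2 * 7 = 70 + 14 = 84.",
    solution="84",
)
pair = to_training_pair(example)
print(pair["prompt"])
print(pair["completion"])
```

Keeping the reasoning inside the completion is one possible design choice: it lets the model learn to produce intermediate steps before the answer.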

3. Fruit Classification Image Dataset

Fruit Classification is a fruit classification image dataset designed to train machine learning and deep learning models for fruit recognition and classification. The dataset covers 101 fruit species, and each category contains about 400 images for training, 50 images for validation, and 50 images for testing.

Direct use: https://go.hyper.ai/a8gfG

4. Dog Breeds Image Dataset

Dog Breeds Image is a dog breed image dataset designed to help train and evaluate dog breed classification models. It contains more than 17,000 images covering over 100 breeds (terriers, hounds, mastiffs, spaniels, bichon frise, etc.) and is intended to support the development of dog breed recognition systems.

Direct use: https://go.hyper.ai/DoFA3

5. Mushroom Species Identification Dataset

Mushroom Species is a mushroom species recognition dataset containing images of more than 100 mushroom species. The data records the physical characteristics of each mushroom, such as color, shape, smell, and surface texture, and annotates whether each mushroom is poisonous or edible. The images show mushroom morphology at different growth stages and under different growth conditions, making the dataset well suited to fine-grained classification tasks.

Direct use: https://go.hyper.ai/ws0pi

6. Text-to-Image-2M text-to-image training dataset

Text-to-Image-2M is a high-quality text-image pair dataset designed for fine-tuning text-to-image models. The dataset contains about 2 million samples and is divided into 2 core subsets: data_512_2M (2 million 512×512 resolution images and annotations) and data_1024_10K (10,000 1024×1024 high-resolution images and annotations), providing flexible options for model training with different accuracy requirements.

Direct use: https://go.hyper.ai/lTBaT

7. CIFAKE synthetic image recognition dataset

CIFAKE is a synthetic dataset for identifying AI-generated images. It is a binary classification image dataset that has important practical application value for enhancing the robustness of image processing technology and improving the recognition ability of AI-generated content, especially in the fields of news dissemination and social media monitoring. The dataset contains 60,000 real images and 60,000 AI-generated images, and is designed to evaluate the ability of computer vision models to identify AI-generated images.

Direct use: https://go.hyper.ai/wxeA3

8. II-Medical SFT Public Medical Reasoning Dataset

II-Medical SFT is a public medical reasoning dataset designed to support supervised fine-tuning of large language models (LLMs) for medical reasoning tasks. The dataset contains approximately 2.2 million samples, covering multi-source medical scenarios, meeting the fine-tuning needs of complex medical models, and aims to help models develop key capabilities such as differential diagnosis, evidence-based decision-making, patient communication, and guideline-based treatment plans.

Direct use: https://go.hyper.ai/TGMjl

9. Traffic Sign Detection Dataset

Traffic Sign Detection is a traffic sign detection dataset suitable for traffic sign recognition research in autonomous driving, driver assistance systems, and smart cities. The dataset contains about 9,000 clearly labeled traffic sign images and 4,969 street view images, covering different scenes in multiple countries. The images span multiple categories, are split into training, validation, and test sets, and come with accurate bounding box annotations.

Direct use: https://go.hyper.ai/VfwUw

10. UniMate Mechanical Metamaterials Benchmark Dataset

The UniMate dataset is a benchmark dataset for mechanical metamaterials, containing 15,000 samples. Each sample contains three-dimensional topological structure, density information and its corresponding homogenized mechanical properties, covering low-density (ρ=0.1) to medium-density (ρ=0.5) scenarios. The topological structure satisfies cubic symmetry and periodicity.

Direct use: https://go.hyper.ai/1ki2l

Selected Public Tutorials

This week, we have summarized 3 types of high-quality public tutorials

* Large model deployment tutorials: 1

* AI for Science tutorials: 2

* Multimodal tutorials: 4

Large Model Deployment Tutorial

1. Deploy Kimi-Dev-72B-GGUF with Ollama + Open WebUI

Kimi-Dev-72B is an open source large language model designed for software engineering tasks. It mainly includes functions such as code repair, test code generation (TestWriter), automated development process, and development tool integration.

Run online: https://go.hyper.ai/t6ps1

AI for Science Tutorial

1. Use State to predict cell perturbation responses in different situations

The State model can predict the response of stem cells, cancer cells and immune cells to drugs, cytokines or genetic interventions. Experimental results show that the model performs significantly better than current mainstream methods in predicting transcriptome changes after intervention.

Run online: https://go.hyper.ai/4AM6P

2. HealthGPT: AI medical assistant

HealthGPT is a medical large visual language model (Med-LVLM) that implements a unified framework for medical visual understanding and generation tasks through heterogeneous knowledge adaptation technology. It uses innovative heterogeneous low-rank adaptation (H-LoRA) technology to store the knowledge of visual understanding and generation tasks in independent plug-ins to avoid conflicts between tasks.

Run online: https://go.hyper.ai/KiBWB

Multimodal Tutorial

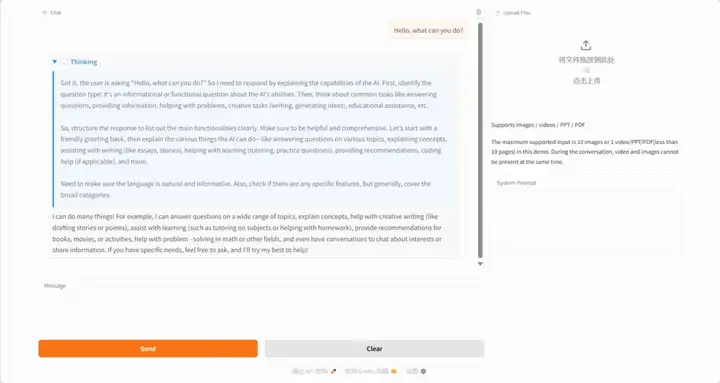

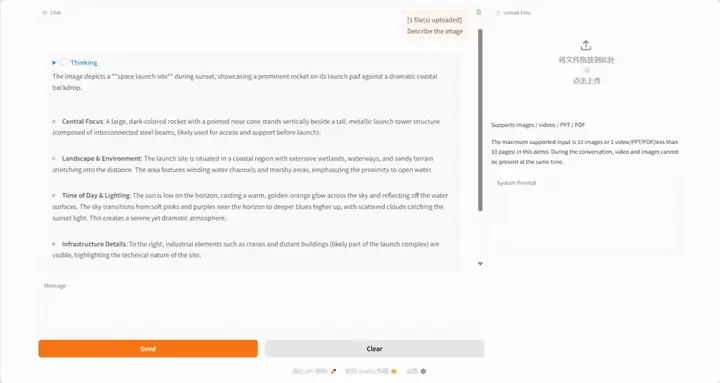

1. GLM-4.1V-Thinking: Versatile Multimodal Reasoning via Scalable Reinforcement Learning

GLM-4.1V-Thinking is a visual-language model (VLM) designed to advance general multimodal understanding and reasoning. By combining reinforcement learning with curriculum sampling (RLCS), it achieves comprehensive capability improvement in diverse tasks including STEM problem solving, video understanding, content recognition, programming, coreference resolution, GUI-based agents, and long document understanding.

Run online: https://go.hyper.ai/qPF8a

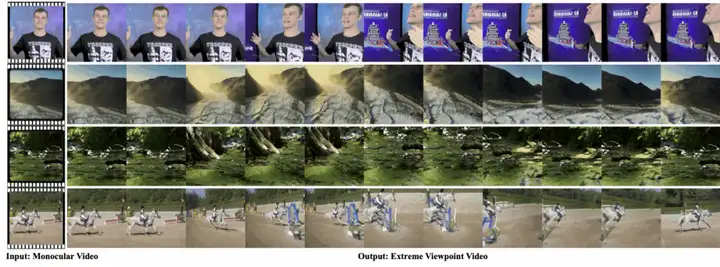

2. EX-4D: Generate free view from monocular video

EX-4D is a new 4D video generation framework that can generate high-quality 4D videos at extreme viewpoints from monocular video input. The framework is built on a Depth Watertight Mesh (DW-Mesh) representation that explicitly models both visible and occluded regions to ensure geometric consistency under extreme camera poses. It uses a simulated occlusion mask strategy to generate effective training data from monocular video, and a lightweight LoRA-based video diffusion adapter to synthesize physically consistent and temporally coherent videos. EX-4D significantly outperforms existing methods at extreme viewpoints and offers a new solution for 4D video generation.

Run online: https://go.hyper.ai/WyAPN

3. MonSter: Unleashing the potential of monocular depth and stereo vision

MonSter integrates monocular depth and stereo matching into a two-branch architecture to iteratively improve each other. The iterative mutual enhancement enables MonSter to evolve from coarse object-level structures to pixel-level geometry, fully realizing the potential of stereo matching.

Run online: https://go.hyper.ai/a9Ekd

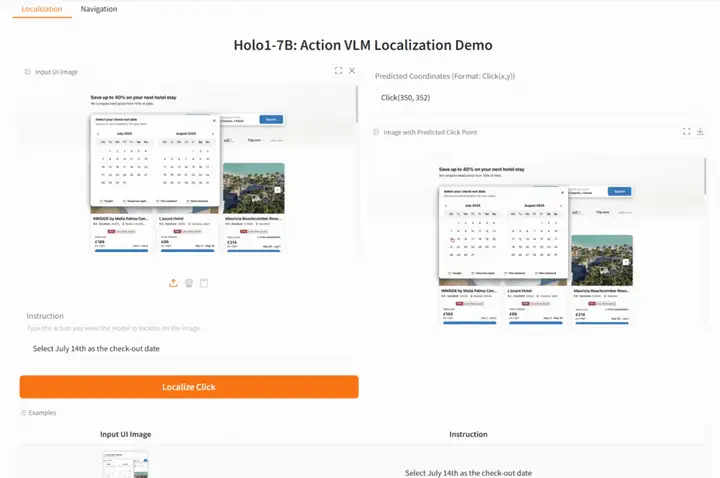

4. Holo1-7B: Accurately locating UI elements from natural language

Holo1-7B is an action visual language model (VLM) for the Surfer-H web agent system. It is designed to interact with web interfaces like a human user. As part of a broader agent architecture, Holo1 can act as a policy model, localization model, or verification model, helping agents understand and manipulate digital environments.

Run online: https://go.hyper.ai/6oQuF

This week's paper recommendation

1. MemOS: A Memory OS for AI System

This paper proposes MemOS, a memory operating system that treats memory as a manageable system resource. It unifies the representation, scheduling, and evolution of plaintext, activation-based, and parameter-level memories, enabling cost-effective storage and retrieval. Its basic unit, the MemCube, encapsulates memory content together with metadata such as provenance and version information. MemCubes can be combined, migrated, and fused over time, enabling flexible conversion between memory types and bridging retrieval with parameter-based learning. MemOS establishes a memory-centric system framework that brings controllability, plasticity, and evolvability to LLMs, laying the foundation for continual learning and personalized modeling.

Paper link: https://go.hyper.ai/PgtHH

2. SingLoRA: Low Rank Adaptation Using a Single Matrix

This paper proposes a new method, SingLoRA, which redefines low-rank adaptation by expressing weight updates as a decomposition of a single low-rank matrix and its transpose. This simple design inherently eliminates the scale conflict between matrices, ensures the stability of the optimization process, and reduces the number of parameters by approximately half. The researchers analyzed SingLoRA within the framework of infinite-width neural networks and showed that its design inherently ensures the stability of feature learning, and verified these advantages through extensive experiments.

Paper link: https://go.hyper.ai/kUu4u
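The single-matrix idea behind SingLoRA can be sketched in a few lines of plain Python. This is only an illustration under simplifying assumptions: the weight is square (d × d), and any scaling factor or training schedule used in the paper is left out. The helper names (`matmul`, `transpose`, `singlora_update`, `adapted_weight`) are hypothetical, not the authors' code.

```python
import random


def matmul(a, b):
    """Multiply two matrices stored as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def transpose(m):
    return [list(col) for col in zip(*m)]


def singlora_update(a):
    """Weight update built from one d x r matrix A: delta = A @ A^T (d x d, symmetric)."""
    return matmul(a, transpose(a))


def adapted_weight(w0, a):
    """Frozen weight W0 plus the single-matrix low-rank update (scaling omitted)."""
    delta = singlora_update(a)
    return [[w + d for w, d in zip(w_row, d_row)] for w_row, d_row in zip(w0, delta)]


d, r = 4, 2
rng = random.Random(0)
w0 = [[rng.gauss(0, 1) for _ in range(d)] for _ in range(d)]   # frozen pretrained weight
a = [[rng.gauss(0, 0.1) for _ in range(r)] for _ in range(d)]  # the only trainable matrix
delta = singlora_update(a)
w = adapted_weight(w0, a)

singlora_params = d * r   # one matrix A
lora_params = 2 * d * r   # standard LoRA trains two matrices of this size for a square weight
print(singlora_params, lora_params)
```

With a square weight, standard LoRA trains two d × r factors while this sketch trains one, which is where the "approximately half" parameter saving comes from.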

3. Should We Still Pretrain Encoders with Masked Language Modeling?

Research has shown that decoder models pre-trained with causal language modeling (CLM) can be effectively reused for encoder tasks, but the source of the performance gains is unclear. This paper investigates the question through a series of large-scale, carefully controlled pre-training ablation experiments. It shows experimentally that a two-stage training strategy, applying CLM first and then masked language modeling (MLM), achieves the best performance under a fixed compute budget, and that this strategy becomes even more attractive when initialized from pre-trained CLM models in the existing large language model ecosystem.

Paper link: https://go.hyper.ai/eN7kf

4. A Survey on Latent Reasoning

To advance research on latent reasoning, this paper provides a comprehensive overview of this emerging field. By examining the fundamental role of neural network layers as the computational substrate for reasoning, surveying various latent reasoning methods, and discussing advanced paradigms (such as infinite-depth latent reasoning achieved by masked diffusion models), it aims to clarify the conceptual framework of latent reasoning and point out future research directions at the frontier of LLM cognition.

Paper link: https://go.hyper.ai/kIuD8

5. Agent KB: Leveraging Cross-Domain Experience for Agentic Problem Solving

This paper introduces Agent KB, a hierarchical experience framework that enables complex agentic problem solving through a novel Reason-Retrieve-Refine pipeline. The results show that Agent KB provides a modular, framework-agnostic infrastructure that lets agents learn from past experience and generalize successful strategies to new tasks.

Paper link: https://go.hyper.ai/2wJPd

More AI frontier papers: https://go.hyper.ai/iSYSZ

Community article interpretation

The research team of Shanghai Jiao Tong University, together with Shanghai University of Sport and Tsinghua University, has created the world's first VR intelligent sports intervention system "Spirit Realm" for weight control of overweight or obese adolescents. It uses a virtual coach twin agent driven by deep reinforcement learning and based on the Transformer architecture to provide safe and immersive sports guidance, and its biomechanical performance and exercise heart rate response are not significantly different from the same type of real-world sports.

View the full report: https://go.hyper.ai/Q3KKv

Recently, research papers from 14 universities around the world were found to contain hidden instructions that steer AI reviewers toward positive reviews. The report sparked heated discussion in the academic community and drew attention to the risks and ethical challenges of using AI reviewers. A paper from Xie Saining's team was also accused of hiding such prompts, and he published a long article in response, calling for attention to how research ethics are evolving in the AI era.

View the full report: https://go.hyper.ai/LZ0TJ

The National University of Singapore and Zhejiang University jointly proposed NeuralCohort, an innovative method that opens a new path for EHR representation learning and unlocks the potential of EHR data. It exploits both local intra-cohort and global inter-cohort information, key elements that previous electronic health record analysis studies have not fully addressed.

View the full report: https://go.hyper.ai/1b8lG

The 7th Meet AI Compiler Technology Salon of 2025 concluded successfully in Zhongguancun, Beijing, on July 5. Zhang Ning, an AI architect at AMD, gave a talk entitled "Assisting the open source community, analyzing the AMD Triton compiler". Focusing on the company's technical contributions to the open source community, he systematically explained the core technology, underlying architecture support, and ecosystem achievements of the AMD Triton compiler, giving developers a comprehensive view of high-performance GPU programming and compiler optimization. This article is a transcript of the highlights of Zhang Ning's talk.

View the full report: https://go.hyper.ai/jJLD8

In an era dominated by social media and visual content, "photo editing" has evolved from a design skill to a daily need for the public. Users' desire for convenient and efficient tools has never stopped, and "photo editing in one sentence" is gradually becoming a reality with the leap-forward progress of technology. The recently open-sourced FLUX.1-Kontext-dev has achieved high performance comparable to a number of closed-source models such as GPT-image-1 with only 12B parameters.

View the full report: https://go.hyper.ai/EJIIa

Popular Encyclopedia Articles

1. DALL-E

2. Reciprocal Rank Fusion (RRF)

3. Pareto Front

4. Massive Multitask Language Understanding (MMLU)

5. Contrastive Learning

We have compiled hundreds of AI-related terms to help you understand "artificial intelligence":

One-stop tracking of top AI academic conferences: https://go.hyper.ai/event

That is all for this week’s editor’s picks. If you have resources you would like to see included on the hyper.ai official website, you are welcome to leave a message or submit an article to let us know!

See you next week!