AI Paper Weekly | Chai-2 Refreshes Antibody Design Efficiency, With a 100-fold Increase in Hit Rate; a Quick Look at Multiple ICML Shortlisted Papers

Vision-language models (VLMs) are moving beyond traditional text understanding toward deep perception and analysis of complex visual information, and have become a core component of today's intelligent systems. As model intelligence has improved, application scenarios have expanded from basic visual perception to solving scientific problems and building autonomous agents, which places higher demands on model capabilities. Research is ongoing, but most of it is limited to specific domains, and a general-purpose multimodal reasoning model is still lacking.

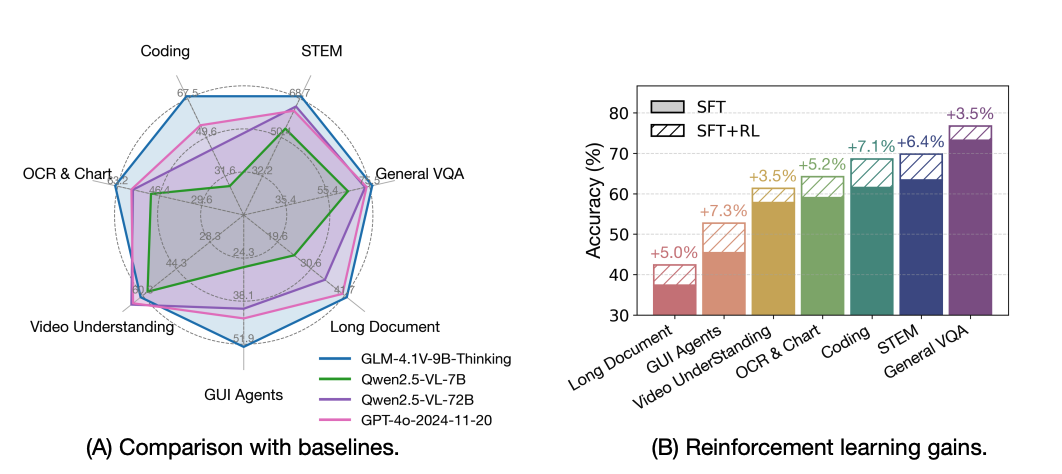

In response, Zhipu AI and Tsinghua University jointly proposed GLM-4.1V-Thinking, a vision-language model for general multimodal understanding and reasoning. It performs strongly across a variety of tasks, including STEM problem solving, video understanding, content recognition, coding, grounding, GUI-based agents, and long document understanding. On tasks such as long document understanding and STEM reasoning, it performs comparably to or better than closed-source models such as GPT-4o.

Paper link: https://go.hyper.ai/fEPb4

Latest AI Papers: https://go.hyper.ai/hzChC

To help more users keep up with the latest academic developments in artificial intelligence, HyperAI's official website (hyper.ai) has launched a "Latest Papers" section, which is updated daily with cutting-edge AI research papers. Here are 5 popular AI papers we recommend, along with the UniMate Mechanical Metamaterials Benchmark Dataset and its download link. Take a quick look at this week's cutting-edge AI achievements ⬇️

This week's paper recommendations

1 GLM-4.1V-Thinking: Towards Versatile Multimodal Reasoning with Scalable Reinforcement Learning

This paper introduces GLM-4.1V-Thinking, a vision-language model designed to advance general multimodal understanding and reasoning. The team open-sourced the GLM-4.1V-9B-Thinking model, which achieves state-of-the-art performance among models of similar size.

In a comprehensive evaluation on 28 public benchmarks, the model outperforms Qwen2.5-VL-7B on almost all tasks, and performs comparably to or better than the significantly larger Qwen2.5-VL-72B on 18 benchmarks. Notably, it also performs comparably to or better than closed-source models such as GPT-4o on challenging tasks such as long document understanding and STEM reasoning.

Paper link: https://go.hyper.ai/fEPb4

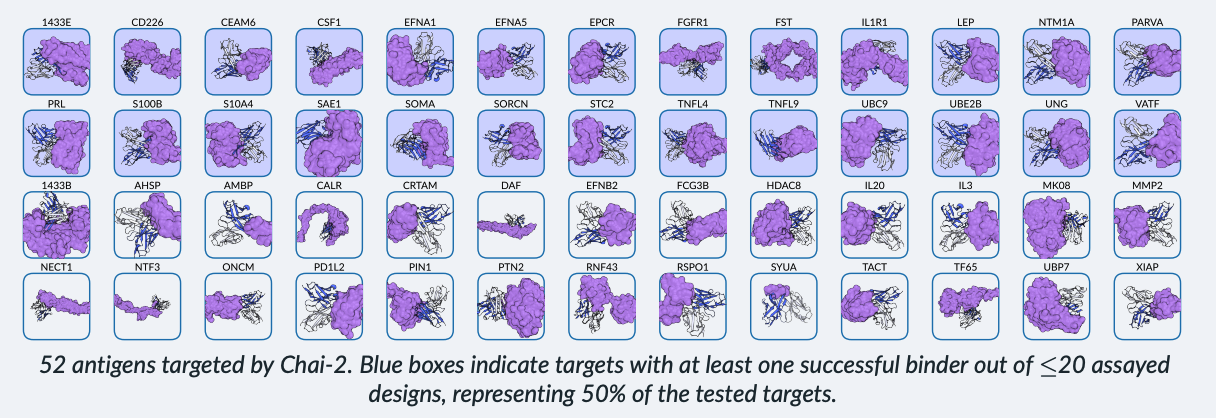

2 Zero-shot antibody design in a 24-well plate

This paper introduces Chai-2, a multimodal generative model that achieves a 16% hit rate in fully de novo antibody design, an improvement of more than 100-fold over previous computational methods. Beyond antibody design, Chai-2 achieves a 68% wet-lab success rate in miniprotein design and often generates picomolar binders. This high success rate allows novel antibodies to be experimentally validated and characterized in under two weeks, paving the way for a new era of rapid and precise atomic-scale molecular engineering.

Paper link: https://go.hyper.ai/rRRML

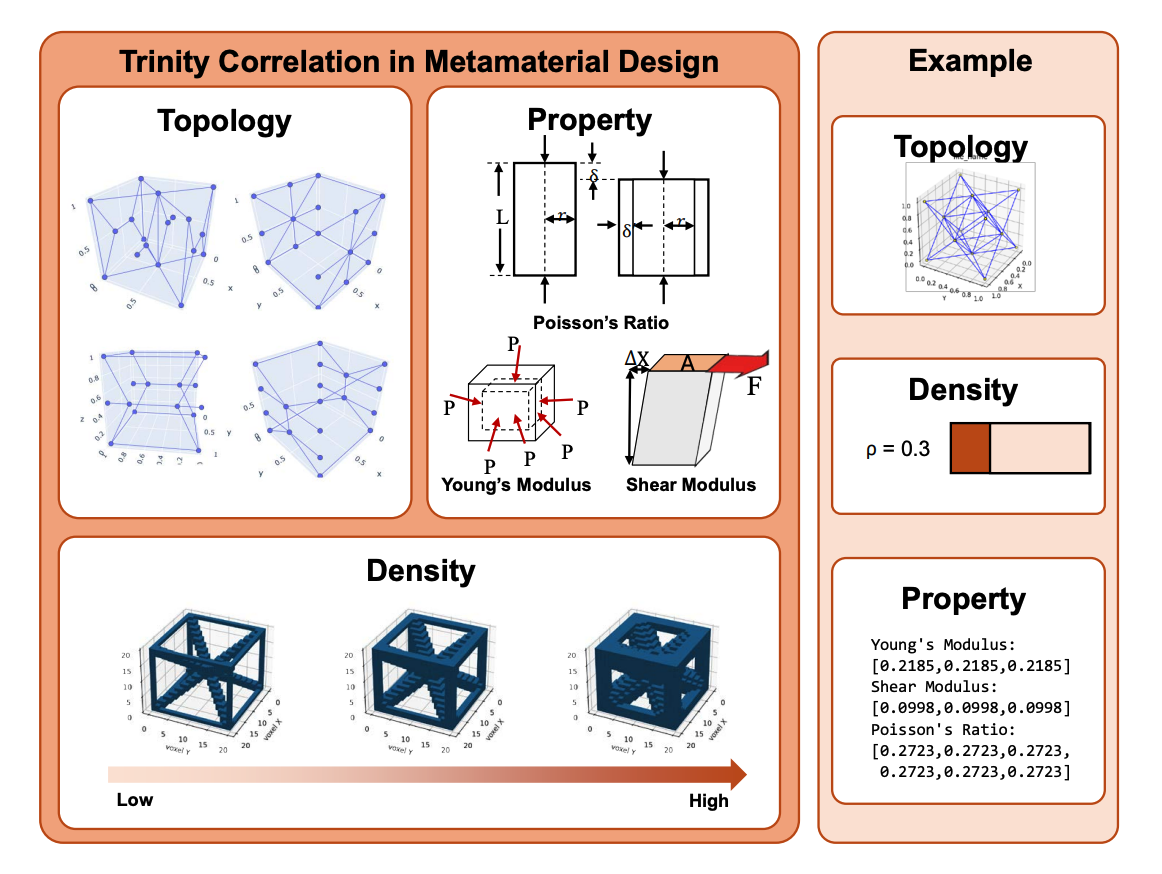

3 UniMate: A Unified Model for Mechanical Metamaterial Generation, Property Prediction, and Condition Confirmation

The design of mechanical metamaterials usually involves three key modalities: three-dimensional topology, density condition, and mechanical properties. However, most existing studies consider only two of them. This paper proposes UniMate, a unified model that consists of a modality alignment module and a collaborative diffusion generation module. Experimental results show that UniMate outperforms the baseline models by 80.2%, 5.1%, and 50.2% on the topology generation, property prediction, and condition confirmation tasks, respectively.

Paper link: https://go.hyper.ai/KNcmr

UniMate Mechanical Metamaterials Benchmark Dataset: https://go.hyper.ai/p4535

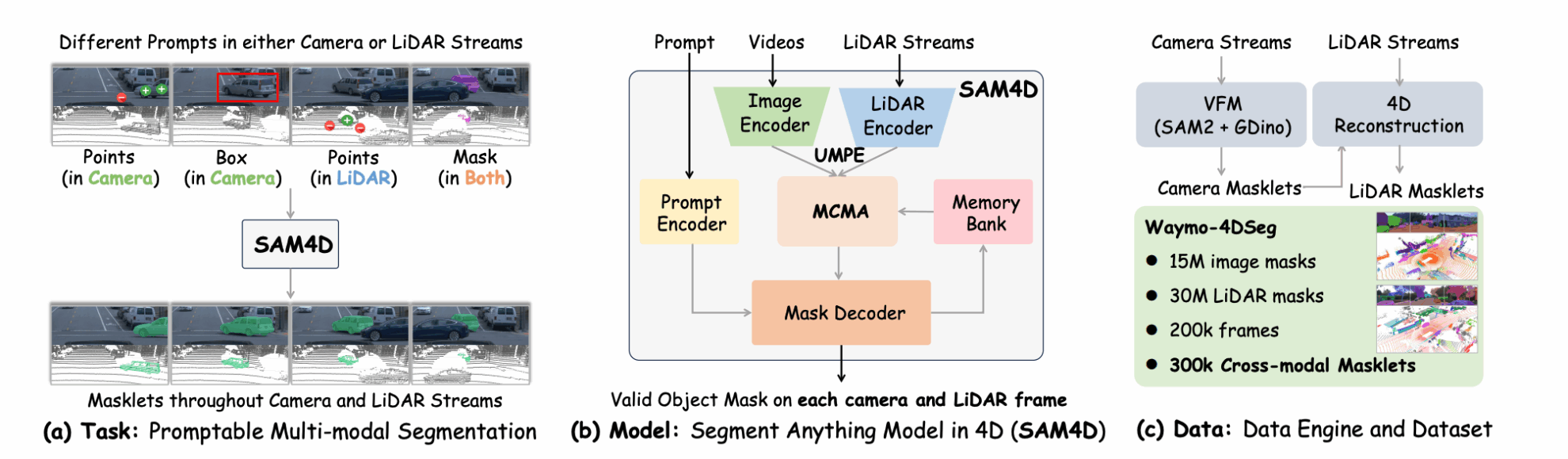

4 SAM4D: Segment Anything in Camera and LiDAR Streams

This paper introduces SAM4D, a new model for multimodal, temporally aware segmentation across camera and LiDAR streams. The model aligns camera and LiDAR features through a unified multimodal positional encoding, and uses a motion-aware cross-modal memory attention mechanism to improve temporal consistency, giving robust segmentation in dynamic environments. To avoid the annotation bottleneck, the paper also proposes an automatic data engine that generates high-quality pseudo-labels from video frame masklets, 4D reconstruction, and cross-modal masklet fusion, improving annotation efficiency while preserving the semantic accuracy derived from vision foundation models (VFMs).

Paper link: https://go.hyper.ai/QtQEx

5 WebSailor: Navigating Super-human Reasoning for Web Agent

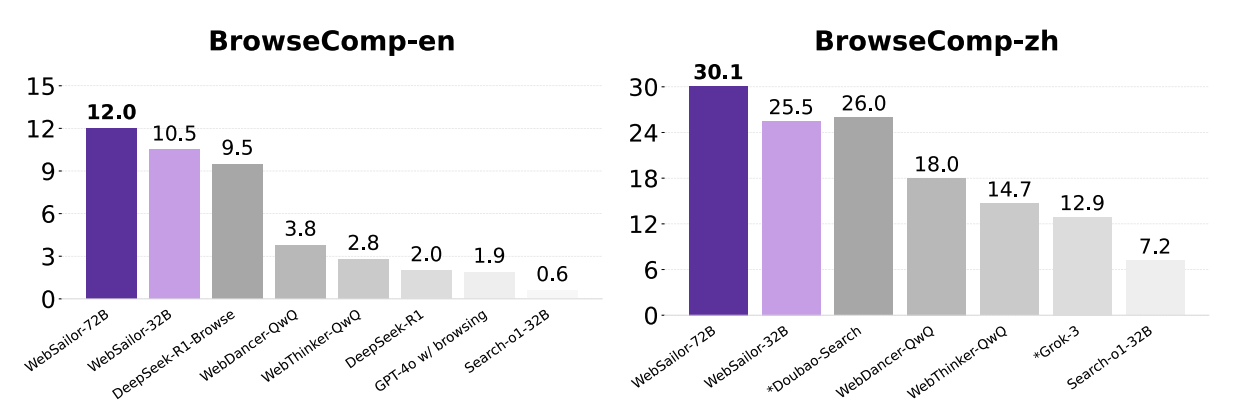

This paper proposes a method for training large language models to reach reasoning capabilities beyond the limits of human cognition, with a particular focus on complex information-seeking tasks. The method combines the generation of difficult task data, an effective reinforcement learning strategy, and an appropriate cold-start technique. The resulting WebSailor model significantly outperforms open-source models on complex English and Chinese information-seeking benchmarks such as BrowseComp, and approaches or matches the performance of some proprietary systems.

Paper link: https://go.hyper.ai/qyvf2

That's all for this week’s paper recommendations. For more cutting-edge AI research papers, please visit the “Latest Papers” section of hyper.ai’s official website.

We also welcome research teams to submit high-quality results and papers to us. If you are interested, add NeuroStar on WeChat (WeChat ID: Hyperai01).

See you next week!