ICML 2025 | Technical University of Munich and Partners Fine-Tune SD3 for Satellite Image Generation and Build the Largest Remote Sensing Dataset to Date

Satellite images are images of the Earth's surface acquired through satellite remote sensing. By providing a view from space, they digitize information about the Earth and support large-scale observation, dynamic tracking, and data-driven analysis. Both large-scale environmental governance and everyday urban life depend on them. In forestry monitoring, for example, satellite images can quickly delineate the extent of forests, estimate the coverage of different forest types, and detect changes in forest cover caused by logging, planting, pests, and disease.

However, satellite monitoring is affected by multiple factors that can substantially degrade its performance and usefulness. Cloud cover is a particularly serious problem: in frequently cloudy regions, usable observations may be unavailable for days or even weeks. This not only hampers real-time monitoring but also creates a need to combine satellite imagery with climate data to improve prediction accuracy. Rapid progress in artificial intelligence and machine learning offers a way to meet this need, but most current methods are designed for specific tasks or regions and do not generalize to global applications.

To address these problems, a team from the Technical University of Munich in Germany and the University of Zurich in Switzerland proposed a method that uses Stable Diffusion 3 (SD3) to generate satellite imagery conditioned on geographic and climate cues, and built EcoMapper, the largest and most comprehensive remote sensing dataset to date. The dataset contains more than 2.9 million RGB Sentinel-2 satellite images from 104,424 locations worldwide, covering 15 land cover types together with corresponding climate records, and it underpins two satellite image generation methods built on a fine-tuned SD3 model. By combining synthetic image generation with climate and land cover data, the work advances generative modeling in remote sensing, helps fill observation gaps in regions affected by persistent cloud cover, and provides new tools for global climate adaptation and geospatial analysis.

The research, titled "EcoMapper: Generative Modeling for Climate-Aware Satellite Imagery", was accepted at ICML 2025.

Research highlights:

* Built EcoMapper, the largest and most comprehensive remote sensing dataset to date, with more than 2.9 million satellite images.

* Developed a text-to-image model based on a fine-tuned Stable Diffusion 3 that generates realistic synthetic images of specific regions from text prompts describing climate and land cover.

* Developed a multi-conditional (text + image) framework using ControlNet that maps climate data onto imagery or generates time series to simulate landscape evolution.

Paper address:

Dataset download address:


Dataset: The largest and most comprehensive remote sensing dataset to date

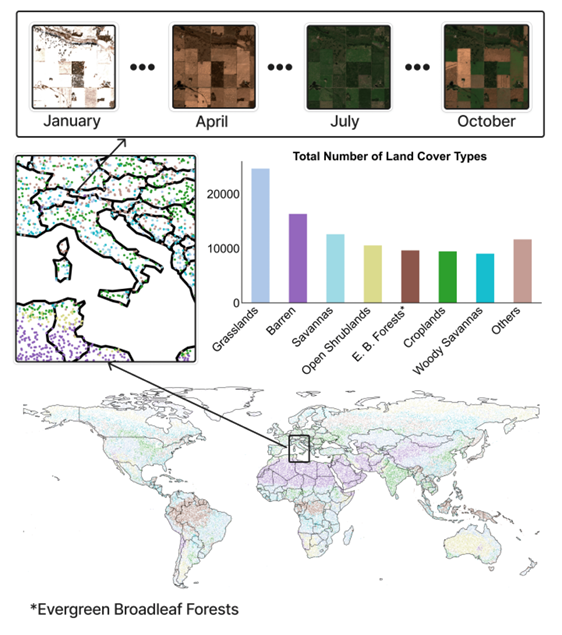

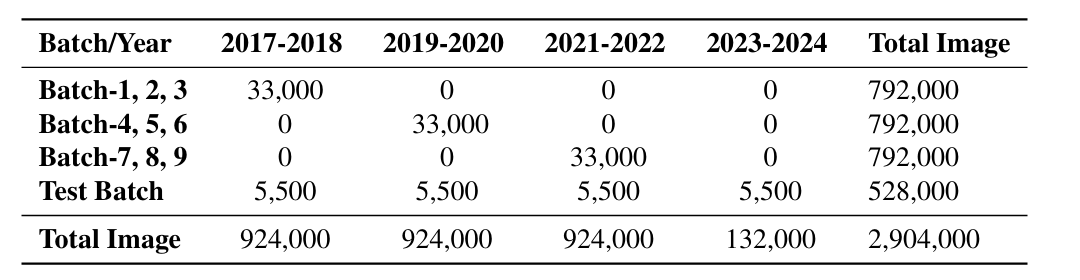

EcoMapper is the largest and most comprehensive remote sensing dataset to date. It consists of 2,904,000 satellite images with climate metadata, sampled from 104,424 geographic locations around the world and covering 15 different land cover types, as shown in the figure below:

Of these, the training set contains 98,930 geographic locations, each observed over a 24-month period. For each location, the researchers selected one observation per month, choosing the day with the least cloud cover, which yields a sequence of 24 images per location. Each location's two-year window was placed at a random point between 2017 and 2022.
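The monthly selection rule (keep the least-cloudy acquisition in each month) can be sketched in a few lines. This is a minimal illustration, not the authors' pipeline: the `(acquisition_date, cloud_cover_percent)` tuple format and the function name `select_monthly_scenes` are hypothetical, and how scenes and their cloud scores are actually retrieved from Sentinel-2 is not described in the article.

```python
from datetime import date


def select_monthly_scenes(scenes):
    """Keep the least-cloudy scene for each (year, month).

    `scenes` is a list of (acquisition_date, cloud_cover_percent) tuples,
    a hypothetical input format used only for illustration.
    """
    best = {}
    for acq_date, cloud in scenes:
        key = (acq_date.year, acq_date.month)
        # Replace the current pick only if this scene is strictly less cloudy
        if key not in best or cloud < best[key][1]:
            best[key] = (acq_date, cloud)
    # One scene per month, in chronological order
    return [best[key] for key in sorted(best)]


scenes = [
    (date(2019, 1, 3), 40.0),
    (date(2019, 1, 18), 5.5),
    (date(2019, 2, 7), 12.0),
    (date(2019, 2, 22), 80.0),
]
monthly = select_monthly_scenes(scenes)
print(monthly)
```

Applied to 24 consecutive months, this yields the 24-image sequence per training location described above.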

The test set contains 5,494 geographic locations. Each is observed monthly over 96 months (8 years), from 2017 to 2024.

Spatially, each observation covers approximately 26.21 square kilometers. The dataset as a whole covers about 2,704,000 square kilometers, roughly 2.05% of the Earth's land area. This design provides sufficient spatial and temporal independence for evaluation, enabling a robust assessment of how well the model generalizes to different regions and unseen climate conditions.
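The split sizes can be cross-checked with simple arithmetic. Multiplying locations by months gives 2,901,744 images, consistent with "more than 2.9 million" though slightly below the 2,904,000 quoted above; the article does not explain the small gap. The per-tile area of 26.21 km² matches a 512 x 512 pixel tile at 10 m ground sampling distance (Sentinel-2's RGB bands are 10 m), but the 512-pixel tile size is an assumption here, not something the article states.

```python
# Split sizes as reported in the article
TRAIN_LOCATIONS, TRAIN_MONTHS = 98_930, 24
TEST_LOCATIONS, TEST_MONTHS = 5_494, 96

train_images = TRAIN_LOCATIONS * TRAIN_MONTHS        # 2,374,320
test_images = TEST_LOCATIONS * TEST_MONTHS           # 527,424
total_locations = TRAIN_LOCATIONS + TEST_LOCATIONS   # 104,424
total_images = train_images + test_images            # 2,901,744

# Assumption: each tile is 512 x 512 pixels at Sentinel-2's 10 m RGB resolution
TILE_PIXELS = 512
GSD_M = 10
tile_side_km = TILE_PIXELS * GSD_M / 1000            # 5.12 km
tile_area_km2 = tile_side_km ** 2                    # about 26.21 km^2

print(total_locations, total_images, round(tile_area_km2, 2))
```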

In addition, each sampling location is enriched with metadata: geographic location (latitude and longitude), observation date (year and month), land cover type, and cloud cover, plus monthly average temperature, solar radiation, and total precipitation from NASA POWER. These variables are relevant to applications in agriculture, forestry, land cover mapping, and biodiversity.

Model architecture: text-image generation model and multi-conditional generation model

The goal of this research is to synthesize satellite imagery conditioned on geographic and climate metadata so as to realistically predict environmental conditions. To do so, the researchers addressed two key tasks: text-to-image generation and multi-conditional image generation.

The researchers evaluated how well two generative models incorporate climate metadata into satellite image synthesis:

The first is Stable Diffusion 3, a multimodal latent diffusion model that integrates CLIP and T5 text encoders for flexible prompt conditioning. The researchers fine-tuned it on the collected dataset so that it generates realistic satellite imagery conditioned on geographic, climate, and temporal metadata.

The second is DiffusionSat, a foundation model for satellite imagery built on Stable Diffusion 2 and extended with a dedicated metadata embedding layer for numerical conditioning. Unlike general-purpose diffusion models, it is designed specifically for remote sensing: it encodes key spatial and temporal attributes and supports super-resolution, image inpainting, and temporal prediction.
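To make the contrast with SD3's text prompts concrete, here is a minimal sketch of how numeric metadata can be turned into a conditioning vector: each scalar gets a sinusoidal encoding of the kind used for diffusion timesteps, and the per-field encodings are concatenated. This is an illustration under assumptions, not DiffusionSat's actual layer: the field names, embedding size `dim`, and `max_period` are hypothetical, and a real model would pass the result through a learned MLP before injecting it into the network.

```python
import numpy as np


def sinusoidal_embedding(value, dim=8, max_period=10_000.0):
    """Map a scalar to [sin(v * f_i), cos(v * f_i)] for dim // 2 frequencies f_i."""
    half = dim // 2
    # Geometric frequencies from 1 down toward 1 / max_period
    freqs = np.exp(-np.log(max_period) * np.arange(half) / half)
    angles = value * freqs
    return np.concatenate([np.sin(angles), np.cos(angles)])


def embed_metadata(metadata, dim=8):
    """Concatenate one embedding per numeric field (a real model would follow with an MLP)."""
    return np.concatenate([sinusoidal_embedding(v, dim) for v in metadata.values()])


# Hypothetical metadata record; field names and values are illustrative
meta = {"lat": 48.14, "lon": 11.58, "year": 2020, "month": 6, "cloud_cover": 3.0}
vec = embed_metadata(meta)
print(vec.shape)
```

Encoding numbers directly avoids having to phrase them as text, which is the design choice the article attributes to DiffusionSat; EcoMapper's SD3 models instead express the same metadata in prompts.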

For the text-to-image task, the researchers compared several configurations of Stable Diffusion 3 and DiffusionSat, with and without fine-tuning and at different resolutions:

* Baseline models: both models evaluated at 512 x 512 resolution without fine-tuning.

* Fine-tuned models (-FT): both models fine-tuned with climate metadata and evaluated at 512 x 512 resolution.

* High-resolution SD3 model (SD3-FT-HR): SD3 fine-tuned with climate metadata and tested at 1024 x 1024 resolution.

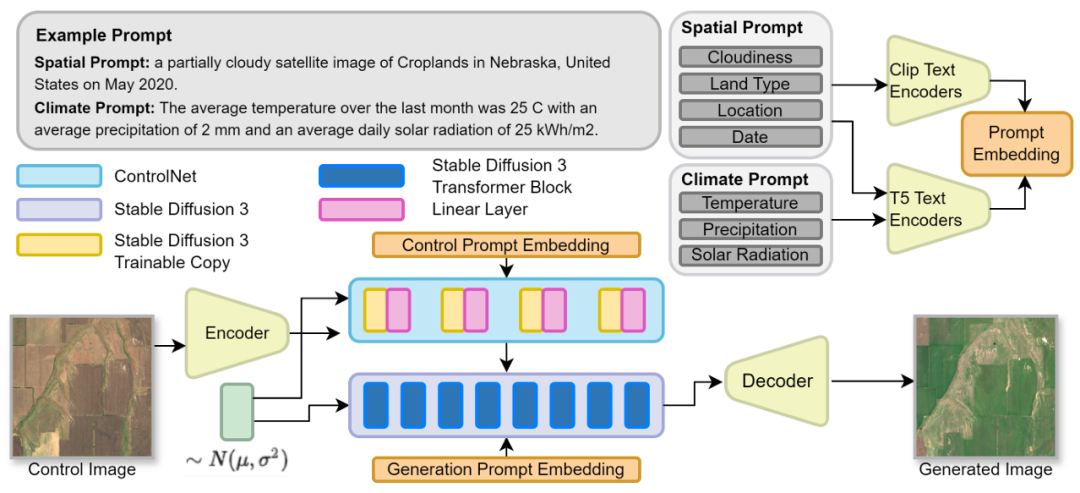

For the multi-conditional image generation task, the researchers used a fine-tuned Stable Diffusion 3 model adapted with LoRA (low-rank adaptation), trained at 512 x 512 resolution, as the basis for generating high-quality, contextually relevant images. On top of it, they used ControlNet to build a dual-conditioning mechanism:

* ControlNet: adds explicit spatial control to the diffusion model's generation process. The control branch is connected through zero-initialized layers, so at the start of training it has minimal effect on the main model and behaves like a skip connection.

* Satellite images as control signals: satellite images from previous months serve as control signals that preserve the spatial structure of the generated image, keeping landforms, urban layouts, and other geographic features unchanged while still allowing the model to reflect changes over time, mirroring real-world environmental change.

* Climate prompts: a text conditioning mechanism specifies the climate and atmospheric conditions of the generated satellite image.
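The "minimal initial impact" property of the control branch can be illustrated with a toy NumPy model: control features pass through a zero-initialized projection and are added to the main block's features, so at initialization the output is exactly the main block's output. This is a simplified sketch of the general ControlNet idea, not EcoMapper's or SD3's actual implementation; the class name `ZeroInitControl` and all shapes are hypothetical, and the real trainable copy of the network that produces the control features is omitted.

```python
import numpy as np

rng = np.random.default_rng(0)


class ZeroInitControl:
    """Toy ControlNet-style injection: control features go through a
    zero-initialized linear layer and are added to the main block's features."""

    def __init__(self, dim):
        self.w_zero = np.zeros((dim, dim))  # zero-initialized projection weights
        self.b_zero = np.zeros(dim)         # zero-initialized bias

    def __call__(self, base_features, control_features):
        residual = control_features @ self.w_zero + self.b_zero
        return base_features + residual


dim = 4
base = rng.normal(size=(2, dim))     # stand-in for features from the frozen main block
control = rng.normal(size=(2, dim))  # stand-in for features derived from a previous-month image
block = ZeroInitControl(dim)
out = block(base, control)
print(np.allclose(out, base))
```

Because the control features themselves are non-zero, the gradient with respect to `w_zero` is non-zero as well, so training can gradually "open" the branch without disturbing the pretrained model at the start.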

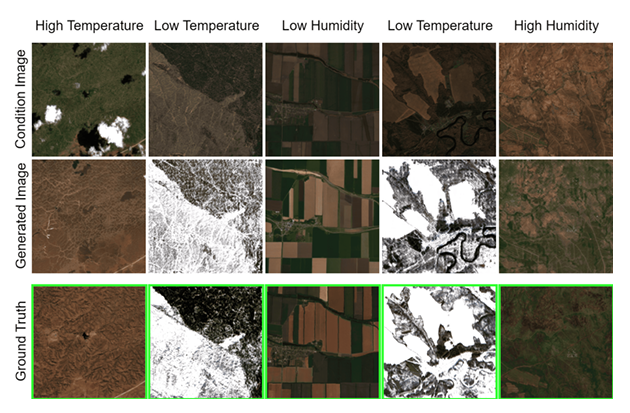

By combining these two conditioning signals, the model generates realistic satellite images that reflect climate change while preserving spatial consistency. The approach also supports time series generation, simulating landscape evolution under changing climate conditions. As shown in the figure below:

Integration of Stable Diffusion 3 and ControlNet for multi-conditional satellite image generation
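The article does not spell out how time series are produced, so the following is only a minimal sketch of one plausible scheme, assuming an autoregressive rollout: each generated frame becomes the control image for the next month, while a new climate prompt is supplied at every step. The `generate` callable is a hypothetical placeholder for a ControlNet-conditioned SD3 call; here a trivial stub stands in so the loop runs.

```python
from typing import Callable, List, Sequence

import numpy as np

Image = np.ndarray


def rollout(
    initial_image: Image,
    climate_prompts: Sequence[str],
    generate: Callable[[Image, str], Image],
) -> List[Image]:
    """Generate one frame per climate prompt, each conditioned on the previous frame."""
    frames = []
    control = initial_image
    for prompt in climate_prompts:
        frame = generate(control, prompt)  # hypothetical ControlNet-conditioned call
        frames.append(frame)
        control = frame  # the new frame becomes the next control signal
    return frames


def fake_generate(control: Image, prompt: str) -> Image:
    # Stub standing in for the real model: brighten slightly so each step is visible
    return np.clip(control + 0.1, 0.0, 1.0)


start = np.zeros((4, 4, 3))
prompts = ["June: hot, dry", "July: hot, dry", "August: mild, wet"]
frames = rollout(start, prompts, fake_generate)
print(len(frames), float(frames[-1].mean()))
```

Feeding generated frames back in lets errors accumulate over long horizons; conditioning on real observations where they exist would limit that drift.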

For prompt structure, the researchers designed two types of prompts to guide satellite image generation: spatial prompts and climate prompts. Spatial prompts encode basic metadata, such as land cover type, location, date, and cloud cover, so that the generated image is consistent with its geographic and temporal context. Climate prompts extend the spatial prompt with monthly climate variables (temperature, precipitation, and solar radiation), giving richer information about environmental conditions. Both prompts use Stable Diffusion 3's text encoders, with spatial information processed by CLIP and climate data by T5.
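A minimal sketch of the two prompt types follows. The exact wording of EcoMapper's templates is not given in the article, so the template text, the `Observation` fields, and their units are hypothetical. For routing, diffusers' `StableDiffusion3Pipeline` accepts `prompt` and `prompt_2` (sent to its two CLIP encoders) and `prompt_3` (sent to T5); mapping the spatial prompt to CLIP and the climate prompt to T5 this way is one possible setup, assumed here rather than taken from the paper.

```python
import calendar
from dataclasses import dataclass


@dataclass
class Observation:
    # Field names and units are illustrative assumptions, not the dataset schema
    land_cover: str
    lat: float
    lon: float
    year: int
    month: int
    cloud_cover: float      # percent
    temperature: float      # monthly mean, degrees C
    precipitation: float    # monthly total, mm
    solar_radiation: float  # monthly mean, kWh/m^2/day


def spatial_prompt(obs: Observation) -> str:
    """Land cover, location, date, and cloud cover only."""
    return (
        f"A satellite image of {obs.land_cover} at latitude {obs.lat:.2f}, "
        f"longitude {obs.lon:.2f}, in {calendar.month_name[obs.month]} {obs.year}, "
        f"with {obs.cloud_cover:.0f}% cloud cover."
    )


def climate_prompt(obs: Observation) -> str:
    """Spatial prompt extended with the monthly climate variables."""
    return spatial_prompt(obs) + (
        f" Average temperature {obs.temperature:.1f} C, "
        f"total precipitation {obs.precipitation:.1f} mm, "
        f"solar radiation {obs.solar_radiation:.1f} kWh/m2/day."
    )


def sd3_prompt_kwargs(obs: Observation) -> dict:
    # Keyword names follow diffusers' StableDiffusion3Pipeline; this routing is an assumption
    spatial = spatial_prompt(obs)
    return {"prompt": spatial, "prompt_2": spatial, "prompt_3": climate_prompt(obs)}


obs = Observation("cropland", 48.14, 11.58, 2020, 6, 3.0, 18.24, 85.0, 5.61)
for name, text in sd3_prompt_kwargs(obs).items():
    print(name, "->", text)
```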

Experimental results: The generation performance exceeds the baseline model, but there is still room for improvement

The researchers designed a multidimensional set of experiments and verified the models' ability to generate climate-aware satellite images through comparisons across models, configurations, and conditions.

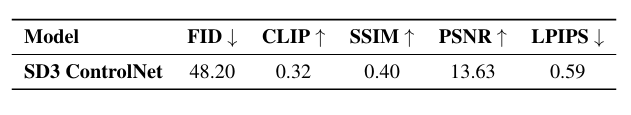

First, the researchers adopted five established metrics: FID (Fréchet Inception Distance), LPIPS (Learned Perceptual Image Patch Similarity), SSIM (Structural Similarity Index), PSNR (Peak Signal-to-Noise Ratio), and CLIP Score. FID and LPIPS measure distribution similarity and perceptual difference, SSIM and PSNR measure structural consistency and reconstruction quality, and CLIP Score measures text-image alignment.
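Of these metrics, PSNR is simple enough to write out directly: it is 10 · log10(MAX² / MSE) in decibels, where MSE is the mean squared pixel error between a real and a generated image. The sketch below assumes images scaled to [0, 1]; the paper's exact preprocessing is not stated, and in practice a library routine such as scikit-image's `skimage.metrics.peak_signal_noise_ratio` would typically be used.

```python
import numpy as np


def psnr(reference, generated, max_value=1.0):
    """Peak signal-to-noise ratio in dB for images scaled to [0, max_value]."""
    ref = np.asarray(reference, dtype=np.float64)
    gen = np.asarray(generated, dtype=np.float64)
    mse = np.mean((ref - gen) ** 2)
    if mse == 0:
        return float("inf")  # identical images
    return float(10.0 * np.log10(max_value ** 2 / mse))


real = np.zeros((8, 8, 3))
fake = np.full((8, 8, 3), 0.1)  # uniform error of 0.1 gives MSE = 0.01
print(round(psnr(real, fake), 2))
```

Higher PSNR means a closer pixel-level match, which is why it is paired with SSIM as a measure of reconstruction fidelity rather than realism.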

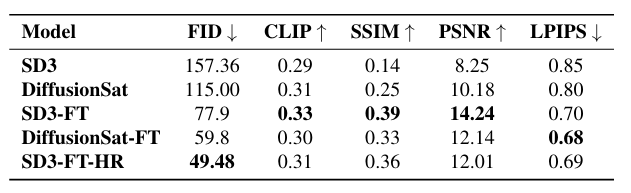
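FID, the main realism metric in these comparisons, fits a Gaussian to image features of real and generated sets and computes ‖μ_r − μ_g‖² + Tr(Σ_r + Σ_g − 2(Σ_r Σ_g)^(1/2)). The sketch below takes precomputed feature arrays; in standard FID these are Inception-v3 pooling features, and that extraction step is not shown (random toy features stand in). Maintained implementations such as torchmetrics' `FrechetInceptionDistance` handle the full pipeline.

```python
import numpy as np


def _sqrtm_psd(mat):
    """Square root of a symmetric positive semi-definite matrix via eigendecomposition."""
    eigvals, eigvecs = np.linalg.eigh(mat)
    eigvals = np.clip(eigvals, 0.0, None)  # drop tiny negative values caused by round-off
    return (eigvecs * np.sqrt(eigvals)) @ eigvecs.T


def frechet_distance(feats_real, feats_gen):
    """Fréchet distance between Gaussians fitted to two (n_samples, n_features) arrays."""
    mu_r, mu_g = feats_real.mean(axis=0), feats_gen.mean(axis=0)
    cov_r = np.cov(feats_real, rowvar=False)
    cov_g = np.cov(feats_gen, rowvar=False)
    # Tr((cov_r cov_g)^(1/2)) equals Tr((S cov_g S)^(1/2)) with S = cov_r^(1/2),
    # and S cov_g S is symmetric PSD, so an eigendecomposition suffices
    s = _sqrtm_psd(cov_r)
    middle = s @ cov_g @ s
    middle = (middle + middle.T) / 2.0  # symmetrize against round-off
    trace_cross = np.trace(_sqrtm_psd(middle))
    mean_term = np.sum((mu_r - mu_g) ** 2)
    return float(mean_term + np.trace(cov_r) + np.trace(cov_g) - 2.0 * trace_cross)


rng = np.random.default_rng(0)
real = rng.normal(size=(500, 4))
shifted = real + 2.0  # same covariance, mean moved by 2 in each of 4 dimensions
print(frechet_distance(real, real), frechet_distance(real, shifted))
```

The distance is zero for identical feature distributions and grows without an upper bound, so lower scores are better and reported values such as 49.48 are meaningful only relative to other models evaluated the same way.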

For text-to-image generation, the researchers compared Stable Diffusion 3 and DiffusionSat, their fine-tuned versions (SD3-FT and DiffusionSat-FT), and SD3-FT-HR on about 5,500 geographic locations.

As shown in the figure below, the baseline SD3 and DiffusionSat models score lowest, although DiffusionSat performs significantly better than SD3, reflecting the benefit of remote sensing pre-training. All fine-tuned models improve substantially. SD3-FT performs better on CLIP Score, SSIM, and PSNR, while DiffusionSat-FT performs better on FID and LPIPS. SD3-FT-HR achieves the lowest FID, 49.48 (lower FID means the generated images are statistically closer to real ones), indicating more realistic images with finer detail.

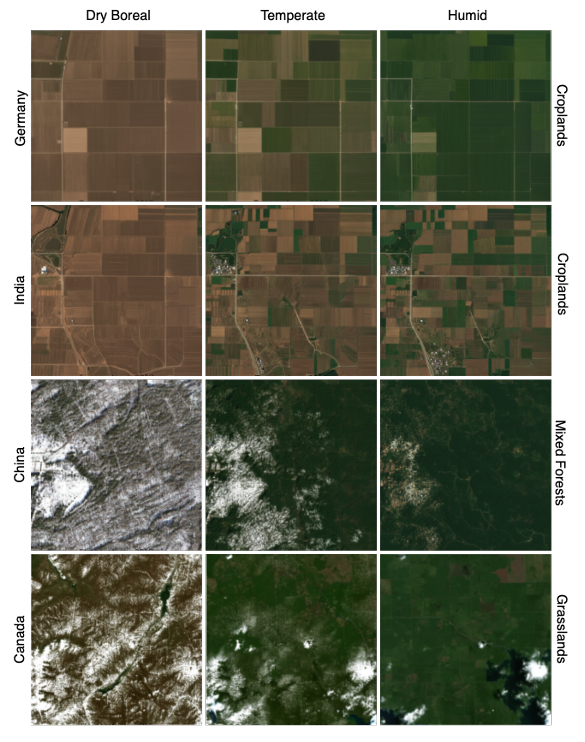

Qualitative results show that the fine-tuned models capture the regular textures of farmland and grassland and the characteristics of mountainous terrain, with SD3-FT-HR performing especially well on variations in vegetation density and fine high-resolution detail.

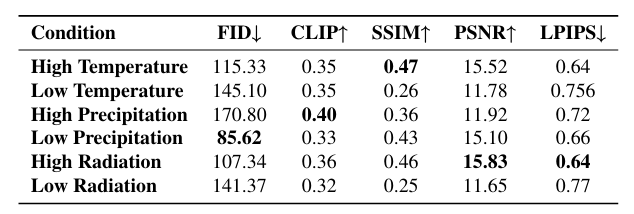

In the climate sensitivity analysis shown in the figure below, the vegetation density in generated images varies significantly with the climate conditions in the prompt. The researchers ran a quantitative stress test of SD3-FT on samples with extreme weather conditions. Under high temperature and high radiation, the generated images had lower FID (for example, 107.34 under high radiation) and more visible vegetation; under low temperature and low radiation the opposite held, and the simulations were somewhat less accurate.

Satellite images generated by SD3-FT for different regions under extreme climate conditions

In the multi-conditional generation task, the ControlNet-based model outperforms the text-to-image models on all metrics; for example, SD3 ControlNet reaches an FID of 48.20. The generated images also show strong spatial alignment with the real images, preserving key geographic features while reflecting the specified climate conditions. As shown in the following figure:

In robustness tests, land cover type had a large effect on generation stability. Common types such as grassland and savanna are generated stably with low FID, whereas complex or rare types such as wetlands and urban areas have higher FID (284.65 for urban areas), which is attributed to insufficient training data. In addition, performance on the 2017 to 2024 test set is stable, with no degradation on 2023 to 2024 data, which falls after the 2017 to 2022 training window. This shows that the model adapts well to unseen spatiotemporal scenarios.

In summary, EcoMapper introduces a generative framework that simulates satellite imagery from climate variables, with the goal of modeling how landscapes respond to weather and long-term climate change. This opens new opportunities for visualizing climate change impacts, exploring scenarios, and improving downstream models that combine satellite and climate data, such as crop yield prediction, land use monitoring, and filling in cloud-covered areas.

Machine learning algorithms open up a new paradigm for satellite image generation

Generative models for satellite imagery are advancing rapidly through deep learning, which combines the representational power of neural networks with massive satellite archives to produce realistic, high-resolution, multimodal remote sensing images. Beyond the work above, researchers in this field have long built on one another's results like a relay race, and continual methodological innovation has laid a solid foundation for satellite imagery research.

For example, DiffusionSat, mentioned above, is the first large-scale diffusion model designed specifically for satellite imagery, supporting multispectral input, time series generation, and super-resolution. It uses metadata such as geographic location as conditioning information, addressing the lack of text annotations for satellite images. The work, by a team from Stanford University, is titled "DiffusionSat: A Generative Foundation Model for Satellite Imagery" and was published at ICLR 2024.

Paper address:

https://arxiv.org/pdf/2312.03606

In addition, a team from Beihang University (Beijing University of Aeronautics and Astronautics) published "MetaEarth: A Generative Foundation Model for Global-scale Remote Sensing Image Generation", proposing a global-scale generative model called MetaEarth. Using a resolution-guided self-cascading framework, the model generates high-resolution images from low-resolution ones in stages, and a sliding-window and noise-sharing strategy lets it stitch outputs together without visible seams.

Paper address:

https://arxiv.org/pdf/2405.13570

Researchers from MIT, Columbia University, the University of Oxford, and other institutions have also shown how generative vision models can synthesize satellite images for climate change visualization. Their method, the Earth Intelligence Engine (EIE), feeds physically based flood model projections together with satellite imagery into a deep generative vision model, and physical consistency is evaluated by measuring the overlap between the generated flooding and the flood model input. The results show that the method performs well in physical consistency and visual quality, outperforms baselines without physical conditioning, and generalizes across remote sensing data sources and climate events. The paper is titled "Generating Physically-Consistent Satellite Imagery for Climate Visualizations".

Paper address:

https://arxiv.org/html/2104.04785v5

Generative models are clearly reshaping how satellite images are produced and used: from flood warning to global-scale generative Earth surface models, and from multispectral data fusion to spatiotemporal dynamic simulation, these advances demonstrate both technical breakthroughs and great application potential. As diffusion models, self-cascading frameworks, and related techniques continue to improve, generative models are expected to give further impetus to satellite imagery research.

References:

1. https://arxiv.org/pdf/2312.03606

2. https://arxiv.org/html/2104.04785v5

3. https://arxiv.org/pdf/2405.13570