Online Tutorial: Peking University's Shi Boxin Team and OpenBayes (Bayesian Computing) Propose VIRES, a Video Instance Repainting Method That Reaches SOTA on Multiple Metrics

Can videos also be photoshopped?

Video editing is notoriously hard. Adjusting or replacing a subject, changing the scene or colors, or removing an object often means manual annotation, mask painting, and careful color grading across countless frames. Even experienced post-production teams struggle to keep edits temporally consistent in complex scenes. With the rapid development of generative AI in recent years, features such as "one-click removal" have gradually appeared in editing software, showing the huge potential of AI in video editing.

In practice, beyond one-click removal, the features that are used more often and are harder to build are replacing and adding subjects, which require more accurate target recognition, segmentation, and video generation. Current AI methods still struggle with such video repainting tasks in complex scenes. For example, many zero-shot methods tend to cause flickering when processing consecutive video frames, and scenes with complex backgrounds or multiple targets may show misalignment, blur, or semantic drift.

In response, the Camera Intelligence Laboratory at Peking University (Shi Boxin's team) teamed up with OpenBayes Bayesian Computing and Associate Professor Li Si's team from the Pattern Recognition Laboratory of the School of Artificial Intelligence at Beijing University of Posts and Telecommunications. Together they proposed VIRES, a video instance repainting method guided by both sketch and text. It supports multiple editing operations on video subjects, including repainting, replacement, generation, and removal.

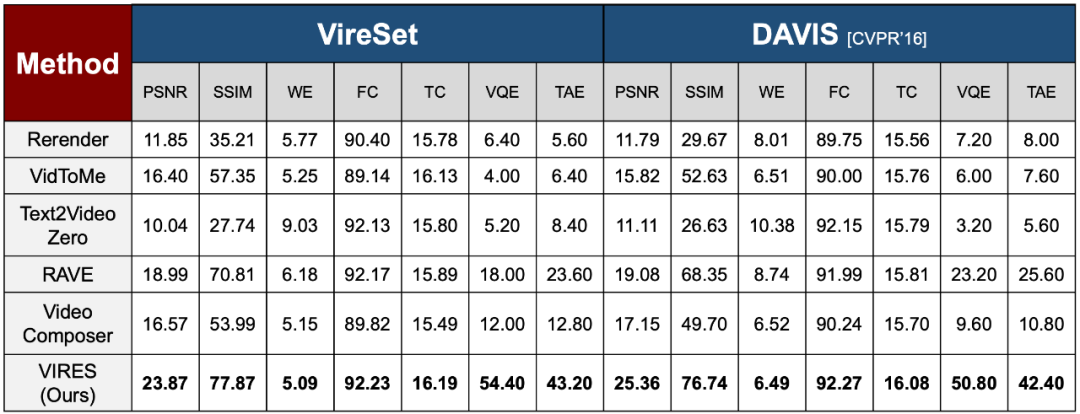

The method uses the prior knowledge of a text-to-video model to ensure temporal consistency. It also proposes a Sequential ControlNet with a standardized self-scaling mechanism, which effectively extracts the structural layout and adaptively captures high-contrast sketch details. In addition, the research team introduced a sketch attention mechanism into the DiT (diffusion transformer) backbone to interpret and inject fine-grained sketch semantics. Experimental results show that VIRES outperforms existing SOTA models on several fronts, including video quality, temporal consistency, condition alignment, and user ratings.
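To make the scaling idea described above more concrete, here is a minimal sketch in plain Python. It is an illustrative assumption, not the authors' implementation: it reads the mechanism as "standardize the sketch features, use the standardized values to scale the features themselves, then shift the result so its mean matches the video features". The function name `standardized_self_scale`, the parameters `sketch_feats`, `video_feats`, and `eps`, and the use of flat lists instead of tensors are all hypothetical; the paper gives the exact formulation.

```python
from statistics import mean, pstdev


def standardized_self_scale(sketch_feats, video_feats, eps=1e-6):
    """Toy 1-D illustration (assumed reading of the mechanism, not the paper's code)."""
    mu = mean(sketch_feats)
    sigma = pstdev(sketch_feats)

    # Standardize each sketch feature, then use that standardized value as a
    # per-element scale on the original feature. Values far from the mean
    # (high-contrast strokes) are amplified relative to the flat background.
    scaled = [((x - mu) / (sigma + eps)) * x for x in sketch_feats]

    # Shift the scaled features so their mean matches the video features' mean
    # (assumption: this stands in for moving from the sketch to the video domain).
    shift = mean(video_feats) - mean(scaled)
    return [s + shift for s in scaled]


# Hypothetical toy data: one bright stroke on an empty background.
sketch = [0.0, 0.0, 0.0, 1.0]
video = [2.0, 4.0]
print(standardized_self_scale(sketch, video))
```

In this toy input the gap between the stroke and the background grows after scaling, while the overall mean follows the video features. That is the qualitative behavior the description suggests, under the assumptions stated above.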

The paper, titled "VIRES: Video Instance Repainting via Sketch and Text Guided Generation", has been accepted to CVPR 2025.

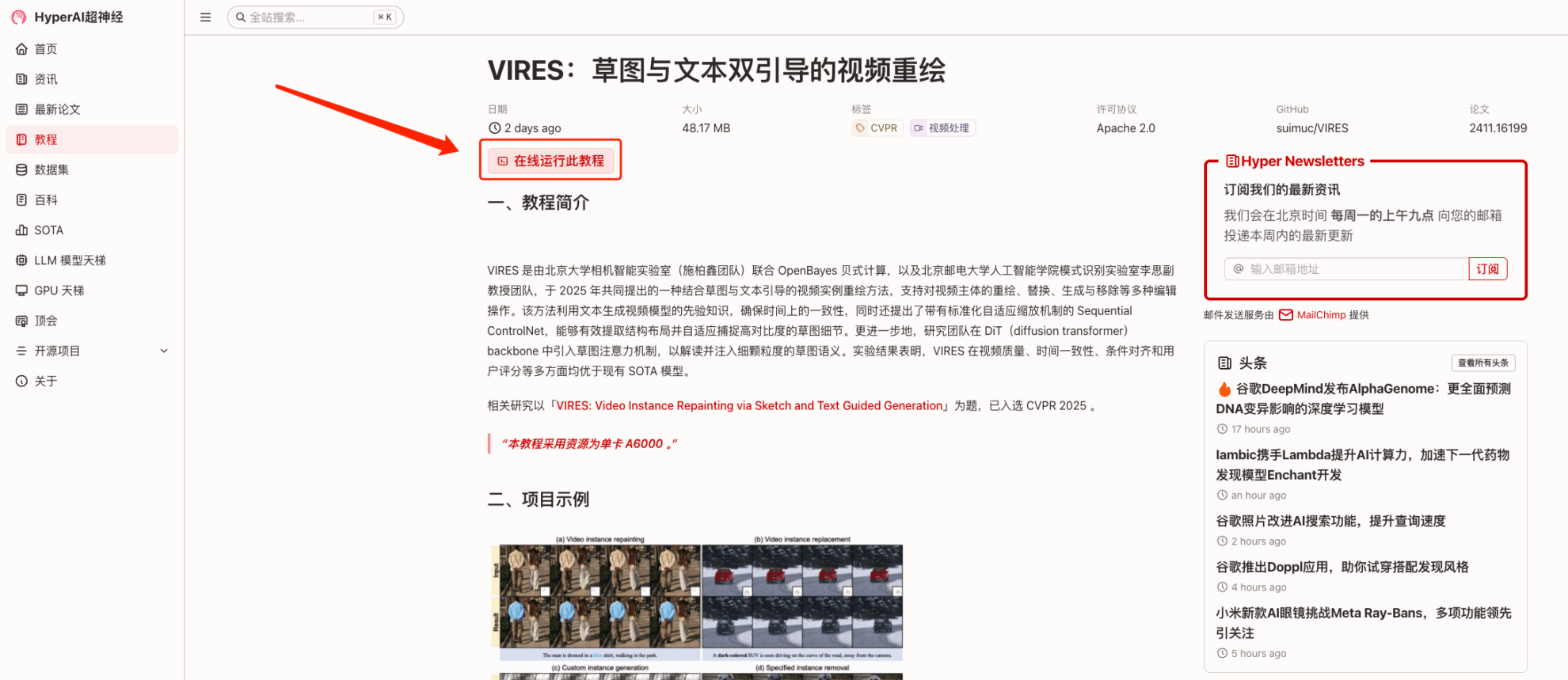

"VIRES: Sketch and Text Dual-Guided Video Redrawing" is now available in the tutorial section of HyperAI's official website (hyper.ai). With one-click deployment, you can try high-quality video editing online. Taking custom instance generation as an example, the author added a running corgi to an outdoor snow scene; the result looks lifelike and does not feel out of place.

Tutorial Link: https://go.hyper.ai/49koQ

We have also prepared a bonus for newly registered users. Register on the OpenBayes platform with the invitation code "VIRES" to get 4 hours of free RTX A6000 usage (valid for 1 month). Quantities are limited, first come, first served!

Demo Run

1. Open the hyper.ai homepage, go to the "Tutorials" page, select "VIRES: Sketch and Text Dual-Guided Video Redrawing", and click "Run this tutorial online".

2. After the page redirects, click "Clone" in the upper right corner to clone the tutorial into your own container.

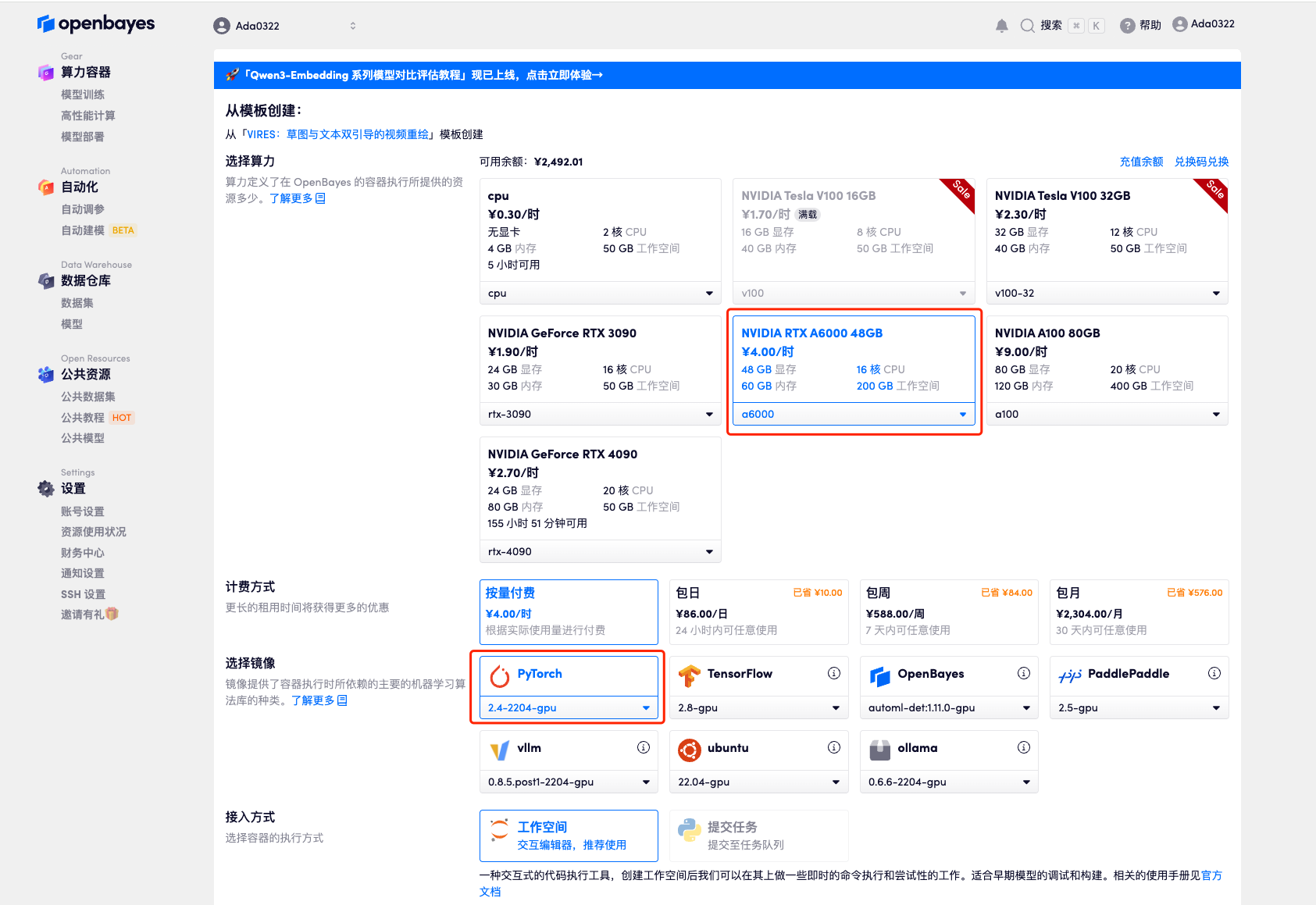

3. Select "NVIDIA RTX A6000" as the compute resource and "PyTorch" as the image. The OpenBayes platform offers four billing options: pay-as-you-go or a daily, weekly, or monthly plan, depending on your needs. Click "Continue". New users can register with the invitation link below to get 4 hours of RTX 4090 and 5 hours of CPU time for free!

HyperAI exclusive invitation link (copy and open in browser):

https://openbayes.com/console/signup?r=Ada0322_NR0n

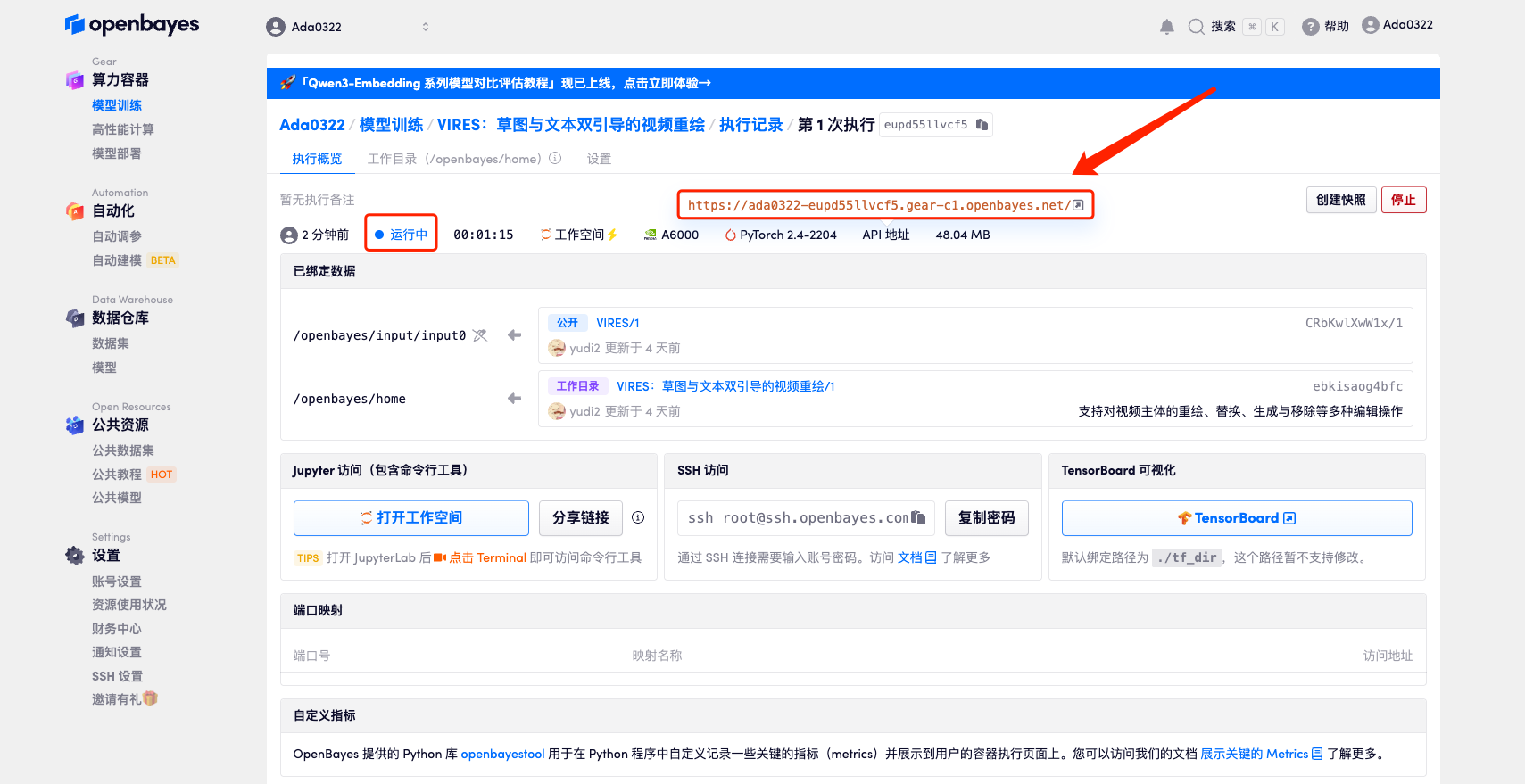

4. Wait for resources to be allocated. The first clone takes about 2 minutes. When the status changes to "Running", click the jump arrow next to "API Address" to open the demo page. Because the model is large, the WebUI takes about 3 minutes to load; if you open the page before it is ready, "Bad Gateway" is displayed. Note that users must complete real-name authentication before they can access the API address.
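If you would rather not refresh the page by hand during the wait, the waiting step can be sketched as a small polling loop. This is only a sketch using Python's standard library: the helper `wait_for_webui`, its parameters, and the placeholder URL are hypothetical, and it assumes that "Bad Gateway" arrives as an HTTP 502 response and that the API address is reachable from where the script runs.

```python
import time
import urllib.error
import urllib.request


def wait_for_webui(url, timeout_s=300, interval_s=10,
                   opener=urllib.request.urlopen, sleep=time.sleep):
    """Poll `url` until it answers with HTTP 200 or `timeout_s` elapses.

    Returns True once the page responds, False on timeout.
    `opener` and `sleep` are injectable so the loop can be tested offline.
    """
    deadline = time.monotonic() + timeout_s
    while time.monotonic() < deadline:
        try:
            with opener(url, timeout=10) as resp:
                if resp.status == 200:
                    return True
        except urllib.error.HTTPError as err:
            # Assumption: 502 (Bad Gateway) means the model is still loading.
            if err.code != 502:
                raise
        except urllib.error.URLError:
            # Connection not accepted yet; keep waiting.
            pass
        sleep(interval_s)
    return False


# Usage (placeholder address; copy the real one from "API Address"):
# ready = wait_for_webui("https://<your-api-address>/")
```

Injecting `opener` and `sleep` keeps the function testable without network access; for everyday use the defaults are enough.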

Effect Demonstration

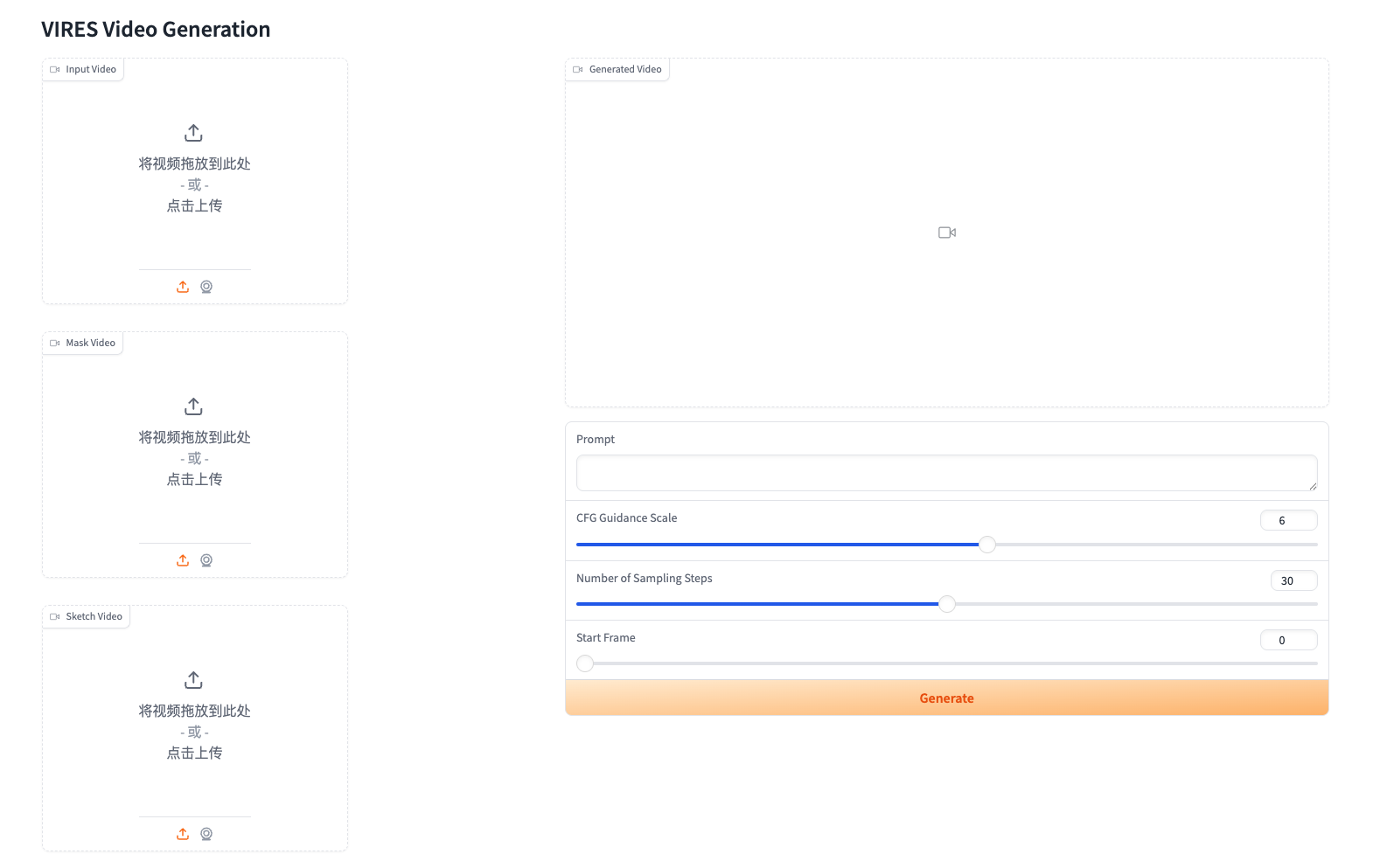

Click the API address to try the model directly, as shown in the figure above. The tutorial includes several ready-made examples for you to explore.

Taking "custom instance generation" as an example, the author added a running corgi to an outdoor snow scene; the result looks lifelike and does not feel out of place.

* Original video:

* Generated result:

* Prompt:

The video showcases a delightful scene of a corgi dog joyfully running back and forth in a snowy park. The park is adorned with trees and a playground in the background, setting a picturesque winter atmosphere. The corgi, with its orange and white fur and expressive eyes, repeatedly runs towards and away from the camera, kicking up snow with its paws and displaying a playful demeanor and energy. The video captures the corgi's movements in detail, focusing on its bright eyes, muscular legs, and agile form as it frolics in the snow. The creator likely intended to share a heartwarming and visually appealing moment that showcases the joy and liveliness of a beloved pet in a beautiful snowy setting.

That wraps up this tutorial recommendation from HyperAI. Give it a try!

Tutorial Link: https://go.hyper.ai/49koQ