From Whole Heart Models to LLM-Based Disease Network Analysis: Li Dong of Tsinghua Chang Gung Hospital Analyzes the Development Trend of Large Medical Models From a Data Perspective

As artificial intelligence matures, it is profoundly changing medicine: by integrating multi-source data with intelligent algorithms, it offers new ways to improve efficiency and diagnostic accuracy. Medical data, as the "fuel" of large models and the core carrier of medical decision-making, plays a vital role. Especially as China accelerates the digital transformation of its medical system, analyzing large medical models from a data perspective is a necessary path to innovation.

Recently, at the 2025 Beijing Zhiyuan Conference, Professor Li Dong, Director of the Medical Data Science Center of Tsinghua Chang Gung Hospital, spoke at the "AI+Science & Engineering & Medicine" forum on the topic "How to use medical data to conduct innovative research in the era of smart healthcare". Drawing on the practical experience of Tsinghua Chang Gung Hospital, he discussed large models from a data perspective across several dimensions, including implementation modes, technical limitations, resource restructuring, and application exploration.

HyperAI has compiled and summarized Professor Li Dong's talk without altering its original meaning. The transcript of the speech follows.

Application and challenges of large models in medical scenarios

"Local deployment + custom development + offline use" mode application

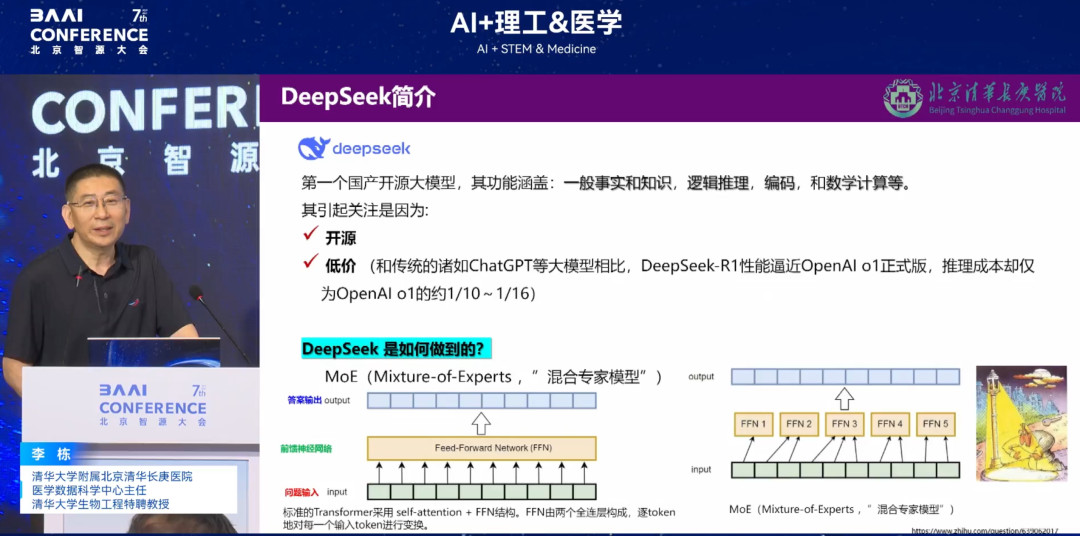

DeepSeek is a large model that has become very popular in recent years. It has three main usage modes in medical scenarios: lightweight usage mode on mobile phones, cloud access mode, and "local deployment + customized development + offline use".

Among these three access methods, "local deployment + customized development + offline use" has proven the best option in practice. Because of the policy restriction that "data cannot leave the hospital", a cloud model cannot be trained on real data and so remains a "static template", while the lightweight mobile application can only handle simple consultations and cannot meet core medical needs. "Local deployment + customized development + offline use" avoids the risks of data leakage and contamination (such as the mixing in of external hallucinated data), but it also means that the hospital must bear the high computing power costs on its own.

Challenges of Large Models in Healthcare

In implementing large models in the hospital, we faced many challenges, including algorithmic flaws, hallucinations, the computing power trap, and AI fairness.

* Algorithm flaws: DeepSeek's popularity is attributed to its being open source and inexpensive. The Mixture of Experts (MoE) architecture it relies on lowers the computing power threshold by splitting the neural network into experts, but it exposes limitations in medical scenarios. First, it cannot support multimodal consultation, and "single-expert decision-making" is prone to missed diagnoses in complex cases. Second, to conserve computing power, some data is randomly discarded at run time, which may lead to the loss of key information (such as allergy history or surgical history) and create hidden risks for diagnosis and treatment.

* Hallucination problems: DeepSeek produces a certain proportion of hallucinations in specific medical scenarios. We use a "triple verification mechanism" (algorithm initial screening + doctor review + knowledge base comparison) to reduce the risk, but this increases the time cost of diagnosis and treatment.

* Computing power trap: The power consumption of even a small computing center is already staggering, and training more complex large medical models requires continued investment.

* AI fairness: Leading hospitals can monopolize advanced models by leveraging their resource advantages, which may widen the "digital divide".
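The "triple verification mechanism" mentioned in the list above can be pictured as three gates applied in sequence. The following is only a minimal sketch under stated assumptions: the `Answer` type, the function names, the confidence threshold, and the toy knowledge base are all hypothetical and are not taken from the hospital's actual system.

```python
from dataclasses import dataclass
from typing import Callable, Set, Tuple


@dataclass
class Answer:
    text: str          # model output to be verified
    confidence: float  # hypothetical model-reported confidence in [0, 1]


def algorithm_screen(answer: Answer, threshold: float = 0.8) -> bool:
    """Gate 1: automatic initial screening (the threshold is an assumed value)."""
    return answer.confidence >= threshold


def knowledge_base_check(answer: Answer, knowledge_base: Set[str]) -> bool:
    """Gate 3: accept only statements present in a curated knowledge base."""
    return answer.text.strip().lower() in knowledge_base


def triple_verify(
    answer: Answer,
    doctor_review: Callable[[Answer], bool],
    knowledge_base: Set[str],
) -> Tuple[bool, str]:
    """Run the three gates in order and report where a rejection happened."""
    if not algorithm_screen(answer):
        return False, "algorithm screening"
    if not doctor_review(answer):  # Gate 2: a human decision, passed in as a callback
        return False, "doctor review"
    if not knowledge_base_check(answer, knowledge_base):
        return False, "knowledge base comparison"
    return True, "passed"


# Toy usage with placeholder statements.
kb = {"statement a", "statement b"}
print(triple_verify(Answer("Statement A", 0.95), lambda a: True, kb))
print(triple_verify(Answer("Statement C", 0.95), lambda a: True, kb))
```

Every answer has to clear all three gates, which illustrates why the mechanism reduces risk at the price of extra time per case: the doctor review step in particular cannot be automated away.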

Reconstruction of medical evaluation standards: from "three-level standard" to "six-factor competition"

Deploying large models in the medical field is far more complicated than expected. The National Health Commission originally hoped to alleviate the imbalance of medical resources through AI, but three months after we deployed a large model, we found the results to be counterproductive. Far from easing the imbalance, large models are reshaping the competitive landscape among tertiary hospitals.

The traditional evaluation criteria for tertiary hospitals are "famous doctors, equipment, and hardware environment", but in the era of large models, three new thresholds have been added:

The first is powerful computing power. Tsinghua Chang Gung Hospital once had the second-largest computing capacity among medical institutions in Beijing, but it still could not support long-term training. Starting up even a small computing center could cause a power outage in half of the building.

The second is first-class data governance engineers. Medical data includes electronic medical records, images, laboratory tests, and other types of data, all of which need to be cleaned, labeled, and structured. We invested 5 million yuan in one round of data governance, but the effect was not significant.

The third is first-class algorithm engineers. Algorithms need to be customized for medical scenarios to address the "black box" problem and to recognize hallucinations.

Smart healthcare: data-driven innovation in healthcare models

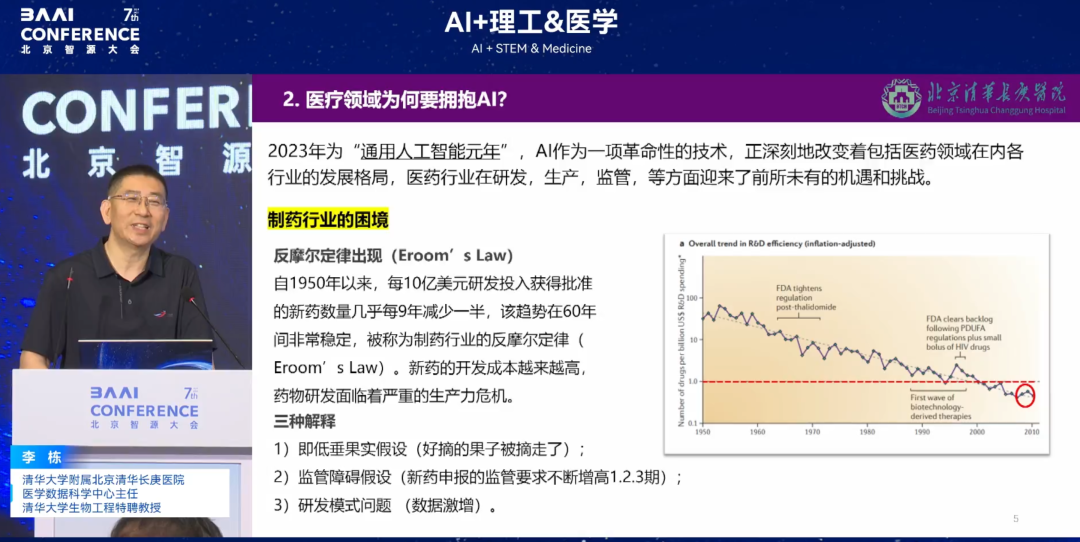

As the figure shows, since 1950 the number of new drugs approved per $1 billion of R&D investment has halved roughly every nine years, a trend that held steady for about 60 years. This phenomenon is known in the pharmaceutical industry as the reverse of Moore's Law (often called Eroom's Law). New drugs are becoming ever more expensive to develop, and drug research and development faces a serious productivity crisis.
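To get a feel for the size of this effect, the halving trend can be written as an exponential decay. The symbols N(t) (approvals per $1 billion in year t) and N_0 (the 1950 level) are introduced here for illustration only and do not come from the talk; the nine-year halving time is the figure quoted above.

```latex
N(t) = N_0 \cdot 2^{-(t - 1950)/9},
\qquad
\frac{N(2010)}{N_0} = 2^{-60/9} \approx 0.0098 \approx \frac{1}{100}
```

Under this idealized model, 60 years of halving every nine years means roughly a hundredfold drop in approvals per dollar of R&D spending.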

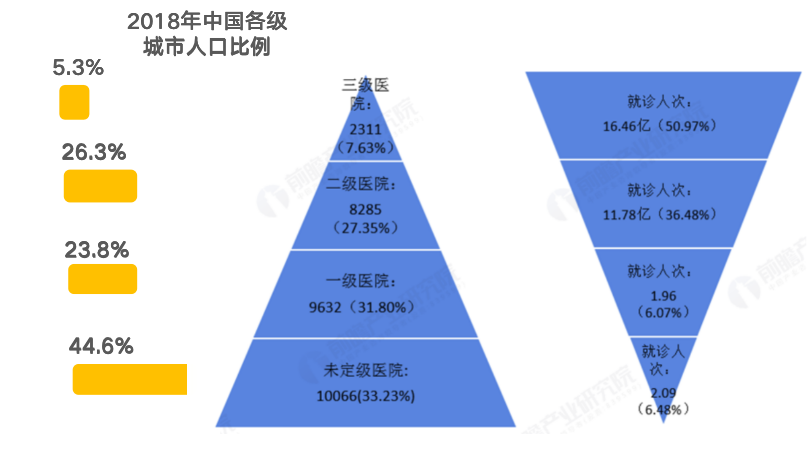

This is true not only of the pharmaceutical industry but of the entire medical industry. As the figure shows, according to 2018 statistics, the number of tertiary hospitals in China accounted for 7.63% of the national total, yet they handled 50.97% of the country's outpatient visits. A series of problems has emerged, including uneven distribution of medical resources, low diagnosis and treatment efficiency, and pressure from shifts in the disease spectrum caused by an aging population. In the era of smart healthcare, it is therefore imperative for AI to accelerate medical transformation.

(Units: institutions, 100 million visits, %) Source: National Health Commission (compiled by Prospective Industry Research Institute)

From traditional logistic regression to algorithms as the benchmark

As the clinical and pharmaceutical fields embrace AI, traditional logistic regression can still be used for clinical research, but it has significant shortcomings. Take a study that quantifies the correlation between long-term air pollution and myocardial fibrosis as an example: traditional methods usually collect sociodemographic characteristics, biomarkers, and imaging reports (not radiomics), add variables such as PM2.5 and PM10 to the model, and analyze their correlation with the disease (here, myocardial fibrosis).

However, this type of correlation analysis, in use since the 1970s, has fundamental flaws. Medical research needs to explore causality, but traditional methods can only detect correlations among preset variables and cannot find new risk factors that were not pre-screened into the model, which leads to a "chicken and egg" paradox. In addition, traditional correlation analysis has difficulty handling variable interactions: it can usually analyze interactions among only two or three factors, cannot accommodate hundreds or thousands of variables, and cannot take image data directly as input.
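One way to see why hand-specified interactions stop scaling is simply to count them. The short sketch below is an illustration added here, not part of the talk, and `interaction_terms` is a hypothetical helper name. It counts how many two-way and three-way interaction terms a regression model would need for a given number of variables.

```python
from math import comb


def interaction_terms(n_variables: int, max_order: int) -> int:
    """Count all interaction terms from 2-way up to max_order-way."""
    return sum(comb(n_variables, k) for k in range(2, max_order + 1))


for p in (10, 100, 1000):
    print(p, interaction_terms(p, 2), interaction_terms(p, 3))
# 10 45 165
# 100 4950 166650
# 1000 499500 166666500
```

With 1,000 candidate variables, there are already about 500,000 pairwise terms and more than 166 million terms once three-way interactions are included, far more than an analyst can specify by hand.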

In contrast, algorithmic analysis has significant advantages: it can handle multivariate interactions and incorporate massive amounts of data (including images). Through repeated training on tokens (10,000 or even 100 million runs), a risk factor that persists can be considered "causal", which comes closer to the causal relationships that medical research requires.
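The idea of a factor that "persists" across many runs can be illustrated with a much simpler stand-in technique: bootstrap selection frequency. The sketch below is an assumption-laden toy, not the speaker's method. It uses synthetic data, a plain Pearson correlation as the ranking score, and hypothetical function names. It resamples the data many times and records how often each candidate variable ranks among the top scorers.

```python
import random


def pearson(xs, ys):
    """Plain Pearson correlation; returns 0.0 if either input is constant."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    vx = sum((x - mx) ** 2 for x in xs)
    vy = sum((y - my) ** 2 for y in ys)
    if vx == 0 or vy == 0:
        return 0.0
    return cov / (vx * vy) ** 0.5


def selection_frequency(rows, outcome, top_k, n_runs, seed=0):
    """Fraction of bootstrap runs in which each variable ranks in the top_k."""
    rng = random.Random(seed)
    n, p = len(rows), len(rows[0])
    counts = [0] * p
    for _ in range(n_runs):
        idx = [rng.randrange(n) for _ in range(n)]  # resample with replacement
        ys = [outcome[i] for i in idx]
        scores = [abs(pearson([rows[i][j] for i in idx], ys)) for j in range(p)]
        ranked = sorted(range(p), key=lambda j: scores[j], reverse=True)
        for j in ranked[:top_k]:
            counts[j] += 1
    return [c / n_runs for c in counts]


# Synthetic data: only variable 0 actually drives the outcome.
data_rng = random.Random(42)
rows = [[data_rng.gauss(0, 1) for _ in range(5)] for _ in range(200)]
outcome = [r[0] + 0.1 * data_rng.gauss(0, 1) for r in rows]
freq = selection_frequency(rows, outcome, top_k=1, n_runs=50)
print(freq)
```

In this toy setting the variable that truly drives the outcome is selected in essentially every run, while the noise variables are not. The sketch only shows what "persisting across repeated runs" means operationally.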

Reconstructing the 4 Elements of Medical AI: Scenario-Prioritized Resource Allocation

Smart healthcare is a new medical model that uses modern information technology to improve and enhance medical services and management, aiming to improve medical efficiency, reduce medical costs, and improve patients' medical experience. Its core foundation is composed of big data, cloud computing, the Internet of Things, and AI.

Traditionally, artificial intelligence is said to have three elements: algorithms, computing power, and data. For medical scenarios, however, we propose a "four-element theory" of algorithms, computing power, data, and application scenarios, weighted at 10%, 30%, 40%, and 20% respectively. Because algorithms differ little between China and other countries and most are open source, they carry the lowest weight; computing power constraints can be eased by leasing cloud computing power; and application scenarios play a supporting role, providing the semantics that convert clinical needs into "tasks" the model can understand. This makes data the decisive factor. China leads the world in the volume of medical data, but its low rate of digitization has left that data an "unmined gold mine". It is estimated that by 2028 the growth of traditional structured medical data worldwide (data collection began in 1550) will struggle to meet the needs of large models, and China will become the core data base for global medical research and development because its historical data has not yet been fully digitized.
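As a rough illustration of how these proportions shift priorities, the sketch below treats the four percentages as weights in a simple weighted sum. Only the weights come from the talk. The 0-to-1 rating scale, the `readiness_score` function, and the example ratings are hypothetical.

```python
# Weights from the "four-element theory"; everything else here is illustrative.
WEIGHTS = {
    "algorithm": 0.10,
    "computing_power": 0.30,
    "data": 0.40,
    "application_scenario": 0.20,
}


def readiness_score(ratings):
    """Weighted sum of per-element ratings, each assumed to lie in [0, 1]."""
    missing = WEIGHTS.keys() - ratings.keys()
    if missing:
        raise ValueError("missing ratings for: " + ", ".join(sorted(missing)))
    return sum(WEIGHTS[name] * ratings[name] for name in WEIGHTS)


# Hypothetical hospital: strong algorithms, weak data governance.
strong_algorithms = {"algorithm": 0.9, "computing_power": 0.5,
                     "data": 0.3, "application_scenario": 0.8}
# Same hospital after improving data quality only.
better_data = dict(strong_algorithms, data=0.8)

print(round(readiness_score(strong_algorithms), 2))  # 0.52
print(round(readiness_score(better_data), 2))        # 0.72
```

Because data carries the largest weight, raising the data rating moves the overall score far more than an equal improvement in algorithms would.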

Two approaches to medical data training

Many people have questions about training large models, such as whether hospital data can be used directly for training. Experience shows that this is not feasible. There are two approaches to training large models.

First, the data requirements of large models far exceed those of clinical research. It is already hard for hospitals to curate data to the standard needed for clinical research, and large model training demands even more. Although large models are capable of unsupervised learning, relying on it alone is like waiting for a doctor to grow into a chief physician naturally: it is too slow to meet practical needs. To speed up training, the model needs to be equipped with doctors' decision trees, so the data cannot simply be fed into the model; it has to be processed and optimized more deeply.

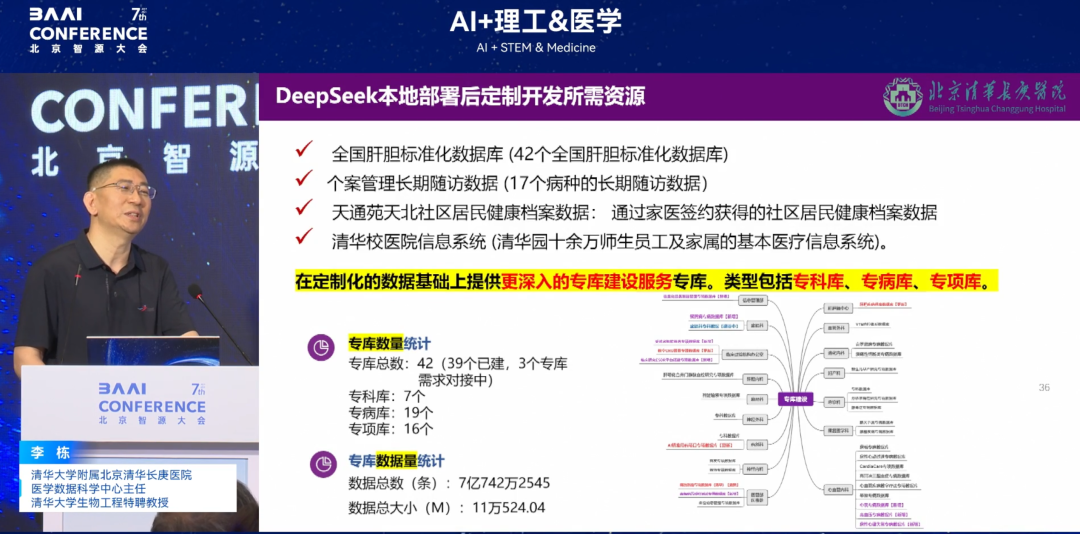

Second, if hospitals want to train large models directly on their own data, they must adopt the tiered data governance model of "general database + specialty database + disease-specific database + project-specific database". This model was developed by integrating the practical experience of several hospitals, including Tiantan Hospital, and is currently considered well suited to large model training. This hierarchical data governance structure supplies large models with high-quality, systematic data in a more targeted way, improving both the effectiveness and the efficiency of training.
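One possible reading of the four-tier governance model described above is a tree in which each tier is a narrower, better-curated subset of the tier above it. The sketch below makes that assumption explicit. The `DataLibrary` class, the `route` function, the record fields, and the example tiers are all hypothetical and do not describe any hospital's actual schema.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Tuple

Record = Dict[str, str]


@dataclass
class DataLibrary:
    name: str
    keep: Callable[[Record], bool]  # inclusion rule for this tier
    children: List["DataLibrary"] = field(default_factory=list)

    def add_child(self, child: "DataLibrary") -> "DataLibrary":
        self.children.append(child)
        return child


def route(records: List[Record], library: DataLibrary,
          path: Tuple[str, ...] = ()) -> Dict[str, List[Record]]:
    """Assign records to every tier; each child only sees its parent's records."""
    kept = [r for r in records if library.keep(r)]
    here = path + (library.name,)
    result = {" > ".join(here): kept}
    for child in library.children:
        result.update(route(kept, child, here))
    return result


# Toy records with made-up fields.
records = [
    {"department": "cardiology", "diagnosis": "atrial fibrillation", "has_ct": "yes"},
    {"department": "cardiology", "diagnosis": "atrial fibrillation", "has_ct": "no"},
    {"department": "cardiology", "diagnosis": "heart failure", "has_ct": "yes"},
    {"department": "endocrinology", "diagnosis": "type 2 diabetes", "has_ct": "no"},
]

general = DataLibrary("general", lambda r: True)
specialty = general.add_child(
    DataLibrary("cardiology", lambda r: r["department"] == "cardiology"))
disease = specialty.add_child(
    DataLibrary("atrial fibrillation", lambda r: r["diagnosis"] == "atrial fibrillation"))
disease.add_child(DataLibrary("AF CT project", lambda r: r["has_ct"] == "yes"))

tiers = route(records, general)
for tier_path, kept in tiers.items():
    print(tier_path, len(kept))
```

Each tier narrows the one above it, so a training job for a specific project can draw on a small, well-defined subset rather than the whole hospital record store.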

Cardiovascular and diabetes research: a model for data-driven innovation

Finally, let me briefly talk about two studies we conducted based on smart healthcare.

Cardiovascular AI: From “wearable devices” to “whole heart models”

According to Statista's forecast of the global smart healthcare market size in 2025, the cardiovascular field accounts for a quarter, making it the largest market segment. Digitalization runs through the acute and recovery stages of cardiovascular diseases.

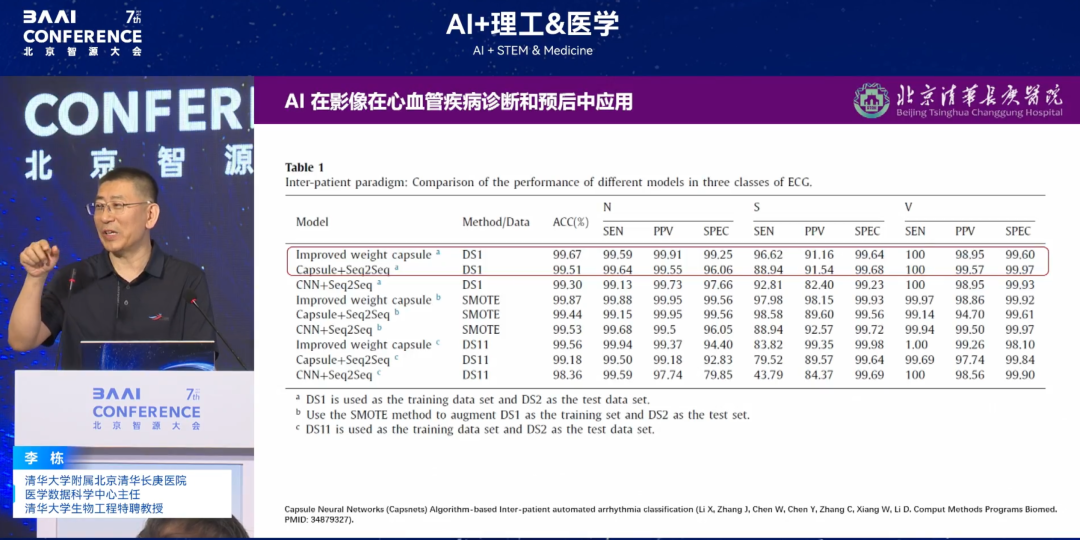

After the first ECG-capable Apple Watch was launched, its single-lead ECG achieved more accurate predictions than a twelve-lead ECG and could identify atrial fibrillation (AFib) and other types of arrhythmia in the wearer, an innovation in primary care. Inspired by this, our team proposed a hypothesis: "Since electrocardiogram (ECG) waveforms from wearable devices can predict arrhythmia early, can other wearable devices without an ECG function achieve the same effect using heart rate alone?" After a series of validations, we found that they can, with an accuracy of up to 99.67%. Our team used the beats-per-minute readings that ordinary sports bracelets collected over 24 hours to predict the duration of arrhythmia.

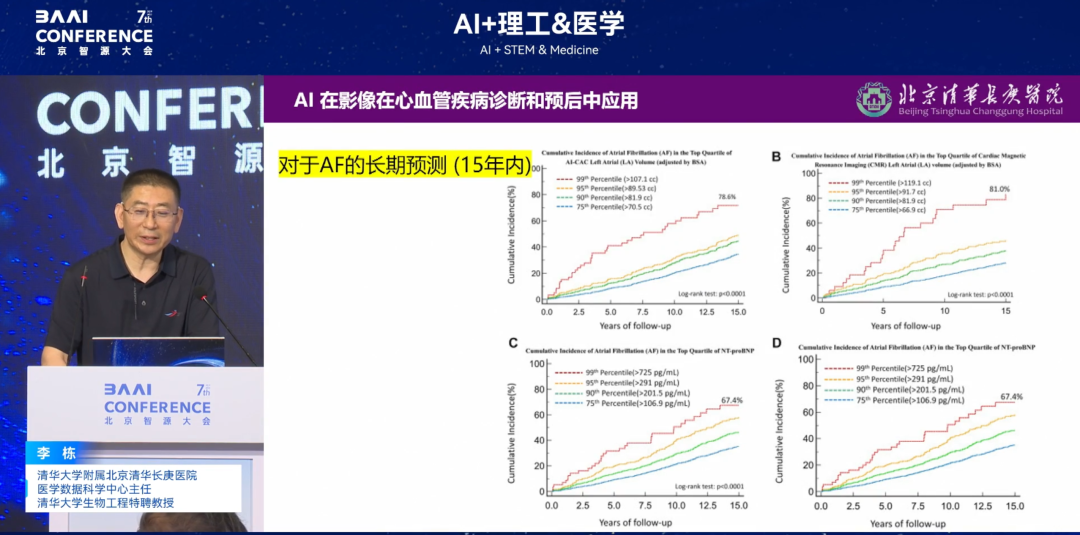

Going further, we proposed a second hypothesis: "Beyond ECG waveforms and heart rate, can arrhythmias be predicted early by other means? Is the contraction and relaxation of the four chambers of the heart involved in arrhythmias? If so, can it be used for prediction?" After further validation, we built a "whole heart model" that integrates multi-dimensional data on the cardiovascular system, nerves, and muscles and uses algorithms to "package" the heart. The final results show that integrating all cardiac function data can accurately predict arrhythmia risk up to 15 years ahead. The results were published in a JACC sub-journal (impact factor 24+).

* Paper title: AI-Enabled CT Cardiac Chamber Volumetry Predicts Atrial Fibrillation and Stroke Comparable to MRI

* Paper address: https://www.jacc.org/doi/abs/10.1016/j.jacadv.2024.101300

Diabetes research: from "complication spectrum" to "causal mechanism"

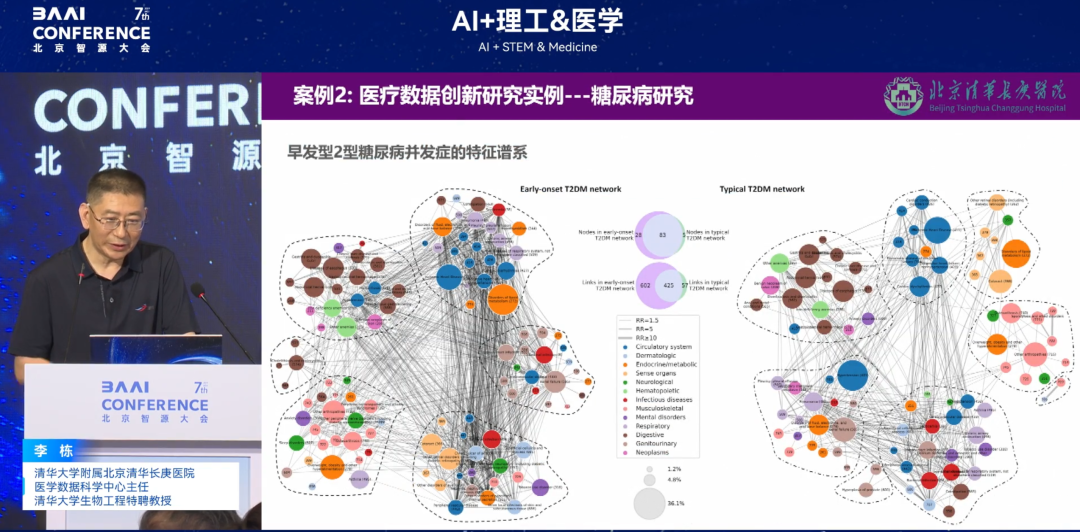

Another study is a disease network analysis based on a large model. It was previously believed that early-onset diabetes (onset before the age of 40) is milder than normal-onset diabetes. For example, a person who develops diabetes at 20 may have normal blood pressure and blood lipids and no complications at 30, while a person who develops diabetes at 40 may have abnormal indicators and other diseases by 50. However, by studying the whole-body spectrum of diabetes complications, we found that the complications of early-onset diabetes interact more densely across body systems and show directional pathway associations, which contradicts the conventional view.

(Left: early-onset diabetes; right: normal-onset diabetes; each colored circle represents a different body system)

Future Outlook: A New Paradigm for Healthcare in the Era of Data Intelligence

In recent years, China's medical AI has been accelerating. As Academician Li Guojie said, "Humanity is now in the intelligent stage of the information age and is moving towards the intelligent era. The intelligent scientific research paradigm has emerged and can become the 'fifth scientific research paradigm'. We cannot misjudge the times. If we miss the opportunity presented by this transformation, we will suffer a historic dimensionality-reduction blow."

In the future, we need to work on the following areas:

* Doctor level: Data-driven research is an inevitable trend, and interdisciplinary cooperation (combining medicine and engineering) is a necessary condition for innovative research using data. Cultivating talent skilled in both medicine and data is a top priority. Doctors need to master some AI knowledge (such as model evaluation and data interpretation) to collaborate better with algorithm engineers and data scientists and improve the effectiveness of AI in healthcare.

* Algorithm level: Today's data-driven models face high training costs. In the future, we hope to develop lightweight models better suited to medical scenarios, lowering the computing power threshold and improving the interpretability and trustworthiness of algorithms in clinical use. In particular, this should increase doctors' and patients' acceptance of AI and integrate AI into medical care.

* Hospital level: When you lack good research ideas and are at a loss for how to innovate, you might as well start from the data and make good use of the latest research methods from information science. Hospitals should therefore encourage and strongly support this work. Scientific research data rooms should be equipped with the corresponding computing, storage, network, security, and other infrastructure to provide key data-level services for medical innovation.

Although large models are not a panacea, the data thinking behind them is reshaping the essence of medicine. When we truly learn to tell stories with data and find answers with algorithms, we can deeply integrate "data intelligence + medical essence", seize the initiative in medical innovation, and make smart medicine truly serve patients and give back to society.

About Professor Li Dong

Professor Li Dong, MD, is an internationally renowned expert in medical data science. He is currently the director of the Medical Data Science Center of Beijing Tsinghua Chang Gung Hospital affiliated to Tsinghua University and a distinguished professor of bioengineering at Tsinghua University. Professor Li Dong served as the first Chinese director of the Clinical Research Center at the Harbor Medical Center of the University of California, Los Angeles, and was hired as a distinguished professor at the West China Hospital of Sichuan University.

Professor Li Dong has published more than 100 SCI papers in top international academic journals, which have been cited nearly 4,000 times in the past five years. He has also published more than 220 academic conference abstracts. In addition, he has been invited to give more than 40 academic lectures, participated in the writing of 4 academic monographs, and has 2 invention patents.

His research covers a wide range of areas, including clinical research design, measurement and evaluation, modeling analysis, medical data mining, and the application of artificial intelligence in medicine. He has extensive experience in leading clinical research teams to conduct medical big data mining and develop intelligent medical decision analysis systems, and is a recognized authority in this field.