Online Tutorial | CVPR 2025 Best Paper: Universal 3D Vision Model VGGT Runs Inference in Seconds

On June 13, CVPR 2025, one of the world's top three computer vision conferences, announced its best paper awards. According to official figures, CVPR 2025 received 13,008 submissions from more than 40,000 authors, a 13% increase over last year. The conference accepted 2,872 papers, for an overall acceptance rate of approximately 22.1%.
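These figures are easy to sanity-check. The short Python snippet below recomputes the acceptance rate from the quoted counts and, as a rough illustration only, backs out the previous year's submission count implied by the rounded 13% growth figure. That second number is an estimate derived here, not an official statistic.

```python
# Counts quoted in the article for CVPR 2025.
submitted = 13_008
accepted = 2_872
growth = 0.13  # "a 13% increase over last year" (a rounded figure)

# Acceptance rate = accepted / submitted.
acceptance_rate = accepted / submitted
print(f"Acceptance rate: {acceptance_rate:.1%}")

# Rough estimate of last year's submissions implied by the rounded growth rate.
# Not an official figure: the article does not state last year's count.
implied_previous = submitted / (1 + growth)
print(f"Implied previous-year submissions: about {implied_previous:,.0f}")
```

The first line prints an acceptance rate of 22.1%, matching the official figure.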

Of the nearly 3,000 accepted papers, only one received the Best Paper award, which shows how rare the honor is. The winning work is VGGT, a general-purpose 3D vision model built on a pure feed-forward Transformer architecture and jointly proposed by the University of Oxford and Meta AI. Unlike previous models, each limited to a single task, VGGT directly infers all key 3D attributes of a scene from one, a few, or hundreds of views, including camera parameters, point maps, depth maps, and 3D point tracks.

* Paper title: "VGGT: Visual Geometry Grounded Transformer"

* Paper link: https://go.hyper.ai/Nmgxd

More importantly, the method is simple and efficient: it can reconstruct a scene from images in under 1 second, and it still outperforms alternatives that rely on post-processing with visual geometry optimization techniques. Experiments show that VGGT achieves state-of-the-art (SOTA) performance on multiple 3D tasks, including camera parameter estimation, multi-view depth estimation, dense point cloud reconstruction, and 3D point tracking.
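VGGT's outputs are geometrically linked: given a depth map plus the camera's intrinsics and extrinsics, each pixel can be back-projected into a 3D point, which yields a point map in world coordinates. The Python sketch below illustrates that standard pinhole unprojection. It is not VGGT's code; the function name `unproject_depth` is hypothetical, and it assumes OpenCV-style camera-from-world extrinsics (`x_cam = R @ x_world + t`) with depth measured along the camera's z-axis.

```python
from typing import List, Sequence, Tuple

Matrix3 = Sequence[Sequence[float]]
Vec3 = Tuple[float, ...]


def unproject_depth(
    depth: Sequence[Sequence[float]],
    K: Matrix3,
    R: Matrix3,
    t: Sequence[float],
) -> List[List[Vec3]]:
    """Back-project an H x W depth map into an H x W world-space point map.

    K    -- 3x3 intrinsics [[fx, 0, cx], [0, fy, cy], [0, 0, 1]]
    R, t -- camera-from-world extrinsics (assumed OpenCV convention), so
            x_cam = R @ x_world + t and hence x_world = R^T (x_cam - t)
    """
    fx, fy = K[0][0], K[1][1]
    cx, cy = K[0][2], K[1][2]
    points: List[List[Vec3]] = []
    for v, row in enumerate(depth):      # v: pixel row (y)
        out_row: List[Vec3] = []
        for u, d in enumerate(row):      # u: pixel column (x)
            # Pixel -> camera coordinates with the pinhole model.
            xc = (u - cx) * d / fx
            yc = (v - cy) * d / fy
            zc = d
            # Camera -> world: subtract t, then multiply by R transposed.
            p = (xc - t[0], yc - t[1], zc - t[2])
            xw = tuple(sum(R[k][i] * p[k] for k in range(3)) for i in range(3))
            out_row.append(xw)
        points.append(out_row)
    return points


if __name__ == "__main__":
    identity = [[1, 0, 0], [0, 1, 0], [0, 0, 1]]
    K = [[1.0, 0.0, 0.5], [0.0, 1.0, 0.5], [0.0, 0.0, 1.0]]
    depth = [[2.0, 2.0], [2.0, 2.0]]
    pts = unproject_depth(depth, K, identity, (0.0, 0.0, 0.0))
    print(pts[0][0])  # (-1.0, -1.0, 2.0)
```

In practice this would be vectorized (for example with NumPy) rather than looped pixel by pixel; plain lists keep the example dependency-free.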

The tutorial section of HyperAI (hyper.ai) now offers "VGGT: A Universal 3D Vision Model", which can be deployed with one click. Come and see what this breakthrough model can do⬇️

* Tutorial link: https://go.hyper.ai/GX3bC

Running the Demo

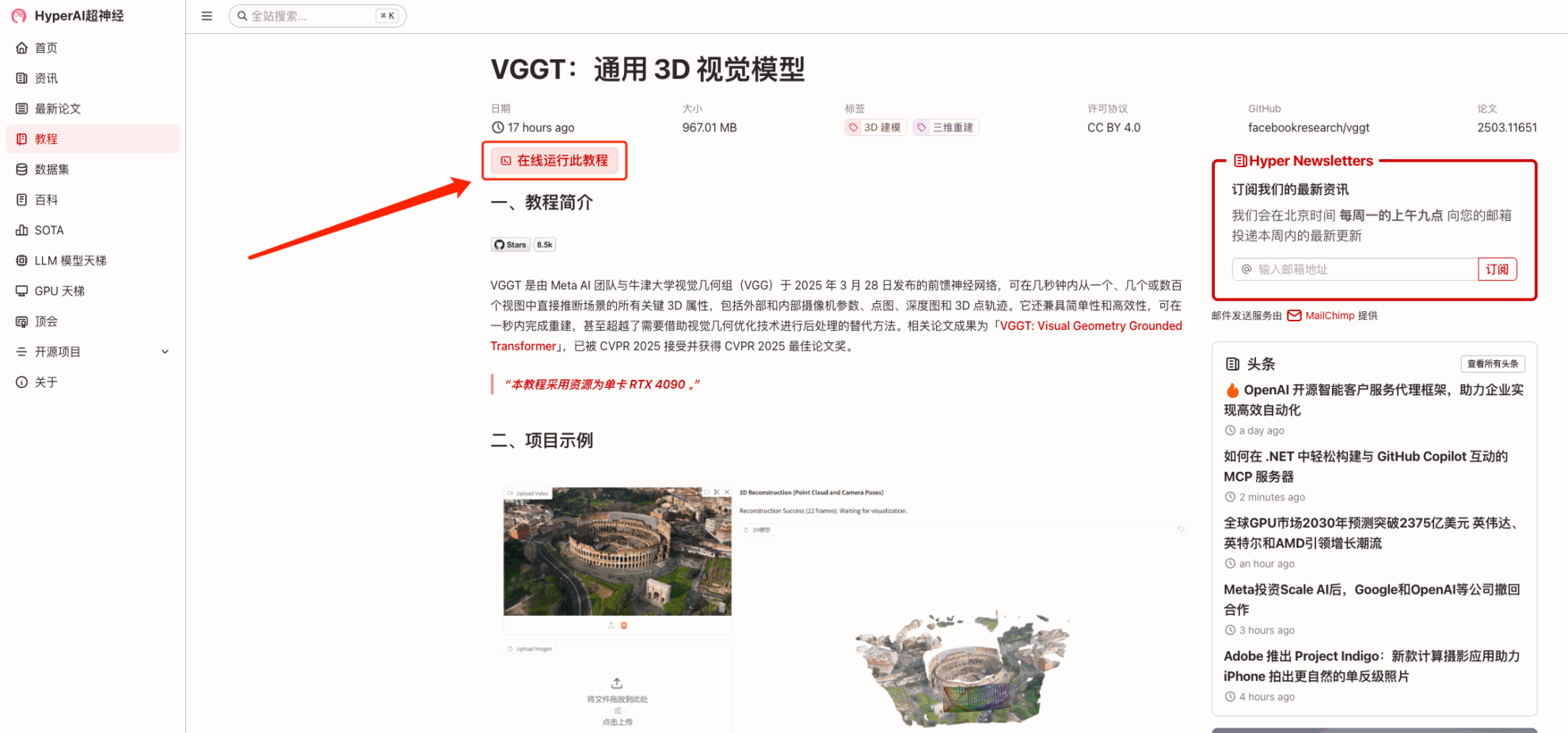

1. On the hyper.ai homepage, open the "Tutorial" page, select "VGGT: General 3D Vision Model", and click "Run this tutorial online".

2. Once the new page opens, click "Clone" in the upper right corner to clone the tutorial into your own container.

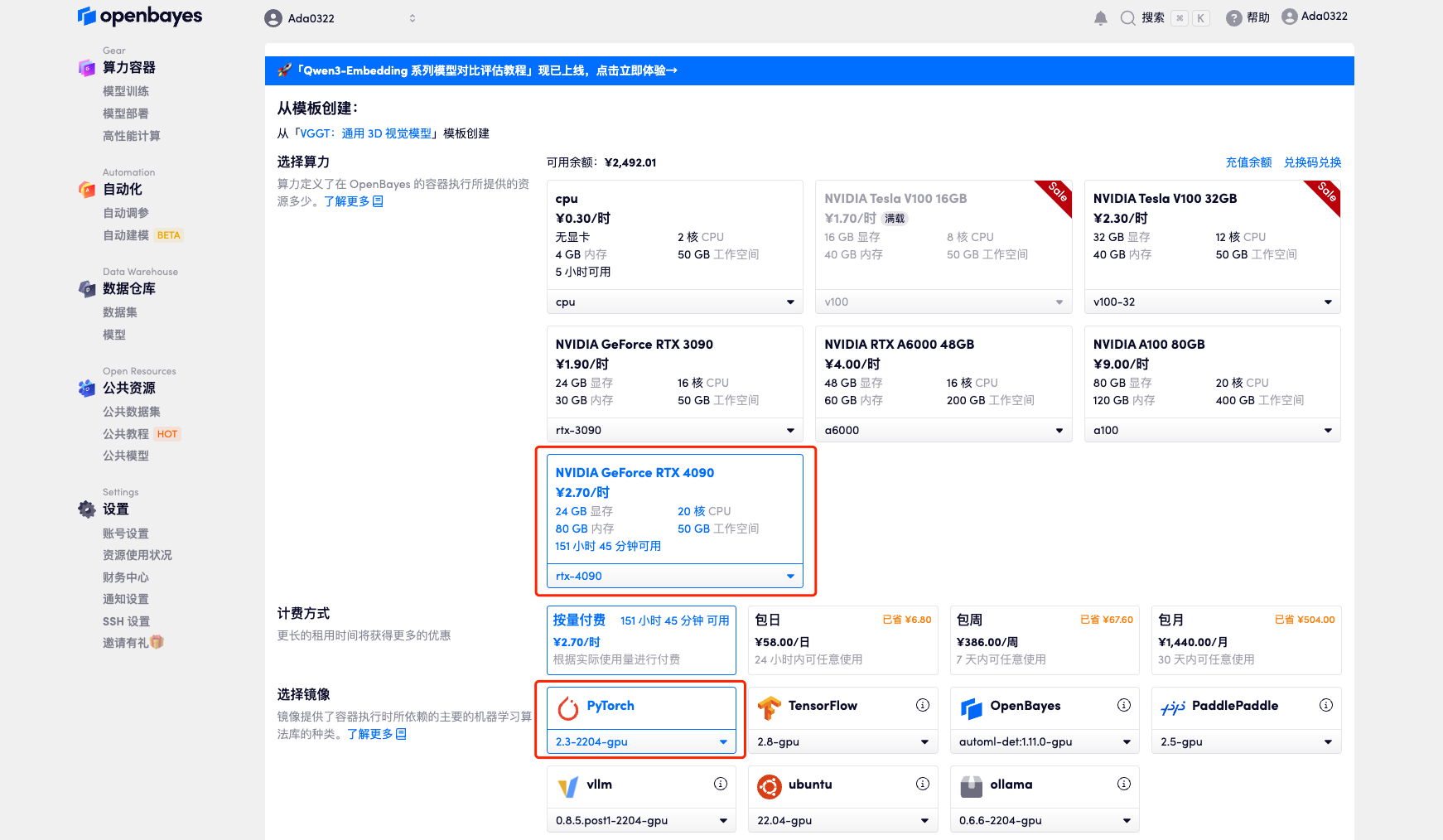

3. Select the "NVIDIA RTX 4090" compute resource and the "PyTorch" image. The OpenBayes platform offers four billing methods; choose "Pay as you go" or "Pay per day/week/month" as needed, then click "Continue". New users who register through the invitation link below get 4 hours of free RTX 4090 time plus 5 hours of free CPU time!

HyperAI exclusive invitation link (copy and open in browser):

https://openbayes.com/console/signup?r=Ada0322_NR0n

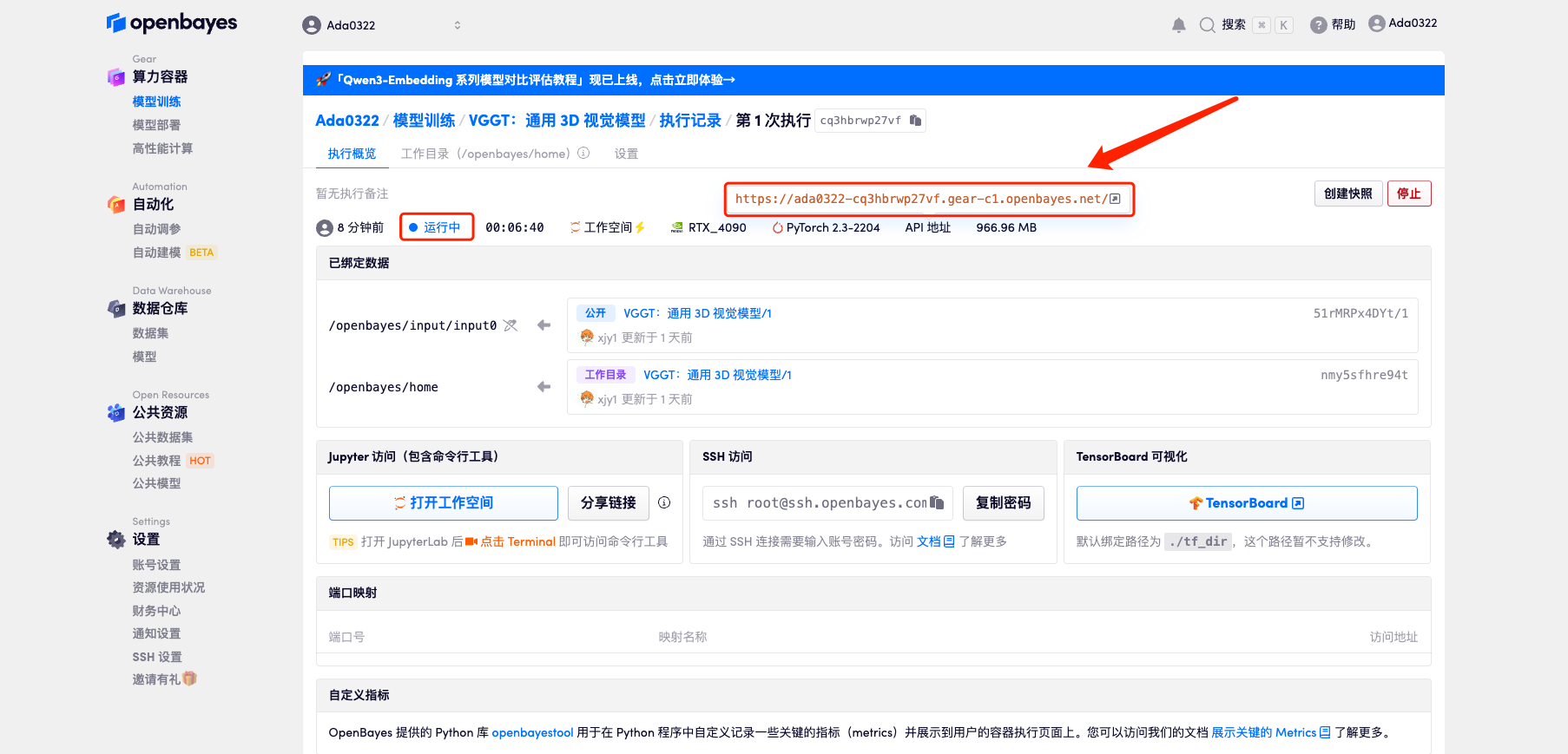

4. Wait for resources to be allocated; the first clone takes about 2 minutes. When the status changes to "Running", click the jump arrow next to "API Address" to open the demo page. Because the model is large, the WebUI takes about 3 minutes to appear; if you open the page before then, it will show "Bad Gateway". Note that you must complete real-name verification before you can access the API address.

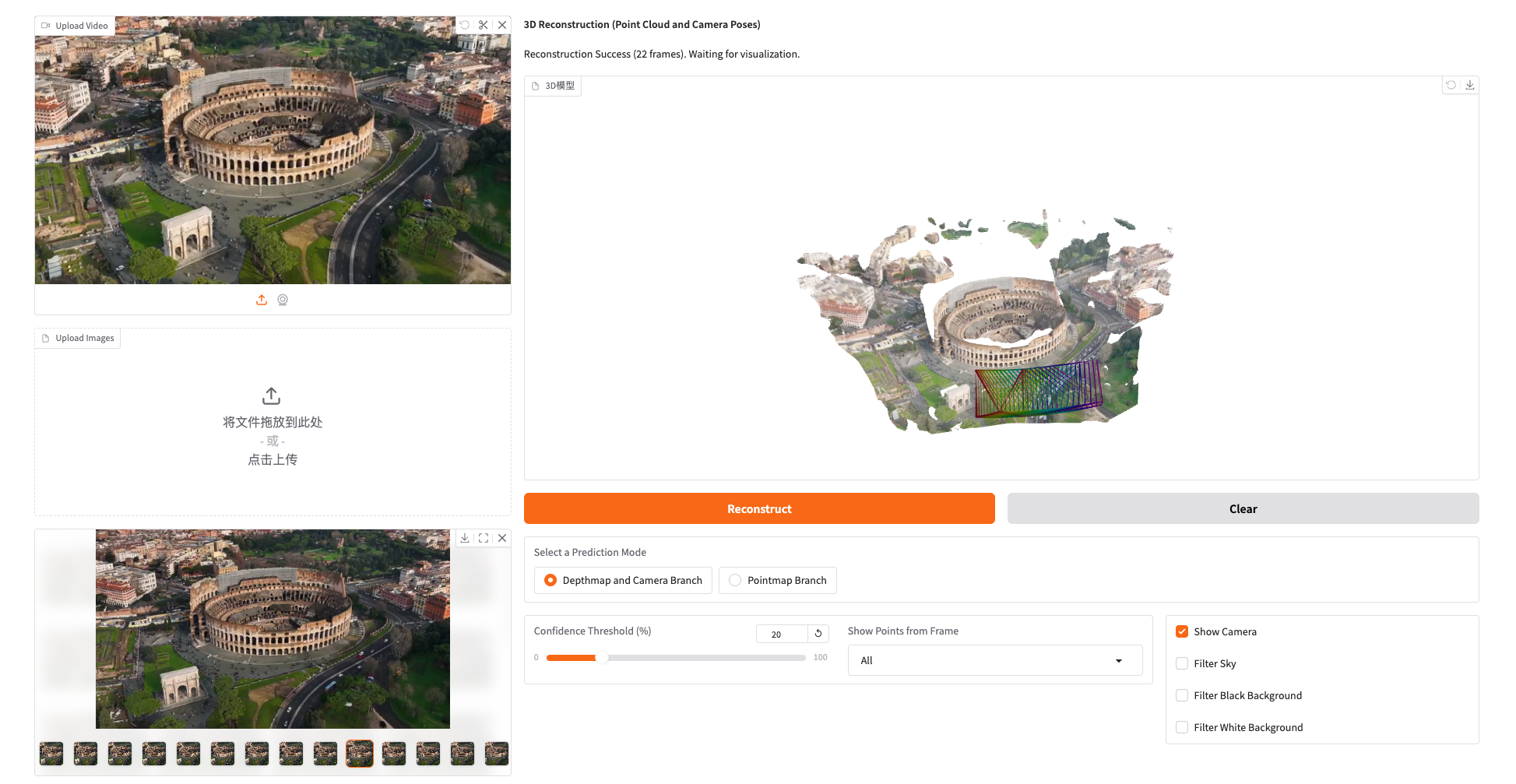
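Rather than refreshing the page by hand until "Bad Gateway" goes away, you could poll the API address from a script. The Python sketch below is a hypothetical helper, not part of the OpenBayes or HyperAI tooling: `http_status` and `wait_until_ready` are names introduced here, and it assumes the WebUI answers a plain GET with a 2xx status once it is up. Because the API address requires real-name verification and may also require a logged-in session, an unauthenticated request could keep failing even after the WebUI is ready, so treat this as a starting point.

```python
import time
import urllib.error
import urllib.request
from typing import Callable, Optional


def http_status(url: str, timeout: float = 10.0) -> Optional[int]:
    """Return the HTTP status of a GET on url, or None if it is unreachable."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            return resp.status
    except urllib.error.HTTPError as err:
        return err.code  # e.g. 502 "Bad Gateway" while the model is loading
    except (urllib.error.URLError, OSError):
        return None  # connection refused, DNS failure, or timeout


def wait_until_ready(
    probe: Callable[[], Optional[int]],
    timeout_s: float = 300.0,
    interval_s: float = 10.0,
    sleep: Callable[[float], None] = time.sleep,
    clock: Callable[[], float] = time.monotonic,
) -> bool:
    """Call probe() until it returns a 2xx status (True) or timeout_s elapses (False)."""
    deadline = clock() + timeout_s
    while True:
        status = probe()
        if status is not None and 200 <= status < 300:
            return True
        if clock() >= deadline:
            return False
        sleep(interval_s)


# Example (hypothetical placeholder URL; substitute your own API address):
# wait_until_ready(lambda: http_status("https://<your-api-address>"))
```

The probe, sleep, and clock are passed in as parameters so the polling logic can be tested without a network connection.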

Trying It Out

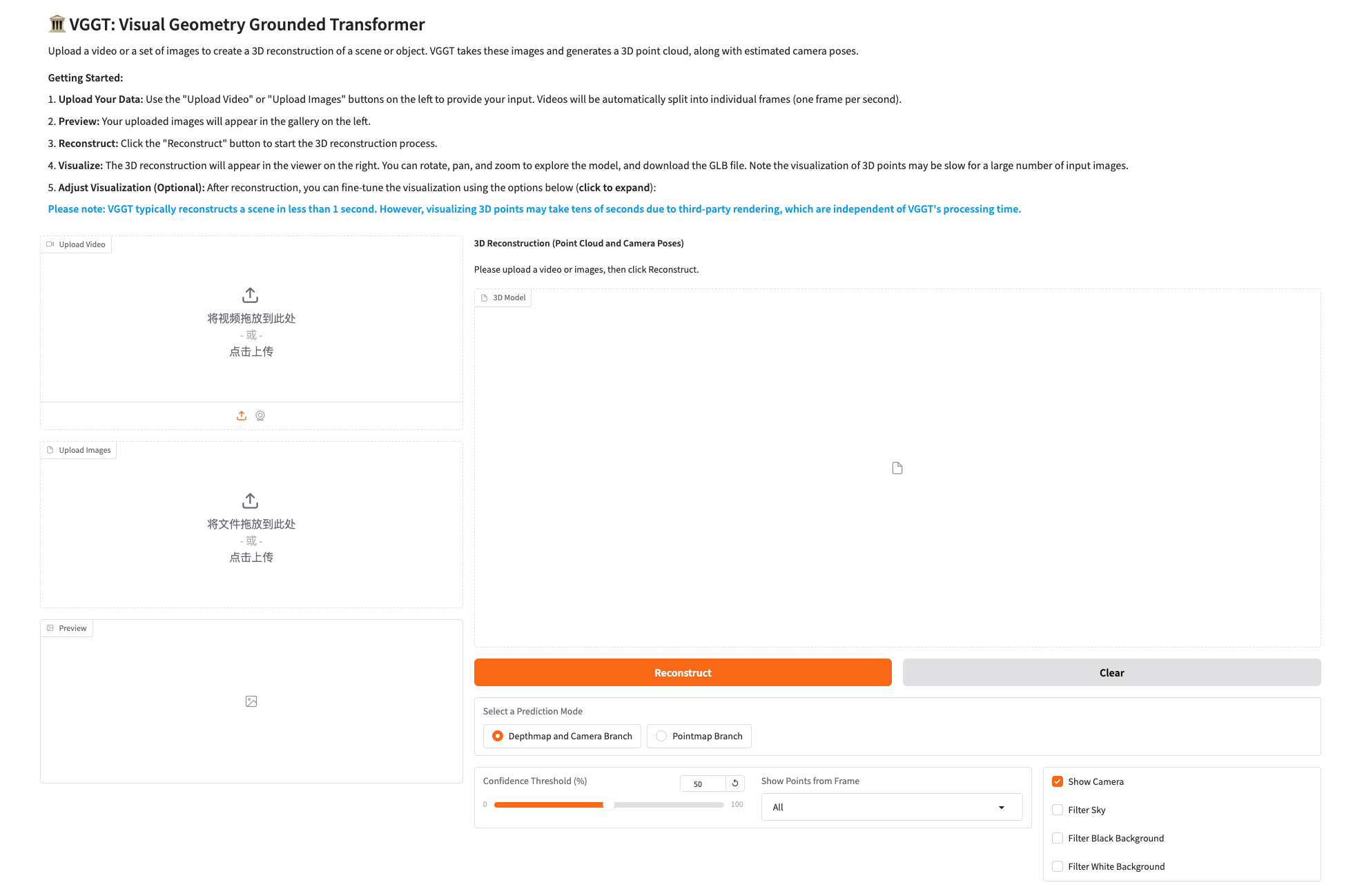

1. Click the API address to open the demo page and try the model, as shown below:

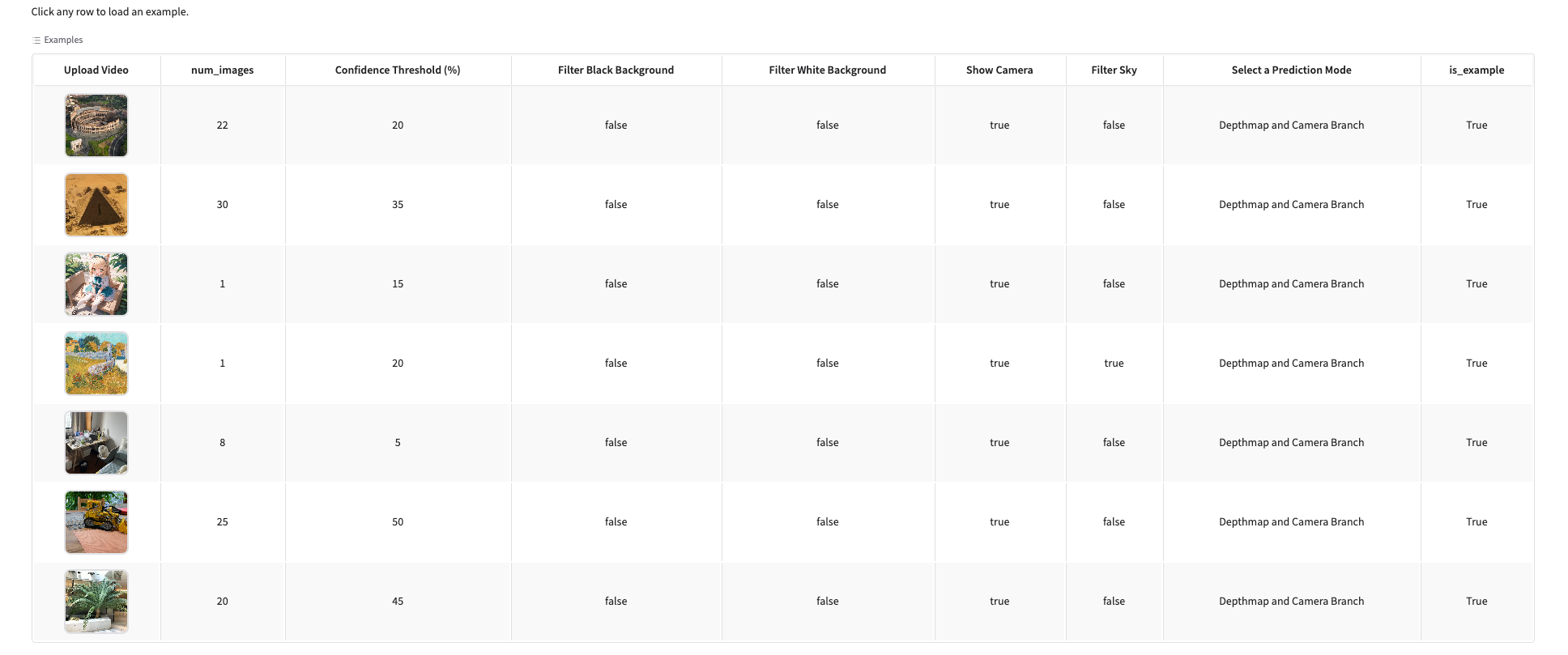

2. The tutorial includes ready-made examples; click any of them to see the model's output directly.

That's all for this issue's recommended tutorial. Give it a try yourself⬇️

* Tutorial link: https://go.hyper.ai/GX3bC