
Online Tutorial | National University of Singapore Show Lab Releases OmniConsistency Model for Plug-and-Play Image Style Transfer

Image stylization uses a stylization model to transform an image from one style to another while keeping its semantic content unchanged. In recent years, with the emergence of diffusion models, mainstream image stylization has shifted from earlier deep-neural-network approaches to diffusion models fine-tuned with low-rank adaptation (LoRA). Combined with image consistency modules, these methods have significantly improved stylization quality.
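To make the LoRA idea concrete, here is a minimal pure-Python sketch of a single low-rank-adapted linear layer, assuming the standard formulation y = Wx + (α/r)·BAx. The toy matrices, the names `lora_forward`, `lora_param_count`, `A_style1`/`B_style1`, and the scaling convention are illustrative assumptions; this is not the actual code used by Flux or OmniConsistency.

```python
# Minimal LoRA sketch in pure Python (illustrative only, not Flux/OmniConsistency code).
# A frozen weight W (d_out x d_in) is adapted by a low-rank product B @ A,
# where A is (rank x d_in), B is (d_out x rank) and rank << min(d_in, d_out).
from typing import List

Matrix = List[List[float]]
Vector = List[float]


def matvec(m: Matrix, v: Vector) -> Vector:
    """Multiply matrix m (a list of rows) by vector v."""
    return [sum(row[j] * v[j] for j in range(len(v))) for row in m]


def lora_forward(w: Matrix, a: Matrix, b: Matrix, x: Vector,
                 alpha: float, rank: int) -> Vector:
    """Return y = W x + (alpha / rank) * B (A x); W itself is never modified."""
    base = matvec(w, x)                 # output of the frozen layer
    low_rank = matvec(b, matvec(a, x))  # rank-limited correction from the adapter
    scale = alpha / rank
    return [yb + scale * yl for yb, yl in zip(base, low_rank)]


def lora_param_count(d_out: int, d_in: int, rank: int) -> int:
    """Trainable values in a rank-`rank` adapter: A holds rank*d_in, B holds d_out*rank."""
    return rank * (d_in + d_out)


# Toy frozen 2x2 weight and two interchangeable rank-1 "style" adapters.
W = [[1.0, 0.0],
     [0.0, 1.0]]
A_style1, B_style1 = [[1.0, 1.0]], [[0.5], [0.0]]
A_style2, B_style2 = [[0.0, 1.0]], [[0.0], [1.0]]

x = [2.0, 3.0]
y1 = lora_forward(W, A_style1, B_style1, x, alpha=1.0, rank=1)
y2 = lora_forward(W, A_style2, B_style2, x, alpha=1.0, rank=1)
print(y1, y2)  # → [4.5, 3.0] [2.0, 6.0]
```

Because W is never modified, swapping in a different (A, B) pair changes the layer's behavior without retraining the base model, which is what makes LoRA styles cheap to train and swap. A rank-r adapter on a d_out × d_in layer stores r·(d_in + d_out) values instead of d_out·d_in; for a 4096 × 4096 layer at rank 16 that is 131,072 versus 16,777,216.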

Although image stylization models have been well received in the market, current methods still face three key challenges. First, consistency between the stylized output and the input is limited: existing consistency modules can ensure overall structural alignment, but they struggle to preserve details and semantic information in complex scenes. Second, style degrades in image-to-image settings: the style fidelity of LoRA and IPAdapter in this setting is usually lower than in text-to-image generation. Third, layout control is inflexible: methods that rely on rigid conditions (such as edges, sketches, and poses) have difficulty supporting creative structural changes such as chibi-style (Q-version) transformations.

To bridge this gap, the Show Lab at the National University of Singapore released OmniConsistency on May 28, 2025, a universal consistency plug-in built on large-scale Diffusion Transformers (DiT). Its design is fully plug-and-play and compatible with arbitrary style LoRAs under the Flux framework, and it achieves robust generalization by learning consistency from stylized image pairs.

Experiments show that OmniConsistency significantly improves visual coherence and aesthetic quality, achieving performance comparable to GPT-4o and closing the gap between open-source and commercial models in style consistency. It offers AI creators a low-cost, highly controllable solution, and its compatibility and plug-and-play design also lower the barrier to entry for developers and creators.

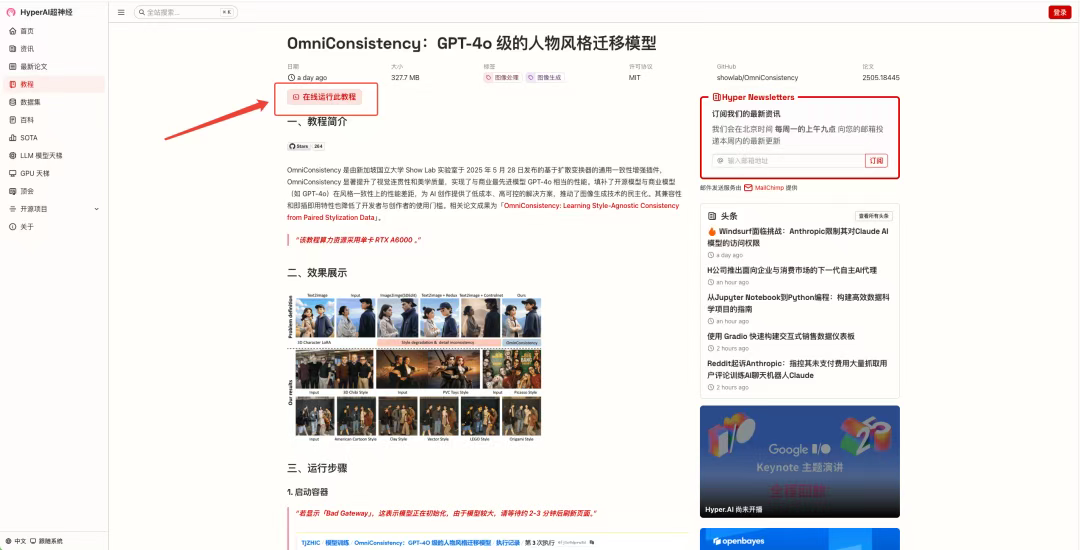

"OmniConsistency: A GPT-4o-level Character Style Transfer Model" is now available in the "Tutorials" section of HyperAI's official website. Click the link below to try the one-click deployment tutorial ⬇️

* Tutorial address: https://go.hyper.ai/3mCyv

We have also prepared a bonus for newly registered users: register on the OpenBayes platform with the invitation code "OmniConsistency" to get 4 hours of free RTX A6000 usage (valid for 1 month). Quantities are limited, so it's first come, first served!

Demo Run

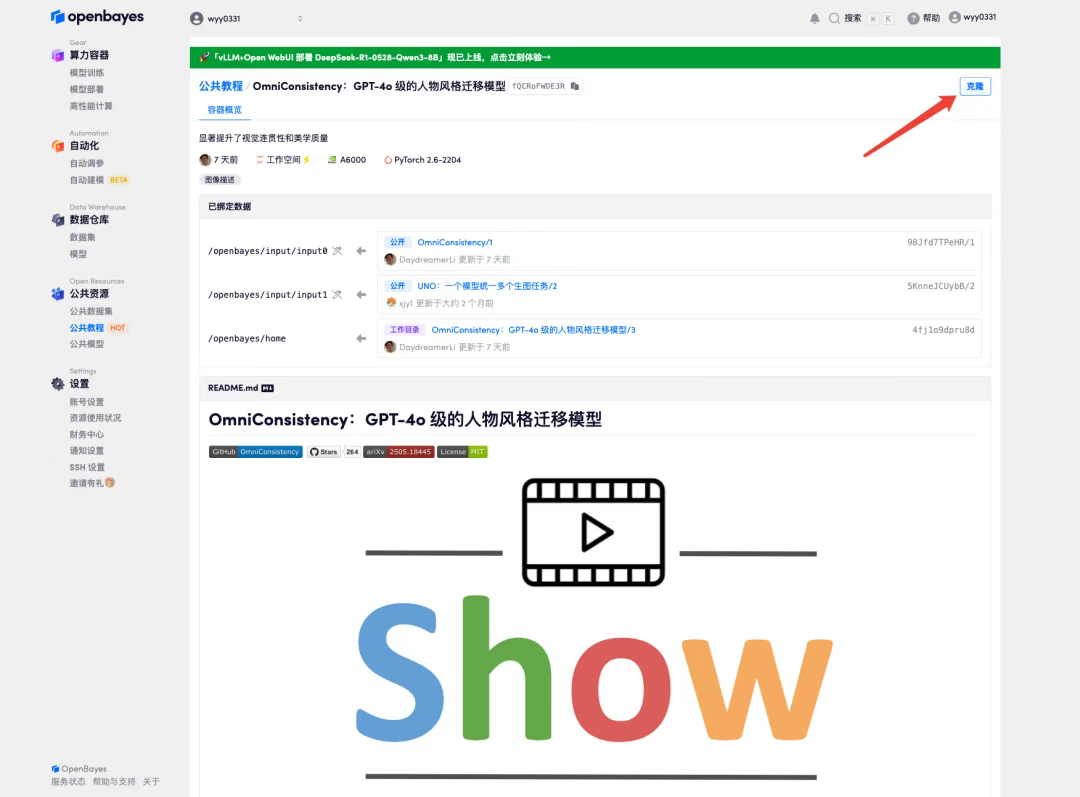

1. On the hyper.ai homepage, open the "Tutorials" page, select "OmniConsistency: GPT-4o-level character style transfer model", and click "Run this tutorial online".

2. When the new page opens, click "Clone" in the upper-right corner to clone the tutorial into your own container.

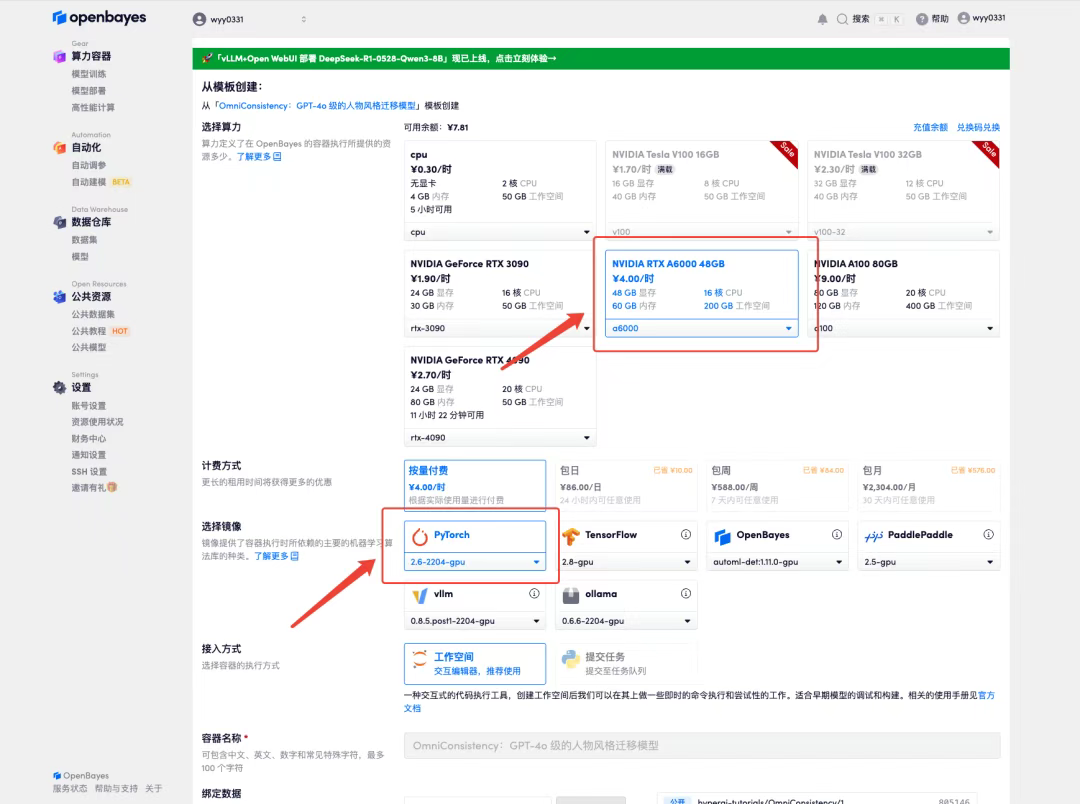

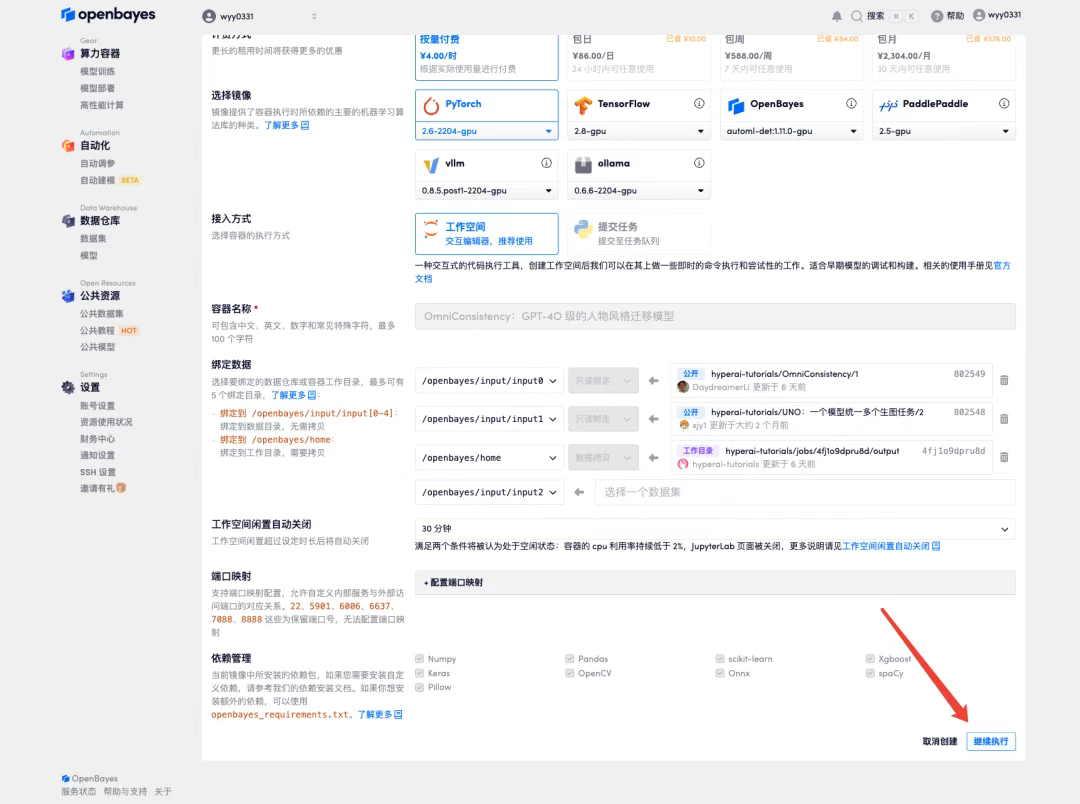

3. Select the "NVIDIA RTX A6000 48GB" GPU and the "PyTorch" image. The OpenBayes platform offers 4 billing methods; choose "Pay as you go" or "Daily/Weekly/Monthly" as needed, then click "Continue". New users who register through the invitation link below get 4 hours of free RTX 4090 time plus 5 hours of free CPU time!

HyperAI exclusive invitation link (copy it and open it in your browser):

https://openbayes.com/console/signup?r=Ada0322_NR0n

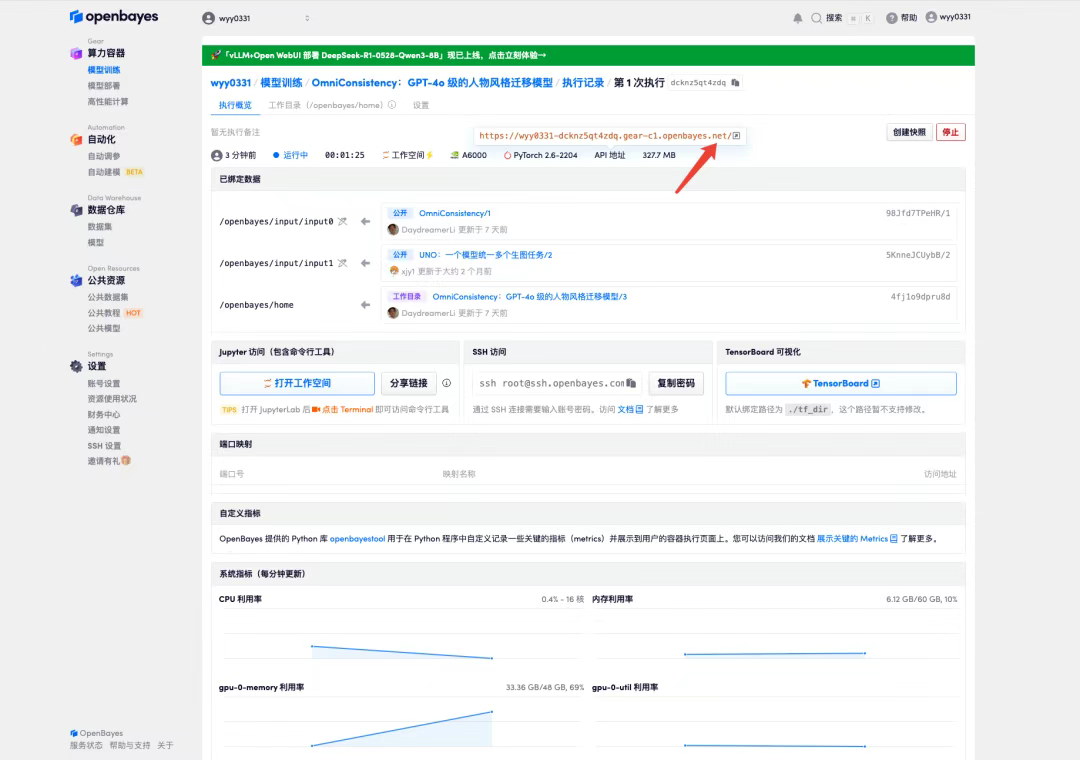

4. Wait for resources to be allocated; the first clone takes about 2 minutes. When the status changes to "Running", click the jump arrow next to "API Address" to open the Demo page. Because the model is large, the WebUI takes about 3 minutes to load; if you open the page before then, it will show "Bad Gateway". Note that users must complete real-name authentication before they can access the API address.
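Step 4 involves waiting until the WebUI replaces the "Bad Gateway" page. If you prefer to check from a script instead of refreshing the browser, the following is a minimal standard-library Python sketch that polls an address until it returns HTTP 200. It assumes the API address is reachable from wherever the script runs and that "Bad Gateway" surfaces as an HTTP 502 status; the function names and the default 300-second limit are hypothetical choices, and the platform's real-name authentication or login requirements still apply.

```python
import time
import urllib.error
import urllib.request
from typing import Callable


def http_status(url: str, timeout: float = 10.0) -> int:
    """Return the HTTP status of a GET request, or 0 if the server is unreachable."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            return resp.status
    except urllib.error.HTTPError as err:
        return err.code   # e.g. 502 (Bad Gateway) while the WebUI is still loading
    except OSError:
        return 0          # DNS failure, refused connection, timeout, etc.


def wait_until_ready(url: str, max_wait: float = 300.0, interval: float = 10.0,
                     fetch: Callable[[str], int] = http_status,
                     sleep: Callable[[float], None] = time.sleep) -> bool:
    """Poll `url` until it answers 200; give up after about `max_wait` seconds of waiting.

    Any 200 response counts as ready, including a login page if the address redirects to one.
    """
    waited = 0.0
    while True:
        if fetch(url) == 200:
            return True
        if waited >= max_wait:
            return False
        sleep(interval)
        waited += interval
```

Call it as `wait_until_ready("<your API address>")`, pasting the address shown next to "API Address". The `fetch` and `sleep` parameters exist only so the polling logic can be tested without a network connection.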

Demo Results

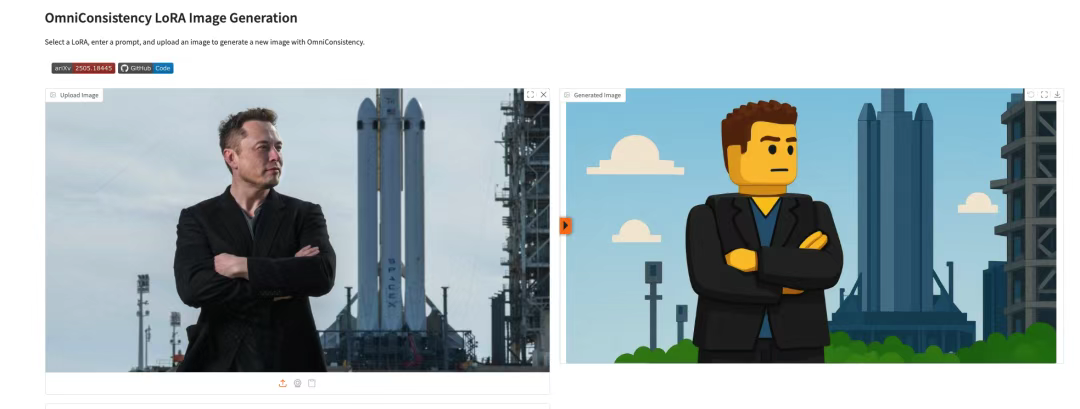

I uploaded a portrait photo and set the "Select built-in LoRA" parameter to LEGO. The result is shown in the figure below.