Online Tutorial: Process an Image in 9 Seconds! In-Context Edit, an Efficient Image Editing Framework, Is Now Available

Existing image editing methods struggle to balance accuracy and efficiency. Fine-tuning methods require substantial computing resources and high-quality datasets, while training-free techniques often fall short on instruction comprehension and editing quality. A research team from Zhejiang University and Harvard University has released In-Context Edit (ICEdit), an instruction-based image editing framework. It can make precise image modifications from just a short text instruction, opening up more possibilities for image processing and content creation.

In-Context Edit addresses the limitations of existing techniques through three key contributions: an in-context editing framework, a LoRA-MoE hybrid tuning strategy, and an early filter inference-time scaling method. Compared with previous methods, it uses only 1% of the trainable parameters (200M) and 0.1% of the training data (50k), yet it generalizes better and handles a wide variety of image editing tasks. Compared with Gemini and GPT-4o, this open-source tool is cheaper and faster (it takes only about 9 seconds to process an image) while still delivering strong performance.

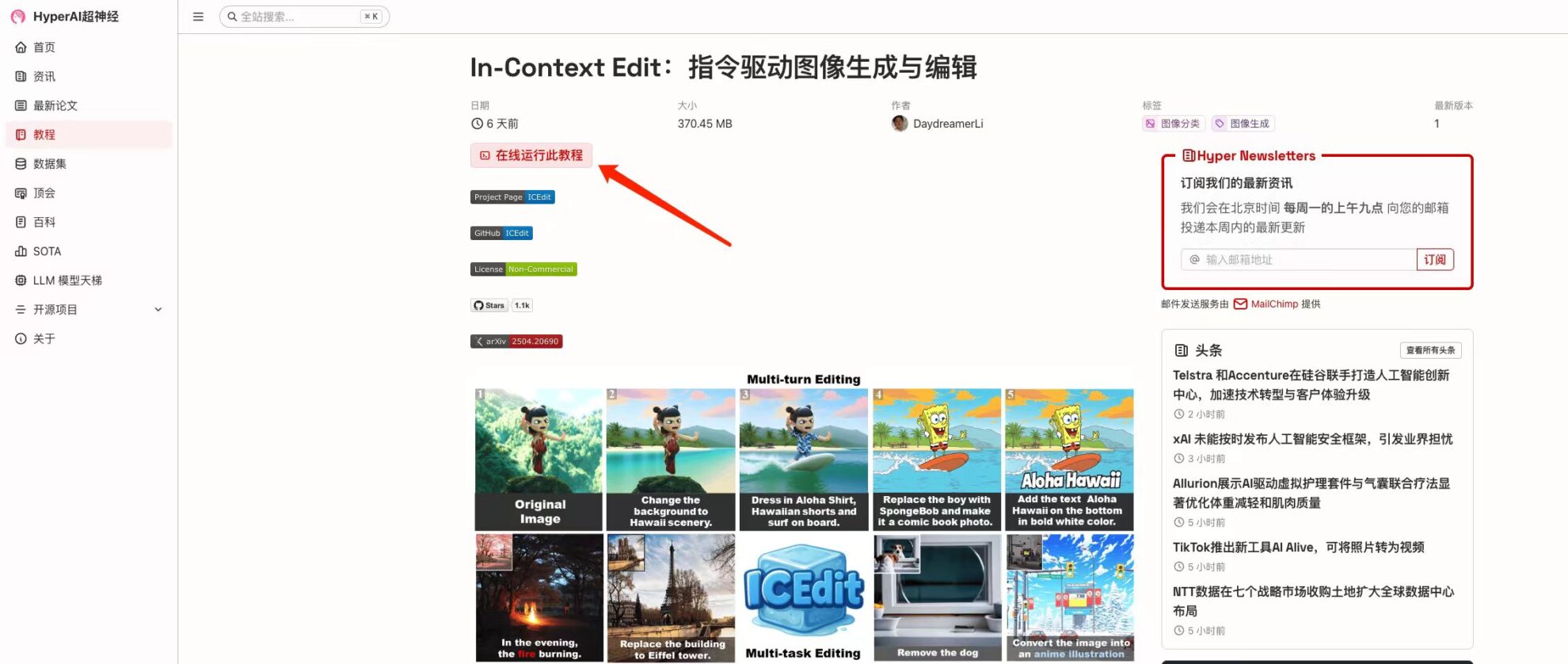

Currently, "In-Context Edit: Command-Driven Image Generation and Editing" is available in the "Tutorials" section of HyperAI's official website. Click the link below to try the one-click deployment tutorial ⬇️

Tutorial Link: https://go.hyper.ai/SHowG

Demo Run

1. On the hyper.ai homepage, open the "Tutorials" page, select "In-Context Edit: Command-Driven Image Generation and Editing", and click "Run this tutorial online".

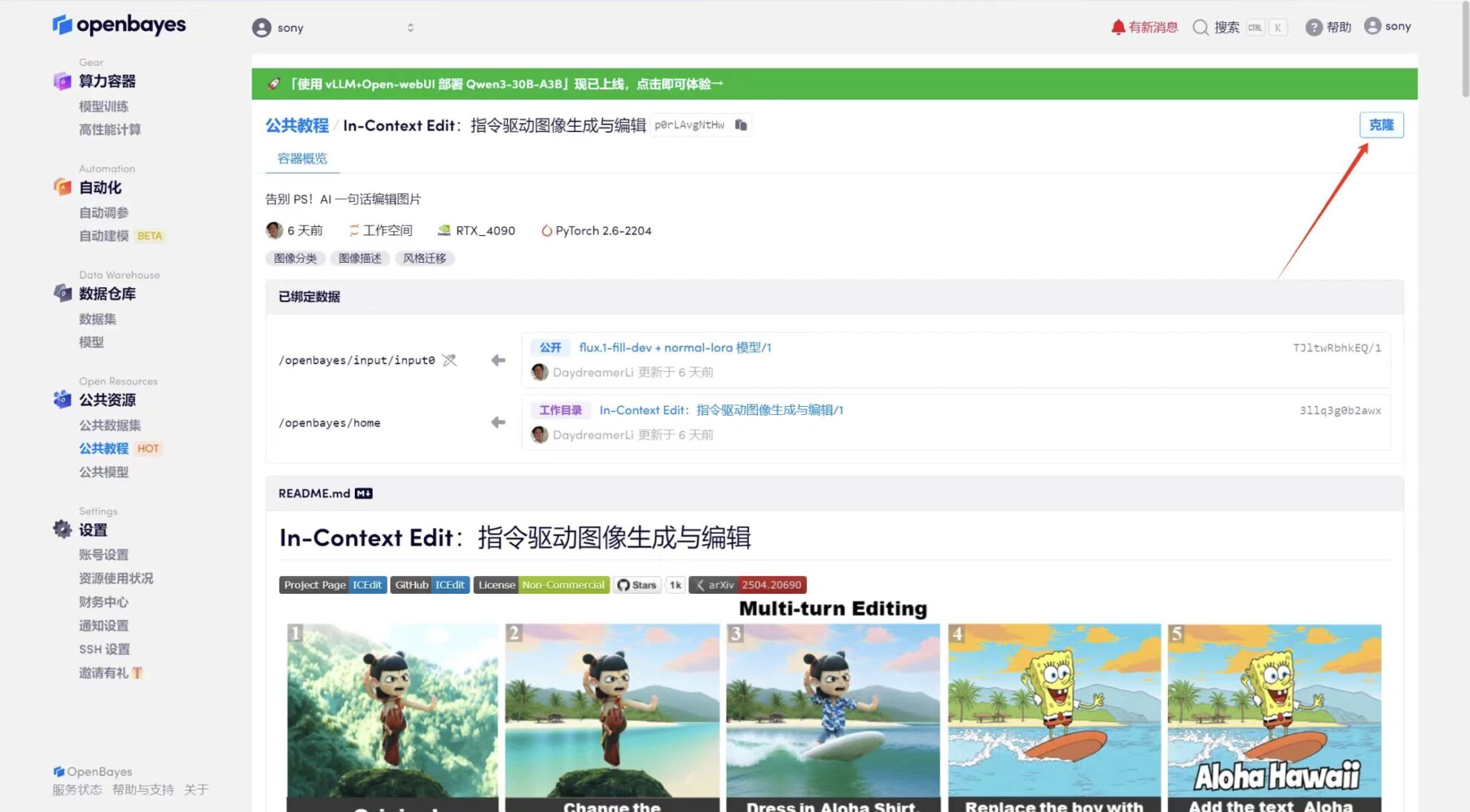

2. After the page redirects, click "Clone" in the upper right corner to clone the tutorial into your own container.

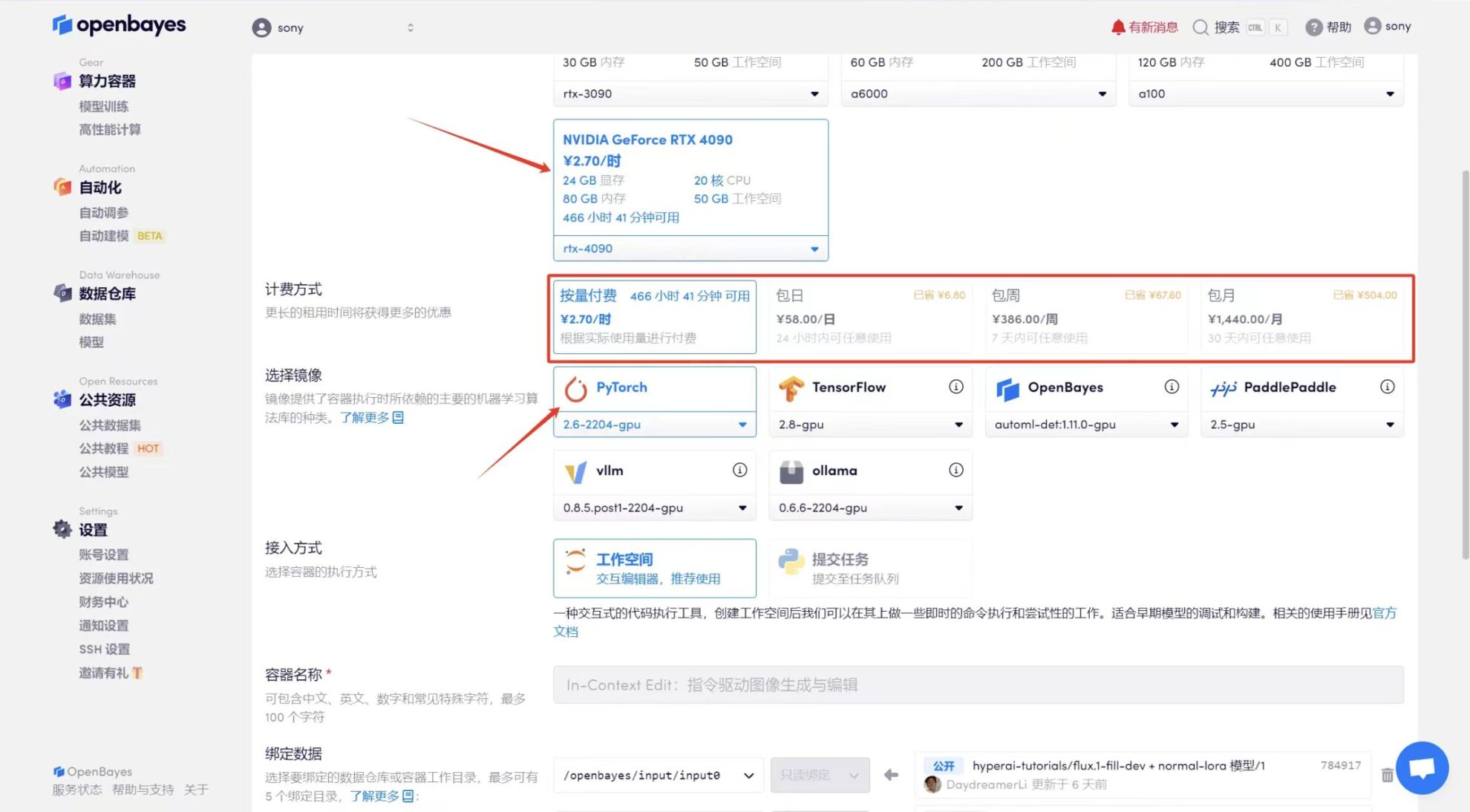

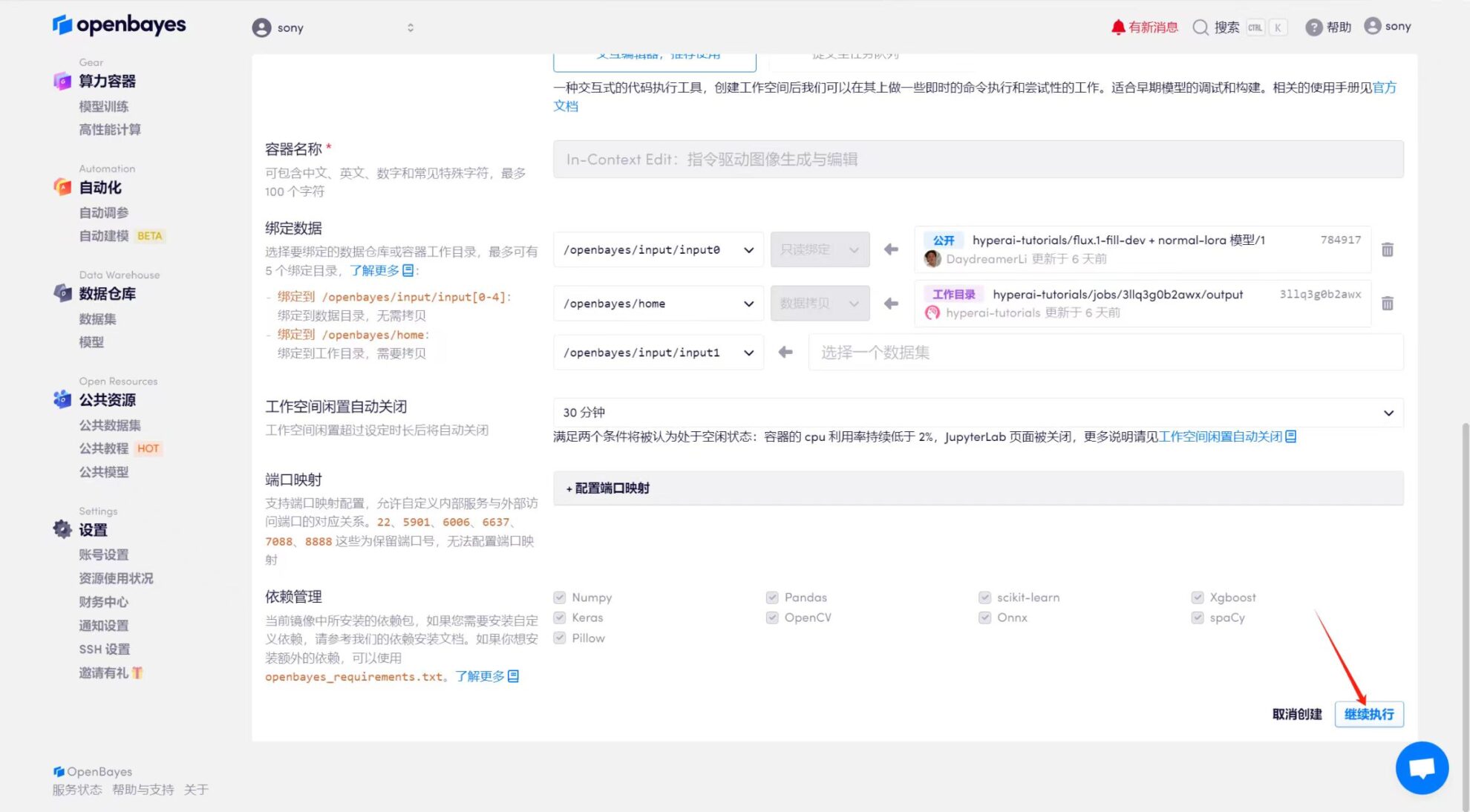

3. Select "NVIDIA GeForce RTX 4090" as the GPU and "PyTorch" as the image. The OpenBayes platform offers 4 billing methods; choose "Pay as you go" or "Pay per day/week/month" according to your needs, then click "Continue". New users can register with the invitation link below to get 4 hours of RTX 4090 time plus 5 hours of CPU time for free!

HyperAI exclusive invitation link (copy and open in browser):

https://openbayes.com/console/signup?r=Ada0322_NR0n

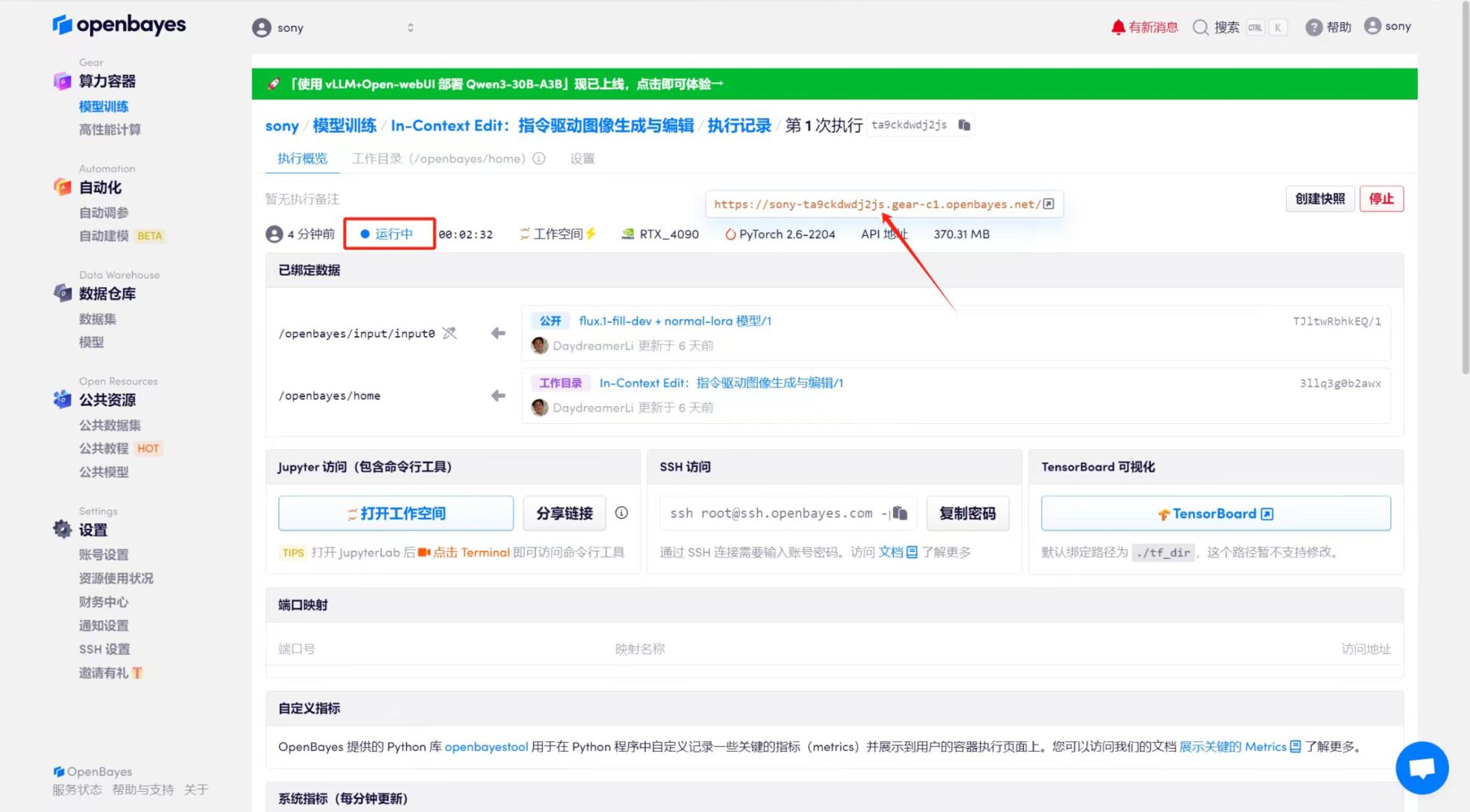

4. Wait for resources to be allocated. The first clone takes about 2 minutes. When the status changes to "Running", click the jump arrow next to "API Address" to open the demo page. Because the model is large, the WebUI takes about 3 minutes to load; if you open the page before then, "Bad Gateway" is displayed. Note that users must complete real-name authentication before they can access the API address.
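If you would rather not refresh the page by hand, the wait in step 4 can be scripted. The following is a minimal sketch, not part of the tutorial itself: it assumes the API address answers with HTTP 200 once the WebUI is up and with an error such as 502 ("Bad Gateway") while the model is still loading, and that the address is reachable from your script without extra login steps. The function names, the 300-second limit, and the placeholder URL are all hypothetical.

```python
import time
import urllib.error
import urllib.request
from typing import Callable


def http_status(url: str, timeout_s: float = 10.0) -> int:
    """Return the HTTP status code for a GET on `url`, or 0 if unreachable."""
    try:
        with urllib.request.urlopen(url, timeout=timeout_s) as resp:
            return resp.status
    except urllib.error.HTTPError as err:
        return err.code  # e.g. 502 while the model is still loading (assumption)
    except (urllib.error.URLError, OSError):
        return 0  # DNS failure, refused connection, timeout, ...


def wait_until_ready(
    probe: Callable[[], int],
    max_wait_s: float = 300.0,
    interval_s: float = 10.0,
    sleep: Callable[[float], None] = time.sleep,
) -> bool:
    """Call `probe` until it returns 200 or `max_wait_s` of waiting is used up."""
    waited = 0.0
    while True:
        if probe() == 200:
            return True
        if waited >= max_wait_s:
            return False
        sleep(interval_s)
        waited += interval_s


# Example usage (replace the placeholder with your own "API Address"):
# api_address = "https://your-api-address.example/"
# print(wait_until_ready(lambda: http_status(api_address)))
```

Passing the probe and the sleep function as arguments keeps the retry loop separate from the network call, so the loop can be checked without touching a real server.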

Results

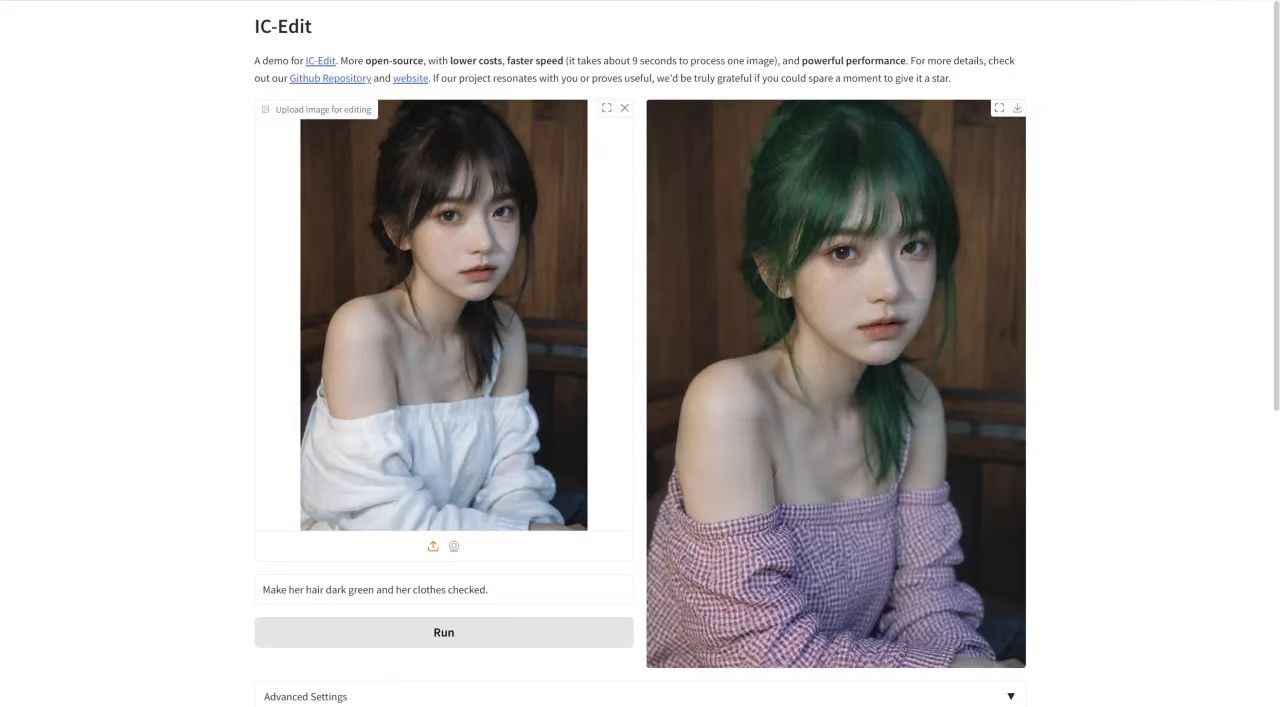

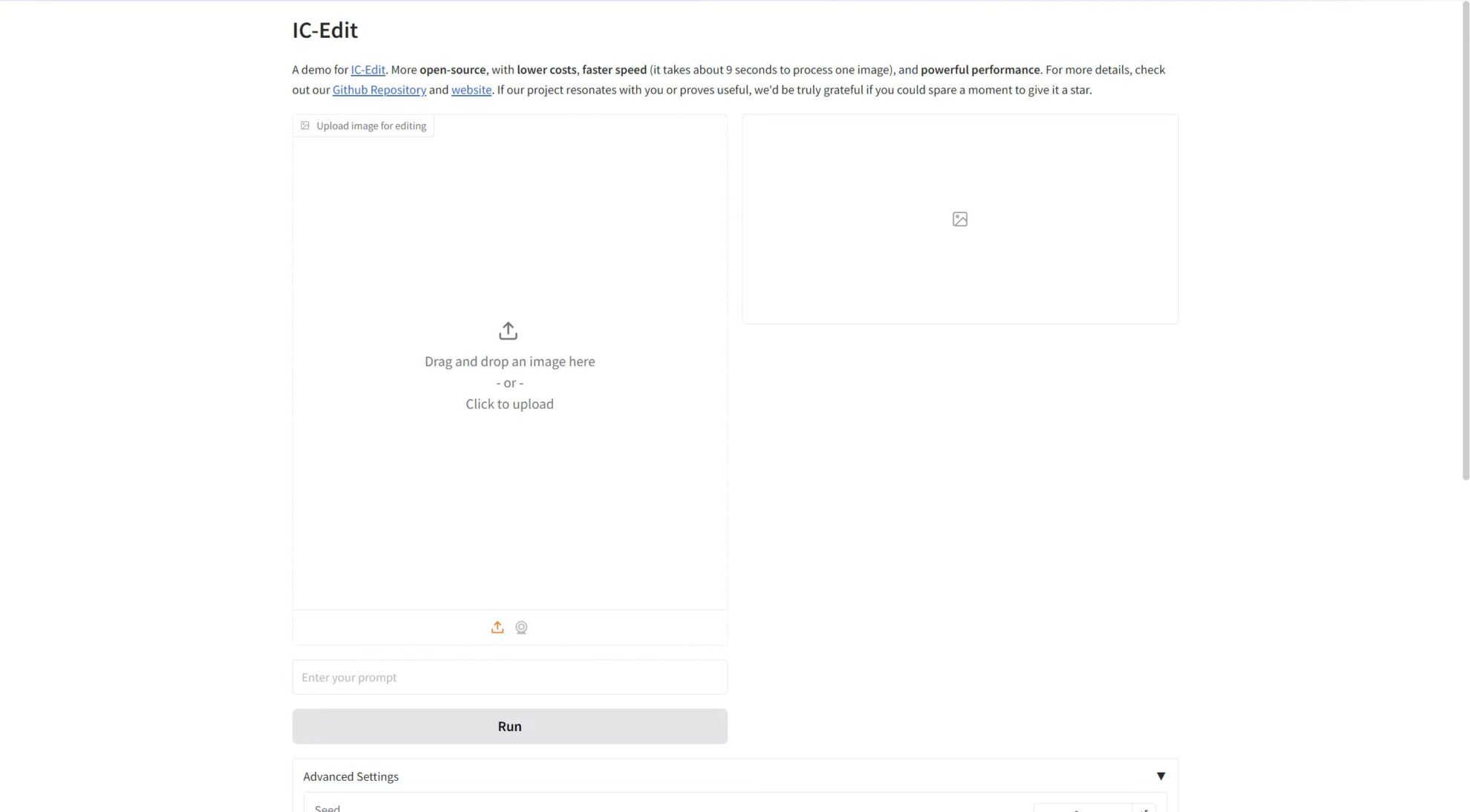

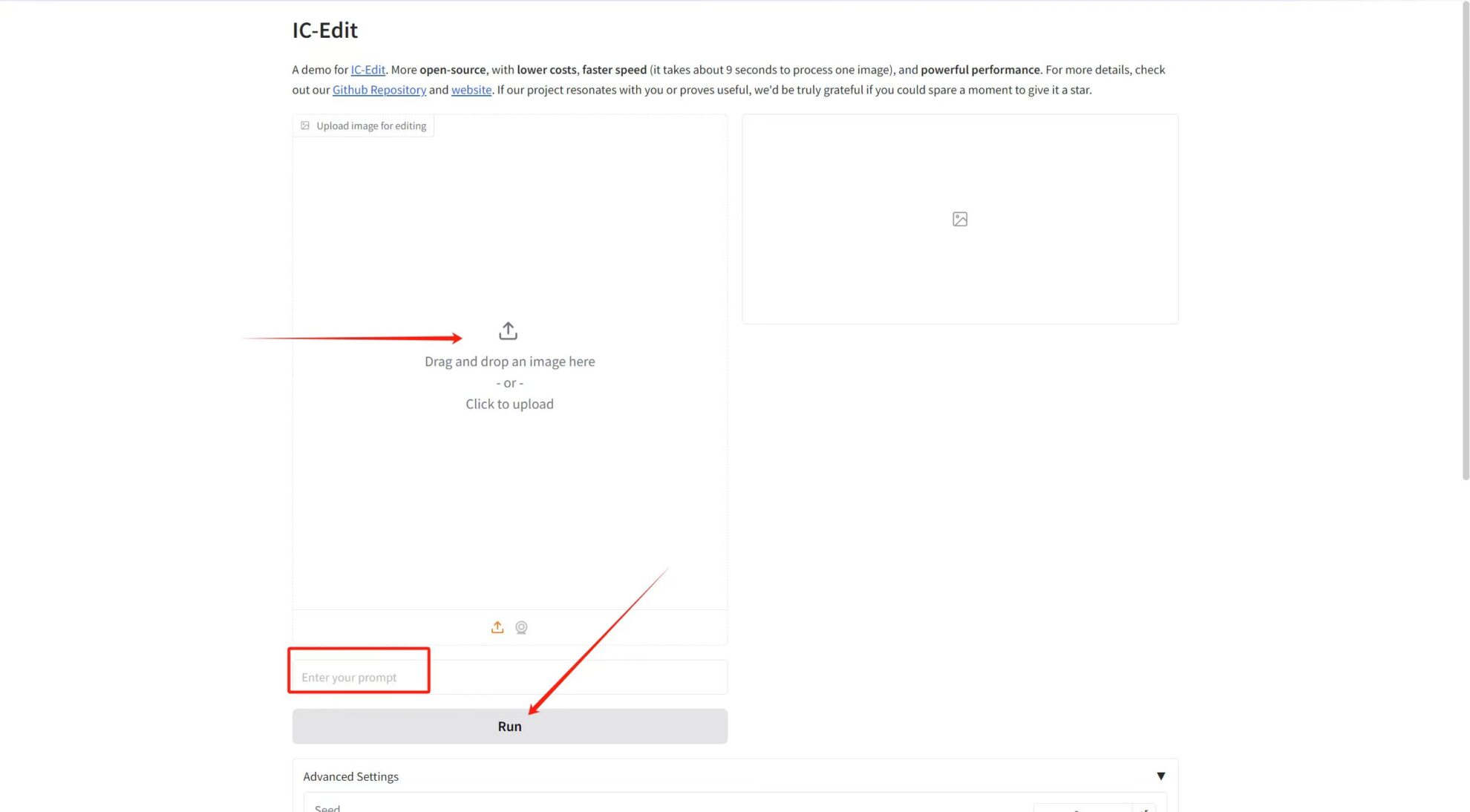

Upload an image under "Upload image for editing", enter a prompt in the text box, and click "Run" to generate the result.

Parameter descriptions:

* Guidance Scale: Controls how strongly the conditional input (such as text or an image) influences the output of the generative model. A higher value makes the result follow the input conditions more closely, while a lower value retains more randomness.

* Number of inference steps: The number of iterations the model runs during inference to produce the result. More steps generally yield more refined results but increase computation time.

* Seed: The random seed that controls the randomness of the generation process. With all other parameters unchanged, the same seed produces the same result, which is important for reproducing results.
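To make the seed and guidance scale descriptions more concrete, here is a toy Python sketch. It is an illustration of the general ideas only, not ICEdit's code: the tutorial does not describe how the model implements these parameters internally. The function names are hypothetical, the "noise" is a short list of numbers rather than an image, and the guidance formula is the classifier-free guidance mix commonly used in diffusion models, which may differ from what ICEdit does.

```python
import random


def initial_noise(seed: int, size: int = 4) -> list[float]:
    """Draw a toy 'starting noise' vector from a generator seeded with `seed`."""
    rng = random.Random(seed)  # private generator, global random state untouched
    return [rng.gauss(0.0, 1.0) for _ in range(size)]


def apply_guidance(uncond: list[float], cond: list[float], scale: float) -> list[float]:
    """Classifier-free-guidance-style mix: uncond + scale * (cond - uncond)."""
    return [u + scale * (c - u) for u, c in zip(uncond, cond)]


if __name__ == "__main__":
    # Same seed, same starting noise: this is what makes a run reproducible.
    print(initial_noise(42) == initial_noise(42))  # True
    # A different seed gives a different starting point.
    print(initial_noise(42) == initial_noise(43))  # False
    # A larger scale pushes the output further toward the conditioned prediction.
    print(apply_guidance([0.0, 0.0], [1.0, 2.0], 1.0))  # [1.0, 2.0]
    print(apply_guidance([0.0, 0.0], [1.0, 2.0], 3.0))  # [3.0, 6.0]
```

With a scale of 1.0 the mix simply returns the conditioned prediction; larger values exaggerate the difference between the conditioned and unconditioned predictions, which matches the "closer to the input conditions" behavior described above.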

I uploaded a portrait photo with the prompt "Make her hair dark green and her clothes checked." The result is shown below.