With the Largest Remote Sensing Instruction Dataset to Date, IBM Research and Others Propose a VLM Designed Specifically for Earth Observation Data, Accepted at CVPR 2025

The field of Earth observation is undergoing a wave of revolutionary development, and its importance is growing by the day. In terms of industry scale, a World Economic Forum report published in May 2024 projects that the sector's potential economic value will climb from $266 billion in 2023 to more than $700 billion by 2030. Many countries and international organizations have long recognized the strategic significance of Earth observation and have actively drawn up plans for it.

However, Earth observation technology struggles with complex data. Traditional satellite image analysis systems are slow at processing multi-source remote sensing data and fall short in analyzing the geospatial and spectral dimensions. Vision-Language Models (VLMs) have made significant progress in general visual interpretation, but general-purpose models struggle with Earth observation data: its unique geospatial, spectral, and temporal dimensions place higher demands on models, and even advanced proprietary models are less accurate on specialized remote sensing data.

Earlier VLMs built for Earth observation, such as RS-GPT and GeoChat, have limitations in high-resolution image processing and in multispectral and multitemporal analysis. Against this backdrop, IBM Research, Mohamed bin Zayed University of Artificial Intelligence (UAE), the Australian National University, Linköping University (Sweden), and other institutions jointly launched EarthDial, a conversational VLM that processes multi-resolution, multispectral, and multitemporal remote sensing imagery in a unified way. It turns complex multi-sensory Earth observations into interactive natural-language dialogues that support a wide variety of remote sensing tasks. The research team built a massive dataset of more than 11.11 million instruction pairs covering multiple multispectral modalities, which underpins the model's capabilities.

The related research results, titled "EarthDial: Turning Multi-sensory Earth Observations to Interactive Dialogues", have been selected for CVPR 2025.

Research highlights:

* EarthDial is a conversational VLM that processes multispectral, multitemporal, and multi-resolution remote sensing images to meet the needs of diverse Earth observation tasks.

* This study introduces the largest remote sensing instruction-tuning dataset to date, with more than 11.11 million instruction pairs covering multiple modalities, which significantly enhances the model's understanding and generalization.

* Experiments show that EarthDial performs strongly on 44 downstream Earth observation tasks, with higher accuracy and better generalization than existing domain-specific VLMs.

Paper link:

The open-source project "awesome-ai4s" collects interpretations of more than 100 AI4S papers and also provides a large collection of datasets and tools:

https://github.com/hyperai/awesome-ai4s

Dataset: Over 10 million instructions, covering multiple resolutions and geographic location information

In Earth observation, the complexity of data dimensions and the diversity of task scenarios pose severe challenges to model generalization. To break through the performance bottleneck of traditional models on multimodal, multi-resolution, and multitemporal remote sensing data, the EarthDial team built EarthDial-Instruct, a large-scale dataset dedicated to remote sensing that contains more than 11 million domain-specific instruction pairs. The pretraining portion of the dataset focuses on building generalization across modalities, resolutions, and time periods. It selects high-quality question-answer pairs from datasets such as SkyScript and SatlasPretrain, integrates multi-source heterogeneous remote sensing data including Sentinel-2 optical imagery, Sentinel-1 synthetic aperture radar (SAR) data, NAIP aerial imagery, and Landsat satellite imagery, and attaches geographic label information to each image.

For data quality control, the research team applied a three-step filtering process. First, sparse samples with fewer than 3 label fields are removed. Second, invalid data is excluded based on the distribution of spectral brightness values and on geographic coverage. Finally, the InternLM-XComposer2 model is used to automatically generate standardized question-answer instruction pairs from the geographic elements in each image. This data curation pipeline lays the groundwork for the model to learn spectral differences between features, spatial resolution characteristics, and patterns of temporal reflectance change in remote sensing data.
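
The three filtering steps can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not the authors' pipeline: the `Sample` record schema, the brightness bounds, and the coverage threshold are hypothetical, and the fixed template in `make_instruction` merely stands in for the InternLM-XComposer2 generation step. Only the three-label minimum comes from the text above.

```python
from dataclasses import dataclass


@dataclass
class Sample:
    """One candidate image record (hypothetical schema for illustration)."""
    image_id: str
    labels: dict            # geographic label fields, e.g. {"landuse": "farmland"}
    mean_brightness: float  # mean pixel value over all bands, scaled to [0, 1]
    valid_fraction: float   # fraction of pixels with real data (not nodata/out of footprint)


MIN_LABEL_FIELDS = 3              # from the article: drop samples with fewer than 3 label fields
BRIGHTNESS_RANGE = (0.02, 0.95)   # hypothetical bounds rejecting black or saturated tiles
MIN_VALID_FRACTION = 0.9          # hypothetical geographic-coverage threshold


def has_enough_labels(s: Sample) -> bool:
    return len(s.labels) >= MIN_LABEL_FIELDS


def has_valid_pixels(s: Sample) -> bool:
    lo, hi = BRIGHTNESS_RANGE
    return lo <= s.mean_brightness <= hi and s.valid_fraction >= MIN_VALID_FRACTION


def make_instruction(s: Sample) -> dict:
    # Placeholder for the LLM step (InternLM-XComposer2 in the article);
    # this fixed template is hypothetical, not the authors' prompt.
    facts = ", ".join(f"{k}: {v}" for k, v in sorted(s.labels.items()))
    return {
        "image": s.image_id,
        "question": "Which geographic elements are present in this image?",
        "answer": f"The image contains {facts}.",
    }


def build_instruction_pairs(samples):
    kept = [s for s in samples if has_enough_labels(s)]  # filter 1: sparse labels
    kept = [s for s in kept if has_valid_pixels(s)]       # filter 2: brightness and coverage
    return [make_instruction(s) for s in kept]            # step 3: QA pair generation


if __name__ == "__main__":
    demo = [
        Sample("a", {"landuse": "farmland", "water": "river", "highway": "track"}, 0.31, 0.99),
        Sample("b", {"landuse": "forest"}, 0.40, 1.00),      # dropped: too few labels
        Sample("c", {"x": 1, "y": 2, "z": 3}, 0.001, 1.00),  # dropped: nearly black tile
    ]
    print(build_instruction_pairs(demo))
```

Ordering the cheap metadata check before the pixel-statistics check means most rejected samples never need their imagery inspected.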

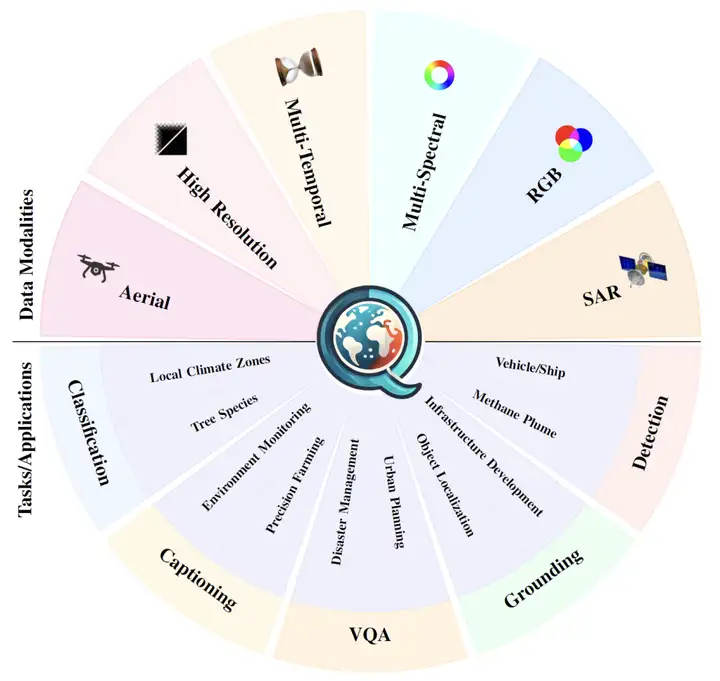

To meet practical application needs, EarthDial also built a detailed downstream-task instruction set covering 10 core tasks, 6 visual modalities, and 2 temporal settings (bi-temporal and multitemporal).

For scene classification, the team used the BigEarthNet dataset for complex land-cover classification and the multitemporal FMoW dataset for dynamic recognition of land-use change, and combined local climate zone (LCZ) data with the TreeSatAI time-series dataset for urban heat island classification and forest tree-species identification. This addresses the weak generalization of traditional models in specialized, few-shot domains.

For object detection, the team designed an instruction set with three task types (referring, identification, and grounding) covering optical, SAR, infrared, and other image modalities. By quantifying the key attributes of each target, the instructions support precise spatial localization and feature description.
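
To make "quantifying the key attributes of the target" concrete, here is a hedged Python sketch of how a grounding instruction pair might encode a bounding box as text. The integer grid from 0 to 100, the `{<x1><y1><x2><y2>}` box syntax, and the `[refer]` task tag are all assumptions for illustration; the article does not specify EarthDial's actual format.

```python
def normalize_box(box, width, height, scale=100):
    """Map a pixel box (x1, y1, x2, y2) onto an integer grid [0, scale]."""
    def to_grid(value, extent):
        # Round to the nearest grid step and clamp boxes that spill past the image.
        return max(0, min(scale, round(value / extent * scale)))

    x1, y1, x2, y2 = box
    return (to_grid(x1, width), to_grid(y1, height), to_grid(x2, width), to_grid(y2, height))


def box_to_text(box, width, height):
    """Serialize a box in a hypothetical {<x1><y1><x2><y2>} text format."""
    x1, y1, x2, y2 = normalize_box(box, width, height)
    return f"{{<{x1}><{y1}><{x2}><{y2}>}}"


def grounding_pair(category, attributes, box, width, height, modality="optical"):
    """Build one hypothetical referring-style instruction pair for a detected target."""
    desc = " ".join([*attributes, category])
    return {
        "question": f"[refer] Where is the {desc} in this {modality} image?",
        "answer": f"The {desc} is located at {box_to_text(box, width, height)}.",
    }


if __name__ == "__main__":
    pair = grounding_pair("ship", ["large", "white"], (80, 50, 400, 250), 800, 500, "SAR")
    print(pair["answer"])  # The large white ship is located at {<10><10><50><50>}.
```

Normalizing to a resolution-independent grid keeps the same text format valid across sensors with very different image sizes.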

For visual question answering and image captioning, composite instruction sets were built by merging multiple source datasets, which substantially increases task diversity and improves model performance. For change detection, the team likewise fused several datasets and, combined with manual analysis of the image sequences, produced a standardized description framework.

For the specific needs of methane plume detection, the team designed conversational prompt templates based on the STARCOP dataset to guide the model precisely toward the target. For the urban heat island study, key thermal indicators were retrieved from the imagery, a regional classification model was established, and thematic analysis instructions were generated. The disaster assessment module integrates the xBD building-damage dataset and the QuakeSet earthquake dataset to build a dedicated instruction set for damage-level analysis and post-earthquake impact assessment.
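
Retrieving thermal indicators usually starts from the standard Landsat 8 conversion of thermal-band digital numbers (DN) to top-of-atmosphere radiance and then to at-sensor brightness temperature. The sketch below shows that conversion for TIRS Band 10; whether EarthDial's data pipeline used exactly this step is an assumption, and the `uhi_intensity` helper (mean urban minus mean rural temperature) is a hypothetical indicator added for illustration. Brightness temperature is not land surface temperature, which would additionally require an emissivity correction.

```python
import math

# Landsat 8 TIRS Band 10 constants as commonly published in Level-1 MTL metadata
# (RADIANCE_MULT_BAND_10, RADIANCE_ADD_BAND_10, K1/K2_CONSTANT_BAND_10).
# In practice, read them from the MTL file of the scene being processed.
ML_B10 = 3.342e-4   # radiance multiplicative rescaling factor
AL_B10 = 0.1        # radiance additive rescaling factor
K1_B10 = 774.8853   # thermal constant K1, W/(m^2 sr um)
K2_B10 = 1321.0789  # thermal constant K2, Kelvin


def toa_radiance(dn: float) -> float:
    """Top-of-atmosphere spectral radiance: L = ML * Qcal + AL."""
    return ML_B10 * dn + AL_B10


def brightness_temperature(dn: float) -> float:
    """At-sensor brightness temperature in Kelvin: T = K2 / ln(K1 / L + 1)."""
    return K2_B10 / math.log(K1_B10 / toa_radiance(dn) + 1.0)


def uhi_intensity(urban_dns, rural_dns) -> float:
    """Hypothetical UHI indicator: mean urban minus mean rural brightness temperature (K)."""
    urban = [brightness_temperature(dn) for dn in urban_dns]
    rural = [brightness_temperature(dn) for dn in rural_dns]
    return sum(urban) / len(urban) - sum(rural) / len(rural)


if __name__ == "__main__":
    t = brightness_temperature(30000)
    print(f"{t:.1f} K = {t - 273.15:.1f} °C")
    print(f"UHI intensity: {uhi_intensity([32000, 31000], [28000, 27500]):.1f} K")
```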

EarthDial: A dedicated model for unified processing of multi-resolution, multi-spectral and multi-temporal remote sensing data

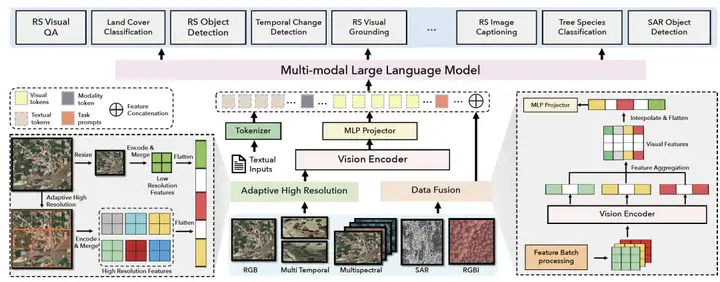

EarthDial can be flexibly applied to tasks such as classification, visual grounding, and change detection. It builds on an advanced VLM for natural images, using an improved InternVL as its architecture, and extends its capabilities through multi-stage fine-tuning to support multispectral and multitemporal data.

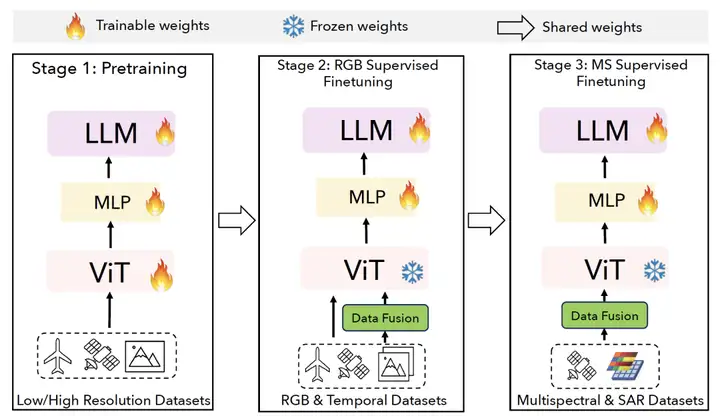

As illustrated in the architecture figure, the model consists of three components: a visual encoder, an MLP projector, and an LLM. The MLP acts as the connector between the visual encoder and the LLM, mapping visual tokens into the LLM's embedding space.
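
A minimal NumPy sketch of the connector may help. It assumes an InternVL-style design in which each 2x2 neighbourhood of visual tokens is merged (a pixel-shuffle step) before a LayerNorm-Linear-GELU-Linear MLP; the toy dimensions, random weights, and function names are illustrative, not EarthDial's code. For InternVL2-4B-sized components, the assumed shapes would be a 32x32 grid of 1024-dimensional InternViT-300M tokens per 448x448 tile, merged into 256 tokens of width 4096 and projected to Phi-3-mini's hidden size of 3072.

```python
import numpy as np


def pixel_shuffle(tokens: np.ndarray, r: int = 2) -> np.ndarray:
    """Merge each r x r neighbourhood of visual tokens into one wider token.

    tokens: (H, W, C) grid of encoder outputs -> (H/r * W/r, C * r * r).
    """
    h, w, c = tokens.shape
    x = tokens.reshape(h // r, r, w // r, r, c)
    x = x.transpose(0, 2, 1, 3, 4)  # (H/r, W/r, r, r, C)
    return x.reshape((h // r) * (w // r), r * r * c)


def layer_norm(x: np.ndarray, eps: float = 1e-5) -> np.ndarray:
    # LayerNorm without learned scale/shift, for brevity
    mu = x.mean(axis=-1, keepdims=True)
    var = x.var(axis=-1, keepdims=True)
    return (x - mu) / np.sqrt(var + eps)


def gelu(x: np.ndarray) -> np.ndarray:
    # tanh approximation of GELU
    return 0.5 * x * (1.0 + np.tanh(np.sqrt(2.0 / np.pi) * (x + 0.044715 * x**3)))


class MLPProjector:
    """LayerNorm -> Linear -> GELU -> Linear: maps visual tokens to the LLM width."""

    def __init__(self, in_dim: int, llm_dim: int, seed: int = 0):
        rng = np.random.default_rng(seed)
        self.w1 = rng.normal(0.0, in_dim**-0.5, (in_dim, llm_dim))
        self.b1 = np.zeros(llm_dim)
        self.w2 = rng.normal(0.0, llm_dim**-0.5, (llm_dim, llm_dim))
        self.b2 = np.zeros(llm_dim)

    def __call__(self, x: np.ndarray) -> np.ndarray:
        h = gelu(layer_norm(x) @ self.w1 + self.b1)
        return h @ self.w2 + self.b2


if __name__ == "__main__":
    # Toy sizes; an InternVL2-4B-like setup would be (32, 32, 1024) -> 256 x 4096 -> 256 x 3072.
    grid = np.random.default_rng(1).normal(size=(8, 8, 16))
    visual_tokens = MLPProjector(in_dim=64, llm_dim=24)(pixel_shuffle(grid))
    print(visual_tokens.shape)  # (16, 24)
```

Merging neighbourhoods before projection cuts the number of visual tokens the LLM must attend to by a factor of four.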

The model is also lightweight, with only about 4 billion parameters, so it can ingest many kinds of remote sensing data and generate accurate remote sensing dialogue while running efficiently. The visual encoder is InternViT-300M, a lightweight model distilled from the 6-billion-parameter InternViT-6B, which preserves strong visual encoding; the pretrained Phi-3-mini LLM provides language understanding and generation; and the simple MLP connector bridges the visual and language spaces.
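
As a rough consistency check on the "about 4 billion parameters" figure, assume EarthDial keeps an InternVL2-4B-style projector (a LayerNorm plus two linear layers, with input width 4096 after merging 2x2 neighbourhoods of 1024-dimensional InternViT-300M tokens, and output width 3072 to match Phi-3-mini) and take Phi-3-mini's published size of about 3.8 billion parameters. These projector widths are assumptions, not figures from the article:

$$
\begin{aligned}
N_{\text{proj}} &= \underbrace{2 \cdot 4096}_{\text{LayerNorm}} + \underbrace{4096 \cdot 3072 + 3072}_{\text{Linear}_1} + \underbrace{3072 \cdot 3072 + 3072}_{\text{Linear}_2} = 22{,}034{,}432 \approx 0.02\,\text{B} \\
N_{\text{total}} &\approx \underbrace{0.3\,\text{B}}_{\text{InternViT-300M}} + \underbrace{3.8\,\text{B}}_{\text{Phi-3-mini}} + 0.02\,\text{B} \approx 4.1\,\text{B}
\end{aligned}
$$

Under these assumptions the projector is negligible next to the encoder and LLM, so almost all of the budget sits in Phi-3-mini.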

In addition, two core modules, Adaptive High Resolution and Data Fusion, are key to how the model handles complex remote sensing data. The adaptive high-resolution module follows the dynamic-resolution strategy of InternVL 1.5: it splits the image into tiles and also generates a thumbnail, preserving the details of high-resolution images while providing global scene context. The data fusion module applies channel processing, feature aggregation, and dimensionality reduction to multispectral, SAR, and other data, deeply fusing visual and textual features and significantly improving performance on complex tasks.
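
The adaptive high-resolution step can be sketched as follows, loosely following the InternVL 1.5 dynamic-resolution idea: choose the tile grid whose aspect ratio best matches the image, resize the image to that grid, crop 448x448 tiles, and add a thumbnail of the whole image when more than one tile is used. The tile size, the 12-tile cap, and the tie-breaking rule are assumptions modeled on InternVL's public preprocessing, not confirmed EarthDial settings; the code only computes the grid and crop boxes and leaves the actual resizing to an image library.

```python
def candidate_grids(max_tiles: int = 12):
    """All (cols, rows) grids with 1..max_tiles tiles, smallest first."""
    grids = [
        (cols, rows)
        for cols in range(1, max_tiles + 1)
        for rows in range(1, max_tiles + 1)
        if cols * rows <= max_tiles
    ]
    return sorted(grids, key=lambda g: (g[0] * g[1], g[0]))


def choose_grid(width: int, height: int, tile: int = 448, max_tiles: int = 12):
    """Pick the grid whose aspect ratio is closest to the image's."""
    aspect = width / height
    best, best_diff = (1, 1), float("inf")
    for cols, rows in candidate_grids(max_tiles):
        diff = abs(aspect - cols / rows)
        if diff < best_diff:
            best, best_diff = (cols, rows), diff
        elif diff == best_diff and width * height > 0.5 * tile * tile * cols * rows:
            # Tie: prefer the larger grid when the image has enough pixels to fill it.
            best = (cols, rows)
    return best


def plan_tiles(width: int, height: int, tile: int = 448, max_tiles: int = 12):
    """Return the grid, crop boxes on the resized image, and whether to add a thumbnail.

    The image is assumed to be resized to (cols * tile, rows * tile) before cropping;
    the thumbnail is the whole image resized to tile x tile for global context.
    """
    cols, rows = choose_grid(width, height, tile, max_tiles)
    boxes = [
        (c * tile, r * tile, (c + 1) * tile, (r + 1) * tile)
        for r in range(rows)
        for c in range(cols)
    ]
    return (cols, rows), boxes, len(boxes) > 1


if __name__ == "__main__":
    grid, boxes, thumb = plan_tiles(1792, 896)
    print(grid, len(boxes), thumb)  # (4, 2) 8 True
```

The tiles keep fine detail at native resolution, while the thumbnail gives the model the whole scene at once.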

During training, EarthDial uses a three-stage strategy to progressively improve performance:

The first stage is RS Conversational Pretraining. In this stage, 7.6 million image-text pairs from datasets such as Satlas and SkyScript are used for pretraining to establish vision-text alignment.

The second stage is RS RGB and Temporal Finetuning. This stage fine-tunes on RGB and temporal data, updating the MLP and LLM layers.

The third stage is RS Multispectral and SAR Finetuning. This stage extends training to multispectral and SAR data, again fine-tuning the MLP and LLM layers.

The three stages build on one another, giving EarthDial strong Earth observation analysis and task-execution capabilities for applications such as environmental monitoring and disaster response.
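
The article does not spell out the data fusion operators used for multispectral, SAR, and temporal inputs, so the following NumPy sketch is only one plausible reading of "channel processing, feature aggregation, and dimensionality reduction". A multispectral or SAR stack is split into 3-channel groups, each group passes through an RGB-style encoder, and the group features are averaged so the LLM sees the same number of tokens as for an RGB image; multitemporal inputs are encoded per date and concatenated. The band grouping, padding rule, averaging, and the random-projection `encode_rgb` stand-in are all hypothetical.

```python
import numpy as np

TOKENS, DIM = 16, 32  # toy sizes for the sketch


def encode_rgb(image: np.ndarray) -> np.ndarray:
    """Hypothetical stand-in for an RGB-pretrained vision encoder: (H, W, 3) -> (TOKENS, DIM).

    It average-pools the image onto a 4x4 grid and applies a fixed random projection,
    only so the sketch runs without a real ViT.
    """
    side = int(np.sqrt(TOKENS))
    h, w, _ = image.shape
    ph, pw = h // side, w // side
    pooled = image[: side * ph, : side * pw].reshape(side, ph, side, pw, 3).mean(axis=(1, 3))
    proj = np.random.default_rng(0).normal(size=(3, DIM))
    return pooled.reshape(TOKENS, 3) @ proj


def group_bands(image: np.ndarray) -> list:
    """Split an (H, W, C) multispectral or SAR stack into 3-channel groups.

    The last group is padded by repeating its final band, so a dual-polarisation
    SAR pair (VV, VH) becomes [VV, VH, VH].
    """
    channels = image.shape[-1]
    groups = []
    for start in range(0, channels, 3):
        idx = list(range(start, min(start + 3, channels)))
        idx += [idx[-1]] * (3 - len(idx))
        groups.append(image[..., idx])
    return groups


def fuse_multispectral(image: np.ndarray) -> np.ndarray:
    """Encode each band group, then average: same token count as one RGB image."""
    feats = np.stack([encode_rgb(g) for g in group_bands(image)])  # (groups, TOKENS, DIM)
    return feats.mean(axis=0)


def fuse_temporal(images: list) -> np.ndarray:
    """Encode each acquisition date and concatenate the tokens in time order."""
    return np.concatenate([encode_rgb(img) for img in images], axis=0)


if __name__ == "__main__":
    rng = np.random.default_rng(42)
    sentinel2 = rng.random((64, 64, 13))  # e.g. a 13-band Sentinel-2 L1C patch
    print(len(group_bands(sentinel2)), fuse_multispectral(sentinel2).shape)  # 5 (16, 32)
    pair = [rng.random((64, 64, 3)) for _ in range(2)]  # bi-temporal RGB pair
    print(fuse_temporal(pair).shape)  # (32, 32)
```

Averaging over band groups keeps the LLM's context length fixed regardless of how many bands a sensor provides, at the cost of mixing band-specific information.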

Experimental results: outperforming existing models on multiple tasks, with strong bi-temporal and multitemporal sequence analysis

In the experiments, EarthDial performed strongly across a variety of scenarios covering RGB, multispectral, SAR, infrared, and thermal data, with evaluation tasks including scene classification, object detection, visual question answering (VQA), image captioning, change detection, and methane plume detection.

For scene classification, zero-shot evaluation shows that EarthDial clearly outperforms existing VLMs on multiple datasets, especially the fMoW and xBD test sets.

For object detection, EarthDial outperforms GPT-4o, InternVL2-4B, GeoChat, and other models on three subtasks (referring object detection, region captioning, and grounding description), performing especially well on grounding description and on SAR image datasets.

For image captioning and VQA, EarthDial outperforms existing models on the relevant datasets. For VQA, it is evaluated on the RSVQA-LRBEN and RSVQA-HRBEN datasets and leads in most question categories.
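
For reference, per-category VQA accuracy of the kind reported on RSVQA-LRBEN (whose question types include presence, comparison, and rural/urban questions) is commonly computed by normalizing free-text answers and comparing them with the ground truth. The normalization rules below are a hypothetical sketch, not the paper's evaluation script.

```python
import re
from collections import defaultdict


def normalize(answer: str) -> str:
    """Lower-case, drop punctuation and a leading 'the answer is' (hypothetical rules)."""
    text = re.sub(r"[^\w\s]", "", answer.lower()).strip()
    return re.sub(r"^(the answer is|answer)\s+", "", text)


def per_category_accuracy(records):
    """records: iterable of (category, prediction, ground_truth) triples."""
    hits, totals = defaultdict(int), defaultdict(int)
    for category, pred, gold in records:
        totals[category] += 1
        hits[category] += normalize(pred) == normalize(gold)
    return {cat: hits[cat] / totals[cat] for cat in totals}


if __name__ == "__main__":
    demo = [
        ("presence", "Yes.", "yes"),
        ("presence", "No", "yes"),
        ("comparison", "The answer is no", "no"),
        ("rural_urban", "Urban", "rural"),
    ]
    print(per_category_accuracy(demo))
    # {'presence': 0.5, 'comparison': 1.0, 'rural_urban': 0.0}
```

Normalization matters for conversational models, which often answer in full sentences rather than the single-word labels stored in VQA datasets.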

For change detection, EarthDial handles temporal data effectively through its data fusion strategy, showing a strong ability to interpret and respond to temporal data.

For disaster assessment, across 8 subtasks based on the xBD dataset, EarthDial consistently outperforms existing VLMs on subtasks such as image classification (test set 1). On the QuakeSet dataset, which uses SAR images for earthquake prediction, EarthDial achieves an accuracy of 57.53%, surpassing GPT-4o.

For multimodal data, EarthDial significantly outperforms GPT-4o on classification and referring object detection with multispectral, RGB-infrared, and SAR imagery, underscoring the effectiveness of its multi-band fusion strategy.

In the Urban Heat Island (UHI) experiment, EarthDial reaches an accuracy of 56.77%, well above GPT-4o's 22.68%, and can identify temperature trends from Landsat 8 band data.

For methane plume classification on the STARCOP dataset, EarthDial achieves an accuracy of 77.09%, an improvement of 32.16% over GPT-4o.

The AI revolution in Earth observation: a paradigm shift from data collection to intelligent decision-making

Amid global digital transformation, AI is driving profound change in Earth observation. With breakthroughs such as multimodal large models and on-orbit intelligent processing, the field is accelerating its shift from traditional data collection toward a closed-loop intelligent system of "perception-cognition-decision-making," and is becoming core infrastructure for global sustainable development.

First, technological breakthroughs are moving the industry from passive recording to active intervention. TerraMind, jointly developed by the European Space Agency (ESA) and IBM, integrates eight types of heterogeneous data sources and has become the world's first multimodal foundation model for Earth observation. In monitoring methane leaks in the Siberian tundra, its cross-modal reasoning intelligently fills in missing data, improving prediction accuracy by 20% and cutting computing consumption by 50%; in Amazon rainforest monitoring, it uses its generative capabilities to automatically reconstruct missing imagery, enabling all-weather monitoring.

The "Space Lingmou" 3.0 model from the Aerospace Information Research Institute of the Chinese Academy of Sciences builds an end-to-end interpretation system with tens of billions of parameters, improving accuracy by 4% to 10% over traditional models, and has been applied to scenarios such as the ecological assessment of Xiong'an New Area. In on-orbit intelligent processing, smarter satellite payloads have brought breakthroughs in edge computing. ESA's Φsat-2 satellite carries 6 AI applications, including a wildfire monitoring system that detects fire points in real time and algorithms that quickly identify ecological threats. These breakthroughs are moving Earth observation toward real-time decision-making.

Second, AI has a wide range of applications in Earth observation, covering everything from macro-scale monitoring to fine-grained governance. In climate and ecological governance, TerraMind, developed by ESA and IBM Research Europe, integrates Sentinel satellite hyperspectral data with ground sensor networks, achieving meter-level positioning accuracy in Siberian natural gas pipeline monitoring and improving leak-trend prediction accuracy by 30%. The Global Forest Watch 3.0 system from NASA and Google combines AI with drone inspections and has identified 87% of illegal logging areas in the Congo Basin, building a strong "digital fence" to protect tropical rainforests.

* Paper link:

https://doi.org/10.1016/j.rse.2021.112470

In disaster response and urban planning, Alibaba DAMO Academy's remote sensing AI model AIE-SEG completed the building damage assessment for the disaster area within 3 hours during the 2024 Türkiye earthquake, 50 times faster than traditional manual analysis. A spatiotemporal prediction model developed by a Tsinghua University team simulates airflow through urban ventilation corridors, providing quantitative decision support for Beijing's urban planning. In agriculture and resource management, Microsoft Project Premonition is being piloted in Andhra Pradesh, India, where AI-based precision sowing recommendations have increased crop yields by 30% per hectare, providing real-time data support for smart agriculture.

Finally, in ecosystem building, industry-academia-research collaboration and global governance in Earth observation are steadily advancing, and open-source ecosystems and toolchains keep improving. For example, Google Earth AI offers API access that helps developers worldwide use intelligent satellite-data processing and lowers the barrier to applying the technology. The United Nations "AI for Good" initiative uses AI to help withstand natural disasters, works toward a globally unified disaster assessment standard, and promotes data interoperability and technical collaboration.

AI is thus pushing Earth observation from "passive recording" to "active intervention." In the future, as multimodal large models, on-orbit intelligent processing, quantum computing, and other technologies converge, Earth observation is expected to become a digital cornerstone for global challenges such as carbon neutrality, disaster prevention and mitigation, and resource management, opening a new chapter of sustainable development in the relationship between humans and nature.
