Multi-Subject Driven Generation Reaches SOTA: ByteDance's UNO Model Handles a Variety of Image Generation Tasks

Subject-driven generation is now widely used in image generation, but it still faces challenges in data scalability and subject scalability. For example, it is particularly difficult to move from a single-subject dataset to a multi-subject dataset and then scale it up. Most current research focuses on single-subject generation, and these methods perform poorly on multi-subject generation tasks.

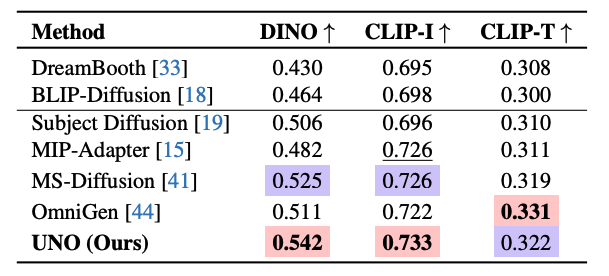

In view of this, the Intelligent Creation team at ByteDance, a Chinese Internet technology company, used the in-context generation capability of diffusion Transformer models to generate highly consistent multi-subject paired data, and proposed the FLUX-based UNO model, which can handle different input conditions in image generation tasks. It adopts a new "model-data co-evolution" paradigm that enriches the training data and improves the quality and diversity of generated images while optimizing model performance. The researchers conducted extensive experiments on DreamBench and on multi-subject driven generation benchmarks. UNO achieved the highest DINO and CLIP-I scores on both tasks, indicating strong subject similarity while maintaining text controllability, and reaching SOTA level.
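DINO and CLIP-I scores are commonly computed as the cosine similarity between image embeddings of the generated images and the reference images. The following is a minimal sketch of that calculation, not the evaluation code used by the researchers: the embedding vectors are hypothetical placeholders, and a real evaluation would obtain them from a DINO or CLIP image encoder.

```python
import math


def cosine_similarity(a, b):
    """Cosine similarity between two equal-length embedding vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def mean_pairwise_similarity(generated, references):
    """Average cosine similarity over every (generated, reference) pair."""
    scores = [cosine_similarity(g, r) for g in generated for r in references]
    return sum(scores) / len(scores)


# Hypothetical 3-dimensional embeddings, for illustration only.
# Real image embeddings have hundreds of dimensions.
generated_embeddings = [[0.9, 0.1, 0.0], [0.8, 0.2, 0.1]]
reference_embeddings = [[1.0, 0.0, 0.0]]

score = mean_pairwise_similarity(generated_embeddings, reference_embeddings)
print(f"subject similarity: {score:.3f}")
```

A score closer to 1 means the generated subject looks more like the reference subject in the encoder's feature space.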

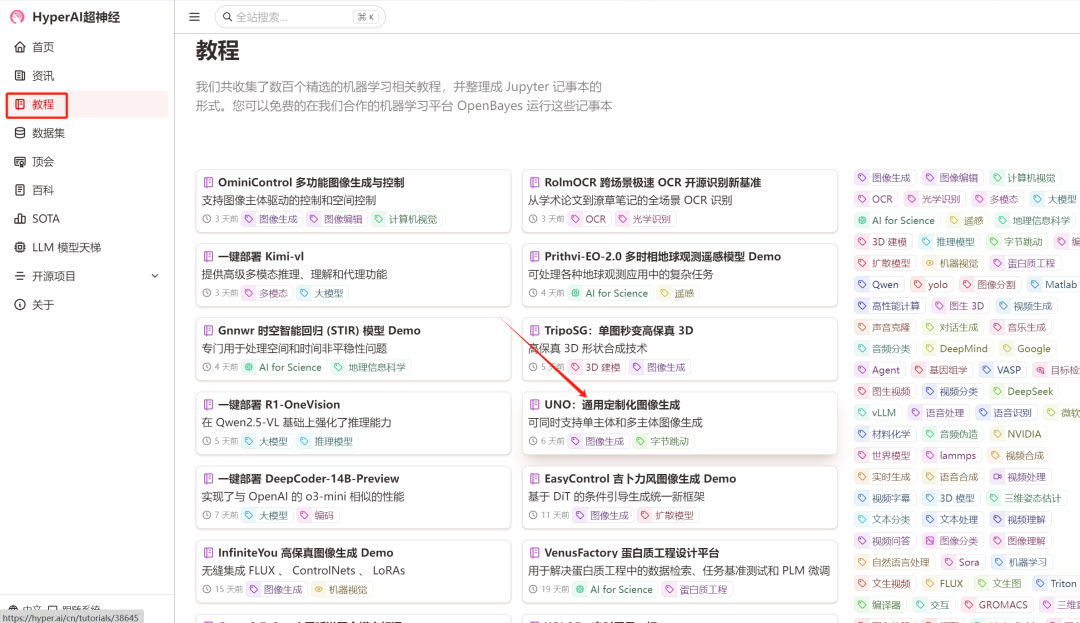

The "UNO: Universal Customized Image Generation" tutorial is now available in the tutorial section of HyperAI's official website. Click the link below to try it out ↓

Tutorial Link: https://go.hyper.ai/XELg5

Demo Run

1. Log in to hyper.ai. On the Tutorials page, select "UNO: Universal Customized Image Generation" and click "Run this tutorial online".

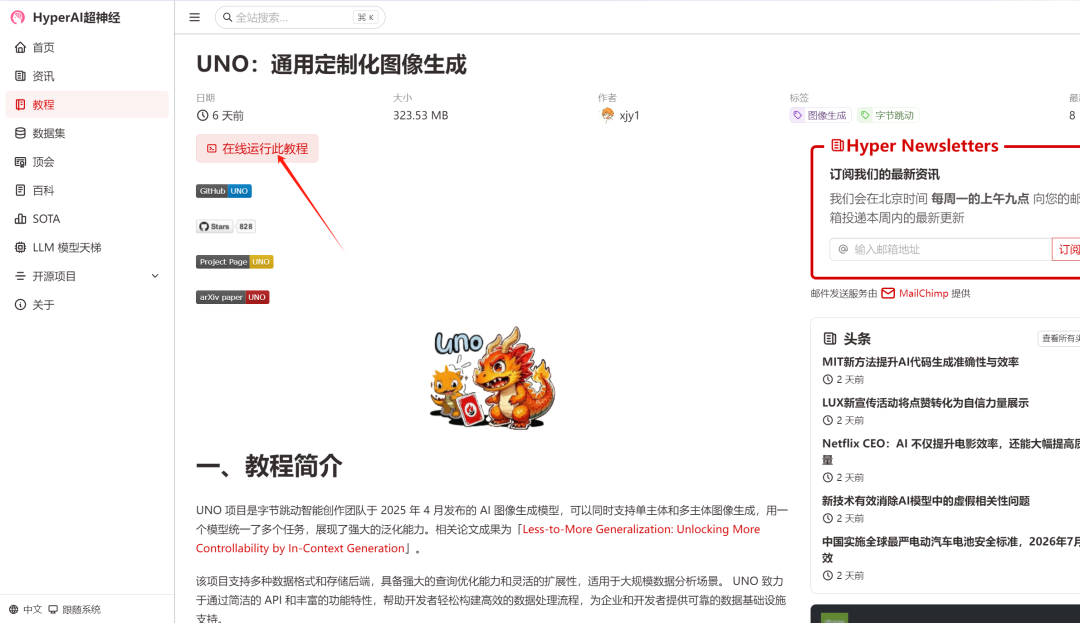

2. After the page redirects, click "Clone" in the upper right corner to clone the tutorial into your own container.

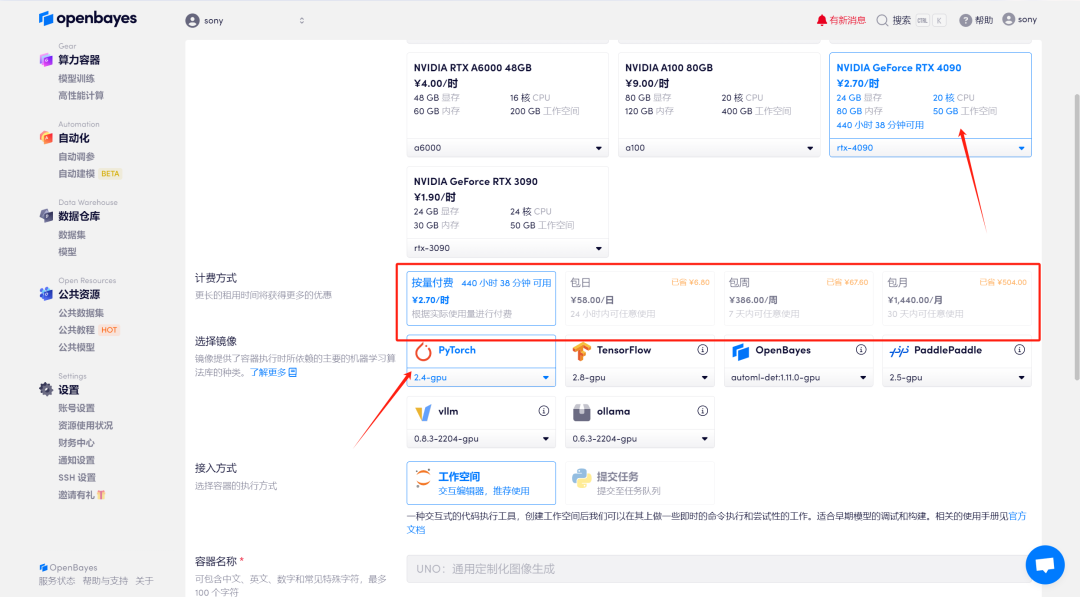

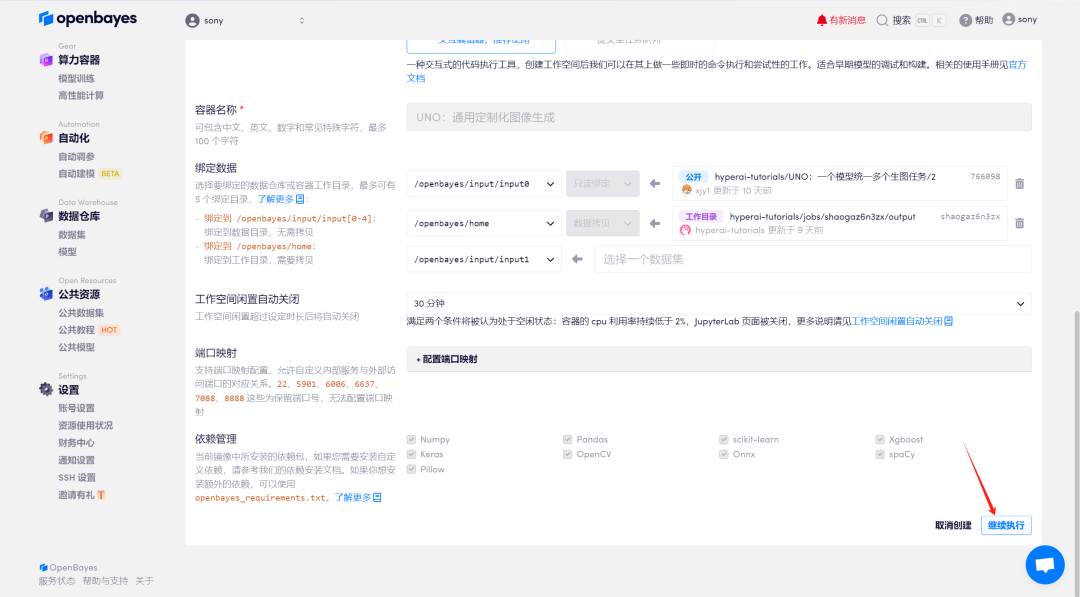

3. Select the "NVIDIA GeForce RTX 4090" compute resource and the "PyTorch" image. The OpenBayes platform provides 4 billing methods. You can choose "Pay as you go" or "Pay per day/week/month" according to your needs. Click "Continue". New users can register using the invitation link below to get 4 hours of RTX 4090 time plus 5 hours of CPU time for free!

HyperAI exclusive invitation link (copy and open in browser):

https://openbayes.com/console/signup?r=Ada0322_NR0n

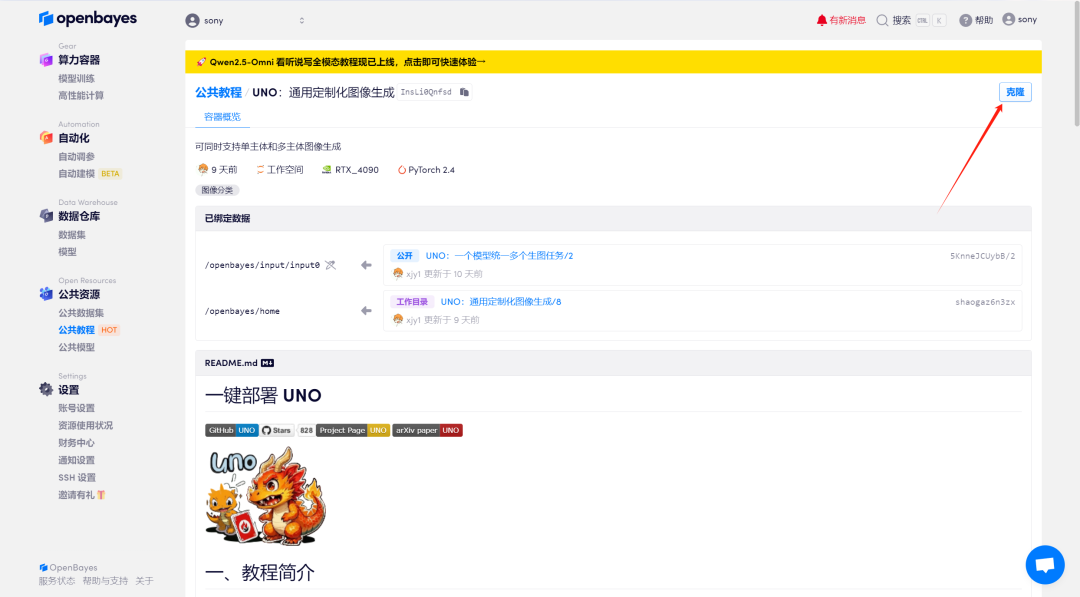

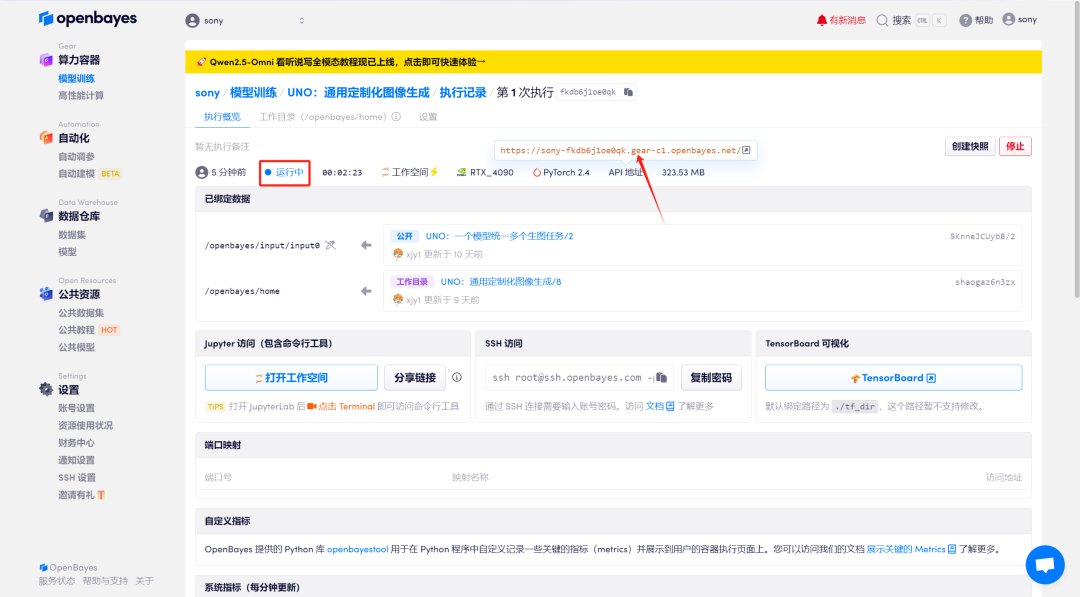

4. Wait for resources to be allocated. The first clone takes about 2 minutes. When the status changes to "Running", click the jump arrow next to "API Address" to open the Demo page. Note that users must complete real-name authentication before they can access the API address.

Effect Demonstration

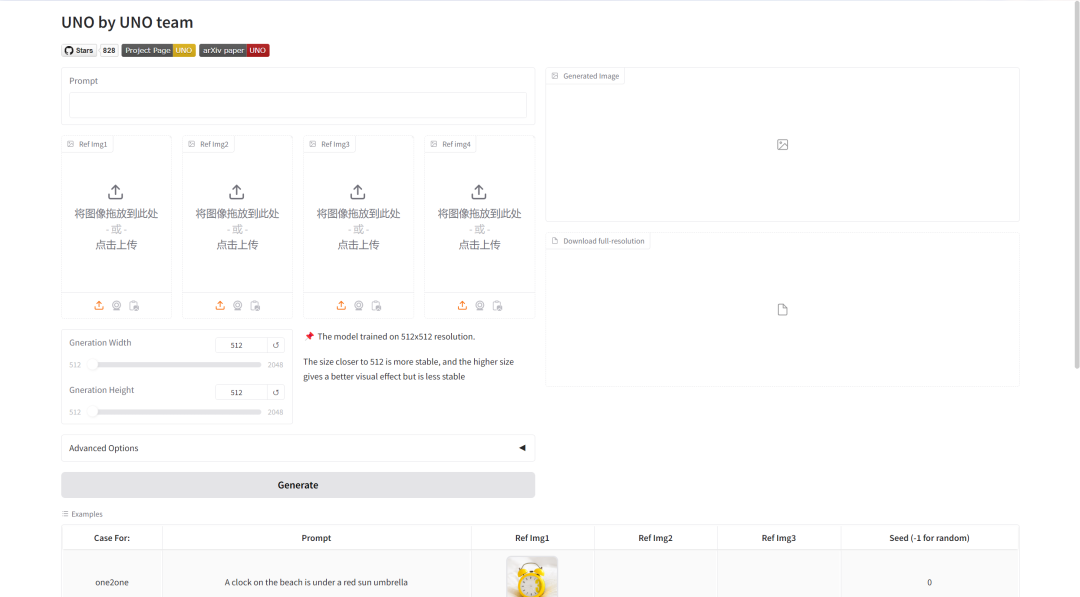

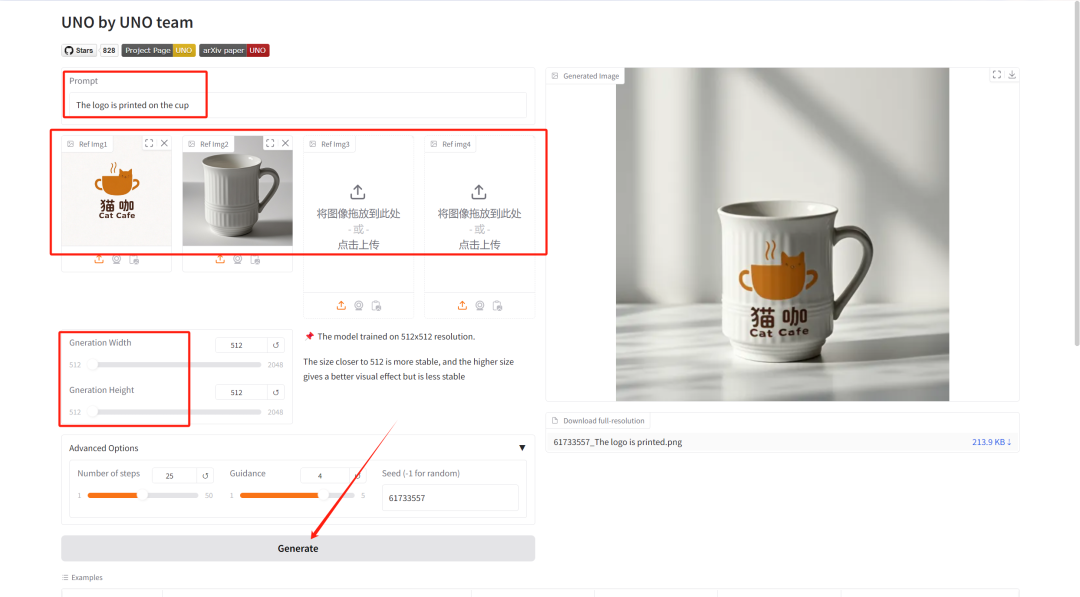

Enter the text describing the image you want to generate in "Prompt", then upload the reference image(s) for the subject(s) in "Ref Img". Adjust "Gneration Width/Height" to set the width and height of the generated image, and finally click "Generate".

Parameter descriptions:

- Number of steps: The number of iterations in the inference process. More steps generally produce more refined results, but also increase computation time.

- Guidance: Controls how strongly the conditional input (such as text or images) influences the generated result. A higher guidance value makes the result follow the input conditions more closely, while a lower value retains more randomness.

- Seed: The random number seed that controls the randomness of the generation process. The same Seed value produces the same result (provided that all other parameters are the same), which is important for reproducing results.
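The way these three parameters interact can be illustrated with a toy sketch. This is not UNO's sampler or any real diffusion process: `toy_generate`, `distance`, and the update rule are hypothetical stand-ins, chosen only to show that a seed fixes the starting noise, that more steps refine the result further, and that higher guidance pulls the result closer to the condition.

```python
import random


def toy_generate(condition, steps=25, guidance=4.0, seed=0):
    """Toy stand-in for a generator: refines seeded noise toward `condition`.

    Assumption: this is an illustrative update rule, not a diffusion sampler.
    """
    rng = random.Random(seed)  # the seed fixes the starting noise
    x = [rng.gauss(0.0, 1.0) for _ in condition]
    # Higher guidance gives a stronger pull toward the condition (rate in [0, 1)).
    rate = guidance / (guidance + 10.0)
    for _ in range(steps):  # each step moves the sample a bit closer
        x = [xi + rate * (ci - xi) for xi, ci in zip(x, condition)]
    return x


def distance(a, b):
    """Largest per-component gap between two vectors."""
    return max(abs(ai - bi) for ai, bi in zip(a, b))


condition = [0.2, 0.5, 0.8]  # hypothetical "conditioning" values

same_a = toy_generate(condition, seed=42)
same_b = toy_generate(condition, seed=42)
other = toy_generate(condition, seed=43)

print(same_a == same_b)  # same seed and parameters give an identical result
print(same_a == other)   # a different seed gives a different result
print(distance(toy_generate(condition, steps=5, seed=42), condition))
print(distance(toy_generate(condition, steps=50, seed=42), condition))
```

In the demo itself, the practical takeaway is the same: note down the Seed together with the other settings if you want to regenerate an image later.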

Here we uploaded a logo image and a cup image, with the prompt "The logo is printed on the cup." We can see that the model rendered the logo on the cup accurately.