
Online Tutorials | Comparable to o3-mini: Open-Source Code Reasoning Model DeepCoder-14B-Preview Has 3k Stars

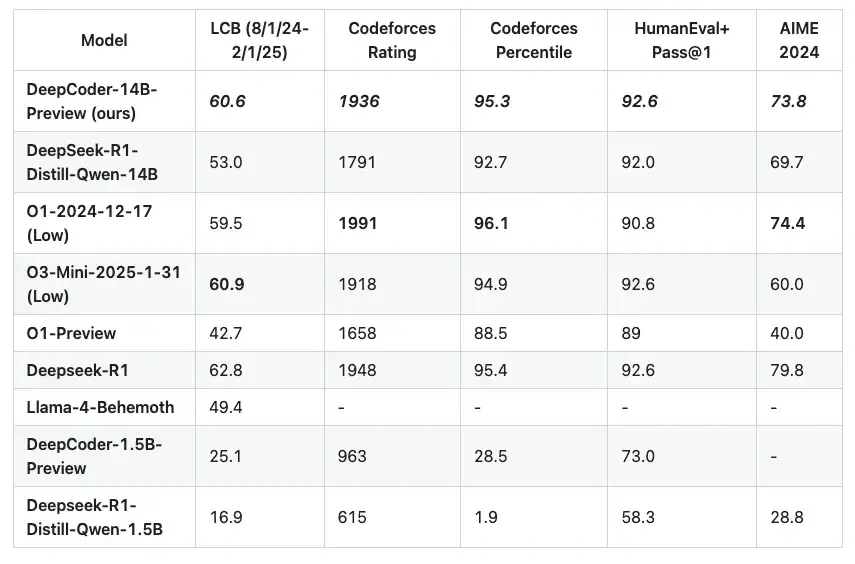

In the early morning of April 9, the Agentica team and Together AI jointly open-sourced DeepCoder-14B-Preview, a new code reasoning model. With only 14B parameters, it performs comparably to OpenAI o3-mini. The model quickly drew widespread attention across the industry and has already earned 3k stars on GitHub.

Specifically, DeepCoder-14B-Preview is a code reasoning LLM fine-tuned from DeepSeek-R1-Distilled-Qwen-14B. It uses distributed reinforcement learning (RL) to scale up to long context lengths. The model achieves a Pass@1 (single-attempt pass rate) of 60.6% on LiveCodeBench v5 (8/1/24-2/1/25). It not only surpasses its base model but also matches the performance of OpenAI o3-mini with only 14 billion parameters.
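Pass@1 figures for code models are usually computed with the unbiased pass@k estimator introduced alongside OpenAI's HumanEval benchmark. For each problem, n solutions are sampled and c of them pass all tests. The estimator gives the probability that at least one of k randomly chosen samples is correct, and these per-problem values are then averaged. The article does not state the exact sampling setup behind the 60.6% figure. The snippet below is therefore a generic sketch, and the function names `pass_at_k` and `mean_pass_at_k` are our own.

```python
from math import comb


def pass_at_k(n: int, c: int, k: int) -> float:
    """Unbiased pass@k estimate for a single problem.

    n: number of sampled solutions
    c: number of samples that passed all tests
    k: sampling budget being evaluated (k = 1 for Pass@1)
    """
    if n - c < k:
        # Every subset of k samples must contain at least one correct one.
        return 1.0
    # 1 minus the probability that k samples drawn without replacement are all wrong.
    return 1.0 - comb(n - c, k) / comb(n, k)


def mean_pass_at_k(results: list[tuple[int, int]], k: int) -> float:
    """Average pass@k over a benchmark, given one (n, c) pair per problem."""
    return sum(pass_at_k(n, c, k) for n, c in results) / len(results)


# Hypothetical results for three problems, 16 samples each.
print(mean_pass_at_k([(16, 8), (16, 16), (16, 0)], k=1))
```

With k = 1 the estimator reduces to c / n, the fraction of sampled solutions that pass. Pass@1 is therefore simply the average per-problem success rate.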

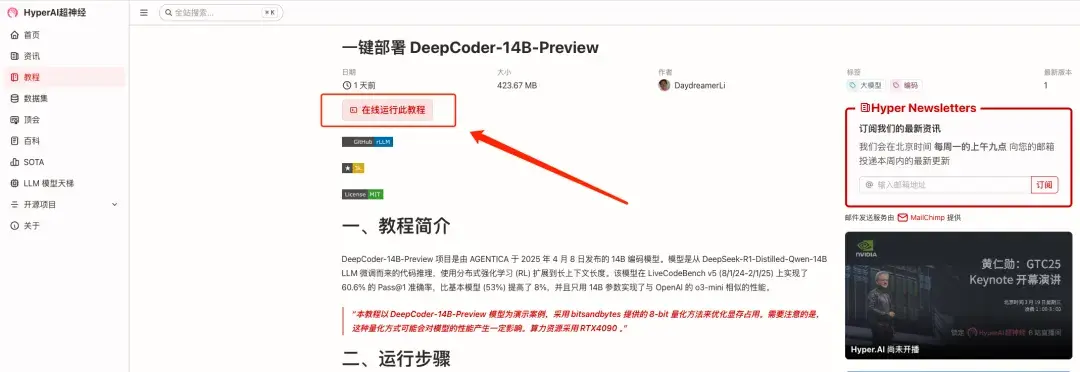

The "One-click deployment of DeepCoder-14B-Preview" tutorial is now available in the Tutorials section of the HyperAI website. After cloning the tutorial, open the "API address" to try the model right away!

Tutorial link: https://go.hyper.ai/0J82f

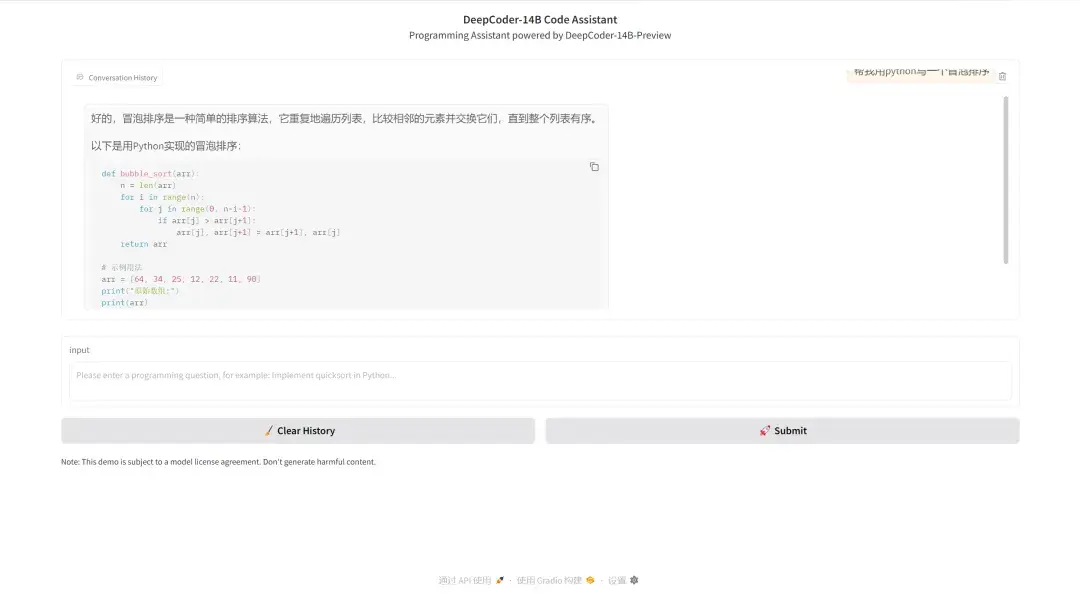

Demo Run

1. Log in to hyper.ai, go to the Tutorials page, select "One-click deployment of DeepCoder-14B-Preview", and click "Run this tutorial online".

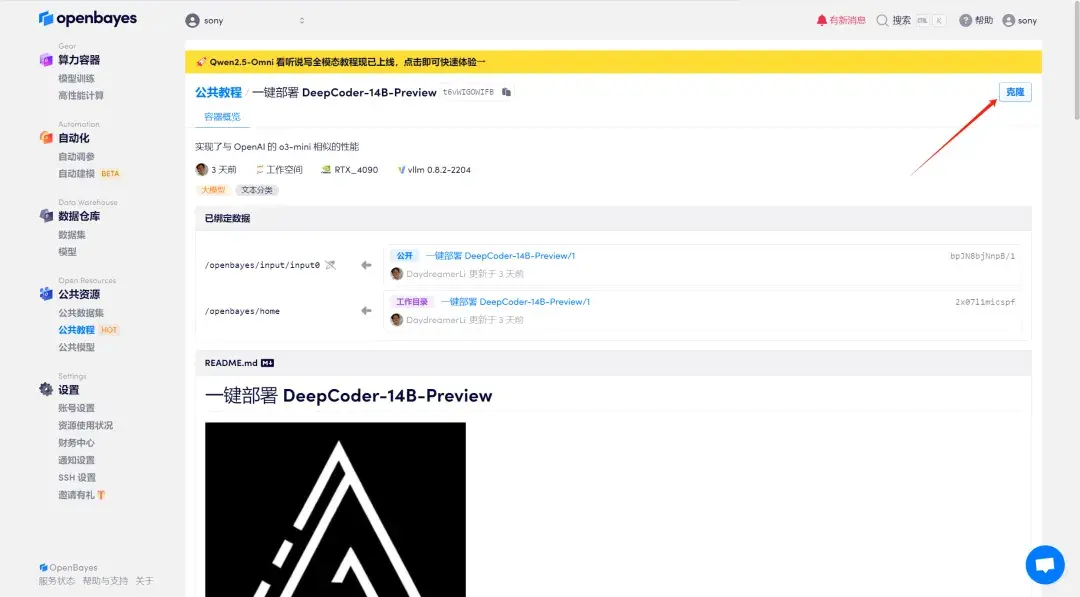

2. After the page redirects, click "Clone" in the upper-right corner to clone the tutorial into your own container.

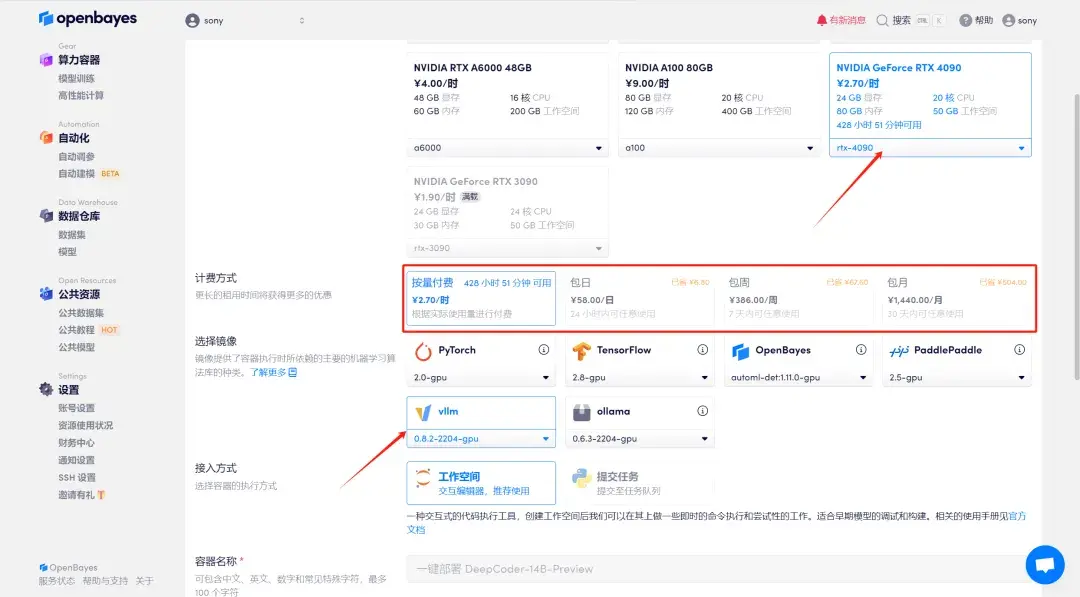

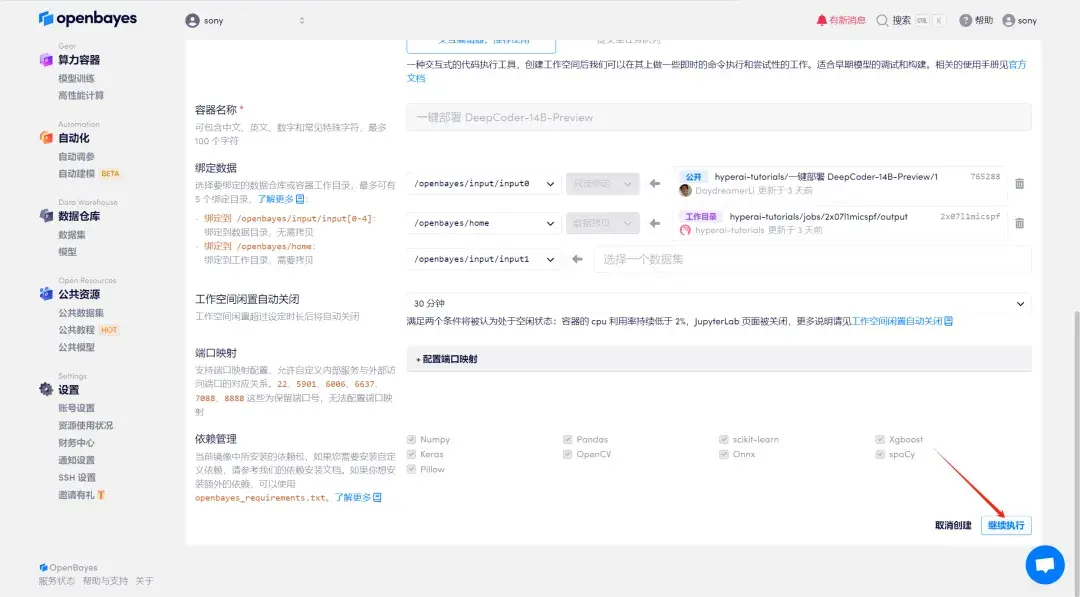

3. Select the "NVIDIA GeForce RTX 4090" GPU and the "vLLM" image. The OpenBayes platform offers 4 billing methods: choose "pay as you go" or "daily/weekly/monthly" according to your needs, then click "Continue". New users who register through the invitation link below get 4 hours of RTX 4090 time and 5 hours of CPU time for free!

HyperAI exclusive invitation link (copy and open in browser):

https://openbayes.com/console/signup?r=Ada0322_NR0n

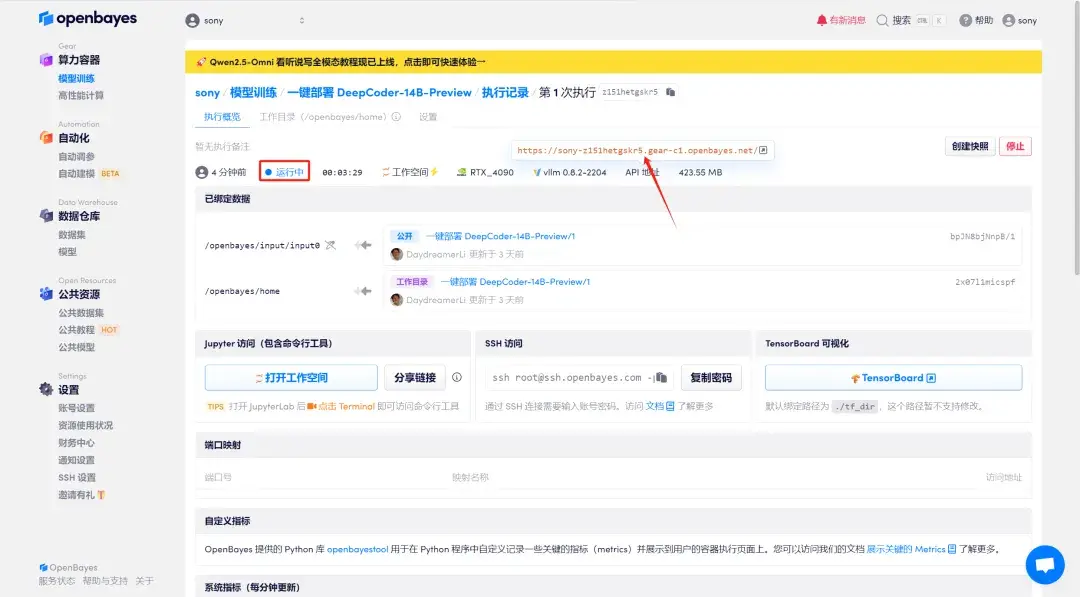

4. Wait for resources to be allocated; the first clone takes about 2 minutes. When the status changes to "Running", click the jump arrow next to "API Address" to open the Demo page. Note that users must complete real-name verification before they can access the API address.

Results

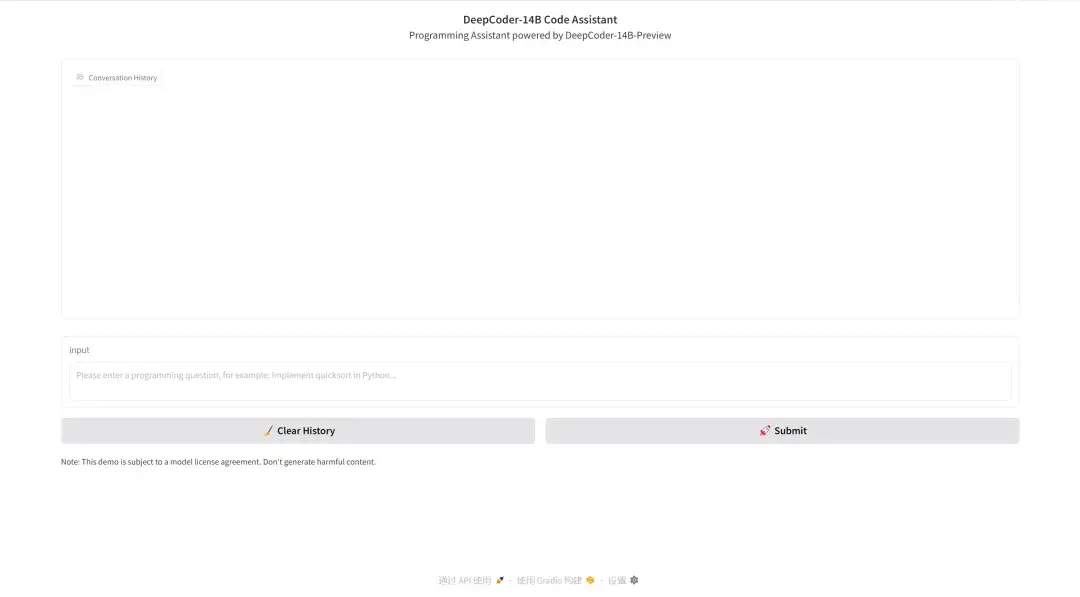

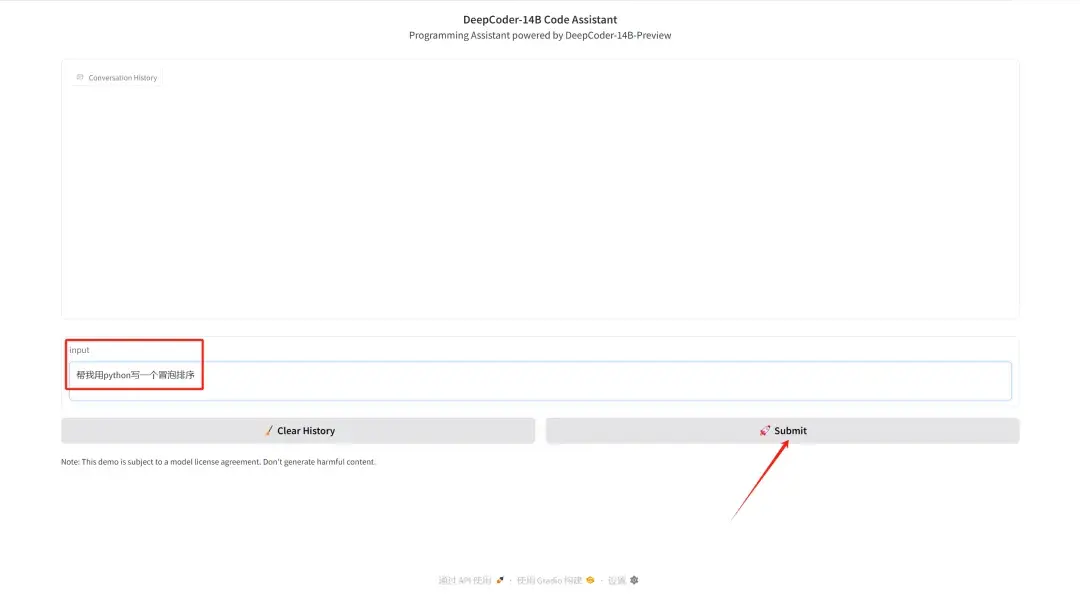

Once on the Demo page, you can start using the model. This tutorial demonstrates DeepCoder-14B-Preview and applies the 8-bit quantization provided by bitsandbytes to reduce GPU memory usage.
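A back-of-the-envelope calculation shows why 8-bit quantization matters on a single RTX 4090, which has 24 GB of VRAM. The sketch below counts only the memory needed to hold the weights. It assumes exactly 14 billion parameters (the real checkpoint size differs slightly) and ignores activations, the KV cache, and framework overhead, all of which add to the total in practice. The names `weight_memory_gib` and `RTX_4090_GIB` are illustrative.

```python
# Rough weight-only memory estimate for a 14B-parameter model.
# Assumptions: exactly 14e9 parameters; activations, KV cache and
# runtime overhead are ignored, so real usage is higher.

PARAMS = 14e9
GIB = 1024 ** 3
RTX_4090_GIB = 24.0  # RTX 4090 ships with 24 GB of VRAM, treated here as 24 GiB

BYTES_PER_PARAM = {
    "fp16/bf16": 2.0,  # 16-bit weights
    "int8 (8-bit, as with bitsandbytes)": 1.0,  # 8-bit weights
}


def weight_memory_gib(params: float, bytes_per_param: float) -> float:
    """Memory needed just to store the weights, in GiB."""
    return params * bytes_per_param / GIB


for name, nbytes in BYTES_PER_PARAM.items():
    need = weight_memory_gib(PARAMS, nbytes)
    verdict = "fits" if need < RTX_4090_GIB else "does not fit"
    print(f"{name}: {need:.1f} GiB of weights, {verdict} in {RTX_4090_GIB:.0f} GiB")
```

Under these assumptions, 16-bit weights alone come to about 26 GiB, which exceeds the card's memory. 8-bit weights need about 13 GiB, leaving headroom for inference.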

Type your prompt in the "input" box and click "Submit" to generate a response. Click "Clear History" to clear the conversation history.

Here we use the classic bubble sort as an example prompt, and the model responds very quickly.
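To check the model's answer against a known-good version, here is a plain reference implementation of bubble sort with the common early-exit optimization. This is our own sketch, not the model's output, and the article does not say which programming language the demo prompt requested.

```python
def bubble_sort(items: list[int]) -> list[int]:
    """Return a new list sorted in ascending order using bubble sort."""
    result = list(items)  # copy so the caller's list is left untouched
    n = len(result)
    for i in range(n - 1):
        swapped = False
        # After pass i, the largest i + 1 elements are in their final positions,
        # so the inner loop can stop i elements earlier each time.
        for j in range(n - 1 - i):
            if result[j] > result[j + 1]:
                result[j], result[j + 1] = result[j + 1], result[j]
                swapped = True
        if not swapped:
            # A full pass with no swaps means the list is already sorted.
            break
    return result


print(bubble_sort([5, 1, 4, 2, 8]))  # [1, 2, 4, 5, 8]
```

The early exit keeps the best case (already sorted input) at O(n), while the worst case remains O(n²) comparisons.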