
Selected for CVPR 2025: Shanghai AI Lab and Partners Propose the First Full-Modality Medical Image Re-identification Framework, Achieving SOTA on 11 Datasets

In AI-driven medical image management, medical image re-identification (MedReID) is a key technology: it aims to automatically associate a patient's images across different modalities and time points, providing strong data support for personalized diagnosis and treatment. However, the field remains largely unexplored. Traditional methods mostly rely on low-level image features or manually maintained metadata, which struggle to meet the clinical need for accurate matching across massive volumes of multimodal images.

To address this challenge, the Shanghai Artificial Intelligence Laboratory, together with several well-known universities, proposed MaMI (Modality-adaptive Medical Identifier), a new medical image re-identification method. MaMI breaks through the traditional single-modality limitation by introducing a continuous modality-based parameter adapter, which lets a unified model adjust itself at runtime into a modality-specific model suited to the current input (such as X-ray, CT, fundus, pathology, or MRI).

With this strategy, MaMI achieves state-of-the-art re-identification performance on 11 public medical imaging datasets, enabling accurate, dynamic retrieval of historical imaging data in support of personalized medicine.

Paper:

https://arxiv.org/pdf/2503.08173

Code and models (open source):

https://github.com/tianyuan168326/All-in-One-MedReID-Pytorch

Research highlights

Proposing the all-in-one medical ReID model (MaMI)

For the first time, the research team built a single model that handles re-identification across multiple medical imaging modalities (such as X-ray, CT, fundus, pathology, and MRI).

Building a comprehensive medical re-ID benchmark

The research team built a comprehensive, fair benchmark on 11 public medical imaging datasets covering multiple imaging modalities and organs, providing a standardized evaluation platform for future research on this problem.

Designing the Continuous Modality-based Parameter Adapter (ComPA)

The research team proposed a continuous modality-based parameter adapter that dynamically generates modality-specific parameters from the input image, turning the original modality-agnostic model into a modality-specific model adapted to the characteristics of the current input.

Integrating medical prior knowledge

By modeling cross-image differences, the research team transferred the rich medical prior knowledge in pre-trained medical foundation models to the re-identification task, improving the model's ability to capture subtle identity cues.

Verifying application value in real-world scenarios

* Historical data-assisted diagnosis: MaMI can retrieve a patient's images from unorganized historical imaging archives, thereby improving the diagnostic accuracy of current examinations;

* Privacy protection: MaMI can detect subtle identity-revealing information in images and automatically remove it before data is shared, preserving the necessary medical information while protecting patient privacy.

Model architecture: introducing a continuous modality-based parameter adapter

There are two major challenges in the field of medical image management: historical image management and privacy protection.

First, traditional methods mainly rely on manually pre-linking images to patient metadata (such as name or medical record number) and retrieving images through query systems. However, when data is stored across different PACS platforms, such links are often incomplete or inaccurate, which makes efficient management difficult. There is therefore an urgent need for a method that can accurately retrieve a patient's historical images from scattered, poorly organized data and so provide reliable historical evidence for diagnosis.

Second, most current privacy protection measures focus only on removing explicit information (such as the patient's name). However, studies have found that images also contain subtle visual cues that can reveal a patient's identity. An ideal medical image re-identification model should automatically detect identity-related regions in an image so that appropriate post-processing can render them unrecognizable, reducing the risk of privacy leakage while preserving the data's medical utility.

Although a small number of studies have begun to explore medical image re-identification, most are limited to specific modalities. Existing work includes:

* Fukuta et al. and Singh et al. used low-level features to perform identity recognition on fundus images;

* Packhauser et al. used neural networks to achieve re-identification of chest X-rays.

These methods are all designed for a single modality and struggle to benefit from the complementary strengths of multimodal data. They also make little use of medical prior information, which limits the models' generalization ability.

In short, research on medical image re-identification is still in its infancy. There is an urgent need for a unified solution that integrates multimodal information while addressing both historical image management and privacy protection.

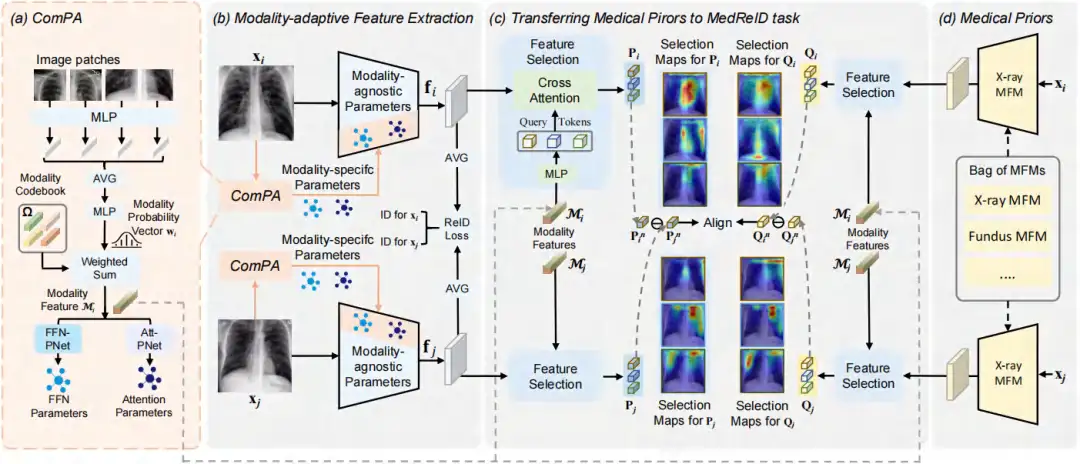

MaMI, proposed by the Shanghai Artificial Intelligence Laboratory in collaboration with several universities, has two main innovations. The first is modality-adaptive feature extraction, achieved by upgrading a modality-agnostic model to a modality-specific model at runtime. The second is transferring the rich medical priors in medical foundation models to the medical re-identification task, which makes the model focus more on medically relevant regions.

As shown in Figure (a) below, the researchers introduced a continuous modality-based parameter adapter (ComPA) that dynamically adjusts a modality-agnostic model, at runtime, into a model suited to the current input modality. The adjusted model extracts identity-related visual features from the input medical image, as shown in Figure (b) below. During optimization, the researchers aligned the key differences between images to transfer rich medical prior knowledge from medical foundation models (MFMs) to the medical ReID task, as shown in Figure (c) below.

Continuous Modality-based Parameter Adapter (ComPA)

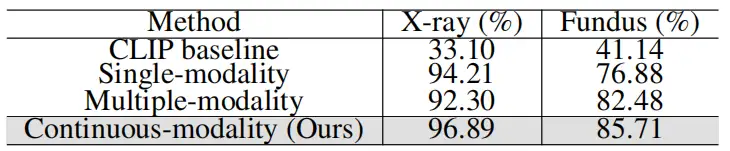

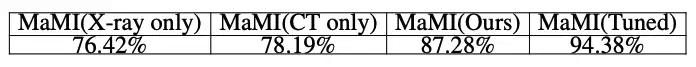

To fully capture the specific features of different medical imaging modalities, the researchers first observed that simply fine-tuning a unified model fails to exploit the strengths of each modality (as shown in Table 1), so modality-adaptive feature extraction is needed. To this end, they designed the ComPA module, which uses a continuous modality representation and low-rank parameter prediction to dynamically generate model parameters specific to the input image at runtime, compensating for the missing modality-specific information in the unified model.
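The idea behind ComPA can be illustrated with a minimal sketch written under several assumptions that do not come from the paper: a continuous modality embedding is already available for the input image (how MaMI computes it is not shown), a hypothetical hypernetwork made of two linear maps (`proj_a`, `proj_b`) predicts the factors of a rank-`r` weight update, and the update is added to a single shared linear layer. It is plain Python for readability and is not the released PyTorch code.

```python
import random

def matmul(a, b):
    """Multiply two matrices stored as lists of rows."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]

def linear(x, weight):
    """Compute W x for a vector x and a weight matrix W (one row per output)."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in weight]

def predict_low_rank_delta(modality_emb, proj_a, proj_b, d_out, d_in, rank):
    """Hypothetical hypernetwork: map a continuous modality embedding to delta_W = A @ B.

    proj_a emits the d_out * rank entries of A; proj_b emits the rank * d_in entries of B.
    """
    flat_a = linear(modality_emb, proj_a)
    flat_b = linear(modality_emb, proj_b)
    a = [flat_a[i * rank:(i + 1) * rank] for i in range(d_out)]  # d_out x rank
    b = [flat_b[i * d_in:(i + 1) * d_in] for i in range(rank)]   # rank x d_in
    return matmul(a, b)                                          # d_out x d_in, rank <= r

def adapted_forward(x, base_weight, modality_emb, proj_a, proj_b, rank):
    """Run the shared layer with input-specific weights W + delta_W."""
    d_out, d_in = len(base_weight), len(base_weight[0])
    delta = predict_low_rank_delta(modality_emb, proj_a, proj_b, d_out, d_in, rank)
    w_eff = [[w + dw for w, dw in zip(w_row, d_row)] for w_row, d_row in zip(base_weight, delta)]
    return linear(x, w_eff), delta

# Toy usage: [1.0, 0.0] and [0.0, 1.0] stand in for two modality embeddings (illustrative only).
random.seed(0)
d_in, d_out, rank, emb_dim = 4, 3, 1, 2
base_w = [[random.uniform(-1, 1) for _ in range(d_in)] for _ in range(d_out)]
proj_a = [[random.uniform(-1, 1) for _ in range(emb_dim)] for _ in range(d_out * rank)]
proj_b = [[random.uniform(-1, 1) for _ in range(emb_dim)] for _ in range(rank * d_in)]
x = [1.0, 0.5, -0.5, 2.0]
y_xray, delta_xray = adapted_forward(x, base_w, [1.0, 0.0], proj_a, proj_b, rank)
y_ct, delta_ct = adapted_forward(x, base_w, [0.0, 1.0], proj_a, proj_b, rank)
```

Predicting only the low-rank factors keeps the hypernetwork small compared with predicting a full weight matrix, and because the embedding is continuous, inputs that sit between modalities receive smoothly varying parameters rather than a hard switch.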

Transfer medical priors from medical foundation models to ReID models

Relying solely on a re-ID loss may cause the model to focus too much on trivial textures (such as equipment noise) and ignore the patient's intrinsic biological characteristics. In contrast, medical foundation models (MFMs) pre-trained on large-scale medical images focus on anatomical structures, which provide richer medical priors for identity recognition. The researchers therefore transferred this prior knowledge to the re-ID model, using local feature maps to guide it.

To bridge the domain gap between the MFMs' pre-training task and the MedReID task, the researchers proposed two strategies:

The first is adaptive selection of key structures. The image's modality features are projected into a set of modality-specific query tokens that capture key structural information in each modality, such as organ contours in chest X-rays or vascular distribution in fundus images. These query tokens are then matched with local feature maps through a cross-attention mechanism, precisely selecting the key medical semantic features. Compared with using all of the feature information, extracting only key structures reduces the risk of overfitting.
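A minimal sketch of the key-structure selection step follows. It assumes one projection matrix per query token and uses the raw local features as both keys and values, without learned key/value projections; the paper's actual projections, token count, and dimensions are not given here, so every name and size is illustrative. Each query token attends over the local feature map with scaled dot-product attention and returns a weighted summary of the patches it selects.

```python
import math

def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def softmax(scores):
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def make_queries(modality_feature, projections):
    """Project the image's modality feature into several query tokens (one matrix per token)."""
    return [[dot(row, modality_feature) for row in proj] for proj in projections]

def cross_attention(queries, local_features):
    """Each query attends over the local feature map (keys = values = raw patch features here).

    Returns one pooled feature per query and the attention weights over patches.
    """
    d = len(queries[0])
    outputs, weights = [], []
    for q in queries:
        attn = softmax([dot(q, k) / math.sqrt(d) for k in local_features])
        pooled = [sum(a * f[j] for a, f in zip(attn, local_features)) for j in range(d)]
        outputs.append(pooled)
        weights.append(attn)
    return outputs, weights

# Toy feature map with 3 patches of dimension 2, and two hypothetical query projections.
patches = [[1.0, 0.0], [0.0, 1.0], [5.0, 0.0]]
projections = [[[1.0, 0.0], [0.0, 0.0]], [[0.0, 0.0], [0.0, 1.0]]]
queries = make_queries([2.0, 2.0], projections)
selected, attn = cross_attention(queries, patches)
```

In the toy run, the first query concentrates on the strongly activated third patch and the second query on the second patch, which is the sense in which each token "selects" a structure instead of pooling the whole map.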

The second is prior learning based on structural residuals. Rather than directly aligning features as conventional methods do, the researchers align inter-image difference features and use a contrastive loss to learn subtle differences between images, improving the model's ability to capture identity features.
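The residual-alignment idea can be sketched as an InfoNCE-style loss over pairwise difference features. This is an illustrative reading, not the paper's exact loss: it assumes the ReID ("student") and MFM ("teacher") features already live in a shared space of the same dimension (in practice a projection head would likely be needed), and the temperature of 0.1 is a hypothetical choice. Each student residual `f_i - f_j` is pulled toward the teacher residual of the same image pair and pushed away from the residuals of other pairs.

```python
import math

def sub(u, v):
    return [a - b for a, b in zip(u, v)]

def cosine(u, v, eps=1e-12):
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v + eps)

def pairwise_residuals(features):
    """Difference features f_i - f_j for every ordered pair (i, j), i != j, in a batch."""
    n = len(features)
    return [sub(features[i], features[j]) for i in range(n) for j in range(n) if i != j]

def residual_contrastive_loss(student_feats, teacher_feats, temperature=0.1):
    """InfoNCE over residuals: the positive for student residual k is teacher residual k."""
    s_res = pairwise_residuals(student_feats)
    t_res = pairwise_residuals(teacher_feats)
    total = 0.0
    for k, s in enumerate(s_res):
        logits = [cosine(s, t) / temperature for t in t_res]
        m = max(logits)
        log_sum_exp = m + math.log(sum(math.exp(z - m) for z in logits))
        total += log_sum_exp - logits[k]  # -log softmax probability of the matching pair
    return total / len(s_res)

student = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
aligned = residual_contrastive_loss(student, [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]])
shuffled = residual_contrastive_loss(student, [[0.0, 1.0], [1.0, 1.0], [1.0, 0.0]])
```

Matching differences rather than raw features means the ReID model only has to agree with the MFM on how two images differ, which leaves it free to use its own feature space for identity.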

Experimental results: Verifying value in actual application scenarios

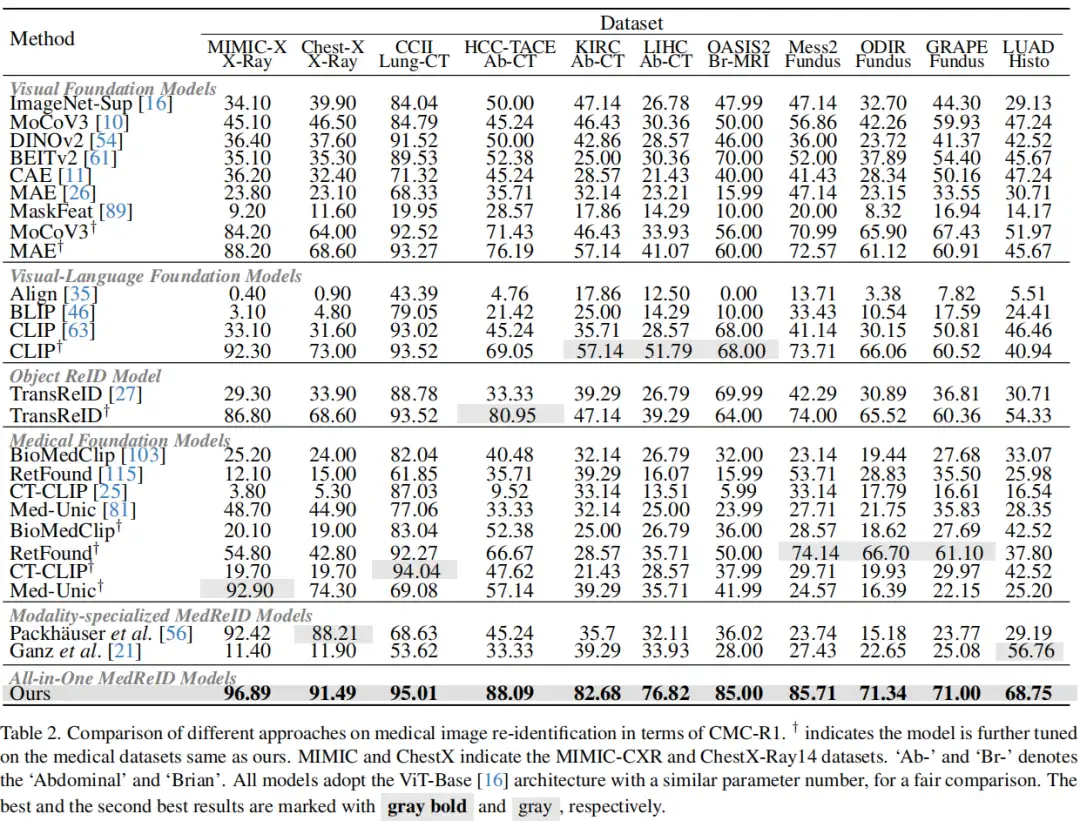

The first medical ReID leaderboard

As shown in the table below, the researchers evaluated vision foundation models, vision-language foundation models, re-identification models, medical foundation models, and single-modality MedReID models. To bring out each model's full potential and ensure a fair comparison, they fine-tuned the representative models. The results show that MaMI consistently achieves SOTA performance across the tests.

Application scenario 1: Automated retrieval of historical cases to support precision medicine

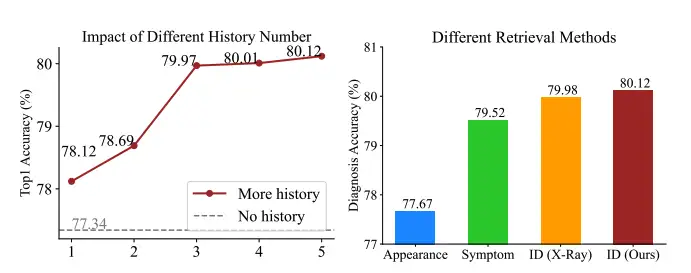

In practice, patients' past medical images are often poorly managed. The research team therefore used MaMI to retrieve historical images related to the current image and fused the features of multiple historical images through a simple MLP to assist diagnosis. The approach relies only on image information, with no history labels needed.

When 5 historical images were retrieved, diagnostic accuracy rose from 77.34% to 80.12%, a gain of 2.78 percentage points. This demonstrates MaMI's effectiveness in leveraging historical data from unstructured archives to improve clinical utility. Further comparative experiments show that MaMI consistently outperforms appearance-based (DINOv2), symptom-based (Med-Unic), and X-ray-specific ReID (Packhauser et al.) methods in image retrieval performance.
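The retrieve-then-fuse pipeline can be sketched as follows. The sketch assumes the archive has already been encoded into embeddings by a ReID model, ranks archive images by cosine similarity to the current image, and fuses the top-k results with a tiny two-layer MLP whose weights (`w_hidden`, `w_out`) are hypothetical placeholders rather than trained values. Averaging the retrieved features before the MLP, and reusing the same embedding for diagnosis, are further simplifying assumptions; the article only says a simple MLP fuses the historical features.

```python
import math

def cosine(u, v, eps=1e-12):
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)) + eps)

def retrieve_history(query_emb, archive, k=5):
    """Return the k archive entries (image_id, embedding) most similar to the query.

    Only image embeddings are used: no patient IDs or history labels.
    """
    return sorted(archive, key=lambda item: cosine(query_emb, item[1]), reverse=True)[:k]

def fuse_and_classify(current_feat, history_feats, w_hidden, w_out):
    """Concatenate the current feature with the mean historical feature, then apply
    a ReLU hidden layer and a linear output layer (a minimal MLP)."""
    dim = len(current_feat)
    if history_feats:
        mean_hist = [sum(h[j] for h in history_feats) / len(history_feats) for j in range(dim)]
    else:
        mean_hist = [0.0] * dim
    x = current_feat + mean_hist  # list concatenation
    hidden = [max(0.0, sum(w * xi for w, xi in zip(row, x))) for row in w_hidden]
    return [sum(w * h for w, h in zip(row, hidden)) for row in w_out]

# Toy archive of unlabeled embeddings (illustrative values).
archive = [("scan_a", [2.0, 0.1]), ("scan_b", [1.0, 1.0]), ("scan_c", [0.0, 1.0])]
current = [1.0, 0.0]
top = retrieve_history(current, archive, k=2)
logits = fuse_and_classify(current, [emb for _, emb in top],
                           w_hidden=[[1.0, 0.0, 1.0, 0.0], [0.0, 1.0, 0.0, 1.0]],
                           w_out=[[1.0, -1.0]])
```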
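The accuracy gain from history retrieval is an absolute difference between two percentages; expressed both in absolute and in relative terms:

```latex
\Delta_{\text{abs}} = 80.12\% - 77.34\% = 2.78 \text{ percentage points},
\qquad
\Delta_{\text{rel}} = \frac{80.12 - 77.34}{77.34} \approx 3.59\%
```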

Application scenario 2: Automated privacy protection to help ensure ethical safety in the era of large models

The researchers used a simple U-Net to predict and remove identity-related visual features in images, and used MaMI's ReID model to provide an identity similarity loss. This lowers the similarity of identity features while keeping the similarity of medical features high. Results from training on the MIMIC-X dataset and evaluating on the Chest-X dataset show that the protected images resist re-identification attacks, while disease classification accuracy is only slightly lower than on the original images. The results are shown in the following table:
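The training objective described above can be sketched as a two-term loss. The U-Net and both encoders are omitted, and the cosine similarity, the clamping, and the weights `lambda_id` and `lambda_med` are assumptions for illustration; the article does not give the exact loss form. The function takes precomputed embeddings of the original and protected images and scores how well a protection trades identity removal against medical fidelity.

```python
import math

def cosine(u, v, eps=1e-12):
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)) + eps)

def protection_loss(id_orig, id_prot, med_orig, med_prot, lambda_id=1.0, lambda_med=1.0):
    """Hypothetical loss for training the protector network (lower is better).

    id_*  : identity embeddings from a frozen ReID model (e.g. MaMI)
    med_* : embeddings from a medical feature extractor
    """
    # Clamped so that anti-correlated identity embeddings earn no extra credit.
    identity_similarity = max(0.0, cosine(id_orig, id_prot))
    # Penalize any drop in medical-feature similarity.
    medical_dissimilarity = 1.0 - cosine(med_orig, med_prot)
    return lambda_id * identity_similarity + lambda_med * medical_dissimilarity

unchanged = protection_loss([1.0, 0.0], [1.0, 0.0], [0.0, 1.0], [0.0, 1.0])  # identity kept
good = protection_loss([1.0, 0.0], [0.0, 1.0], [0.0, 1.0], [0.0, 1.0])       # identity removed, pathology kept
destroyed = protection_loss([1.0, 0.0], [0.0, 1.0], [0.0, 1.0], [1.0, 0.0])  # pathology lost too
```

Only the protection that removes identity while keeping medical content scores near zero, which mirrors the trade-off the experiment measures.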

Conclusion

The MaMI model unifies medical image re-identification and privacy protection, substantially improving overall performance in both historical image retrieval and identity protection. Its gains are supported by solid theoretical analysis and comprehensive experiments. The team believes that the continuous modality-based parameter adapter and its integration of medical prior knowledge offer a new paradigm for multimodal medical image management, and expects them to inspire more advanced image processing techniques for personalized diagnosis, treatment, and privacy protection.
