
With an Accuracy Far Exceeding That of Junior Dermatologists, Peking University International Hospital and Others Developed a Deep Learning Algorithm to Detect and Grade Acne Lesions

Acne, commonly known as pimples, is a chronic inflammatory skin disease that affects more than 80% of adolescents and 9.4% of people of all ages worldwide. Accurate grading of acne severity is crucial for clinical treatment and follow-up management. However, traditional acne grading relies on dermatologists' visual observation and clinical experience, so some error is inevitable.

In recent years, as artificial intelligence has expanded steadily into medicine, AI-based acne image analysis has attracted growing attention. Although several AI methods have been developed to automate acne severity grading, the diversity of image acquisition sources and application scenarios can degrade their performance.

In this context, Han Gangwen, chief physician of the Department of Dermatology at Peking University International Hospital, and his team developed a deep learning algorithm called AcneDGNet, which can accurately detect acne lesions and judge their severity in different healthcare scenarios. The results were published in the Nature journal Scientific Reports under the title "Evaluation of an acne lesion detection and severity grading model for Chinese population in online and offline healthcare scenarios".

Prospective evaluation showed that AcneDGNet is not only more accurate than junior dermatologists but also comparable in accuracy to senior dermatologists. It can accurately detect acne lesions and grade their severity across healthcare scenarios, helping dermatologists and patients diagnose and manage acne in both online consultations and offline clinical settings.

Research highlights

* Innovative model design: Integrating a Vision Transformer with a convolutional neural network yields more effective hierarchical feature representations, making grading more accurate.
* Diverse evaluation datasets: 2,157 facial images were collected from public and self-built datasets and captured with a variety of imaging devices, making the data more comprehensive and model training and evaluation more representative.
* Comprehensive multi-scenario evaluation: Model performance was evaluated in both online consultation and offline clinical scenarios, using datasets of different kinds and combining retrospective and prospective evaluation.
* Clinically grounded data: All data come from the Chinese population, and the AGS scale, which suits Chinese data, was chosen as the grading standard. This keeps the work closer to clinical practice in China and provides strong support for acne diagnosis and research in China.
* High accuracy and clinical value: Grading accuracy reached 89.5% in online scenarios and 89.8% in offline scenarios, with small counting errors. The model grades acne more accurately than junior dermatologists.

Paper address:

https://www.nature.com/articles/s41598-024-84670-z

The open source project "awesome-ai4s" brings together more than 200 AI4S paper interpretations and provides massive data sets and tools:

https://github.com/hyperai/awesome-ai4s

Dataset: Diverse data for model training and testing

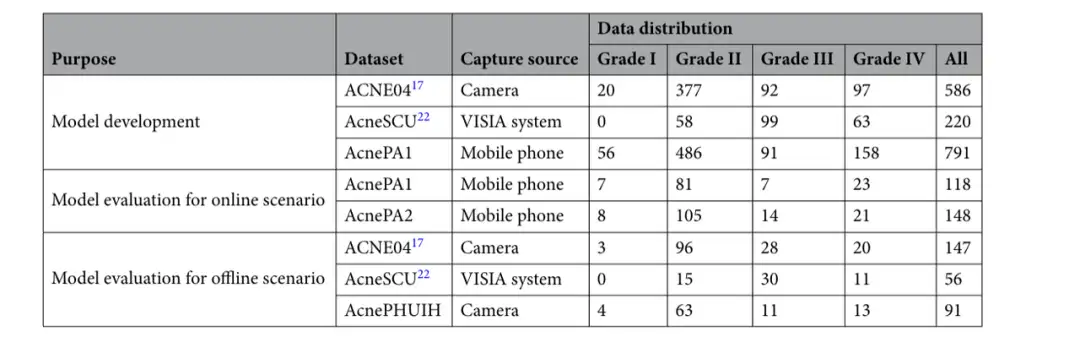

The researchers collected two public datasets, ACNE04 and AcneSCU, and three self-built datasets, AcnePA1 (Ping An Good Doctor acne data), AcnePA2, and AcnePKUIH (Peking University International Hospital acne data). All images in ACNE04, AcneSCU, and AcnePA1 were randomly divided into training datasets and test datasets, while images in AcnePA2 and AcnePKUIH were only used as test datasets.

Training dataset: 586 images from ACNE04, captured with a digital camera; 220 images from AcneSCU, collected with the VISIA system (a digital imaging system for skin analysis); and 791 images from AcnePA1, captured with smartphones.

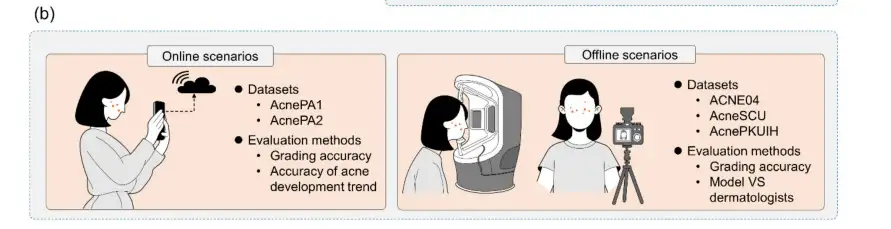

Test dataset: Five datasets were selected for two application scenarios: online consultation and offline medical treatment. The online consultation test data comprise 118 images from AcnePA1 and 148 images from AcnePA2, all taken with smartphones. The offline (hospital) test data comprise 147 images from ACNE04 taken with a digital camera, 56 images from AcneSCU collected with the VISIA system, and 91 images from AcnePKUIH taken with a camera. See the figure below.
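As a quick consistency check, the per-dataset image counts listed above add up to the 2,157 facial images cited in the research highlights. The Python snippet below only tallies the numbers reported in the text; the dictionary and variable names are illustrative.

```python
# Image counts as reported in the text, grouped by split and scenario.
TRAIN = {"ACNE04": 586, "AcneSCU": 220, "AcnePA1": 791}
TEST_ONLINE = {"AcnePA1": 118, "AcnePA2": 148}                 # smartphone images
TEST_OFFLINE = {"ACNE04": 147, "AcneSCU": 56, "AcnePKUIH": 91}  # camera / VISIA images

n_train = sum(TRAIN.values())
n_online = sum(TEST_ONLINE.values())
n_offline = sum(TEST_OFFLINE.values())
n_total = n_train + n_online + n_offline

print(f"training images:     {n_train}")    # 1597
print(f"online test images:  {n_online}")   # 266
print(f"offline test images: {n_offline}")  # 294
print(f"all images:          {n_total}")    # 2157
```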

Model architecture: Vision Transformer combined with CNN

According to the Global Burden of Disease Study (GBD) 2021, there are 231 million acne patients among people aged 10-24 worldwide, nearly 300 million people, accounting for 1/4 of the age group! In traditional acne diagnosis, doctors judge acne severity mainly by visual assessment. However, this approach relies heavily on the doctor's personal experience and is prone to considerable error. Moreover, in areas with scarce medical resources, it is difficult for people to obtain diagnosis and treatment from professional dermatologists.

AcneDGNet is built on an innovative deep learning algorithm that combines a Vision Transformer (ViT) with a convolutional neural network (CNN).

* The Vision Transformer has a distinctive advantage in feature extraction: it can capture relationships among features across a wider region of the image.

* Convolutional neural networks are good at processing local features and can accurately identify various acne lesions.

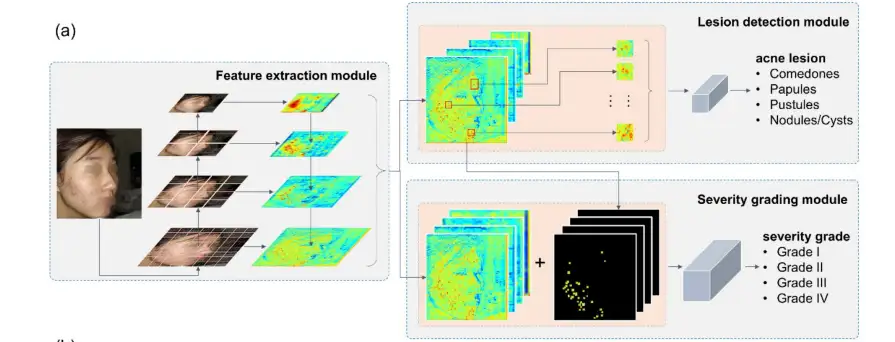

The Vision Transformer extracts features from acne images collected from different sources to generate strong global features. The convolutional neural network detects four types of acne lesions, namely comedones, papules, pustules, and nodules/cysts, following the guidelines of the Acne Grading System (AGS). Finally, acne severity is determined by fusing the global features with local lesion-aware features.
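The detection module's per-image output can be thought of as a list of boxes, each labeled with one of the four AGS lesion categories and a confidence score. The sketch below shows how such output might be turned into per-category lesion counts, the quantity later compared against dermatologists' counts. The `Detection` type, `count_lesions` helper, and the 0.5 score threshold are hypothetical illustrations, not details taken from the paper.

```python
from collections import Counter
from typing import NamedTuple

# The four AGS lesion categories named in the text.
LESION_TYPES = ("comedone", "papule", "pustule", "nodule/cyst")


class Detection(NamedTuple):
    """One detected lesion (hypothetical format): box (x1, y1, x2, y2), category, confidence."""
    box: tuple[float, float, float, float]
    label: str
    score: float


def count_lesions(detections: list[Detection], threshold: float = 0.5) -> dict[str, int]:
    """Count detections per lesion type, keeping only those with score >= threshold."""
    counts = Counter(d.label for d in detections if d.score >= threshold)
    # Report every category, including those with zero detections.
    return {t: counts.get(t, 0) for t in LESION_TYPES}


# Hypothetical detector output for one face image.
dets = [
    Detection((10, 12, 18, 20), "comedone", 0.91),
    Detection((40, 35, 52, 47), "papule", 0.84),
    Detection((60, 70, 71, 80), "papule", 0.42),  # below threshold, ignored
    Detection((90, 20, 104, 33), "pustule", 0.77),
]
print(count_lesions(dets))
# {'comedone': 1, 'papule': 1, 'pustule': 1, 'nodule/cyst': 0}
```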

AcneDGNet consists of three main modules: a feature extraction module, a lesion detection module, and a severity grading module. The feature extraction module adopts a Vision Transformer architecture to generate a stronger global feature representation; the lesion detection module uses a convolutional neural network (CNN) to detect the four types of acne lesions; and the severity grading module determines acne severity by fusing global and local lesion-aware feature representations.

The AcneDGNet framework is shown below. First, the face image is fed into the feature extraction module, which comprises a Swin Transformer architecture and a feature pyramid architecture. The multi-scale feature maps output by this module are then passed to both the lesion detection module and the severity grading module.

In the lesion detection module, feature maps of candidate acne regions are obtained through a region proposal network and used to predict the location and category of each acne lesion in the image. In the severity grading module, the multi-scale feature maps are resized and combined with the region-level lesion-aware feature maps from the lesion detection module to predict the acne severity grade of the image.
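The severity-grading path described above can be sketched in plain Python to make the data flow concrete: resize each multi-scale map to a common resolution, concatenate the results with lesion-aware features, and map the fused vector to a probability for each severity grade. Every concrete choice here is an assumption for illustration, not the paper's design: single-channel maps, nearest-neighbour resizing to a 2x2 grid, concatenation as the fusion operator, a single linear layer, four severity grades, and random weights standing in for trained parameters. `resize_nearest` and `grade_severity` are hypothetical helper names.

```python
import math
import random

# A single-channel 2-D feature map (real feature maps have many channels).
Grid = list[list[float]]


def resize_nearest(fmap: Grid, out_h: int, out_w: int) -> Grid:
    """Nearest-neighbour resize of a 2-D feature map to out_h x out_w."""
    in_h, in_w = len(fmap), len(fmap[0])
    return [[fmap[i * in_h // out_h][j * in_w // out_w] for j in range(out_w)]
            for i in range(out_h)]


def softmax(logits: list[float]) -> list[float]:
    """Numerically stable softmax."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def grade_severity(multi_scale: list[Grid], lesion_feats: list[float],
                   weights: list[list[float]], bias: list[float],
                   grid: tuple[int, int] = (2, 2)) -> list[float]:
    """Resize every scale to a common grid, flatten and concatenate them with
    the lesion-aware features, then apply a linear layer and softmax."""
    fused: list[float] = []
    for fmap in multi_scale:
        resized = resize_nearest(fmap, *grid)
        fused.extend(v for row in resized for v in row)
    fused.extend(lesion_feats)
    if any(len(row) != len(fused) for row in weights):
        raise ValueError(f"each weight row needs {len(fused)} entries")
    logits = [sum(w * x for w, x in zip(row, fused)) + b
              for row, b in zip(weights, bias)]
    return softmax(logits)


# Toy inputs: three pyramid levels of decreasing resolution (8x8, 4x4, 2x2).
rng = random.Random(0)
scales = [[[rng.random() for _ in range(s)] for _ in range(s)] for s in (8, 4, 2)]
lesion_feats = [3.0, 2.0, 1.0, 0.0]  # hypothetical pooled lesion-aware features
N_GRADES = 4                         # assumed number of severity grades
n_in = len(scales) * 2 * 2 + len(lesion_feats)  # 16 fused features
weights = [[rng.uniform(-0.1, 0.1) for _ in range(n_in)] for _ in range(N_GRADES)]
bias = [0.0] * N_GRADES

probs = grade_severity(scales, lesion_feats, weights, bias)
print("grade probabilities:", [round(p, 3) for p in probs])
print("predicted grade index:", probs.index(max(probs)))
```

In the actual model these steps operate on multi-channel tensors produced by the Swin Transformer and feature pyramid, and the weights are learned during training.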

Model evaluation in online consultation and offline medical treatment scenarios

To verify the effectiveness of AcneDGNet more comprehensively, the researchers designed two application scenarios: an online consultation scenario, in which facial images are taken with smartphones, and an offline clinical scenario, in which photos are taken with professional equipment such as digital cameras and the VISIA system. Different datasets and evaluation methods were used for each scenario.

1. High accuracy in online medical scenarios

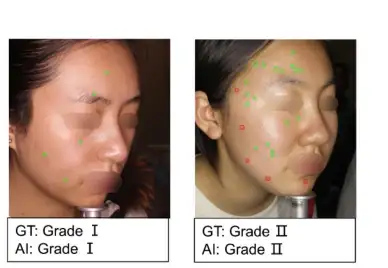

For the online medical scenario, the researchers selected test data from the AcnePA1 and AcnePA2 datasets. All of these images were taken and uploaded by patients with smartphones, as shown in the figure below, and thus reflect how images are actually acquired in online care.

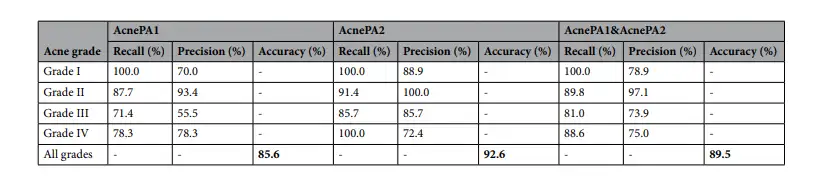

The results are impressive. AcneDGNet performed well in acne severity grading, reaching an overall accuracy of 89.5%, with 85.6% on the AcnePA1 dataset and as high as 92.6% on the AcnePA2 dataset. This indicates that AcneDGNet can reliably determine acne severity and give doctors a sound basis for diagnosis. Detailed evaluation results are shown in the following table:

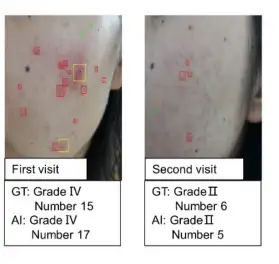

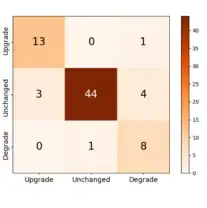
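The overall 89.5% is consistent with accuracy pooled over all 266 online test images rather than a simple average of the two per-dataset figures (which would give 89.1%). Given the test-set sizes listed in the dataset section, 85.6% of 118 and 92.6% of 148 correspond to 101 and 137 correctly graded images; these counts are back-calculated from the reported percentages, not quoted from the paper's tables.

```python
# (correctly graded, total) per online test set. The correct counts are
# back-calculated from the reported percentages and test-set sizes.
online = {"AcnePA1": (101, 118), "AcnePA2": (137, 148)}


def pooled_accuracy(results: dict[str, tuple[int, int]]) -> float:
    """Accuracy over all images combined (micro-average), not a mean of percentages."""
    correct = sum(c for c, _ in results.values())
    total = sum(n for _, n in results.values())
    return correct / total


for name, (c, n) in online.items():
    print(f"{name}: {c}/{n} = {c / n:.1%}")
print(f"overall: {pooled_accuracy(online):.1%}")
# AcnePA1: 101/118 = 85.6%
# AcnePA2: 137/148 = 92.6%
# overall: 89.5%
```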

Beyond grading, AcneDGNet also proved capable of tracking how acne changes over time. On the AcnePA2 dataset, it identified whether a patient's acne grade had increased, remained unchanged, or decreased with an accuracy of 87.8%, correctly identifying the trend in 65 of 74 follow-up visits, as shown below:

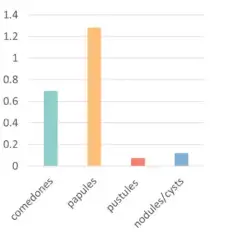
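One plausible way to score trend detection, assumed here rather than taken from the paper, is to derive a trend label (grade increased, unchanged, or decreased) from the grades at two consecutive visits and count how often the model's trend matches the dermatologists' trend. The function names and the toy grades below are hypothetical; only the 65-of-74 figure comes from the text.

```python
def trend(grade_before: int, grade_after: int) -> str:
    """Classify the change in severity grade between two visits."""
    if grade_after > grade_before:
        return "increased"
    if grade_after < grade_before:
        return "decreased"
    return "unchanged"


def trend_accuracy(predicted: list[tuple[int, int]],
                   reference: list[tuple[int, int]]) -> float:
    """Fraction of follow-up visits whose predicted trend matches the reference trend."""
    if len(predicted) != len(reference):
        raise ValueError("predicted and reference must cover the same visits")
    hits = sum(trend(*p) == trend(*r) for p, r in zip(predicted, reference))
    return hits / len(reference)


# Hypothetical (grade at previous visit, grade at current visit) pairs.
model_grades = [(1, 2), (2, 2), (3, 1)]
doctor_grades = [(1, 2), (2, 3), (3, 2)]
print(f"{trend_accuracy(model_grades, doctor_grades):.3f}")  # 0.667 (2 of 3 trends match)
print(f"{65 / 74:.1%}")                                      # 87.8%, the reported figure
```

In the toy data, the third visit counts as a match even though the model's current grade differs from the doctor's, because both show the grade decreasing; trend accuracy and grading accuracy can therefore differ.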

AcneDGNet also performs well on lesion counting. The total counting error across all lesion types is only 1.91±3.28; by type, the counting error is 0.70±1.92 for comedones, 1.28±2.01 for papules, 0.07±0.29 for pustules, and 0.12±0.38 for nodules/cysts, as shown in the figure below. These low errors show that AcneDGNet can count acne lesions accurately, giving doctors reliable data for assessing a patient's condition.
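A mean±SD counting error is typically computed from per-image absolute differences between predicted and reference lesion counts. The exact definition used in the paper is not stated here, so the sketch below assumes absolute per-image errors summarized by their mean and sample standard deviation; `counting_error` is a hypothetical helper and the counts are made-up toy values.

```python
import statistics


def counting_error(predicted: list[int], reference: list[int]) -> tuple[float, float]:
    """Mean and sample standard deviation of per-image absolute count errors."""
    if len(predicted) != len(reference):
        raise ValueError("predicted and reference must have the same length")
    errors = [abs(p - r) for p, r in zip(predicted, reference)]
    return statistics.mean(errors), statistics.stdev(errors)


# Hypothetical per-image papule counts: model vs. dermatologist annotation.
model_counts = [5, 2, 0, 7, 3]
doctor_counts = [4, 2, 1, 10, 3]
mean_err, sd_err = counting_error(model_counts, doctor_counts)
print(f"{mean_err:.2f}±{sd_err:.2f}")  # 1.00±1.22
```

Because absolute errors cannot be negative, an SD larger than the mean, as in the reported 1.91±3.28, suggests that most images have errors near zero while a few images account for larger misses.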

2. Offline hospital diagnosis performance exceeds that of doctors with more than 5 years of experience

AcneDGNet also performs well in offline medical treatment scenarios. The researchers conducted retrospective and prospective evaluations on the ACNE04, AcneSCU, and AcnePKUIH datasets, as shown below:

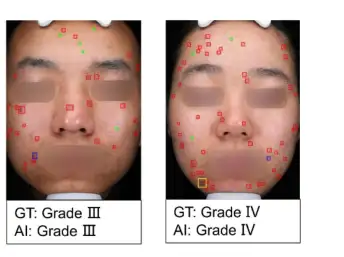

In the retrospective evaluation, AcneDGNet was tested on the ACNE04 and AcneSCU datasets. The overall accuracy reached 90.1%, with 91.2% on ACNE04 and 87.5% on AcneSCU. Detailed evaluation results are shown in the table below.

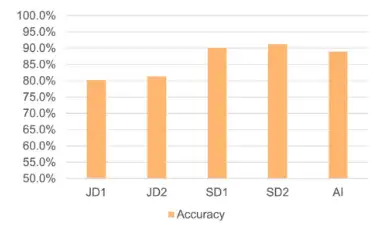

In the prospective evaluation, AcneDGNet was tested on the AcnePKUIH dataset, and its results were compared with the diagnoses of two junior dermatologists with more than 5 years of experience (JD1 and JD2) and two senior dermatologists with more than 10 years of experience (SD1 and SD2), as shown in the figure.

The results are striking. AcneDGNet achieved an accuracy of 89.0%, higher than the junior dermatologists' 80.8% and very close to the senior dermatologists' 90.7%. Combining the retrospective and prospective evaluations, AcneDGNet's overall accuracy in offline scenarios reached 89.8%. This result demonstrates the value of AcneDGNet in offline clinical settings: it can not only diagnose acne accurately but also assist less experienced doctors and help them improve their diagnostic accuracy.
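The offline figures are consistent with accuracy pooled over images rather than averaged across datasets. Back-calculating from the test-set sizes given in the dataset section (these counts are inferred, not quoted from the paper), 91.2% of 147, 87.5% of 56, and 89.0% of 91 correspond to 134, 49, and 81 correctly graded images, which reproduce both the 90.1% retrospective figure and the 89.8% overall offline figure. The `pooled` helper is a hypothetical name.

```python
# (correctly graded, total) per offline test set. Correct counts are
# back-calculated from the reported accuracies and test-set sizes.
offline = {
    "retrospective": {"ACNE04": (134, 147), "AcneSCU": (49, 56)},
    "prospective": {"AcnePKUIH": (81, 91)},
}


def pooled(pairs) -> tuple[int, int]:
    """Sum (correct, total) pairs so accuracy is computed over all images."""
    pairs = list(pairs)  # materialize: the pairs are iterated twice below
    return sum(c for c, _ in pairs), sum(n for _, n in pairs)


for phase, per_dataset in offline.items():
    c, n = pooled(per_dataset.values())
    print(f"{phase}: {c}/{n} = {c / n:.1%}")

c, n = pooled(pair for sets in offline.values() for pair in sets.values())
print(f"offline overall: {c}/{n} = {c / n:.1%}")
# retrospective: 183/203 = 90.1%
# prospective: 81/91 = 89.0%
# offline overall: 264/294 = 89.8%
```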

AI empowers skin diagnosis and enters the era of intelligent diagnosis and treatment

In fact, AI is already widely applied in dermatology. In 2019, L'Oréal and Alibaba Group jointly launched the world's first mobile AI detection application for acne: La Roche-Posay EFFACLAR SPOTSCAN.

In addition, in May 2023, an intelligent acne severity assessment system jointly developed by Professor Liu Jie's team from the Department of Dermatology at Peking Union Medical College Hospital and Hangzhou Yongliu Technology Co., Ltd. was officially released to the industry. After a year of clinical use and feedback from dermatologists, the system underwent multiple algorithm upgrades and functional optimizations. In February 2024, Peking Union Medical College Hospital published an announcement on the transfer of this scientific and technological achievement on its official website; following the hospital's review, the system formally entered the stage of clinical translation and application.

In July 2024, Tencent's AI acne diagnosis and treatment robot was launched on the market. It can make a diagnosis in just 3 seconds and provide a treatment plan in 10 seconds. In addition, Tencent's medical AI system has been deployed in more than 1,300 institutions. The reported clinical trial results are striking: a diagnostic accuracy of 99.7% for cystic acne (versus 82.4% for human doctors); acne flare-ups predicted 6 months in advance (prediction sensitivity 91.3%); and a 76% reduction in treatment-related scarring risk (based on 3.2 million AI simulations).

After continuous algorithm iterations, AI-based acne diagnosis has largely matured. Next, AI-assisted skin diagnosis will continue to expand into more areas.

The future is promising: AI for Science holds unlimited possibilities

The success of AcneDGNet in acne diagnosis marks a new breakthrough for AI in medicine and shows the great potential of AI for Science. It not only brings acne patients more accurate and convenient diagnosis, but also provides valuable experience and examples for AI applications in the medical field.

The influence of AI for Science is not limited to the medical field. AI can also play an important role in other scientific fields such as materials science, physics, and astronomy. It can help scientists process and analyze massive amounts of data and accelerate the progress of scientific research. In materials science, it can help scientists design better performing materials by simulating and predicting the properties of materials; in physics, it can help scientists analyze experimental data and discover new physical phenomena and laws; in astronomy, AI can help astronomers process and analyze astronomical observation data and discover new celestial bodies and cosmic phenomena...

AI is like a master key, opening doors to unknown scientific fields. I believe that in the future, AI for Science will bring more breakthroughs and innovations, and make greater contributions to human progress and development!
