Stock Price Keeps Falling: Huang Renxun Reveals Launch Timing of Blackwell Ultra and Vera Rubin, with Reasoning Capability in Focus

In recent years, NVIDIA has been present in almost every major trend in global technology, from cloud computing to cryptocurrency, from the metaverse to artificial intelligence. In the current wave of artificial intelligence in particular, NVIDIA has drawn on its deep technical expertise to hold about 95% of the data center GPU market, making it the clear leader in AI chips.

However, the emergence of DeepSeek's reasoning model earlier this year sent a clear signal to the public: the old approach of achieving miracles through sheer data and computing power is gradually losing its effectiveness. This shook the market's expectations for AI computing demand, and the stock prices of many technology giants, including NVIDIA, fell sharply. Although NVIDIA's stock price has since rebounded, its dominant position in the industry no longer looks as unshakable as it once did. To prove its strength, NVIDIA needs to comprehensively upgrade its GPUs.

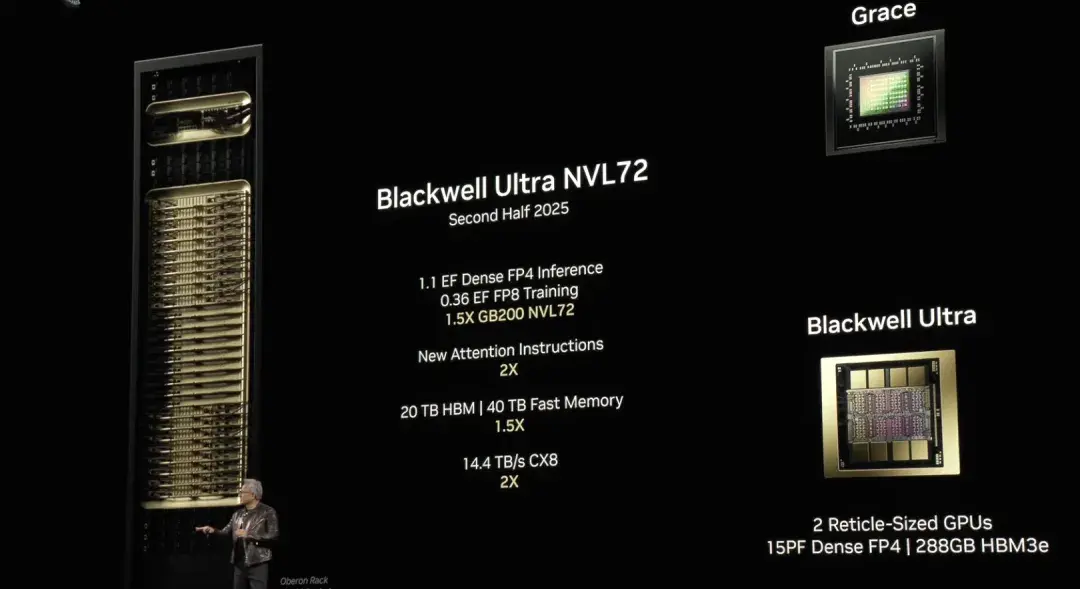

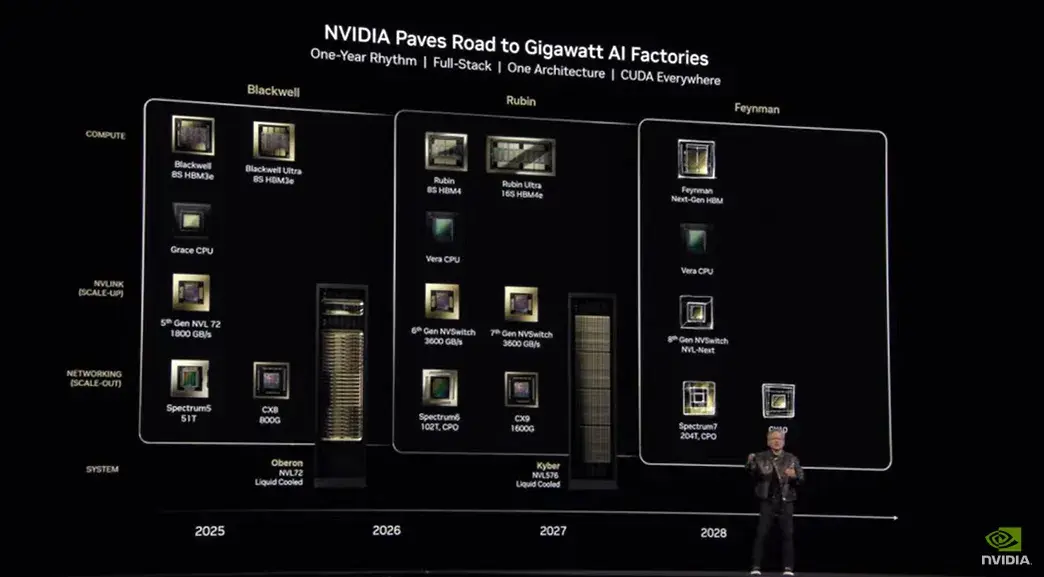

At the GTC 2025 conference, held at 1 a.m. Beijing time on March 19, Huang Renxun shared the latest news about NVIDIA's chips: Blackwell Ultra, an upgraded version of the Blackwell AI chip architecture, will launch in the second half of this year. The NVIDIA GB300 NVL72 and NVIDIA HGX™ B300 NVL16 will comprehensively enhance model reasoning capabilities. NVIDIA's next-generation GPU architecture, Vera Rubin, will be available next year.

NVIDIA Blackwell Ultra Accelerates AI Inference

At last year's GTC conference, Huang Renxun announced the next-generation AI chip architecture, Blackwell. As the successor to Hopper, the Blackwell architecture has 208 billion transistors and focuses on accelerating generative AI tasks as well as large-scale training and inference workloads. Huang Renxun proudly declared in his speech that it is the most powerful AI chip series to date.

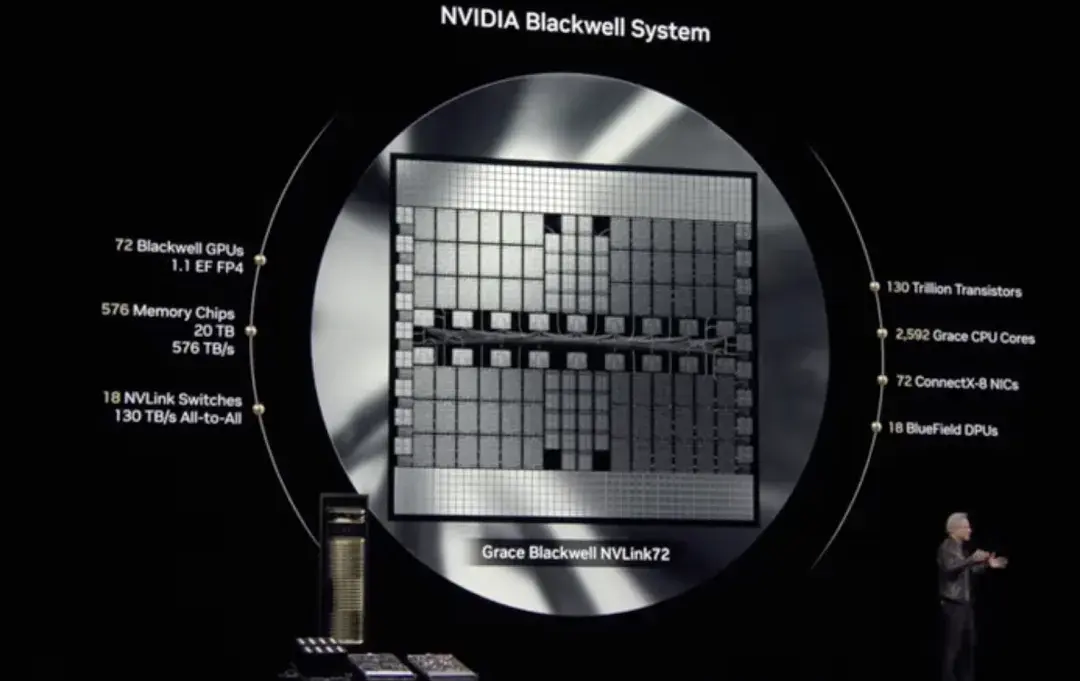

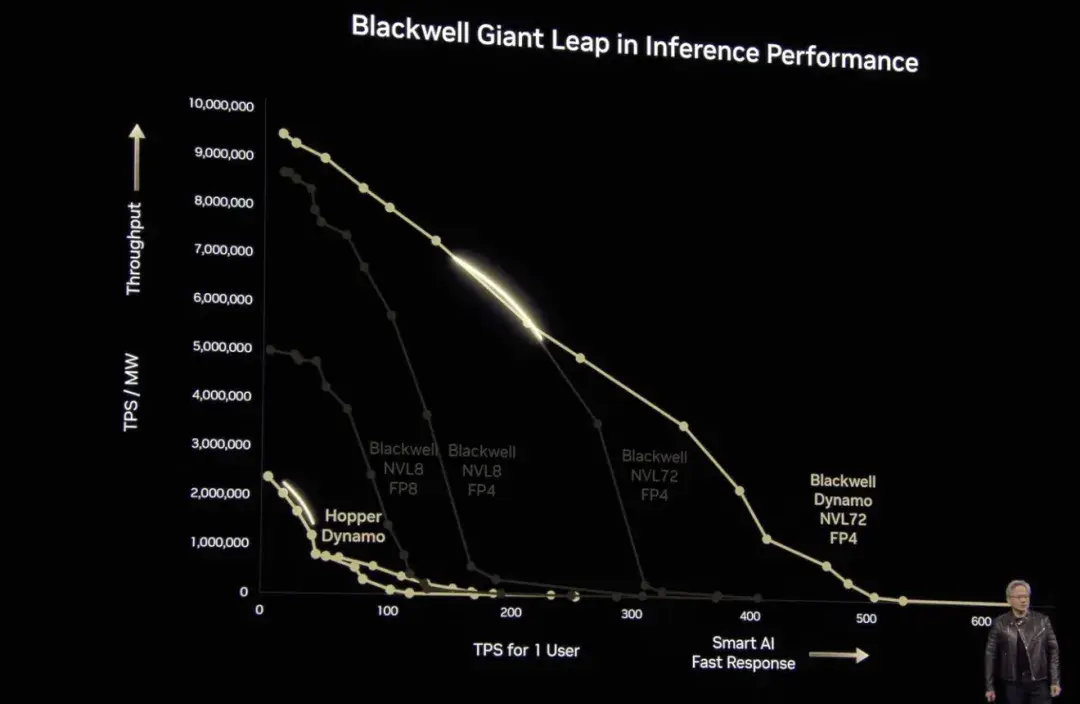

In today's live broadcast, Huang Renxun mentioned Blackwell again. He said: "The advantages of Blackwell are that it is faster, larger, has more transistors, and has stronger computing power." In addition, the NVL72 architecture and the FP4 computing precision it adopts further improve Blackwell's performance, which means the same computing tasks can be completed with less energy.
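One way to see why a lower-precision format such as FP4 can reduce the work per task is to compare how many bytes the same set of model weights occupies at different bit widths. The following is a minimal sketch, not NVIDIA's implementation: the 70-billion-parameter model size is a hypothetical value chosen for illustration, and the calculation ignores any scaling-factor metadata that a real FP4 scheme may store alongside the weights.

```python
# Bits used to store one value in each floating-point format.
BITS_PER_VALUE = {"FP16": 16, "FP8": 8, "FP4": 4}

# Hypothetical model size for illustration only; not a figure from the article.
HYPOTHETICAL_PARAMS = 70_000_000_000


def weight_bytes(num_params: int, fmt: str) -> int:
    """Return the bytes needed to store num_params weights in the given format."""
    return num_params * BITS_PER_VALUE[fmt] // 8


for fmt in BITS_PER_VALUE:
    gigabytes = weight_bytes(HYPOTHETICAL_PARAMS, fmt) / 1e9
    print(f"{fmt}: {gigabytes:.0f} GB")
```

Under these assumptions, halving the bit width halves the data that has to be stored and moved, which is one plausible contributor to the energy savings described above.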

It is worth mentioning that after the emergence of DeepSeek, the focus of the artificial intelligence market has gradually shifted from "training" to "inference". At this conference, Huang Renxun specifically cited a reasoning model case to demonstrate Blackwell's superior computing performance: 40 times higher than Hopper. "I've said before that if Blackwell starts shipping on a large scale, you won't even be able to ship Hopper out." Huang Renxun also mentioned that Blackwell is in full production, and that in the second half of this year the NVIDIA Blackwell AI Factory will be upgraded again and transition seamlessly to Blackwell Ultra.

Blackwell Ultra will include the NVIDIA GB300 NVL72 rack-scale solution and the NVIDIA HGX B300 NVL16 system.

First, the NVIDIA GB300 NVL72 uses a fully liquid-cooled, rack-scale design that combines 72 NVIDIA Blackwell Ultra GPUs and 36 Arm-based NVIDIA Grace™ CPUs into a single platform optimized for test-time scaling inference. The GB300 NVL72 delivers 1.5x the AI performance of the previous-generation NVIDIA GB200 NVL72, and it can explore multiple solutions and break complex tasks down into multiple steps to generate higher-quality responses.

Second, the NVIDIA HGX B300 NVL16 offers a breakthrough in efficiently handling complex tasks such as AI reasoning. Compared with Hopper, it delivers 11 times faster inference on large language models, 7 times more computing power, and 4 times more memory capacity.

In summary, Blackwell Ultra enhances training and test-time scaling inference, providing strong support for applications such as accelerated AI reasoning, AI Agents, and Physical AI.

In this regard, Huang Renxun said: "AI technology has made a huge leap forward, and the demand for computing performance for reasoning and AI Agents has increased significantly. To this end, we designed Blackwell Ultra - it is a multi-functional platform that can efficiently perform pre-training, post-training, and reasoning tasks."

NVIDIA's Next-Generation GPU Architecture Vera Rubin

NVIDIA has been naming its architectures after scientists since 1998, and this time is no exception. NVIDIA's upcoming next-generation GPU architecture, Vera Rubin, is named after Vera Rubin, the American astronomer whose observations provided key evidence for the existence of dark matter.

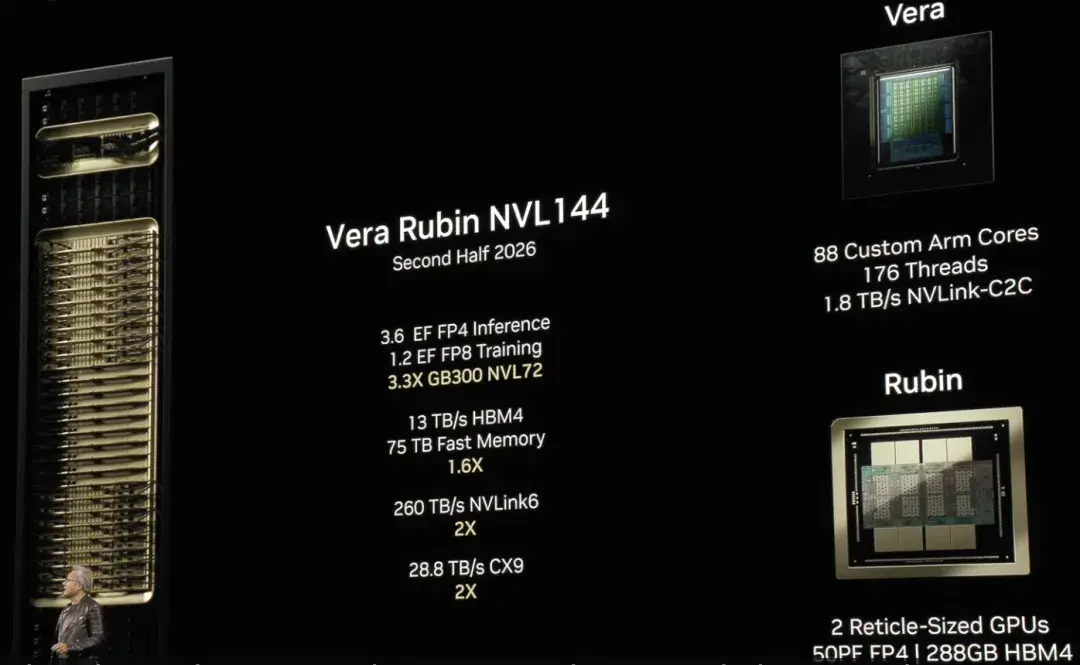

Vera Rubin deeply integrates NVIDIA's self-developed CPU and GPU architectures for the first time. This marks another breakthrough for NVIDIA in AI computing architecture, further expanding the boundaries of AI computing performance.

"Basically, everything is brand new except the chassis," Huang Renxun said. As NVIDIA's first fully self-designed CPU architecture, Vera is built on a customized Arm core. It is a small CPU that draws only 50 watts, yet offers larger memory and higher bandwidth. According to NVIDIA's official figures, Vera delivers twice the computing performance of Grace Blackwell. It is also deeply optimized for AI workloads, and instruction-set optimizations significantly reduce communication latency, making data processing more efficient and providing strong support for AI training and reasoning.

At the same time, the new Rubin GPU brings another leap forward in AI computing. When paired with Vera, Rubin can reach 50 petaflops of inference compute, more than twice that of the existing Blackwell GPU. Rubin also supports up to 288GB of high-speed memory, ensuring that AI training and inference can efficiently process huge amounts of data.

Huang Renxun also revealed that Vera Rubin NVL144 will be available in the second half of next year. In the second half of 2027, NVIDIA expects to launch Vera Rubin Ultra, which uses NVL576 technology and consists of 2.5 million components, with each rack drawing up to 600 kilowatts. Floating-point performance will increase 14-fold to 15 exaflops, achieving extreme scalability.
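The Vera Rubin Ultra figures above can be cross-checked with some simple arithmetic. The sketch below is a back-of-the-envelope calculation only. It assumes that the 15 exaflops and the 600 kilowatts both describe the same rack running at peak. The article does not name the system the 14-fold increase is measured against, so the baseline computed here is merely the value those two numbers imply.

```python
EXA = 10**18

# Figures quoted in the article for Vera Rubin Ultra (NVL576).
rubin_ultra_flops = 15 * EXA   # 15 exaflops
quoted_increase = 14           # "14 times" over an unnamed baseline
rack_power_watts = 600_000     # up to 600 kilowatts per rack

# Baseline performance implied by the quoted increase (assumption: simple ratio).
implied_baseline_flops = rubin_ultra_flops / quoted_increase

# Peak operations per second per watt, assuming both figures apply to one rack.
flops_per_watt = rubin_ultra_flops / rack_power_watts

print(f"Implied baseline: {implied_baseline_flops / EXA:.2f} exaflops")
print(f"Peak efficiency: {flops_per_watt / 1e12:.0f} teraflops per watt")
```

Under these assumptions, the implied baseline is a little over 1 exaflops, and the peak efficiency works out to about 25 teraflops per watt.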

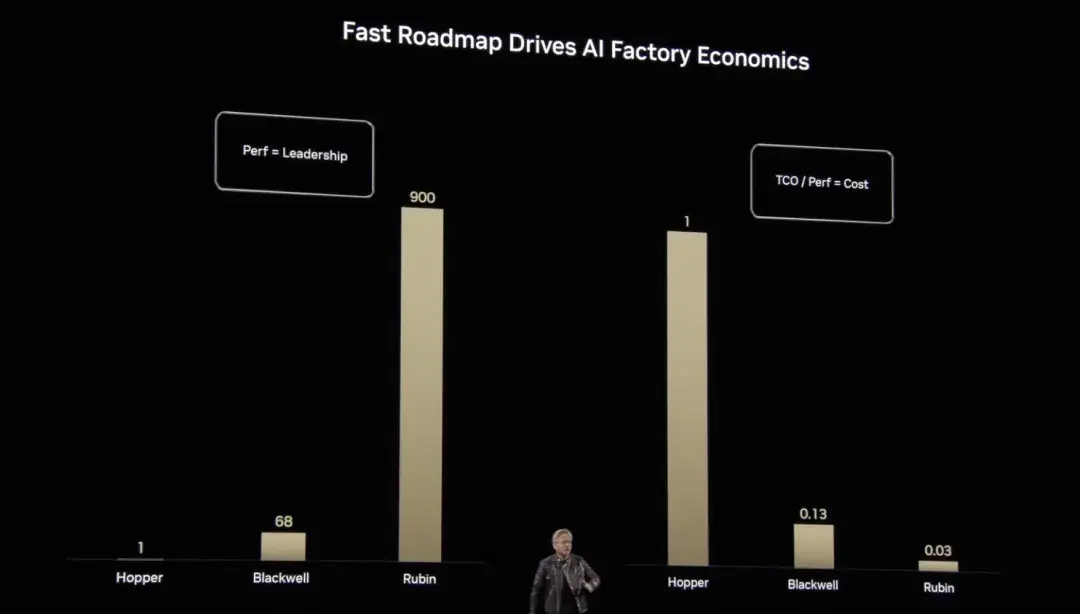

From Grace Hopper to Blackwell and now to Rubin, Huang Renxun has shown NVIDIA's leaps in computing performance and cost optimization. In scale-up floating-point performance, Hopper is the 1x baseline, Blackwell rises to 68 times that baseline, and Rubin jumps to 900 times, an exponential increase. This breakthrough not only significantly reduces the unit cost of AI computing, but also makes training and reasoning with larger, more complex AI models efficient and feasible.
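To make the generation-over-generation growth concrete, the quoted multiples can be turned into step-by-step ratios. This is a minimal sketch that uses only the three relative figures from the text. The function name `generation_steps` is introduced here for illustration.

```python
# Relative scale-up floating-point figures quoted in the article (Hopper = 1x).
relative_flops = {"Hopper": 1, "Blackwell": 68, "Rubin": 900}


def generation_steps(figures):
    """Return (previous, current, multiplier) for each consecutive generation."""
    names = list(figures)
    return [
        (prev, cur, figures[cur] / figures[prev])
        for prev, cur in zip(names, names[1:])
    ]


for prev, cur, factor in generation_steps(relative_flops):
    print(f"{prev} -> {cur}: {factor:.1f}x")
```

On these numbers, the step from Hopper to Blackwell is 68x, and the step from Blackwell to Rubin is roughly 13x.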

NVIDIA Will Provide End-to-End Services for Building AI Factories

In recent years, the focus of the AI field has gradually shifted from training large models to the widespread application of inference models. Inference has become the core driving force behind the rapid growth of the AI economy. This shift has not only changed the technology landscape, but also placed new demands on computing infrastructure. Traditional data centers were not designed for the new AI era. To advance AI inference and deployment efficiently, AI Factories came into being.

AI Factories not only store and process data, but also "produce intelligence" at scale, turning raw data into real-time insights. NVIDIA said: "Companies that invest in purpose-built AI Factories will take the lead in the future market."

To support this transformation, NVIDIA has created the building blocks of full-stack AI Factories and provides partners with the following key components: high-performance computing chips, advanced network technologies, infrastructure management and workload orchestration, the largest AI inference ecosystem, storage and data platforms, design and optimization blueprints, reference architectures, and flexible deployment methods.

There is no doubt that computing power is the core pillar of AI Factories. From the Hopper architecture to Blackwell, NVIDIA provides the world's most powerful accelerated computing. With the GB300 NVL72 rack-scale solution based on Blackwell Ultra, AI Factories can achieve up to 50 times the AI reasoning output while also gaining unprecedented performance for processing complex tasks.