GTC 2025: More Than Just Chips, Jensen Huang Unveiled Several New Achievements in Physical AI in Half an Hour, All Open Source

On March 18, Beijing time, NVIDIA GTC 2025, which has grown into an AI industry event, kicked off. Perhaps for lack of blockbuster products, the first day's in-person forums and exhibition did not reverse the decline in NVIDIA's stock price. As a result, the already closely watched keynote drew even more attention and expectation.

Admittedly, the fluctuation in NVIDIA's stock price stems not only from the speculation about "declining demand for computing power" triggered by DeepSeek, but also, to some degree, from the correction in the US stock market. Whatever the reason, Jensen Huang, as the company's helmsman, needs to restore investor confidence as soon as possible. To that end, in his nearly 3-hour speech, he:

* Mentioned the scaling law many times;

* Joined the open source movement, releasing the NVIDIA Llama Nemotron reasoning models built on the Llama models;

* Announced that Blackwell is in full production, adding that "the production capacity is ramping up at an amazing speed, and customer demand is also impressive";

* Explicitly emphasized that the amount of computation required in AI has increased significantly with the emergence of reasoning models;

* …

But Huang's passionate speech seemed to have little effect. As of press time, NVIDIA's stock price is still trending downward.

In a sense, this also shows that the hardware and architecture updates, which had already leaked, did not win much favor from the market. Beyond Blackwell Ultra and Vera Rubin, the Physical AI that Jensen Huang presented in the last half hour may be another "good story" worth telling investors and the industry in depth.

A Burst of New Physical AI Achievements in Half an Hour

At CES 2025 in early January, NVIDIA released the world foundation model Cosmos, and Jensen Huang declared that "the next frontier of AI is physics". This pushed Physical AI onto the main stage of AI. Physical AI refers to enabling autonomous systems such as robots, self-driving cars and smart spaces to perceive, understand and perform complex operations in the real world.

Back to the early morning of March 19: at the end of the keynote, the small robot Blue appeared on stage, pushing the atmosphere to a climax amid continuous cheers. The interaction between Blue and Huang also sketched the contours of Physical AI for us.

Blue and Jensen Huang on stage

In the final stretch of less than half an hour, Jensen Huang announced a rapid series of major releases.

The first is an open source Physical AI dataset. It provides developers with 15TB of data, including thousands of hours of multi-camera video, more than 320,000 trajectories for robot training, and up to 1,000 Universal Scene Description (OpenUSD) assets, and it stands out for its diversity, scale, and geographic coverage. Developers can use it to identify outliers and evaluate model generalization performance, which will be particularly valuable for safety research. NVIDIA says the dataset will continue to expand over time and may become the world's largest unified open source Physical AI dataset.

* Dataset download address:

Next is NVIDIA Isaac GR00T N1, described as the world's first open source, fully customizable foundation model for humanoid robot reasoning and skills. GR00T N1 adopts a dual-system architecture inspired by the principles of human cognition. "System 1" is a fast-thinking action model that mirrors human reflexes or intuition. "System 2" is a slow-thinking model used for deliberate, methodical decision-making.

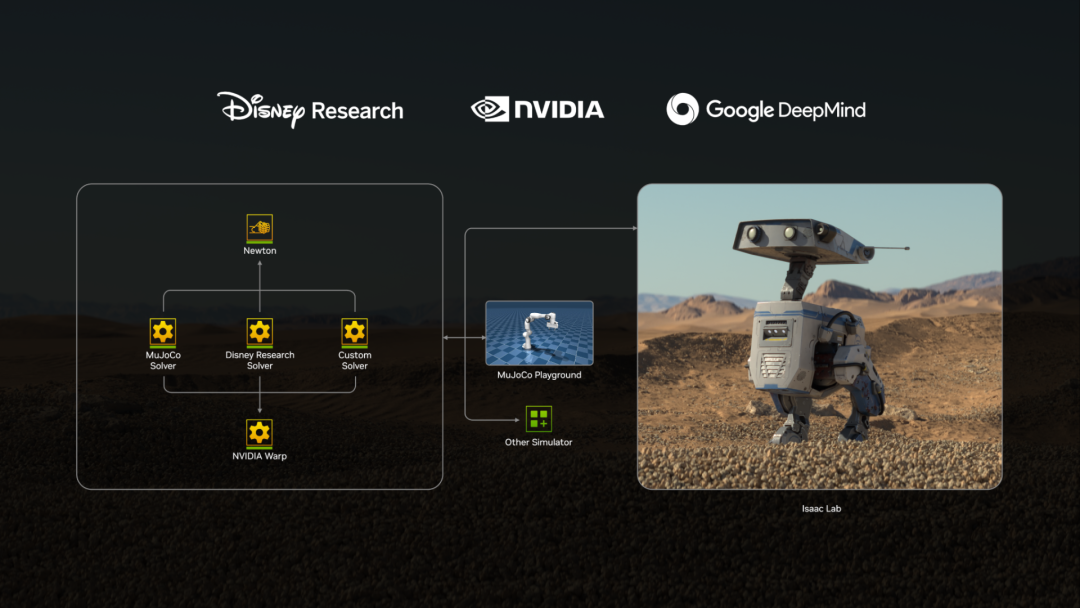
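To make the fast/slow split more concrete, here is a minimal toy sketch in Python. It is not GR00T N1's implementation or API: the names `Plan`, `system2_plan`, `system1_act` and `run`, the 1-D "robot", and the replanning interval are all hypothetical, chosen only to illustrate a slow planner that occasionally sets a goal and a fast controller that acts on every tick.

```python
from dataclasses import dataclass


@dataclass
class Plan:
    target: float  # hypothetical 1-D goal position chosen by the slow planner


def system2_plan(instruction: str, observation: float) -> Plan:
    """Slow, deliberate step: interpret the instruction and pick a goal.

    A toy rule stands in for a reasoning model here (assumption).
    """
    target = 10.0 if "forward" in instruction else 0.0
    return Plan(target=target)


def system1_act(plan: Plan, observation: float, gain: float = 0.5) -> float:
    """Fast, reflex-like step: proportional move toward the current goal."""
    return gain * (plan.target - observation)


def run(instruction: str, steps: int = 20, replan_every: int = 5) -> float:
    """Run the toy loop: System 2 replans rarely, System 1 acts every tick."""
    position = 0.0
    plan = system2_plan(instruction, position)
    for t in range(steps):
        if t % replan_every == 0:
            plan = system2_plan(instruction, position)  # low-frequency planning
        position += system1_act(plan, position)  # high-frequency action
    return position


if __name__ == "__main__":
    print(run("move forward"))
```

The point of the sketch is only the division of labor: the planner is called once every few ticks, while the controller runs on each tick using whatever plan is current.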

The third is Newton, an open source physics engine for robot simulation. It is being jointly developed by NVIDIA, Google DeepMind and Disney Research and is built on NVIDIA Warp, a CUDA-X acceleration library that gives developers an easy way to write GPU-accelerated kernel programs for simulation, artificial intelligence, robotics and machine learning (ML).

Finally, the Cosmos world foundation models were updated, introducing an open source, fully customizable reasoning model designed specifically for Physical AI development. Cosmos Transfer generates synthetic data: it takes structured video inputs such as segmentation maps, depth maps, lidar scans, pose estimation maps, and trajectory maps, and produces controllable, realistic video outputs. Cosmos Predict handles intelligent world generation and will support multi-frame generation, predicting intermediate actions or motion trajectories given a starting and an ending input image. Cosmos Reason is used to improve the efficiency of Physical AI data annotation and curation, to optimize existing world foundation models, or to build entirely new vision-language-action models.

From Accelerated Computing to Accelerated Science

Beyond Physical AI, NVIDIA continues to deepen its presence in AI for Science, which may become a new growth driver in the future.

NVIDIA's relationship with AI for Science can probably be traced back to the birth of CUDA, beginning with the powerful combination of accelerated computing and high-performance computing (HPC). Jensen Huang once said, "Since the creation of CUDA, NVIDIA has reduced the cost of computing by a factor of a million. For some, NVIDIA is a computational microscope that allows them to see the smallest things; for others, it is a telescope that allows them to explore unimaginably distant galaxies; and for many, it is a time machine that allows them to do their life's work while they still have time."

In other words, it is the ecosystem built around CUDA and NVIDIA GPUs that has greatly improved parallel computing capabilities and accelerated the use of high-performance computing in scientific research. Today, AI is injecting strong momentum into scientific research, driving the transition from Accelerated Computing to Accelerated Science. NVIDIA stands at the center of this technological shift and should move early to seize the opportunity.

In the GTC 2025 keynote that just ended, Jensen Huang announced that developers can now use CUDA-X with the latest superchip architectures to achieve tighter automatic integration and coordination between CPU and GPU resources. Compared with traditional accelerated computing architectures, their computational engineering tools run 11 times faster and have 5 times the computational capacity.

Huang concluded that CUDA-X is already bringing accelerated computing to a range of new engineering disciplines, including astronomy, particle physics, quantum physics, automotive, aerospace, and semiconductor design.

At GTC 2018, NVIDIA unveiled NVIDIA Clara, its first AI platform for the medical industry. Initially focused on medical imaging, NVIDIA Clara provided GPU-based AI solutions to accelerate medical image data processing. In 2019, NVIDIA Clara expanded to include the genomics computing platform Clara Genomics, used for DNA/RNA sequence analysis and accelerated genetic data processing.

At the end of 2019, NVIDIA acquired Parabricks, a developer of gene sequencing software, and integrated its GPU-accelerated sequencing tools into the NVIDIA Clara platform. According to NVIDIA's official documentation, Parabricks can analyze a whole human genome at 30x coverage in 10 minutes, whereas other methods take 30 hours.
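As a quick check on what those two figures imply, the following Python snippet computes the speedup ratio. It uses only the numbers quoted above (10 minutes versus 30 hours); the function name `speedup` is an illustrative assumption, and the result is arithmetic on the article's figures rather than an independent benchmark.

```python
def speedup(baseline_minutes: float, accelerated_minutes: float) -> float:
    """Return how many times faster the accelerated run is than the baseline."""
    if accelerated_minutes <= 0:
        raise ValueError("accelerated_minutes must be positive")
    return baseline_minutes / accelerated_minutes


baseline = 30 * 60   # 30 hours quoted for other methods, in minutes
accelerated = 10     # 10 minutes quoted for Parabricks
ratio = speedup(baseline, accelerated)
print(f"{ratio:.0f}x")  # prints 180x
```

Taken at face value, the quoted figures correspond to a speedup of about 180 times.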

At GTC 2021, NVIDIA launched Clara Holoscan, a real-time AI computing platform dedicated to medical devices. The goal is to give medical equipment real-time AI computing capabilities, thereby making medical image analysis, surgical assistance, and telemedicine more intelligent.

At the GTC conference held in September 2022, NVIDIA further expanded Clara and released BioNeMo. By then, NVIDIA's blueprint for AI for Science had become clearer: from AI accelerated computing to generative AI, and from the initial medical imaging AI platform to deeper life science research. Specifically, BioNeMo is a framework for training and deploying large biomolecular language models at supercomputing scale. It includes 4 pre-trained language models:

* Protein LLM ESM-1: It processes amino acid sequences and generates representations that can be used to predict various protein properties and functions, improving scientists' ability to understand protein structure.

* OpenFold: An open source protein modeling tool.

* Generative chemistry model MegaMolBART: It can be used for reaction prediction, molecular optimization and new molecule generation.

* ProtT5: Developed by RostLab of Technical University of Munich in collaboration with NVIDIA, it extends the functionality of Meta AI’s ESM-1b and other protein LLMs to sequence generation.

Beyond medical and life science research, NVIDIA also released the climate digital twin cloud platform Earth-2 at GTC 2024. Using its newly launched generative AI model CorrDiff together with NVIDIA FourCastNet, the platform can simulate the global climate at 1-kilometer resolution.

Conclusion

GTC has long since grown from a "promotional event" for new products and technologies into an industry bellwether. At this year's conference, we saw Jensen Huang's expectations for next-generation chip architectures and products, as well as his active moves in robotics, autonomous driving, and scientific computing. It is clearly too early to expect the latter to quickly grow into NVIDIA's main source of revenue, but quickly reversing current market sentiment to clear the way for the former is also quite challenging. Let us see what other "signature moves" Huang comes up with next.