Online Tutorial | OpenManus and QwQ-32B Combine to Make the Reasoning Process Fully Transparent

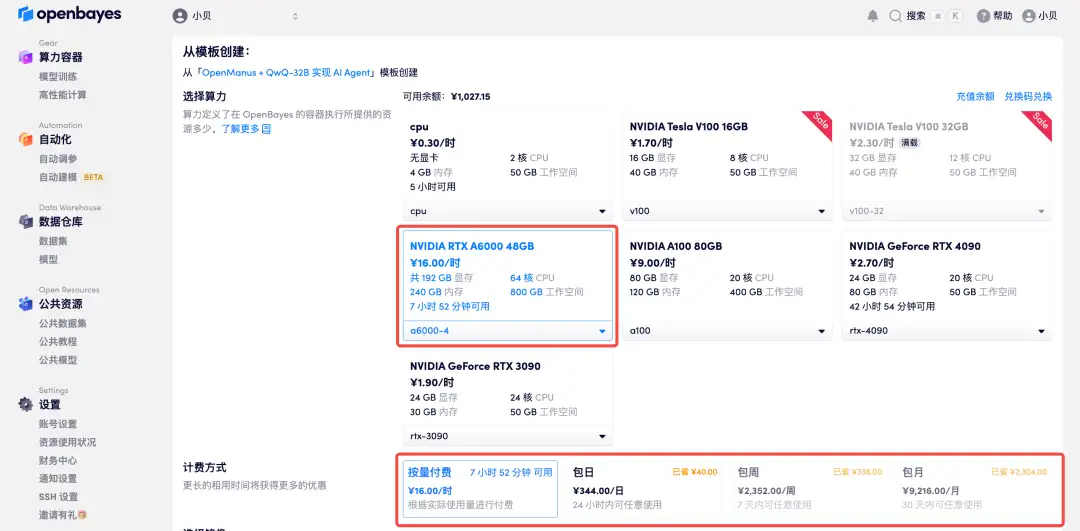

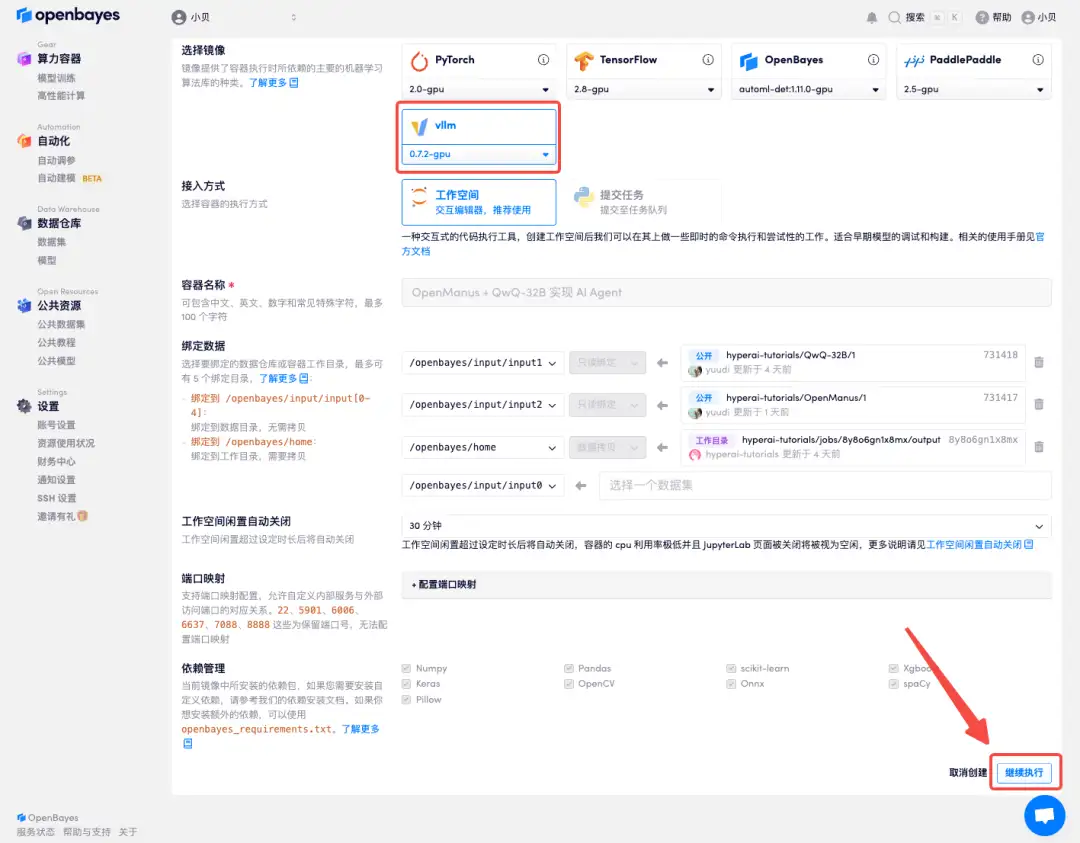

Yesterday evening (March 11), Manus announced on its official Weibo account that it had formally entered a strategic partnership with Alibaba's Tongyi Qianwen team. The two parties will work to implement all of Manus's features on domestic models and computing platforms, building on the open-source Tongyi Qianwen model series. Since the announcement, expectations for this "powerhouse combination" have kept rising.

Let's rewind five days to the early morning of March 6, when Alibaba Cloud open-sourced Tongyi Qianwen QwQ-32B. With only 32B parameters, it performs comparably to the full 671B-parameter DeepSeek-R1. Manus, billed as the world's first general-purpose AI agent, was also officially unveiled. Unfortunately, Manus is not open source and uses an invitation-only internal beta. Invitation codes are hard to come by and have even been sold for as much as 100,000 yuan, so many eager users are left waiting without a chance to try it.

Fortunately, a five-person team from MetaGPT moved quickly and released OpenManus, an open-source replica, in just 3 hours. Within a few days, the project had earned more than 29k stars on GitHub.

It doesn't just think; it also delivers results. As an open-source version of Manus, OpenManus's core strength is its ability to think independently, plan precisely, and execute complex tasks efficiently, delivering complete results directly. Thanks to its modular agent system, developers can freely combine functional modules to build an AI assistant tailored to their needs. When a task comes in, agents with different roles work closely together, from understanding the requirements and drafting a plan to carrying out concrete actions, and the whole process is open and transparent. How the model reasons about the problem and plans its execution steps is reported back to the user in real time, so people can step in promptly, which helps the model complete the task with higher quality.

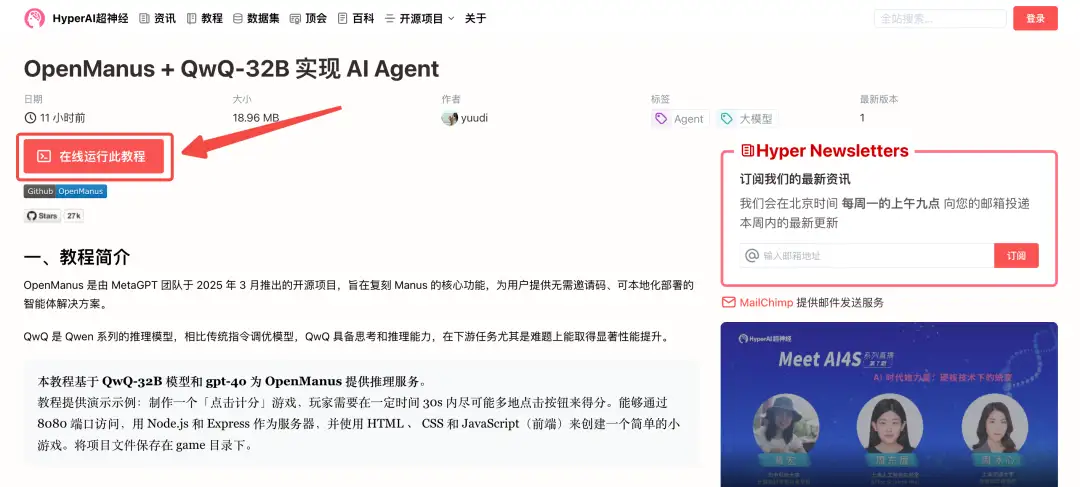

To let more people try OpenManus as soon as possible, the HyperAI website has launched the "OpenManus + QwQ-32B to implement AI Agent" tutorial. No invitation code is required to unlock full AI productivity!

Tutorial address:

More importantly, we have also prepared a bonus for everyone: 1 free hour on 4 RTX A6000 GPUs (valid for 1 month). New users can claim it by registering with the invitation code "OpenManus666". Only 10 spots are available, first come, first served!

Registration address:

https://openbayes.com/console/signup

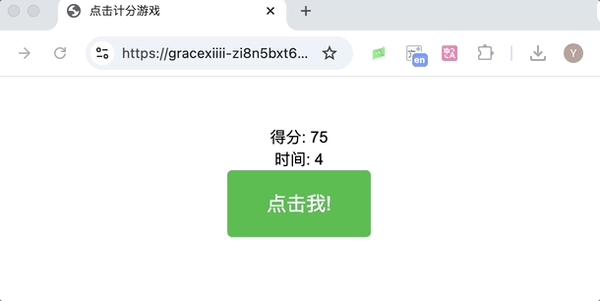

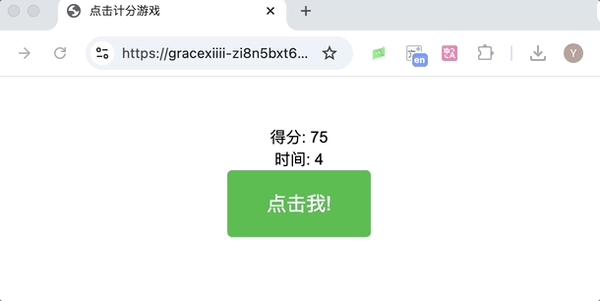

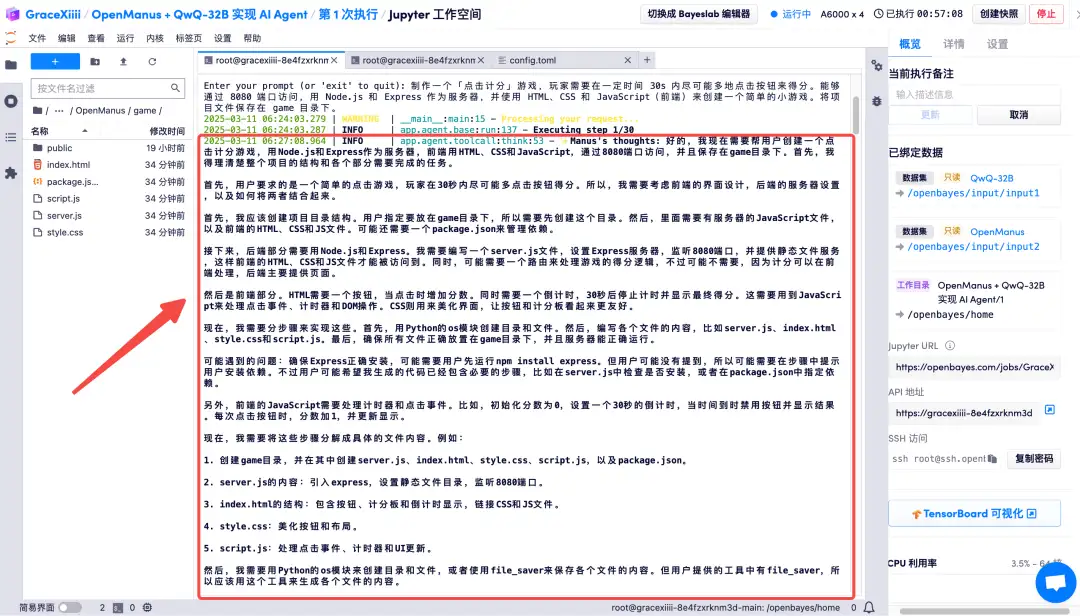

I used it to make a game called "Test Hand Speed - Click to Score". OpenManus's reasoning path is clearly visible throughout the task, and at the end I could launch the game directly from the API address. Let's see how many points I can get~

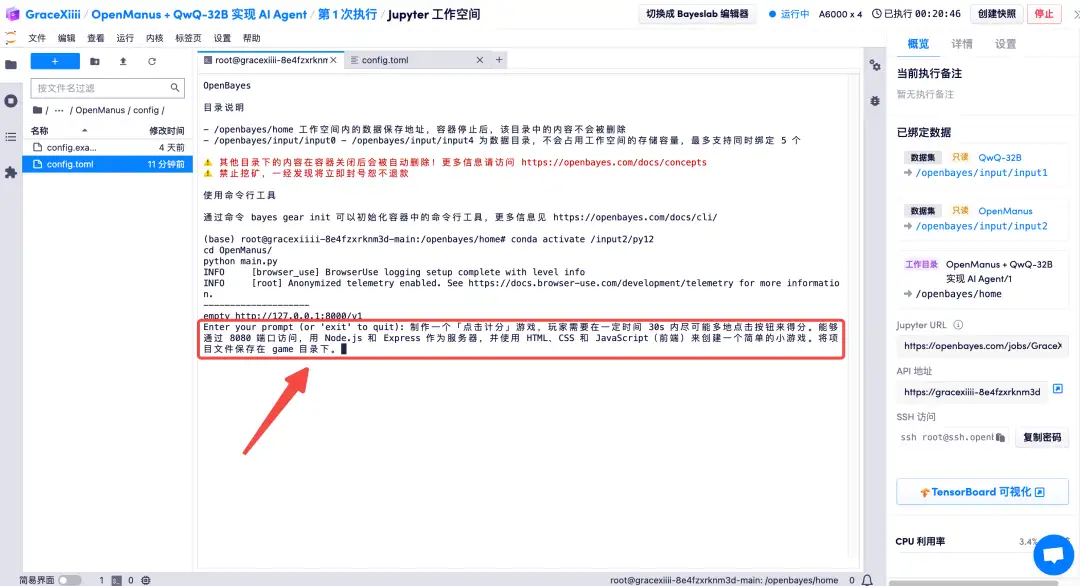

*prompt: Make a "click to score" game in which players click as many buttons as possible within 30 seconds to score points. Make it accessible through port 8080, use Node.js and Express for the server, and use HTML, CSS, and JavaScript for the front end to create a simple game. Save the project files in the game directory.
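At its core, this prompt describes a timing rule: only clicks inside a fixed 30-second window count. The Python sketch below is purely illustrative and is not the code OpenManus generated, which is a Node.js/JavaScript project. The function name count_score and the idea of modeling each click as a timestamp in seconds are assumptions made for the illustration.

```python
"""Illustrative model of the "click to score" rule described in the prompt.

Hypothetical sketch, not the generated game code: each click is a timestamp
in seconds, and each click that falls inside the round's window earns one point.
"""

ROUND_SECONDS = 30.0  # round length taken from the prompt


def count_score(click_times: list[float], start: float,
                duration: float = ROUND_SECONDS) -> int:
    """Count clicks with start <= t < start + duration (one point per click)."""
    end = start + duration
    return sum(1 for t in click_times if start <= t < end)
```

For example, count_score([0.5, 12.0, 29.9, 30.0, 31.2], start=0.0) returns 3. The clicks at 30.0 and 31.2 land after the round has ended. A half-open window is used so that a click exactly at the 30-second mark does not count.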

Demo Run

1. Log in to hyper.ai, select "OpenManus + QwQ-32B to implement AI Agent" on the Tutorial page, and click "Run this tutorial online".

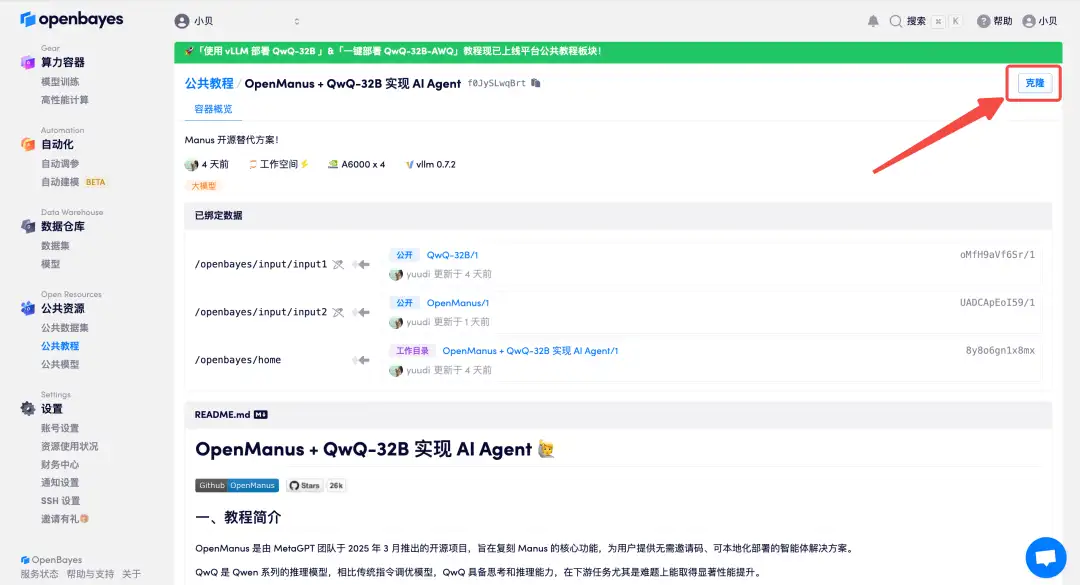

2. After the page jumps, click "Clone" in the upper right corner to clone the tutorial into your own container.

3. Select the "NVIDIA A6000-4" resource and the "vllm" image, then click "Continue". The OpenBayes platform now offers a new billing method: you can choose "pay as you go" or "daily/weekly/monthly" plans to suit your needs. New users who register through the invitation link below get 4 hours of free RTX 4090 time plus 5 hours of free CPU time!

HyperAI exclusive invitation link (copy and open in browser):

https://openbayes.com/console/signup?r=Ada0322_NR0n

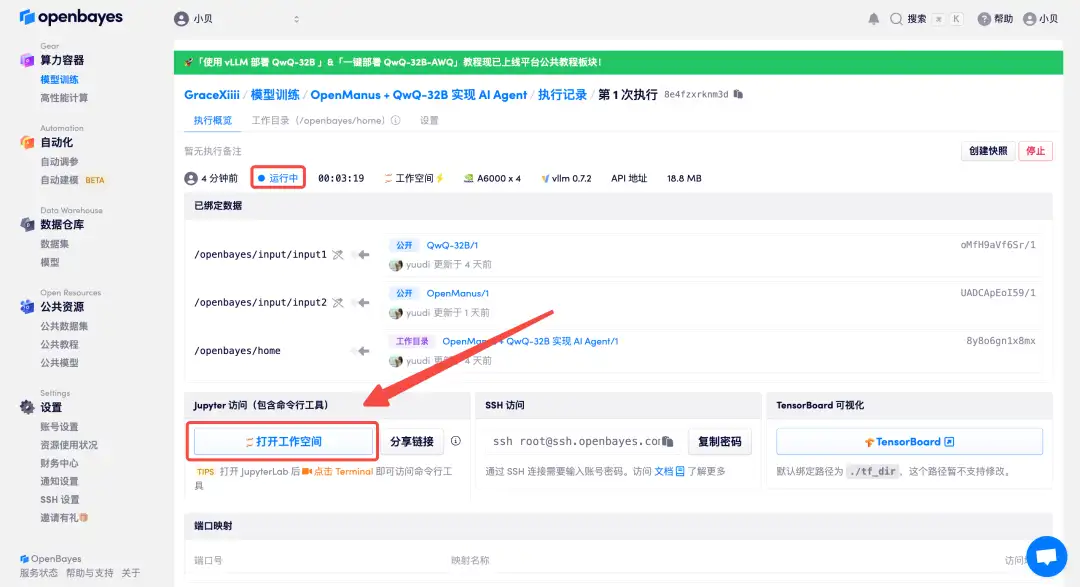

4. Wait for resources to be allocated. The first clone will take about 1 minute. When the status changes to "Running", click "Open Workspace".

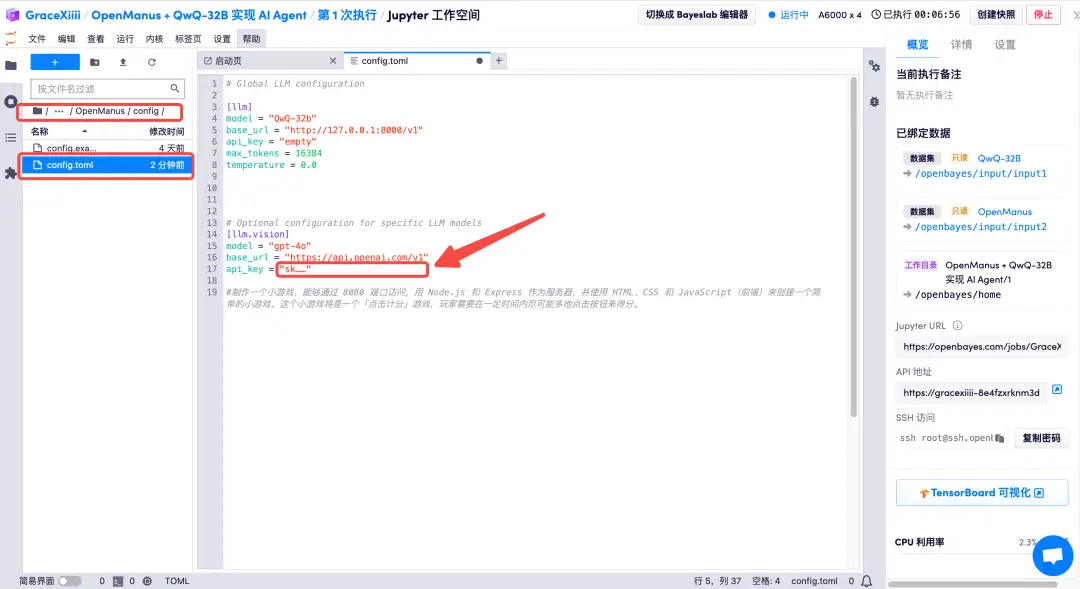

5. Open the OpenManus/config/config.toml file and add the API key for the vision model. After editing, press "Control+S" to save the file on this page:

This demonstration uses OpenAI's GPT-4o model, and you can configure a different model instead. A version of this tutorial with a built-in vision LLM will be released later to replace the [llm.vision] configuration shown below.

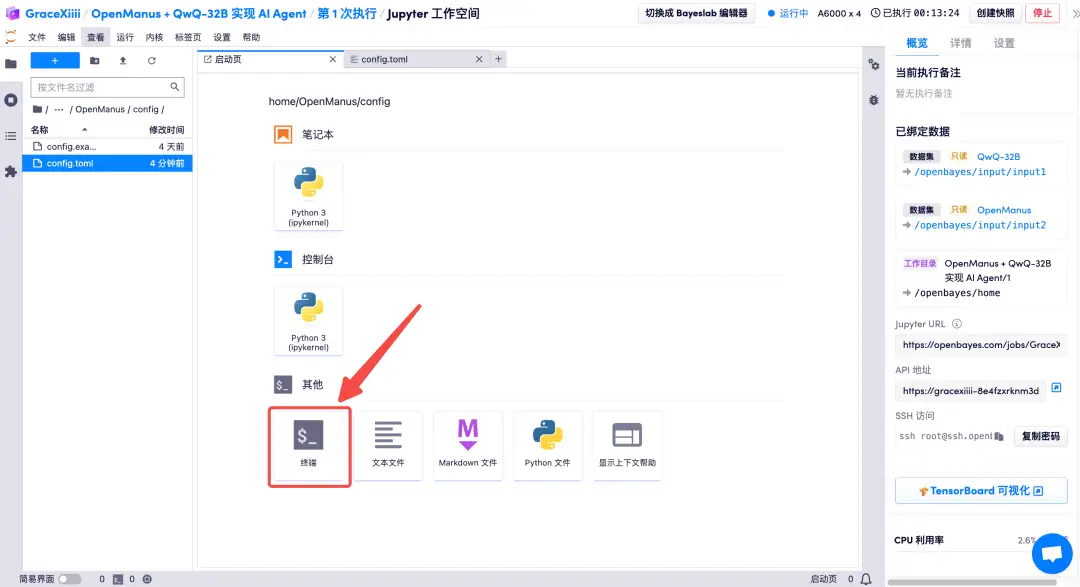

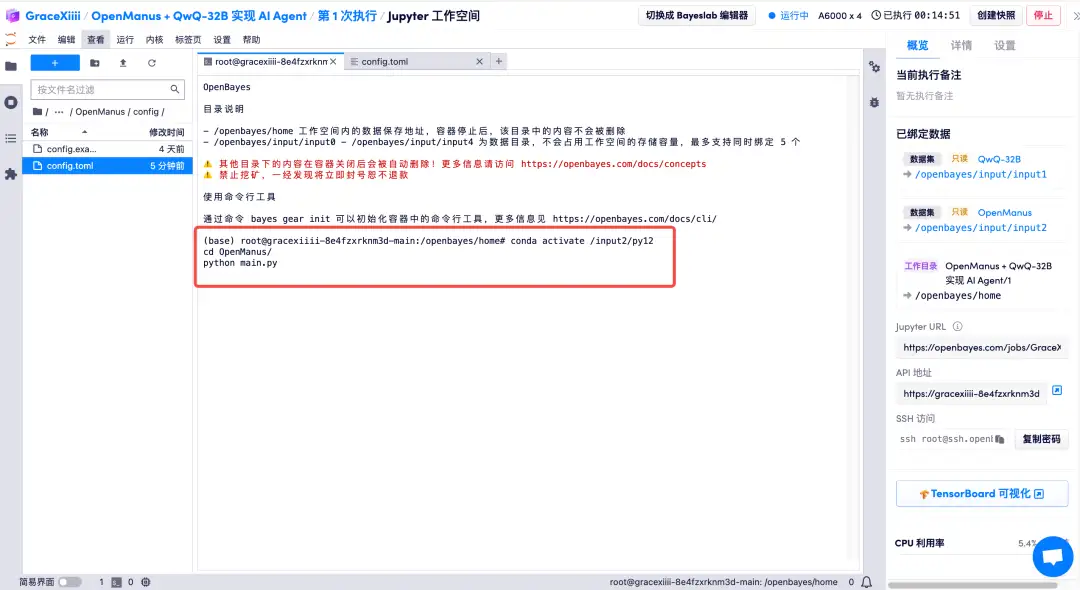

6. Open a new terminal and run the following commands to start OpenManus:

```bash
conda activate /input2/py12
cd OpenManus/
python main.py
```

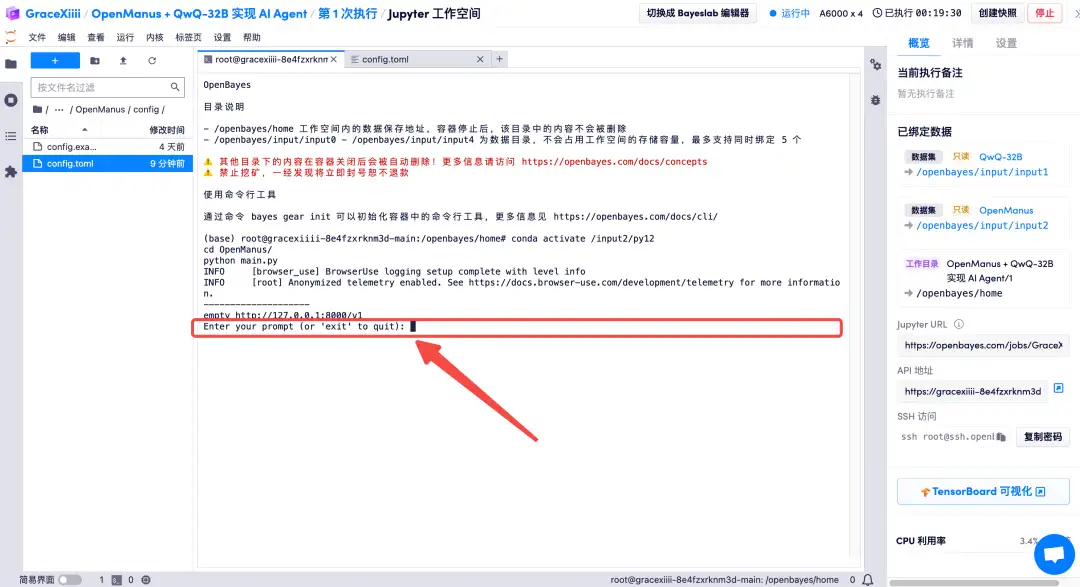

7. Enter your creative prompt via the terminal.

Results

1. Here is a demonstration of how to use OpenManus to create a "Test Hand Speed - Click to Score" game.

*prompt: Make a "click to score" game in which players click as many buttons as possible within 30 seconds to score points. Make it accessible through port 8080, use Node.js and Express for the server, and use HTML, CSS, and JavaScript for the front end to create a simple game. Save the project files in the game directory.

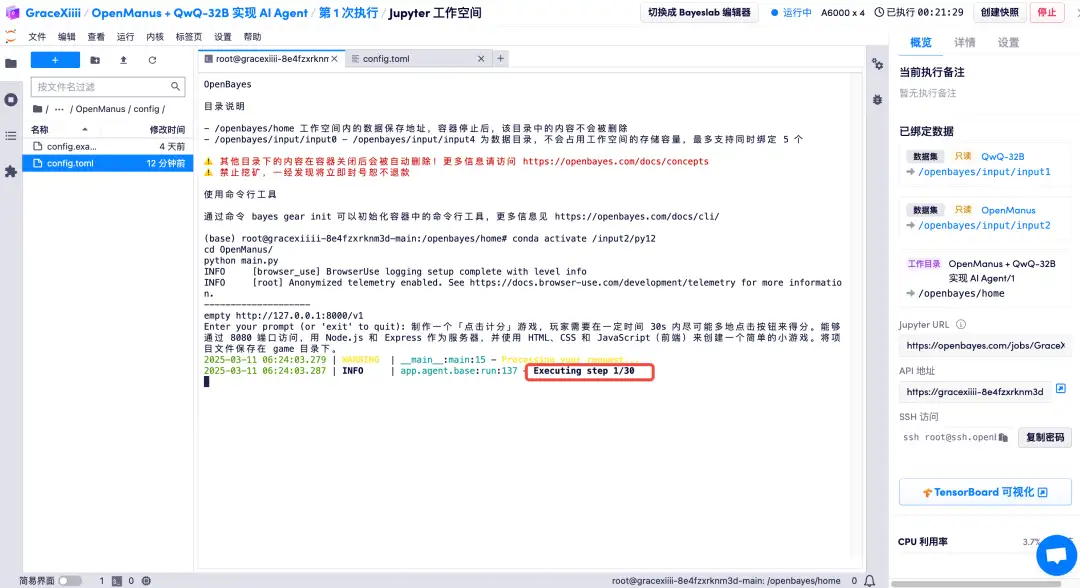

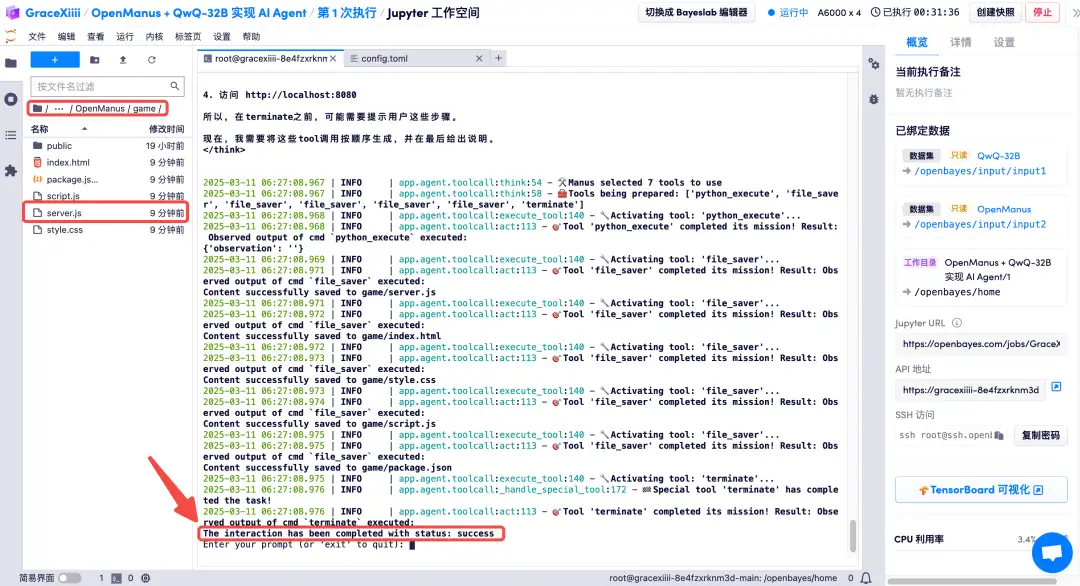

2. Wait for the step counter shown in the figure below (starting at step 1/30) to run through all 30 steps, which takes about 20 minutes. Once the terminal displays "The interaction has been completed with status: success", the complete project files are in the OpenManus/game directory. (By default, OpenManus saves the files it generates in the OpenManus folder.)

You can see OpenManus' thought process while making the game.

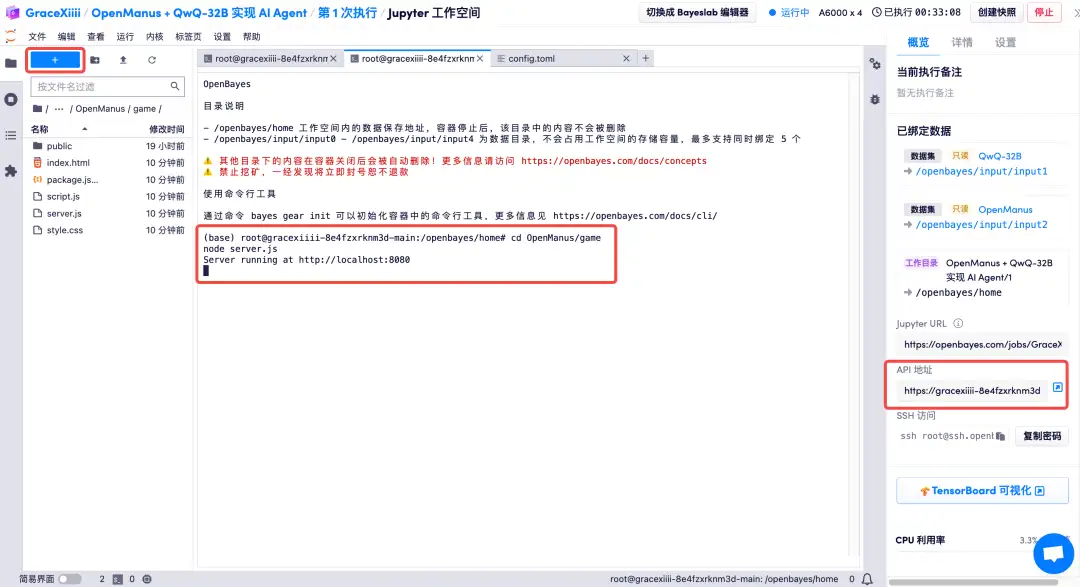

3. Once the project has been generated, open a new terminal and run the following commands to start the game:

```bash
cd OpenManus/game
node server.js
```

4. Click the API address on the right to run the "Test Hand Speed - Click to Score" game and see how many points you can get in 30 seconds~
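Two optional checks can make steps 2 to 4 less of a guessing game. The first lists what OpenManus actually wrote to OpenManus/game. The second polls port 8080 until node server.js is serving. The Python sketch below uses only the standard library, and both helper names are hypothetical. Apart from server.js, which step 3 runs, the generated file names are not guaranteed, because OpenManus chooses the project layout itself. The default URL assumes you run the check inside the same container as the server.

```python
"""Post-run checks for the generated game (hypothetical helpers).

summarize_project: list what OpenManus wrote to the game directory.
wait_for_http: poll the game server until it answers on port 8080.
"""
import time
import urllib.error
import urllib.request
from pathlib import Path


def summarize_project(game_dir: str = "OpenManus/game",
                      entry: str = "server.js") -> tuple[list[str], bool]:
    """Return (sorted relative file paths, whether the entry file exists).

    node_modules is skipped so the listing stays readable after `npm install`.
    """
    root = Path(game_dir)
    files = sorted(
        p.relative_to(root).as_posix()
        for p in root.rglob("*")
        if p.is_file() and "node_modules" not in p.relative_to(root).parts
    )
    return files, (root / entry).is_file()


def wait_for_http(url: str = "http://localhost:8080/", timeout: float = 30.0,
                  interval: float = 0.5) -> bool:
    """Return True once `url` answers with any HTTP response, False on timeout."""
    deadline = time.monotonic() + timeout
    while time.monotonic() < deadline:
        try:
            with urllib.request.urlopen(url, timeout=2.0):
                return True
        except urllib.error.HTTPError:
            return True  # the server answered, even if with a 4xx/5xx status
        except OSError:
            # URLError (e.g. connection refused) and socket timeouts are both OSError.
            time.sleep(interval)
    return False
```

For example, run summarize_project() from the directory that contains OpenManus, and run wait_for_http() after starting node server.js. If wait_for_http returns False after the timeout, the server may have failed to start, or it may be listening on a port other than 8080.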