Online Tutorial: A 3D Asset in 10 Seconds! Tencent's First Large 3D Generation Model Is Now Available

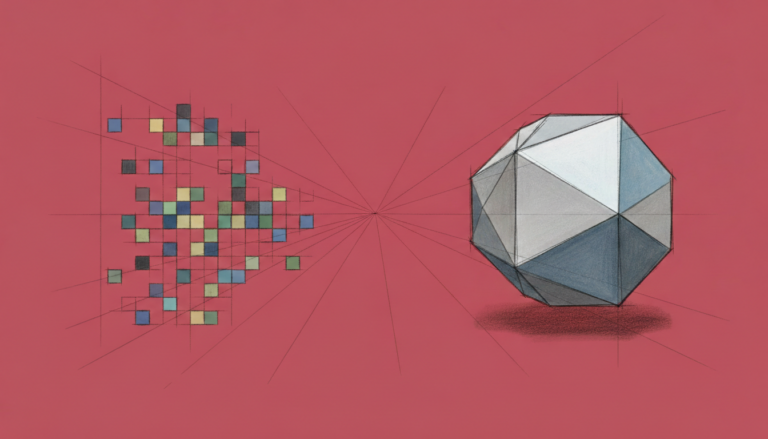

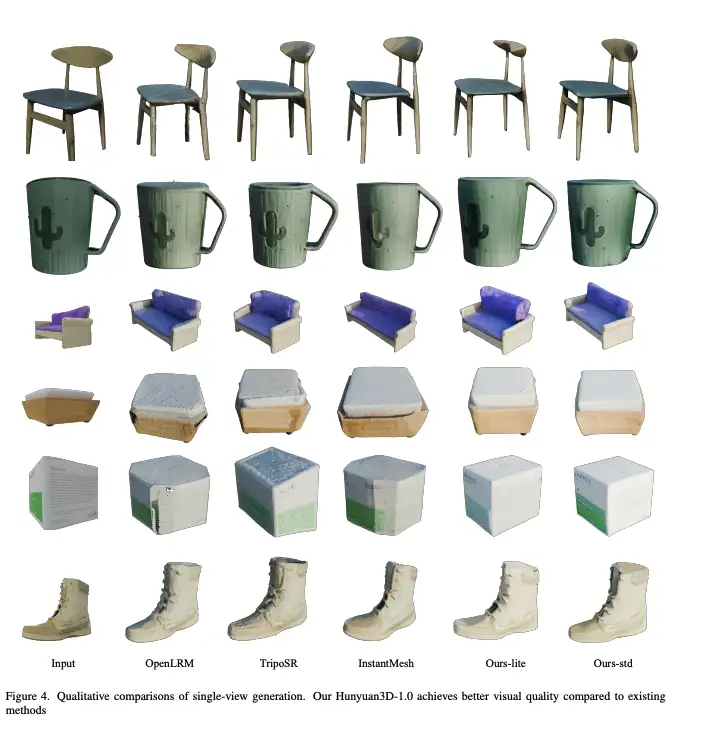

3D models play an important role in many industries and scenarios, such as game development, film and television animation, and virtual reality (VR), but existing 3D generative diffusion models still fall short in generation speed and generalization. To address these problems, Tencent's research team launched Hunyuan3D-1.0 and open-sourced both the lightweight and standard versions of the model. The framework tackles these challenges by combining multi-view generation with sparse-view reconstruction.

Hunyuan3D-1.0 strikes a balance between quality and efficiency. In terms of generation quality, it shows clear advantages in reconstructing shapes and textures: it accurately captures the overall 3D structure of an object and also renders fine details precisely. In terms of generation speed, the lightweight version of the model takes only about 10 seconds to generate a 3D asset from a single image, significantly improving the efficiency of 3D modeling.

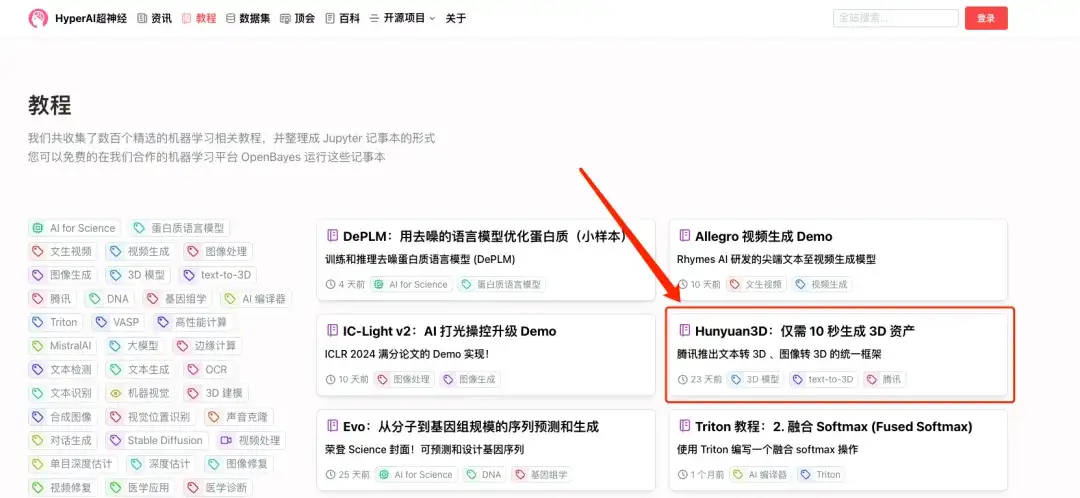

The "Hunyuan3D: Generate 3D assets in just 10 seconds" tutorial is now available in the HyperAI Tutorials section. It supports both text-to-3D and image-to-3D generation. Come and try it out!

Tutorial address:

Demo Run

1. Log in to hyper.ai. On the Tutorials page, select "Hunyuan3D: Generate 3D assets in just 10 seconds" and click "Run this tutorial online".

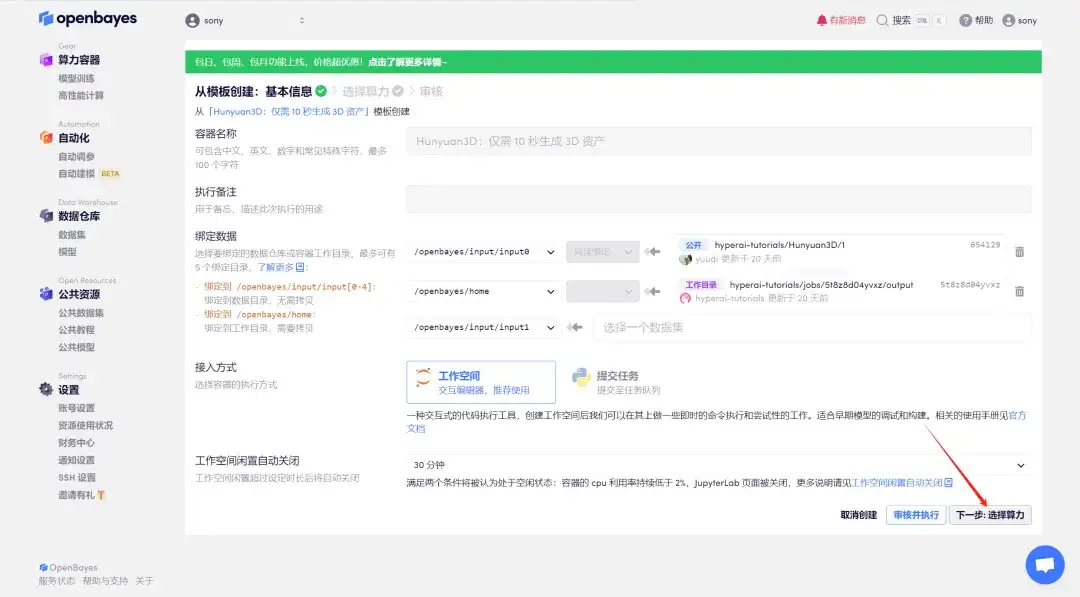

2. After the page redirects, click "Clone" in the upper right corner to clone the tutorial into your own container.

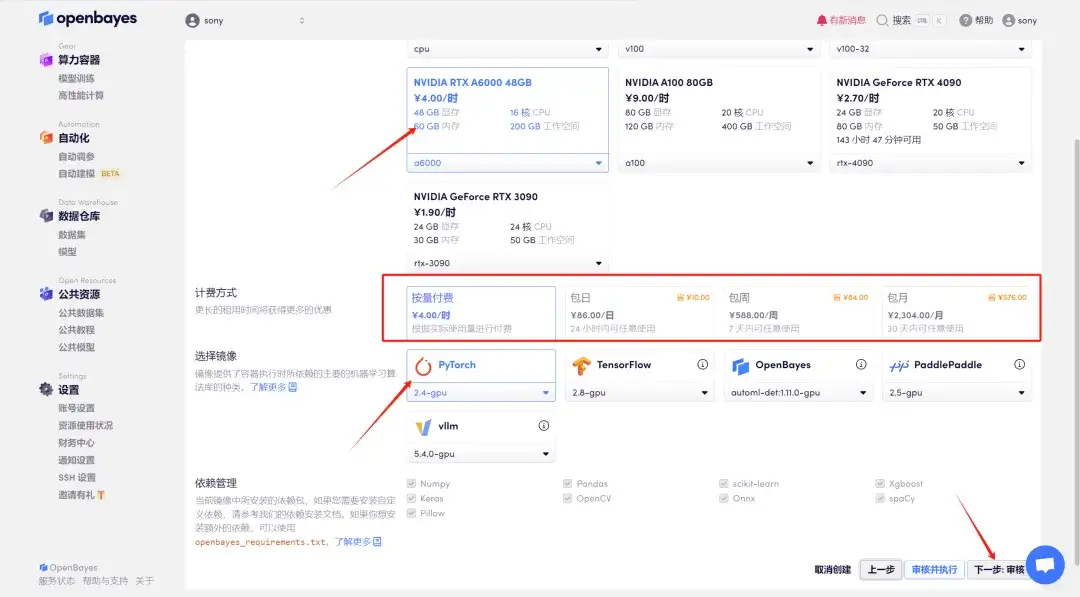

3. Click "Next: Select Hashrate" in the lower right corner.

4. After the page redirects, select "NVIDIA RTX A6000" and the "PyTorch" image. The OpenBayes platform has introduced new billing options: choose "pay as you go" or a "daily/weekly/monthly package" according to your needs. Then click "Next: Review". New users can register with the invitation link below to get 4 hours of RTX 4090 and 5 hours of CPU time for free!

HyperAI exclusive invitation link (copy and open in browser):

https://openbayes.com/console/signup?r=Ada0322_QZy7

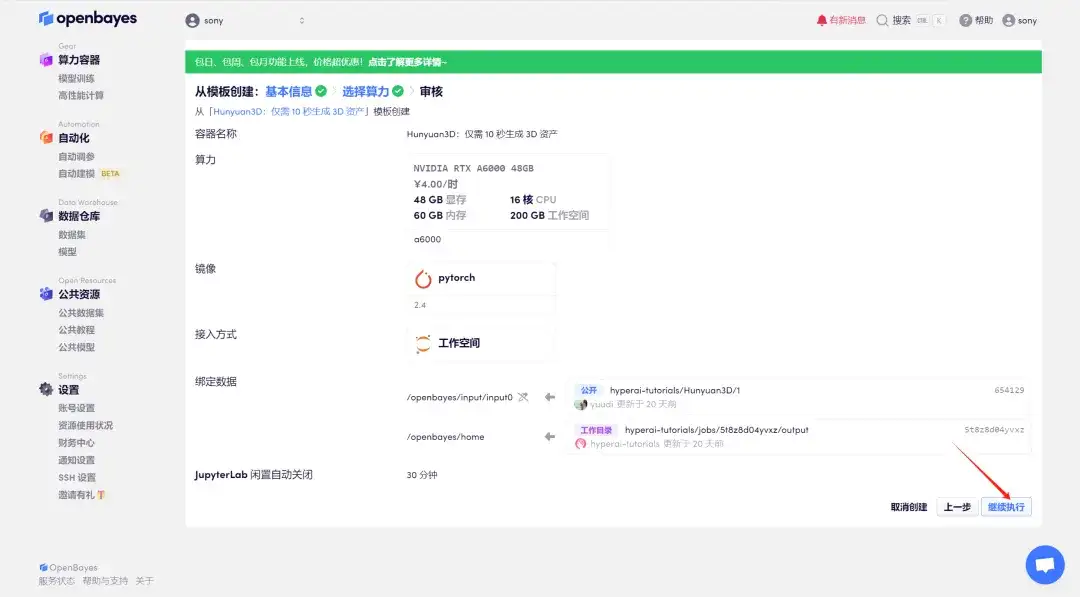

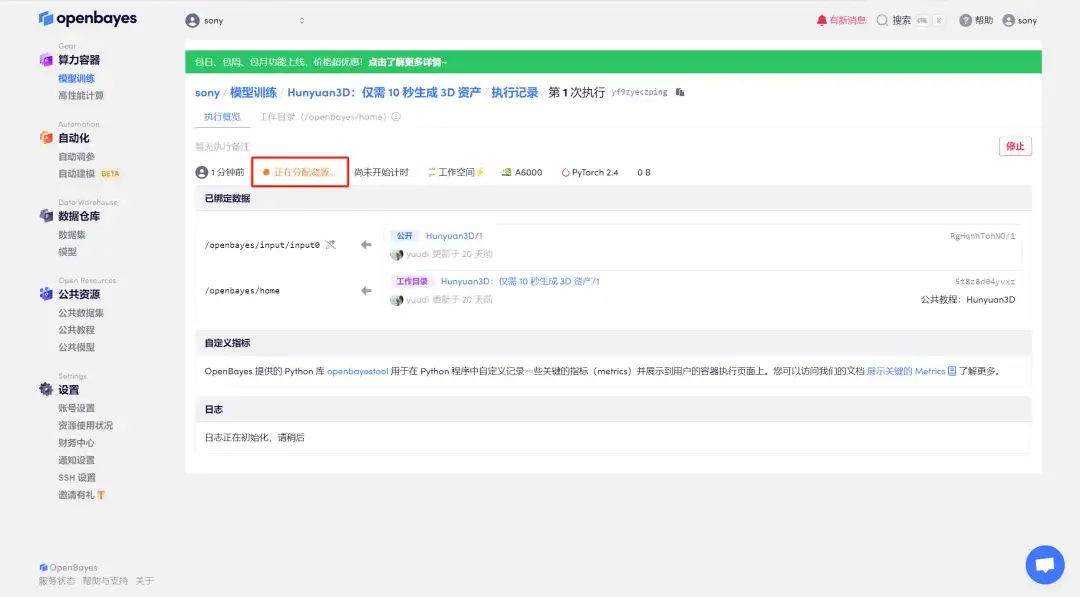

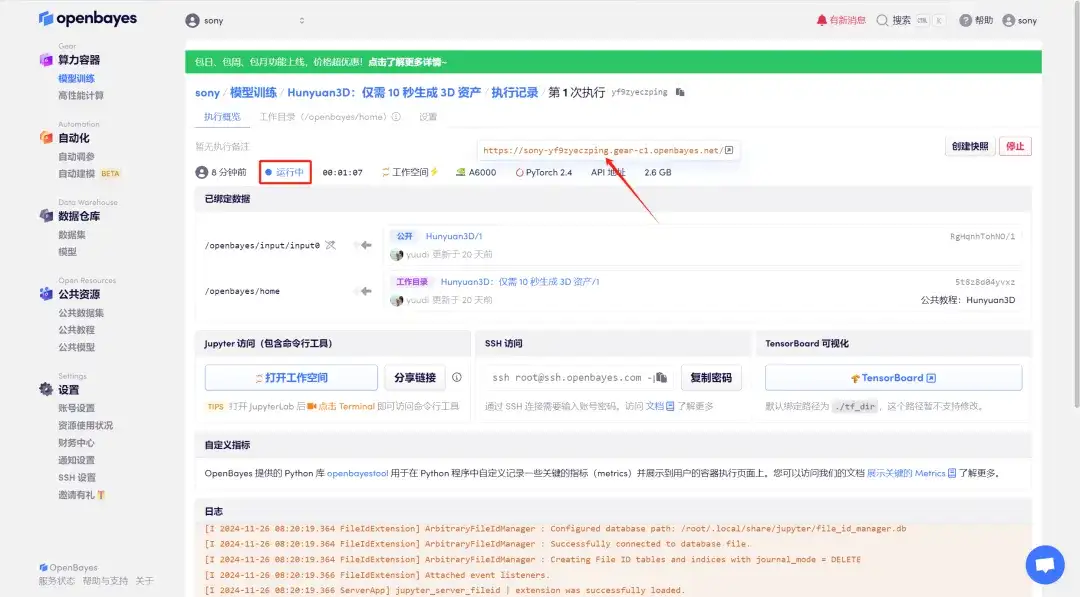

5. After confirming, click "Continue" and wait for resources to be allocated. The first clone takes about 2 minutes. When the status changes to "Running", click the arrow next to "API Address" to open the Demo page. Note that users must complete real-name authentication before they can access the API address.

Effect Demonstration

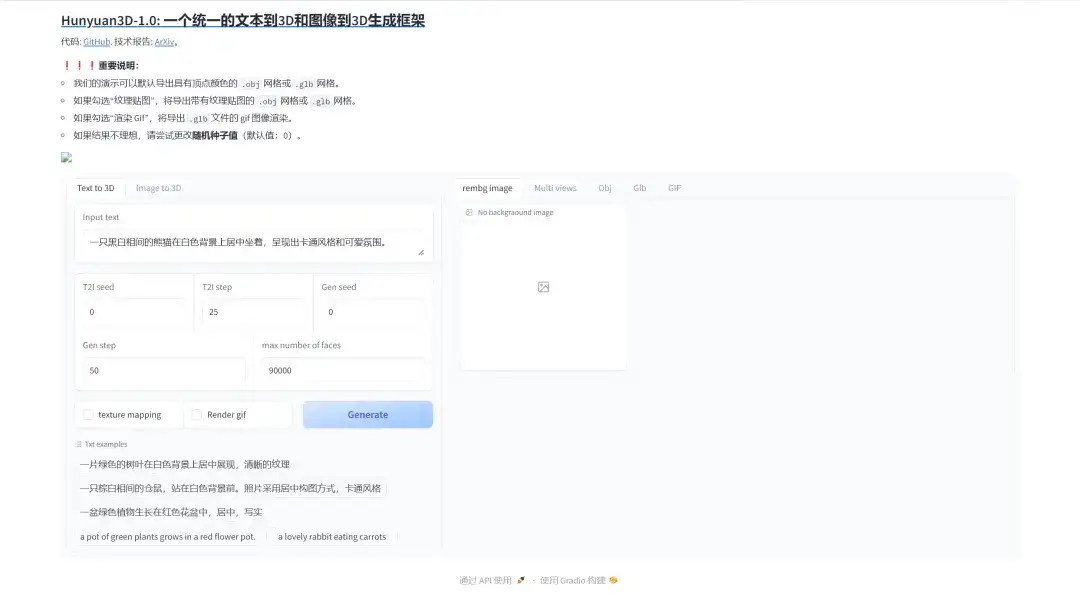

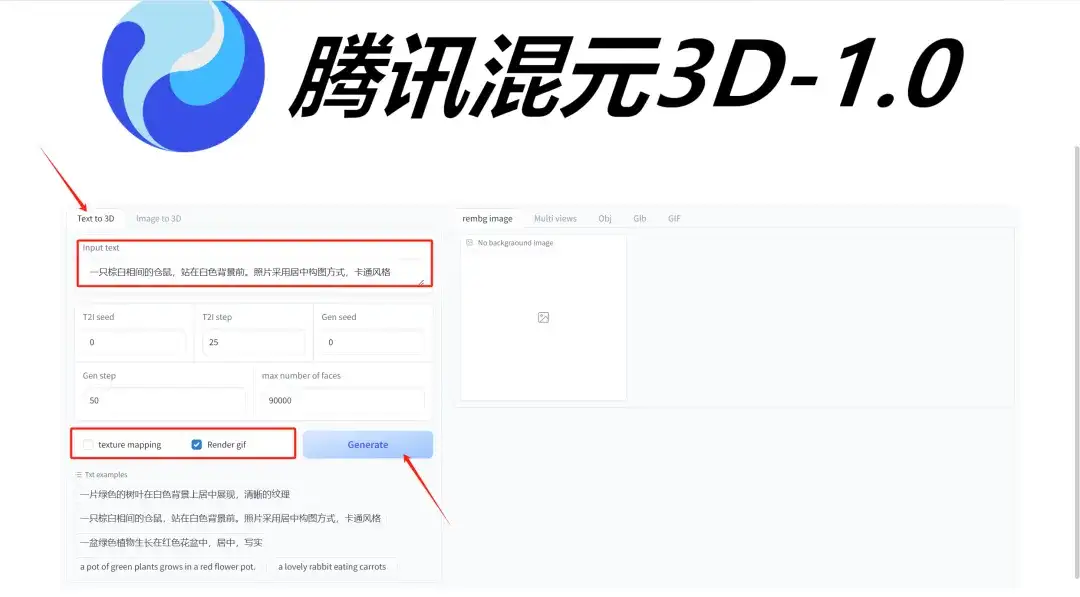

This tutorial uses the lightweight version of Hunyuan3D-1.0, which provides two functions: "Image to 3D" and "Text to 3D".

1. Text to 3D

Click "text to 3D" and enter a text prompt in "Input text" (for example: "a brown and white hamster standing in front of a white background. The photo is centered and cartoon-style"). To generate a GIF, select "Render gif". "Texture mapping" controls whether vertex shading is converted to texture shading. Click "Generate" to start.

The parameters are as follows:

* T2I seed: Random seed for text-to-image generation; the default is 0.

* T2I step: Number of sampling steps for text-to-image generation.

* Gen seed: Random seed for 3D generation.

* Gen step: Number of sampling steps for 3D generation.

* max number of faces: Upper limit on the number of faces in the 3D mesh.
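To keep track of which settings produced a given result, it can help to record them in one place. The following is a minimal sketch, not part of the tutorial or of Hunyuan3D itself: the class name `GenerationSettings`, its field names, and the validation rules are hypothetical, and only the default of 0 for the T2I seed comes from the list above.

```python
from dataclasses import dataclass, asdict


@dataclass(frozen=True)
class GenerationSettings:
    """Hypothetical container mirroring the demo's parameter controls."""

    t2i_step: int      # text-to-image sampling steps
    gen_step: int      # 3D generation sampling steps
    max_faces: int     # upper limit on the mesh face count
    gen_seed: int      # random seed for 3D generation
    t2i_seed: int = 0  # random seed for text-to-image; 0 is the stated default

    def __post_init__(self) -> None:
        # Assumed sanity checks; the demo may enforce different ranges.
        for name in ("t2i_step", "gen_step", "max_faces"):
            if getattr(self, name) <= 0:
                raise ValueError(f"{name} must be a positive integer")
        for name in ("t2i_seed", "gen_seed"):
            if getattr(self, name) < 0:
                raise ValueError(f"{name} must be non-negative")


# Placeholder values chosen only for illustration, not the demo's defaults.
settings = GenerationSettings(t2i_step=25, gen_step=50, max_faces=90000, gen_seed=0)
print(asdict(settings))
```

Saving such a record next to each exported asset makes it easier to rerun a generation with the same seeds and step counts later.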

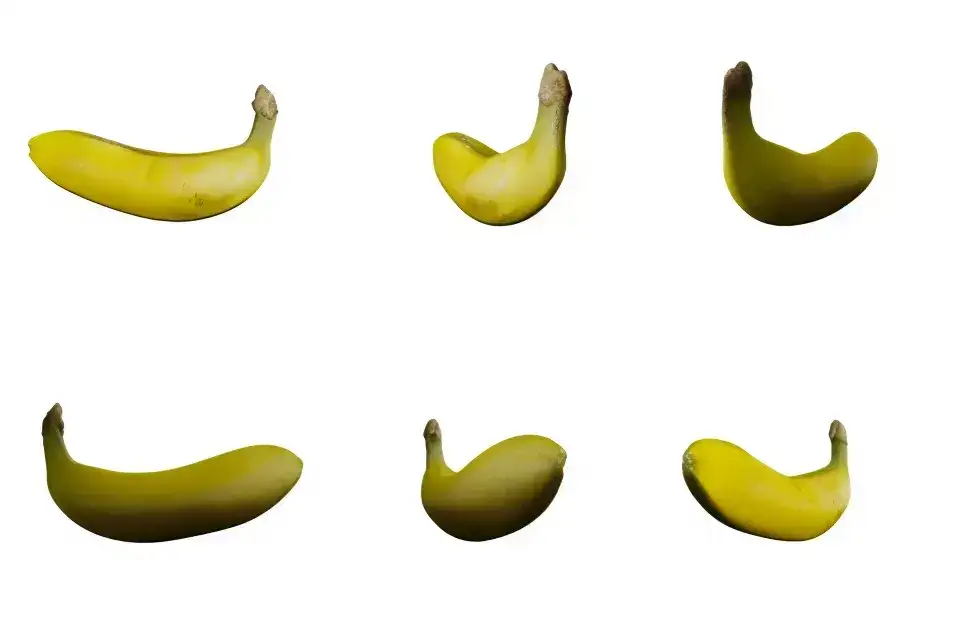

2. Image to 3D

Click "image to 3D" and upload an image in "Input image" (Note: when uploading your own image, make sure it is an n*n square; otherwise an error will occur). To generate a GIF, select "Render gif". "Texture mapping" controls whether vertex shading is converted to texture shading. Click "Generate" to start.
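If your own image is not square, one option is to pad it onto a square canvas before uploading. The sketch below is an illustration under stated assumptions rather than part of the tutorial: the function names are hypothetical, the white background is an arbitrary choice, and `pad_to_square` needs the third-party Pillow package (`pip install Pillow`).

```python
from typing import Tuple


def square_padding(width: int, height: int) -> Tuple[int, int, int]:
    """Return (side, x_offset, y_offset) for centering an image on a square canvas."""
    if width <= 0 or height <= 0:
        raise ValueError("width and height must be positive")
    side = max(width, height)
    return side, (side - width) // 2, (side - height) // 2


def pad_to_square(src_path: str, dst_path: str,
                  fill: Tuple[int, int, int, int] = (255, 255, 255, 255)) -> None:
    """Pad the image at src_path to a square and save it to dst_path (use a .png path)."""
    from PIL import Image  # imported here so square_padding works without Pillow

    with Image.open(src_path) as img:
        img = img.convert("RGBA")
        side, x, y = square_padding(*img.size)
        canvas = Image.new("RGBA", (side, side), fill)  # assumed white background
        canvas.paste(img, (x, y))
        canvas.save(dst_path)


# A 640x480 image needs a 640x640 canvas with 80 pixels of padding above and below.
print(square_padding(640, 480))
```

For example, `pad_to_square("hamster.jpg", "hamster_square.png")` would write a square PNG that can then be uploaded in "Input image"; both file names here are placeholders.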