Online Tutorial | YOLOv11 in Action! A Speedy and Accurate Object Detection Tool

The YOLO family has long been a classic choice for object detection. As a new generation of the series, YOLOv11 keeps the efficiency and real-time performance of its predecessors while significantly improving detection accuracy and adaptability to complex scenes, offering higher accuracy, faster speed, and smarter inference.

YOLOv11 supports multiple vision tasks: basic object detection and image classification, fine-grained instance segmentation, and pose estimation, which analyzes the movements of people or objects. It also performs well at oriented object detection, locating and identifying targets in an image accurately enough for more complex scenarios. In autonomous driving, for example, it can identify the vehicles and pedestrians ahead and also locate lane lines and traffic signs, helping to ensure driving safety.

The HyperAI Tutorials section now offers "One-click Deployment of YOLOv11". The environment is already set up, so you don't need to enter any commands. Just click Clone to start exploring what YOLOv11 can do!

Tutorial address:

https://go.hyper.ai/ycTq1

Demo Run

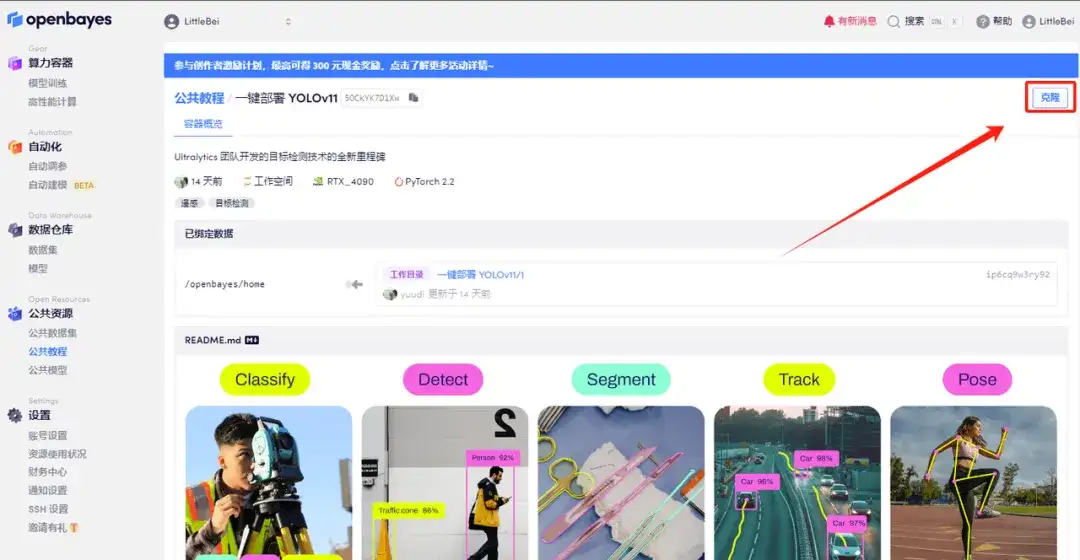

1. Log in to hyper.ai. On the Tutorials page, select "One-click Deployment of YOLOv11" and click "Run this tutorial online".

2. After the page jumps, click "Clone" in the upper right corner to clone the tutorial into your own container.

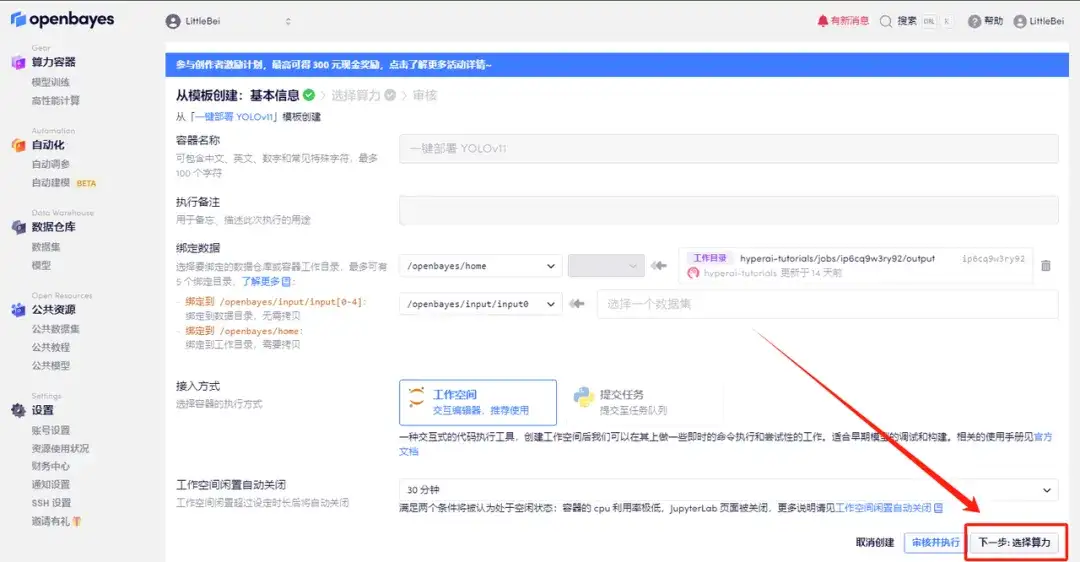

3. Click "Next: Select Hashrate" in the lower right corner.

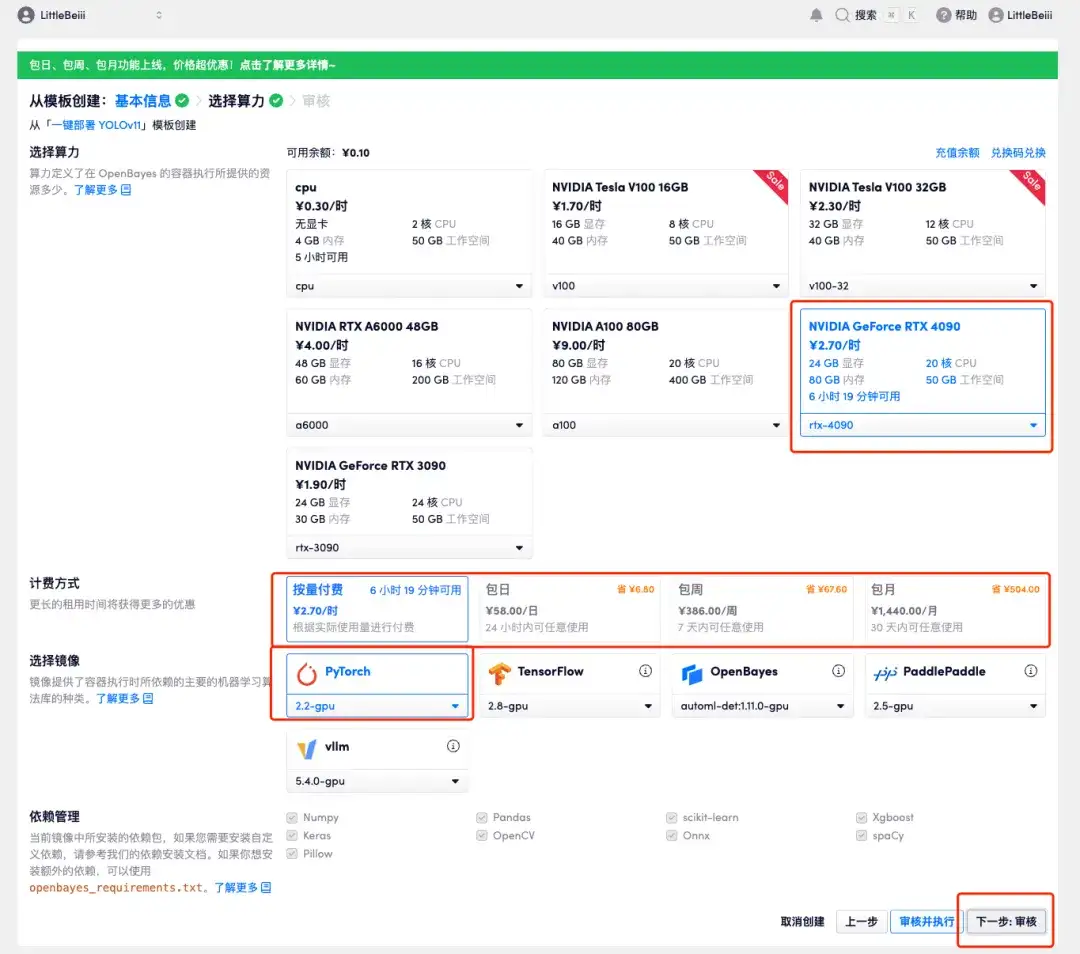

4. After the page jumps, select "NVIDIA RTX 4090" and the "PyTorch" image. Choose "pay as you go" or a "Daily/Weekly/Monthly" plan according to your needs, then click "Next: Review". New users can register with the invitation link below to get 4 hours of RTX 4090 time and 5 hours of CPU time for free!

HyperAI exclusive invitation link (copy and open in browser):

https://openbayes.com/console/signup?r=Ada0322_QZy7

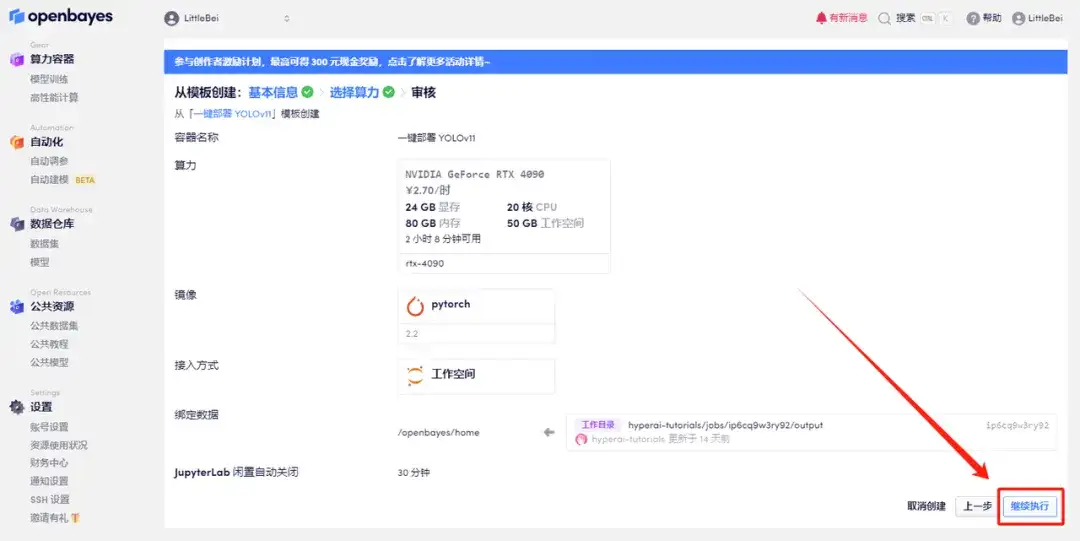

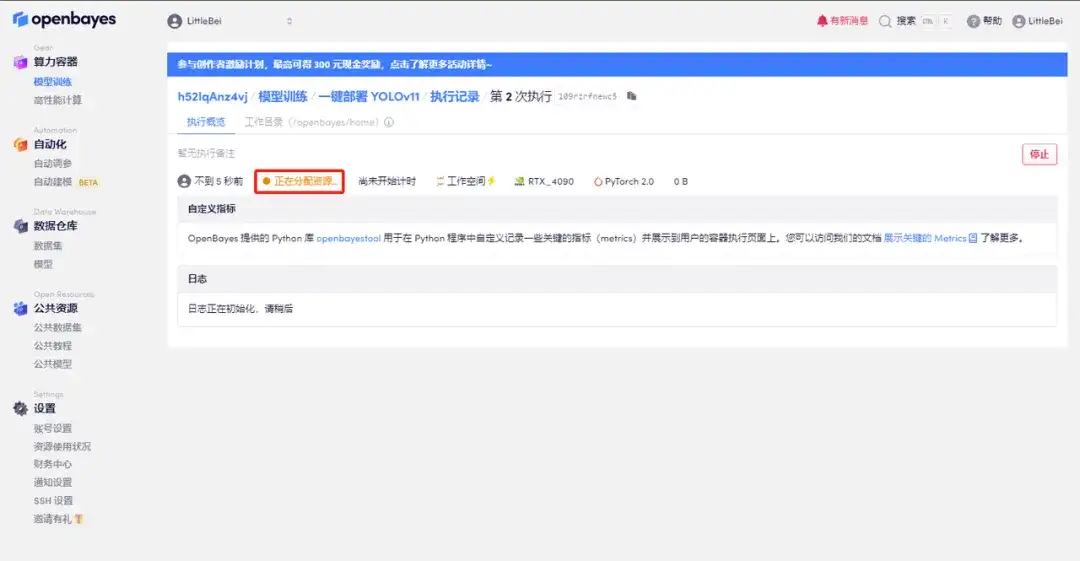

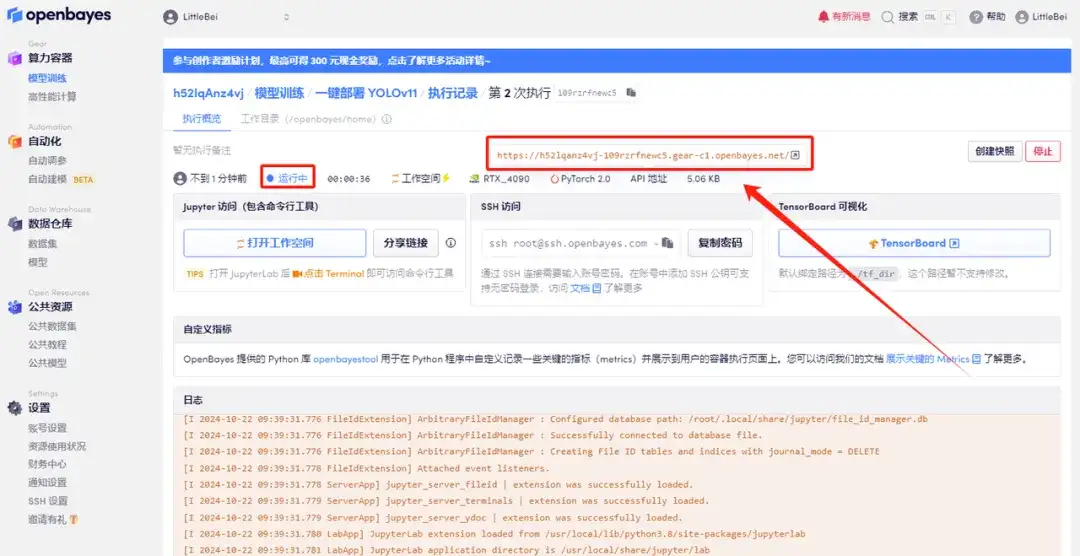

5. After confirming, click "Continue" and wait for resources to be allocated. The first clone takes about 2 minutes. When the status changes to "Running", click the jump arrow next to "API Address" to open the Demo page. Please note that users must complete real-name authentication before they can access the API address.

Effect Demonstration

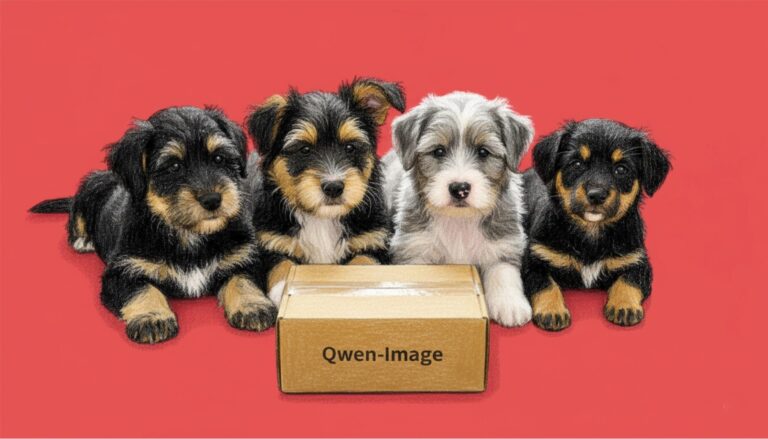

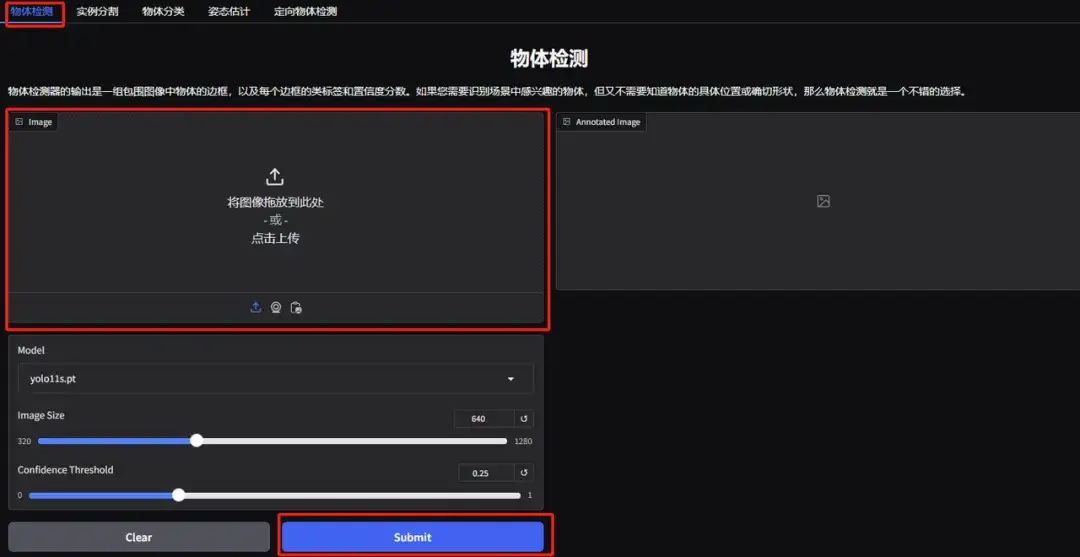

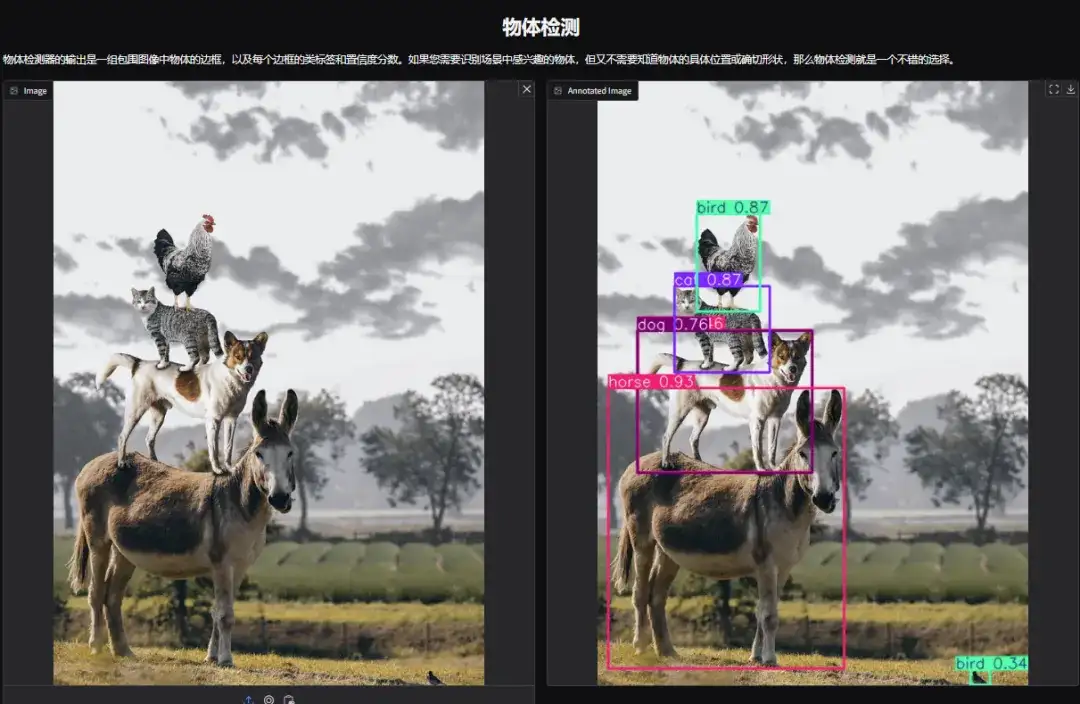

1. Open the YOLOv11 object detection demo page. I uploaded a picture of animals stacked up in a circle, adjusted the parameters, and clicked "Submit". You can see that YOLOv11 accurately detects all the animals in the picture. There is even a bird hidden in the lower right corner! Did you notice it?

The parameters have the following meanings:

* Model: The YOLO model version to use.

* Image Size: The size of the input image. The model resizes the image to this size during detection.

* Confidence Threshold: Only detection results whose confidence exceeds this value are treated as valid targets.
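As a rough illustration of the Confidence Threshold parameter, the sketch below filters a list of detections by score. The `Detection` class, the `filter_by_confidence` helper, and the sample labels and scores are all hypothetical, made up for this example; the demo's actual post-processing happens inside the model pipeline and may differ in its details.

```python
from dataclasses import dataclass


@dataclass
class Detection:
    label: str
    confidence: float  # score between 0 and 1


def filter_by_confidence(detections, threshold):
    """Keep only detections whose confidence exceeds the threshold."""
    return [d for d in detections if d.confidence > threshold]


# Hypothetical raw model output, invented for illustration only.
raw = [
    Detection("giraffe", 0.91),
    Detection("zebra", 0.58),
    Detection("bird", 0.27),
    Detection("dog", 0.12),
]

kept = filter_by_confidence(raw, threshold=0.25)
print([d.label for d in kept])  # ['giraffe', 'zebra', 'bird']
```

Raising the threshold removes low-confidence boxes at the risk of missing small or partly hidden objects; lowering it does the opposite.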

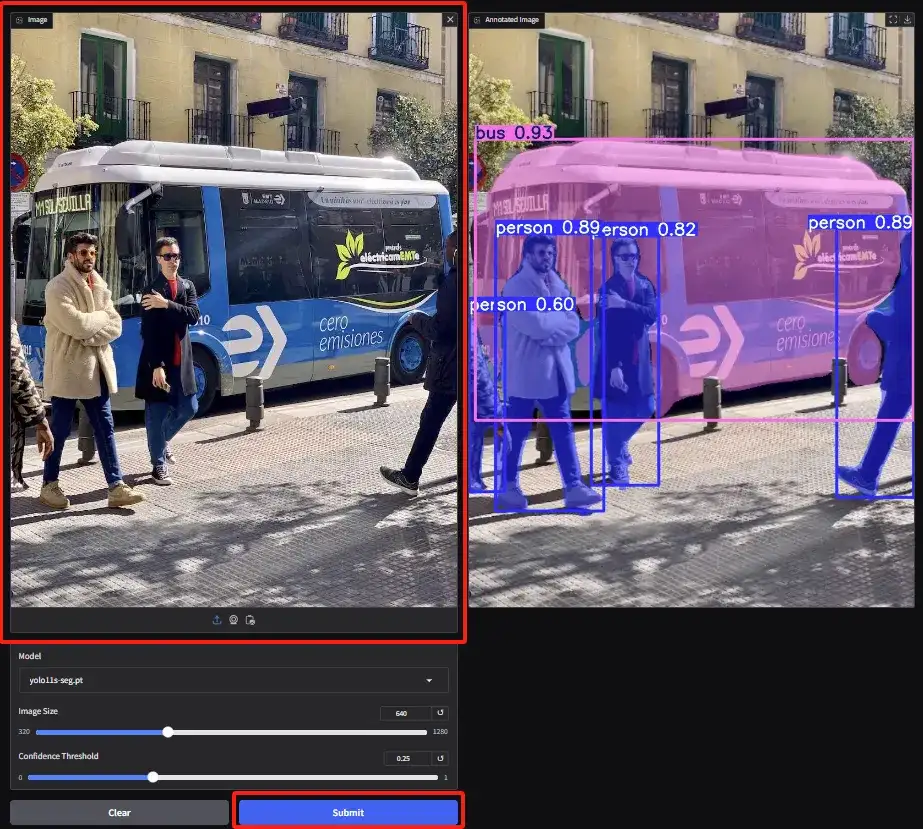

2. Open the instance segmentation demo page, upload an image, adjust the parameters, and click "Submit" to run the segmentation. Even with occlusion, YOLOv11 accurately segments the person and traces the contour of the bus.

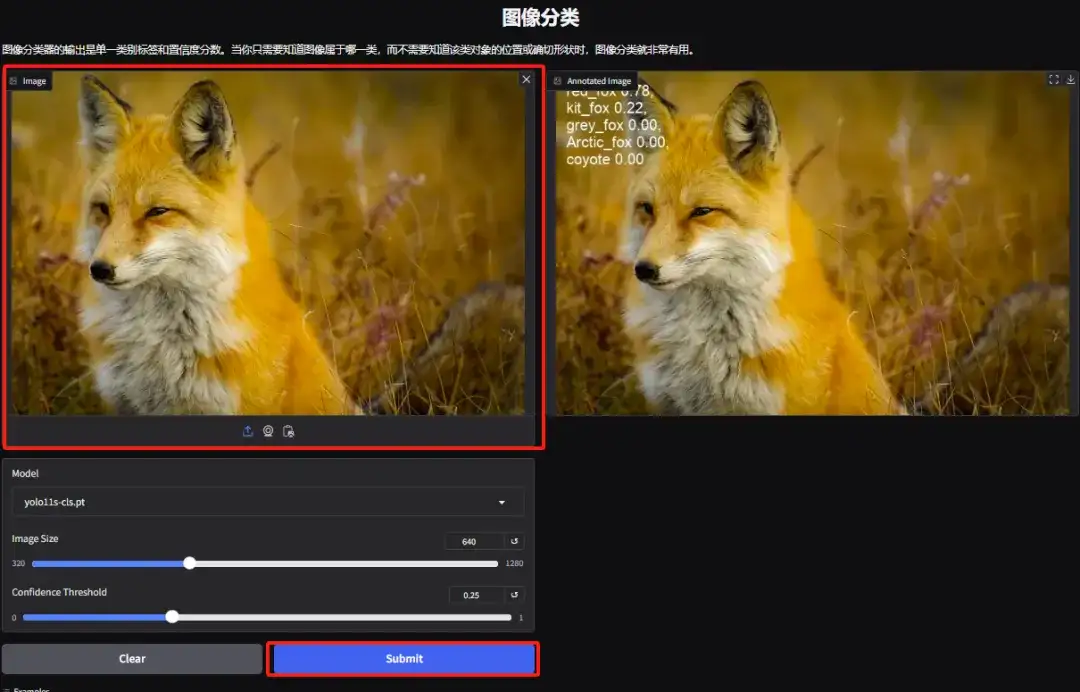

3. Open the object classification demo page. I uploaded a picture of a fox, and YOLOv11 correctly identified the species as a red fox.

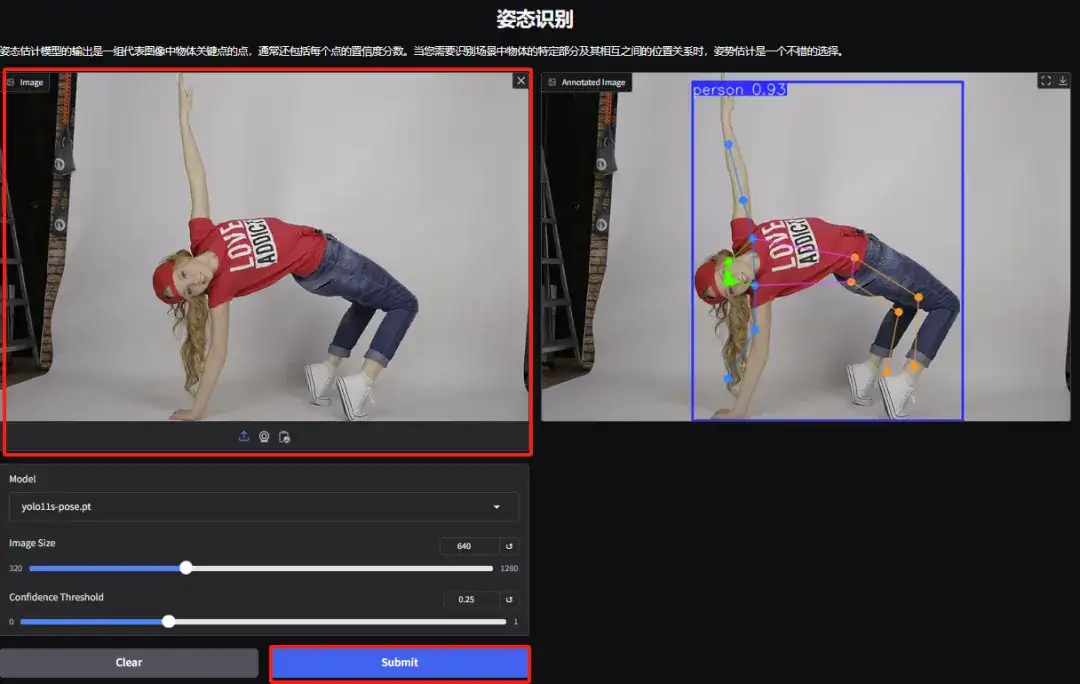

4. Open the pose estimation demo page, upload a picture, adjust the parameters to suit the picture, and click "Submit" to run the pose analysis. You can see that it accurately captures the exaggerated body movements of the people in the picture.

5. On the oriented object detection demo page, upload an image, adjust the parameters, and click "Submit" to identify the location and class of each object.
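For readers who want to try the same five tasks outside the web demo, here is a minimal sketch. It assumes the open-source `ultralytics` Python package is installed, which the tutorial itself does not require. The weight file names follow the Ultralytics naming for YOLO11 nano models; the `weights_for` and `run_task` helpers and their default values are illustrative assumptions, not part of the tutorial.

```python
# Assumed mapping from task name to Ultralytics YOLO11 nano weights.
TASK_WEIGHTS = {
    "detect": "yolo11n.pt",         # object detection
    "segment": "yolo11n-seg.pt",    # instance segmentation
    "classify": "yolo11n-cls.pt",   # image classification
    "pose": "yolo11n-pose.pt",      # pose estimation
    "obb": "yolo11n-obb.pt",        # oriented object detection
}


def weights_for(task):
    """Return the weight file name for a task, or raise ValueError."""
    if task not in TASK_WEIGHTS:
        raise ValueError(f"unknown task: {task!r}")
    return TASK_WEIGHTS[task]


def run_task(task, image_path, imgsz=640, conf=0.25):
    """Run one task on one image (hypothetical helper).

    imgsz and conf play the roles of the demo's Image Size and
    Confidence Threshold parameters.
    """
    # Imported lazily so the mapping above is usable without ultralytics.
    from ultralytics import YOLO

    model = YOLO(weights_for(task))
    return model.predict(image_path, imgsz=imgsz, conf=conf)
```

Calling `run_task("segment", "bus.jpg")` with a local image of your own would typically download the weights on first use and return a list of result objects.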