Major Breakthrough in PLMs! The Latest Work from Shanghai Jiao Tong University and Shanghai AI Lab Was Selected for NeurIPS 2024: ProSST Effectively Integrates Protein Structure Information

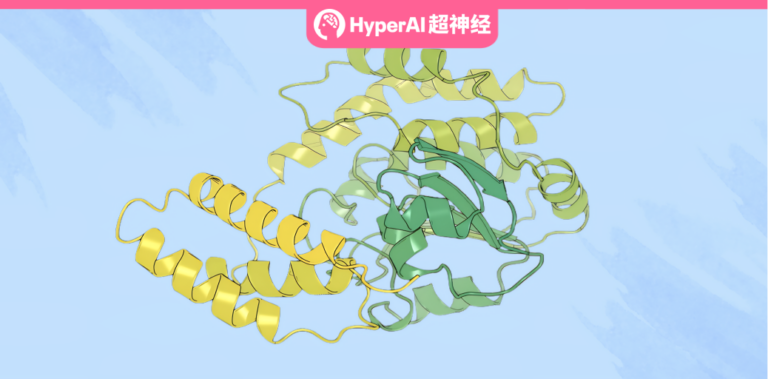

Proteins are key molecules of life: their sequence determines their structure, and their three-dimensional structure in turn determines their function. For decades, scientists have used X-ray crystallography, nuclear magnetic resonance (NMR) and other techniques to resolve thousands of protein structures, providing important clues for understanding protein function. However, with millions of proteins to characterize, experimentally resolving every protein structure is an extremely arduous task.

Inspired by pre-trained language models in natural language processing, pre-trained protein language models (PLMs) emerged. By learning from massive amounts of unlabeled protein sequence data, PLMs capture the complex patterns and interactions within protein sequences, and they have brought revolutionary progress to protein function prediction, structure analysis, and the identification of protein-protein interactions.

However, most PLMs focus on modeling protein sequences and ignore structural information, mainly because structural data have been scarce. With the emergence of technologies such as AlphaFold and RoseTTAFold, the accuracy of protein structure prediction has improved dramatically, and researchers have begun to explore how to integrate protein structure information into PLMs effectively and train large-scale, structure-aware pre-trained language models.

One such effort comes from the research group of Professor Hong Liang (Institute of Natural Sciences, School of Physics and Astronomy, Zhangjiang Institute for Advanced Studies, and School of Pharmacy, Shanghai Jiao Tong University), together with Assistant Researcher Zhou Bingxin of Shanghai Jiao Tong University and Young Researcher Tan Pan of the Shanghai Artificial Intelligence Laboratory. The team recently developed a structure-aware pre-trained protein language model: ProSST.

Specifically, the model is pre-trained on a large dataset of 18.8 million protein structures. Each protein structure is converted into a sequence of structure tokens, which is fed into a Transformer together with the amino acid sequence. A disentangled attention mechanism lets ProSST fuse these two types of information effectively, and as a result it significantly outperforms existing models on supervised tasks such as thermal stability prediction, metal ion binding prediction, protein localization prediction, and GO annotation prediction.

The research, titled "ProSST: Protein Language Modeling with Quantized Structure and Disentangled Attention", has been selected for NeurIPS 2024.

Research highlights:

* This study proposes a protein structure quantizer that can convert protein structure into a series of discrete structural elements. These discrete structural elements can effectively characterize the local structural information of residues in proteins.

* This study proposes a disentangled attention mechanism that learns the relationship between a protein's amino acid sequence and its three-dimensional structure, enabling efficient information integration between the discretized structure sequence and the amino acid sequence.

* Compared with other large pre-trained protein models such as the ESM series and SaProt, ProSST has only 110M parameters, far fewer than the 650M of the classic ESM models. Even so, ProSST achieves the best performance on almost all downstream protein tasks, which reflects the strength of its architecture design.

* ProSST ranks first on ProteinGym, the largest benchmark for zero-shot mutation effect prediction. On the latest ProteinGym leaderboard, it is the first open-source model whose zero-shot mutation effect predictions reach a Spearman correlation above 0.5.

Paper address:

https://neurips.cc/virtual/2024/poster/96656

Datasets: mainstream unsupervised pre-training data covering 18.8 million protein structures

For pre-training and evaluating ProSST, the research team mainly used the following datasets:

* AlphaFoldDB dataset: from the more than 214 million structures in AlphaFoldDB, the team used a 90% redundancy-reduced version totaling 18.8 million structures. Of these, 100,000 structures were randomly held out as a validation set for monitoring perplexity during training.

* CATH43-S40 dataset: 31,885 protein crystal structure domains, deduplicated at 40% sequence similarity. After removing structures missing key atoms (such as Cα and N), 31,270 records remain, of which 200 structures were randomly selected as a validation set to monitor and optimize model performance.

* CATH43-S40 local structure dataset: local structures extracted from the CATH43-S40 dataset. Using a star-graph construction, 4,735,677 local structures were extracted; they are embedded by the structure encoder and clustered to build the structure codebook.

* ProteinGym benchmark dataset: used to evaluate ProSST on zero-shot mutation effect prediction. It includes 217 experimental assays, each providing the protein's sequence and structure, with special attention to the 66 assays that measure thermal stability. Spearman correlation, Top-recall and NDCG are used as evaluation metrics.
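
As an illustration of the first two metrics, the sketch below computes Spearman correlation (the Pearson correlation of rank vectors, with ties sharing their average rank) and Top-recall from model scores and measured fitness values in plain Python. The 10% default cutoff for Top-recall reflects our understanding of the ProteinGym convention and should be treated as an assumption; the function names are our own, and the benchmark's official scripts remain the reference implementation. NDCG is not shown.

```python
from typing import List, Sequence


def average_ranks(values: Sequence[float]) -> List[float]:
    """1-based ranks; tied values share the mean of the ranks they span."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    start = 0
    while start < len(order):
        end = start
        # Extend `end` across a run of tied values.
        while end + 1 < len(order) and values[order[end + 1]] == values[order[start]]:
            end += 1
        mean_rank = (start + end) / 2 + 1
        for k in range(start, end + 1):
            ranks[order[k]] = mean_rank
        start = end + 1
    return ranks


def spearman(pred: Sequence[float], true: Sequence[float]) -> float:
    """Spearman's rho: the Pearson correlation of the two rank vectors."""
    rp, rt = average_ranks(pred), average_ranks(true)
    n = len(rp)
    mp, mt = sum(rp) / n, sum(rt) / n
    cov = sum((a - mp) * (b - mt) for a, b in zip(rp, rt))
    var_p = sum((a - mp) ** 2 for a in rp)
    var_t = sum((b - mt) ** 2 for b in rt)
    return cov / (var_p * var_t) ** 0.5


def top_recall(pred: Sequence[float], true: Sequence[float], frac: float = 0.1) -> float:
    """Share of the experimentally top `frac` variants that the model also ranks in its top `frac`."""
    k = max(1, round(frac * len(true)))
    top_pred = set(sorted(range(len(pred)), key=lambda i: pred[i], reverse=True)[:k])
    top_true = set(sorted(range(len(true)), key=lambda i: true[i], reverse=True)[:k])
    return len(top_pred & top_true) / k


if __name__ == "__main__":
    model_scores = [0.1, 0.4, 0.35, 0.8]
    measured = [1.0, 2.0, 3.0, 4.0]
    print(spearman(model_scores, measured))  # 0.8
```

Rank-based metrics are used because only the ordering of variants matters for zero-shot scoring; the model's raw scores need not be on the same scale as the experimental measurements.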

ProSST: a structure-aware PLM built from two key modules

ProSST (Protein Sequence-Structure Transformer), developed in this study, is a pre-trained protein language model with structure-aware capabilities. As shown in the figure below, ProSST consists of two main modules: the structure quantization module and a Transformer with sequence-structure disentangled attention.

Structure Quantization Module: serializing and quantizing protein structure into a sequence of structure tokens

The goal of the structure quantization module is to convert the local structure around each residue in a protein into a discrete token. First, a pre-trained structure encoder encodes the local structure into a dense vector. Next, a pre-trained k-means clustering model assigns the local structure a category label based on that vector. Finally, the category label is assigned to the residue as its structure token.

* Compared with the overall protein structure, local structure descriptions are more fine-grained
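
The label-assignment step described above (dense vector in, discrete token out) can be sketched as a nearest-centroid lookup. This is a minimal illustration, assuming squared Euclidean distance in the encoder's latent space; the codebook and embeddings are toy placeholders rather than ProSST's trained values, and the function names are hypothetical.

```python
from typing import List, Sequence

Vector = Sequence[float]


def squared_distance(a: Vector, b: Vector) -> float:
    return sum((x - y) ** 2 for x, y in zip(a, b))


def nearest_centroid(vector: Vector, codebook: Sequence[Vector]) -> int:
    """Index of the codebook centroid closest to `vector`."""
    return min(range(len(codebook)), key=lambda k: squared_distance(vector, codebook[k]))


def quantize(embeddings: Sequence[Vector], codebook: Sequence[Vector]) -> List[int]:
    """Turn each residue's dense local-structure embedding into a discrete structure token."""
    return [nearest_centroid(e, codebook) for e in embeddings]


if __name__ == "__main__":
    toy_codebook = [[0.0, 0.0], [1.0, 1.0], [5.0, 5.0]]      # K = 3 centroids (toy values)
    toy_embeddings = [[0.1, -0.2], [4.8, 5.1], [0.9, 1.2]]   # one vector per residue
    print(quantize(toy_embeddings, toy_codebook))            # [0, 2, 1]
```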

Specifically, the study used a geometric vector perceptron (GVP) as the local structure encoder, as shown in Figure A below. The GVP was combined with a decoder containing a position-aware multilayer perceptron (MLP) to form an autoencoder, and the whole model was trained with a denoising pre-training objective. After training on the CATH dataset, the researchers kept only the encoder, using its average-pooled output as the final representation of a local structure.
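
The article does not spell out the noise model used for denoising pre-training, so the sketch below simply assumes isotropic Gaussian noise on 3D coordinates as the corruption step, and shows the average pooling that collapses per-node encoder outputs into one vector per local structure. The GVP encoder and the MLP decoder themselves are not reproduced, and `add_coordinate_noise` and `mean_pool` are hypothetical helper names.

```python
import random
from typing import List, Sequence, Tuple

Coord = Tuple[float, float, float]


def add_coordinate_noise(coords: Sequence[Coord], sigma: float, rng: random.Random) -> List[Coord]:
    """Assumed corruption step: perturb every coordinate with Gaussian noise of std `sigma`.
    A denoising autoencoder is then trained to recover the clean structure."""
    return [
        (x + rng.gauss(0.0, sigma), y + rng.gauss(0.0, sigma), z + rng.gauss(0.0, sigma))
        for x, y, z in coords
    ]


def mean_pool(node_embeddings: Sequence[Sequence[float]]) -> List[float]:
    """Average per-node encoder outputs into one fixed-size vector for the local structure."""
    n = len(node_embeddings)
    dim = len(node_embeddings[0])
    return [sum(node[d] for node in node_embeddings) / n for d in range(dim)]


if __name__ == "__main__":
    rng = random.Random(0)
    clean = [(0.0, 0.0, 0.0), (3.8, 0.0, 0.0)]
    noisy = add_coordinate_noise(clean, sigma=0.1, rng=rng)  # encoder input during training
    print(len(noisy))                                        # 2
    print(mean_pool([[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]]))   # [3.0, 4.0]
```

Mean pooling makes the representation independent of how many nodes a local graph has, which is what allows every residue's neighbourhood to be compared in one shared latent space.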

Next, as shown in Figure B below, the dense vectors produced by the local structure encoder are quantized into discrete tokens. To build the quantizer, the researchers used the GVP structure encoder to embed the local structures of all residues in the CATH dataset into a continuous latent space, and then applied the k-means algorithm to find K centroids in this space; these centroids constitute the structure codebook.
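
Building the codebook amounts to running k-means over the latent vectors of all local structures. The following is a plain Lloyd's-algorithm sketch for illustration only: ProSST's actual clustering setup (initialization, iteration count, value of K) is not given in this article, and the `kmeans` function and toy 2-D data are our own.

```python
import random
from typing import List, Sequence


def squared_distance(a: Sequence[float], b: Sequence[float]) -> float:
    return sum((x - y) ** 2 for x, y in zip(a, b))


def kmeans(points: Sequence[Sequence[float]], k: int, iters: int = 100, seed: int = 0) -> List[List[float]]:
    """Plain Lloyd's algorithm; the returned centroids play the role of the structure codebook."""
    rng = random.Random(seed)
    centroids = [list(p) for p in rng.sample(list(points), k)]  # start from k data points
    for _ in range(iters):
        # Assignment step: attach every point to its nearest centroid.
        clusters: List[List[Sequence[float]]] = [[] for _ in range(k)]
        for p in points:
            best = min(range(k), key=lambda j: squared_distance(p, centroids[j]))
            clusters[best].append(p)
        # Update step: move each centroid to its cluster mean (keep it if the cluster is empty).
        updated = []
        for j, members in enumerate(clusters):
            if members:
                dim = len(members[0])
                updated.append([sum(m[d] for m in members) / len(members) for d in range(dim)])
            else:
                updated.append(centroids[j])
        if updated == centroids:
            break  # converged: the centroids no longer move
        centroids = updated
    return centroids


if __name__ == "__main__":
    # Toy 2-D "latent vectors" forming two well-separated groups.
    latents = [[0, 0], [0, 1], [1, 0], [10, 10], [10, 11], [11, 10]]
    print(sorted(kmeans(latents, k=2)))
```

Once the centroids are fixed, the codebook is frozen, and tokenizing a new protein only requires the nearest-centroid lookup, not another clustering run.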

Finally, for the residue at position i in the protein sequence, the study first constructs a graph G_i from its local structure and embeds it into a continuous vector r_i with the GVP structure encoder. The vector r_i is then assigned to its nearest centroid in the structure codebook, and that centroid's index serves as the residue's structure token. Applying this to every residue, as shown in Figure C below, serializes and quantizes the entire protein structure into a sequence of structure tokens.
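
A minimal sketch of this serialization loop, under stated assumptions: G_i is built as a star graph around residue i from its nearest neighbours by Cα distance (the neighbour count `num_neighbors` is a hypothetical parameter), and `encode` stands in for the trained GVP encoder. The `toy_encode` function exists only to make the example runnable and is not ProSST's encoder.

```python
import math
from typing import Callable, List, Sequence, Tuple

Coord = Tuple[float, float, float]
Edge = Tuple[int, int]
# Hypothetical encoder signature: (all C-alpha coords, node ids, edges) -> dense vector r_i.
Encoder = Callable[[Sequence[Coord], List[int], List[Edge]], List[float]]


def star_graph(ca: Sequence[Coord], i: int, num_neighbors: int) -> Tuple[List[int], List[Edge]]:
    """Local graph G_i: residue i plus its nearest residues by C-alpha distance,
    with an edge from the centre residue to each neighbour."""
    others = sorted((j for j in range(len(ca)) if j != i), key=lambda j: math.dist(ca[i], ca[j]))
    nodes = [i] + others[:num_neighbors]
    edges = [(i, j) for j in nodes[1:]]
    return nodes, edges


def serialize_structure(ca: Sequence[Coord], num_neighbors: int, encode: Encoder,
                        codebook: Sequence[Sequence[float]]) -> List[int]:
    """Map every residue to a structure token: build G_i, embed it as r_i, take the nearest centroid."""
    tokens = []
    for i in range(len(ca)):
        nodes, edges = star_graph(ca, i, num_neighbors)
        r_i = encode(ca, nodes, edges)
        s_i = min(range(len(codebook)),
                  key=lambda k: sum((a - b) ** 2 for a, b in zip(r_i, codebook[k])))
        tokens.append(s_i)
    return tokens


def toy_encode(ca: Sequence[Coord], nodes: List[int], edges: List[Edge]) -> List[float]:
    """Stand-in for the GVP encoder: mean centre-to-neighbour distance as a 1-D vector."""
    return [sum(math.dist(ca[a], ca[b]) for a, b in edges) / len(edges)]


if __name__ == "__main__":
    ca_coords = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (2.0, 0.0, 0.0), (10.0, 0.0, 0.0)]
    print(serialize_structure(ca_coords, num_neighbors=2, encode=toy_encode,
                              codebook=[[1.0], [5.0]]))  # [0, 0, 0, 1]
```

The output has one token per residue, so the structure token sequence lines up position by position with the amino acid sequence fed to the Transformer.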

Sequence-structure disentangled attention: learning residue-residue and residue-structure relationships

Inspired by the DeBERTa model, this study uses disentangled attention to learn the relationships among the residue sequence (amino acid sequence), the structure sequence, and relative positions. This lets the model process protein sequence and structure information jointly, and the disentanglement improves the model's performance and stability.

Specifically, the i-th residue in a protein's primary sequence is represented by three items: R_i, the encoding of its amino acid token; S_i, the encoding of its local structure token; and P_{i|j}, the encoding of the position of residue i relative to residue j. As shown in the figure below, the sequence-structure disentangled attention in this study combines five types of attention: residue to residue (R to R), residue to structure (R to S), residue to position (R to P), structure to residue (S to R), and position to residue (P to R). Together they let the model capture the complex relationship between protein sequence and structure in finer detail.
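
The five attention types can be combined into a single attention logit per residue pair. The sketch below sums them for every query/key pair, assuming that queries and keys have already been projected from the residue, structure and relative-position encodings, that relative offsets are clipped to a window of `max_dist`, and that logits are scaled by 1/sqrt(5d) by analogy with DeBERTa's 1/sqrt(3d) for its three terms. It illustrates the idea under those assumptions rather than reproducing ProSST's code.

```python
import math
from typing import List, Sequence

Vec = Sequence[float]


def dot(a: Vec, b: Vec) -> float:
    return sum(x * y for x, y in zip(a, b))


def rel_index(i: int, j: int, max_dist: int) -> int:
    """Clip the offset i - j to [-max_dist, max_dist] and shift it into a table index."""
    return max(-max_dist, min(max_dist, i - j)) + max_dist


def disentangled_scores(q_res: Sequence[Vec], k_res: Sequence[Vec],
                        q_str: Sequence[Vec], k_str: Sequence[Vec],
                        q_pos: Sequence[Vec], k_pos: Sequence[Vec],
                        max_dist: int) -> List[List[float]]:
    """Attention logits as the sum of the five residue/structure/position terms.

    q_res/k_res and q_str/k_str hold one projected vector per residue;
    q_pos/k_pos hold one projected vector per clipped offset (2 * max_dist + 1 rows).
    """
    n, d = len(q_res), len(q_res[0])
    scale = 1.0 / math.sqrt(5 * d)  # assumption: DeBERTa-style scaling extended to five terms
    scores = []
    for i in range(n):
        row = []
        for j in range(n):
            s = dot(q_res[i], k_res[j])                           # residue -> residue
            s += dot(q_res[i], k_str[j])                          # residue -> structure
            s += dot(q_res[i], k_pos[rel_index(i, j, max_dist)])  # residue -> position
            s += dot(q_str[i], k_res[j])                          # structure -> residue
            s += dot(q_pos[rel_index(j, i, max_dist)], k_res[j])  # position -> residue
            row.append(s * scale)
        scores.append(row)
    return scores


def softmax_rows(scores: Sequence[Sequence[float]]) -> List[List[float]]:
    """Row-wise softmax turning logits into attention weights."""
    out = []
    for row in scores:
        m = max(row)
        exps = [math.exp(x - m) for x in row]
        z = sum(exps)
        out.append([e / z for e in exps])
    return out


if __name__ == "__main__":
    n, d, max_dist = 3, 2, 1
    q = [[0.1 * (i + 1), 0.2] for i in range(n)]                      # toy projected vectors
    pos = [[0.05 * r, -0.05 * r] for r in range(2 * max_dist + 1)]    # toy offset vectors
    weights = softmax_rows(disentangled_scores(q, q, q, q, pos, pos, max_dist))
    print([round(sum(row), 6) for row in weights])
```

Keeping the terms separate, instead of adding structure and position encodings to the residue embedding before attention, lets each interaction type have its own projections; note that no structure-to-structure term is included, matching the five types listed above.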

ProSST leads in performance, and adding structural information greatly improves the model's representation ability

To verify the effectiveness of ProSST in zero-shot mutation effect prediction, the study compared it with a variety of state-of-the-art models, including sequence-based models, structure-sequence models, inverse folding models, evolutionary models, and ensemble models.

As shown in the following table, ProSST outperforms all compared models on the ProteinGym benchmark and achieves the best stability. In addition, ProSST (-structure) performs comparably to other sequence models, which confirms that ProSST's performance gain comes mainly from its effective integration of structural information.

* ProSST (-structure) is the variant without the structure information module
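
For zero-shot mutation effect prediction, masked language models are commonly scored by the log-likelihood ratio between the mutant and wild-type residues at each mutated position, summed over positions for multi-point mutants. The sketch below assumes this scoring rule and a ProteinGym-style mutation string such as `A23G` (colon-separated for multiple mutations); whether ProSST uses exactly this rule is not stated in this article, and the per-position log-probabilities are hand-written stand-ins for model output.

```python
from typing import Mapping, Sequence, Tuple


def parse_mutation(mut: str) -> Tuple[str, int, str]:
    """Split 'A23G' into (wild-type residue, 0-based position, mutant residue)."""
    return mut[0], int(mut[1:-1]) - 1, mut[-1]


def zero_shot_score(log_probs: Sequence[Mapping[str, float]], wt_seq: str, mutations: str) -> float:
    """Sum over mutated positions of log p(mutant) - log p(wild type).

    `log_probs[pos]` maps amino acids to the model's log-probability at that position;
    multi-point mutants are joined with ':' (e.g. 'A2G:L3P').
    """
    score = 0.0
    for mut in mutations.split(":"):
        wt, pos, mt = parse_mutation(mut)
        if wt_seq[pos] != wt:
            raise ValueError(f"{mut}: wild-type residue at position {pos + 1} is {wt_seq[pos]}")
        score += log_probs[pos][mt] - log_probs[pos][wt]
    return score


if __name__ == "__main__":
    wild_type = "MAL"
    # Hand-written log-probabilities standing in for a model's per-position output.
    toy_log_probs = [
        {"M": -0.1, "A": -3.0},
        {"A": -0.5, "G": -1.5},
        {"L": -0.2, "P": -4.0},
    ]
    print(zero_shot_score(toy_log_probs, wild_type, "A2G"))  # -1.0
```

A negative score means the model finds the mutant residue less plausible than the wild type in its context; ranking all variants of a protein by this score is what the Spearman correlation on ProteinGym evaluates.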

For supervised learning, the study selected four major downstream protein tasks: thermal stability prediction (Thermostability), metal ion binding prediction (Metal Ion Binding), protein localization prediction (DeepLoc), and GO annotation prediction (MF/BP/CC). ProSST was compared with other models such as ESM-2, ESM-1b, SaProt, MIF-ST and GearNet, with the results shown in Table 2 below. ProSST achieved the best results among all models, taking first place in 5 of the 6 settings and second place in the remaining one.

Protein language model: a bridge between big data and life sciences

Since the release of large language models such as ChatGPT, protein language models (PLMs) pre-trained on large-scale protein sequence data have become a hot research topic in the life sciences. Current PLM research falls mainly into two directions:

* Retrieval-augmented PLMs: these models incorporate multiple sequence alignment (MSA) information during training or prediction to improve performance; examples include MSA Transformer and Tranception.

* Multimodal PLMs: unlike models that use only sequence information, multimodal PLMs integrate additional information such as protein structure. For example, the ProSST model described in this article strengthens its representations by fusing the structure token sequence with the amino acid sequence.

On the retrieval side, in April this year a research team from Fudan University and other institutions released PLMSearch, a homologous protein search method that takes sequences as input. PLMSearch uses a pre-trained protein language model to obtain deep representations and predict structural similarity. The work has been published in Nature Communications.

Paper link:

https://doi.org/10.1038/s41467-024-46808-5

On the multimodal side, Professor Huajun Chen's team at Zhejiang University recently proposed DePLM, a new denoising protein language model for protein optimization, which improves performance on protein optimization tasks by optimizing evolutionary information. The work has been accepted at the top conference NeurIPS 2024.

More details: Selected for NeurIPS 24! Zhejiang University team proposed a new denoising protein language model DePLM, which predicts mutation effects better than SOTA models

As these breakthrough studies continue to emerge, PLM is gradually becoming a powerful tool for exploring unknown areas of life sciences. It has great potential in areas such as protein function prediction, interaction prediction, and phenotype association prediction, and is expected to provide new ideas for disease treatment and improving human life.