The First Complete Triton Chinese Documentation Is Online! Opening a New Era of GPU Inference Acceleration

Three years after its release, Triton is leading the new wave of AI compilers

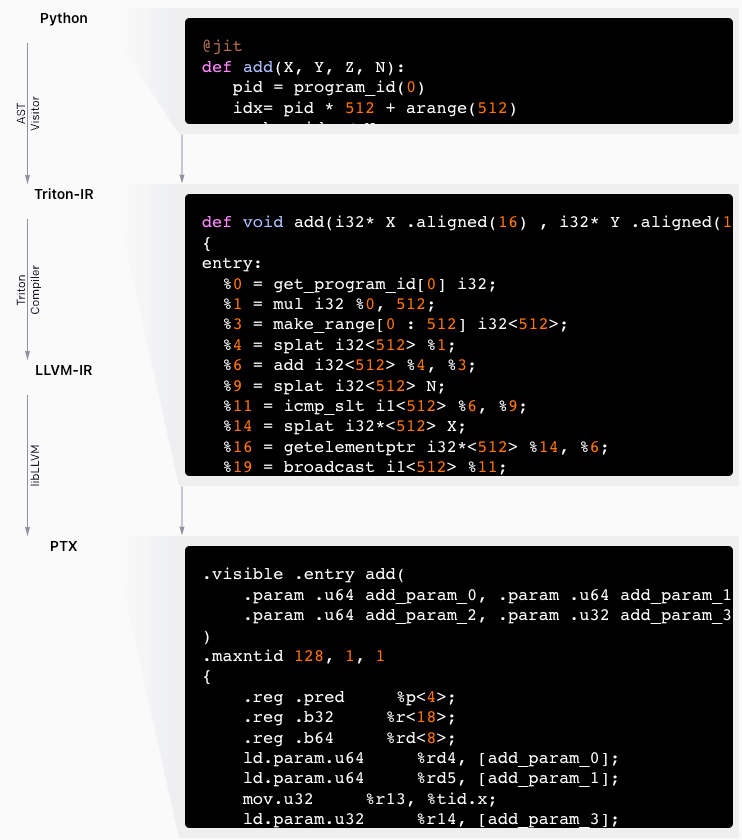

In 2019, Philippe Tillet et al. first proposed Triton in the paper "Triton: An Intermediate Language and Compiler for Tiled Neural Network Computations". In 2020, Philippe Tillet joined OpenAI and continued to lead the development of the Triton project, and in 2021, the Triton compiler was first publicly released.

Paper address: https://dl.acm.org/doi/10.1145/3315508.3329973

The Triton compiler is designed to enable researchers and developers to achieve expert-level CUDA performance with less code. It has attracted considerable attention in deep learning development both in China and abroad.

Triton is used to specify a block program and compile it into efficient GPU code
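To make the idea of a "block program" more concrete, here is a minimal sketch in plain Python. It is not Triton code and does not use the Triton API. The names `add_block`, `vector_add`, and `BLOCK_SIZE` are illustrative assumptions. It only mimics how a block program splits a vector addition into fixed-size blocks, with each program instance handling one block and a mask protecting the last, partially filled block.

```python
# Plain-Python emulation of a block (tiled) vector add.
# Not Triton API: add_block, vector_add and BLOCK_SIZE are illustrative names.

BLOCK_SIZE = 4  # elements handled by one program instance (assumed value)


def add_block(x, y, out, n_elements, pid):
    """One 'program instance': processes the pid-th block of the vectors."""
    block_start = pid * BLOCK_SIZE
    offsets = [block_start + i for i in range(BLOCK_SIZE)]
    # The mask guards the final, partially filled block against
    # out-of-bounds access.
    mask = [off < n_elements for off in offsets]
    for off, valid in zip(offsets, mask):
        if valid:
            out[off] = x[off] + y[off]


def vector_add(x, y):
    """Runs one program instance per block, like a 1D launch grid."""
    n_elements = len(x)
    out = [0] * n_elements
    num_blocks = (n_elements + BLOCK_SIZE - 1) // BLOCK_SIZE  # ceiling division
    for pid in range(num_blocks):  # on a GPU these instances run in parallel
        add_block(x, y, out, n_elements, pid)
    return out
```

In a real Triton kernel the inner loop would be replaced by block-wide loads, arithmetic, and stores, and the compiler would decide how to map each block onto GPU threads. The sketch only shows the partitioning and masking logic.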

Since its open source release in 2021, Triton has been known for its outstanding performance and flexibility, and it has quickly gained widespread attention and adoption around the world. It is used in multiple open source projects such as PyTorch, Unsloth, and FlagGems, and has also received strong support from chip giants such as NVIDIA, AMD, Intel, and Qualcomm.

In addition, leading AI companies such as Google, Microsoft, OpenAI, AWS and Meta have also used Triton as a key technology for building open AI software stacks. Triton is gradually becoming a new option for AI compilers with lower barriers to entry and higher availability.

The first complete version of Triton Chinese documentation is online

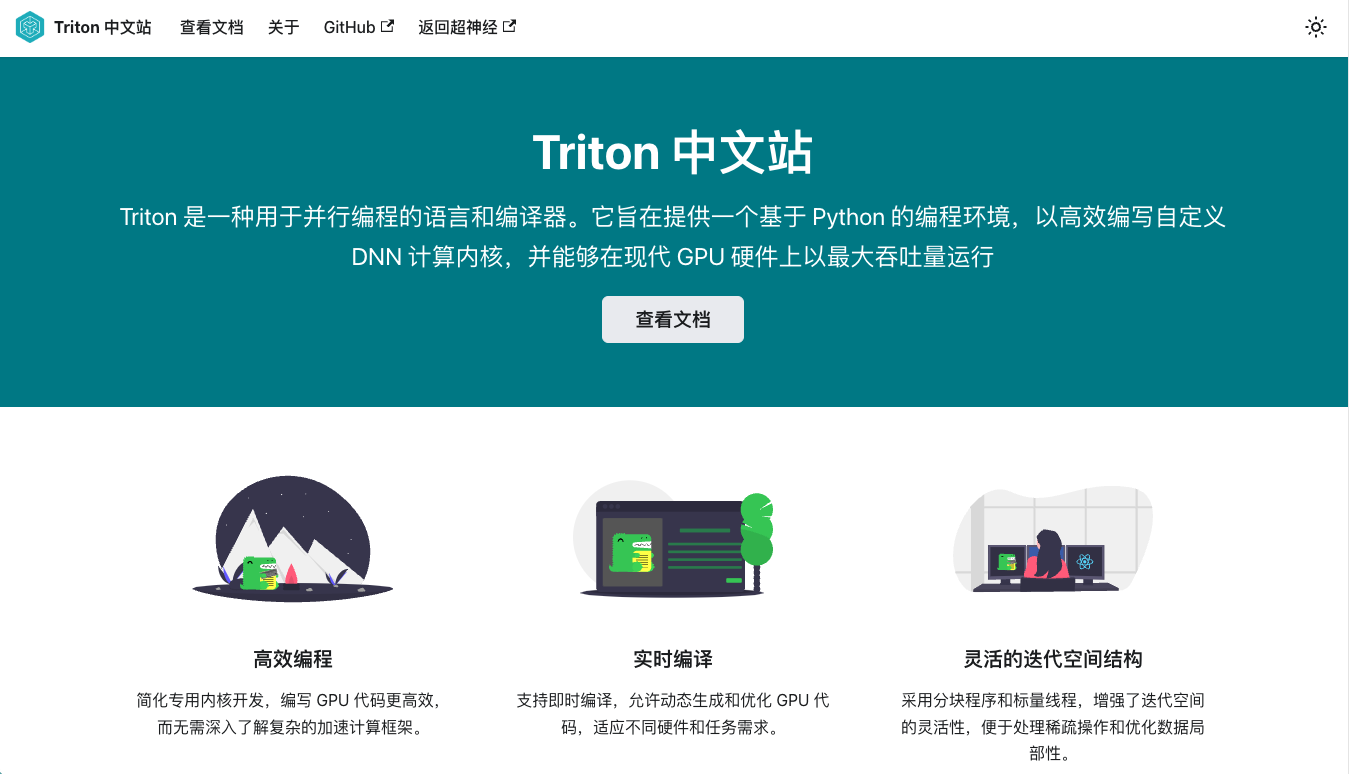

Although Triton's influence in the global AI field is rising rapidly, Triton-related information and resources in Chinese remain relatively scarce, and the breadth and depth of its application and research still need to be expanded. Working in an open source, collaborative way, HyperAI community volunteers spent 3 months localizing all of the Triton documents, each of which went through a first translation and a second proofreading under strict review. The documentation is now fully online: https://triton.hyper.ai/.

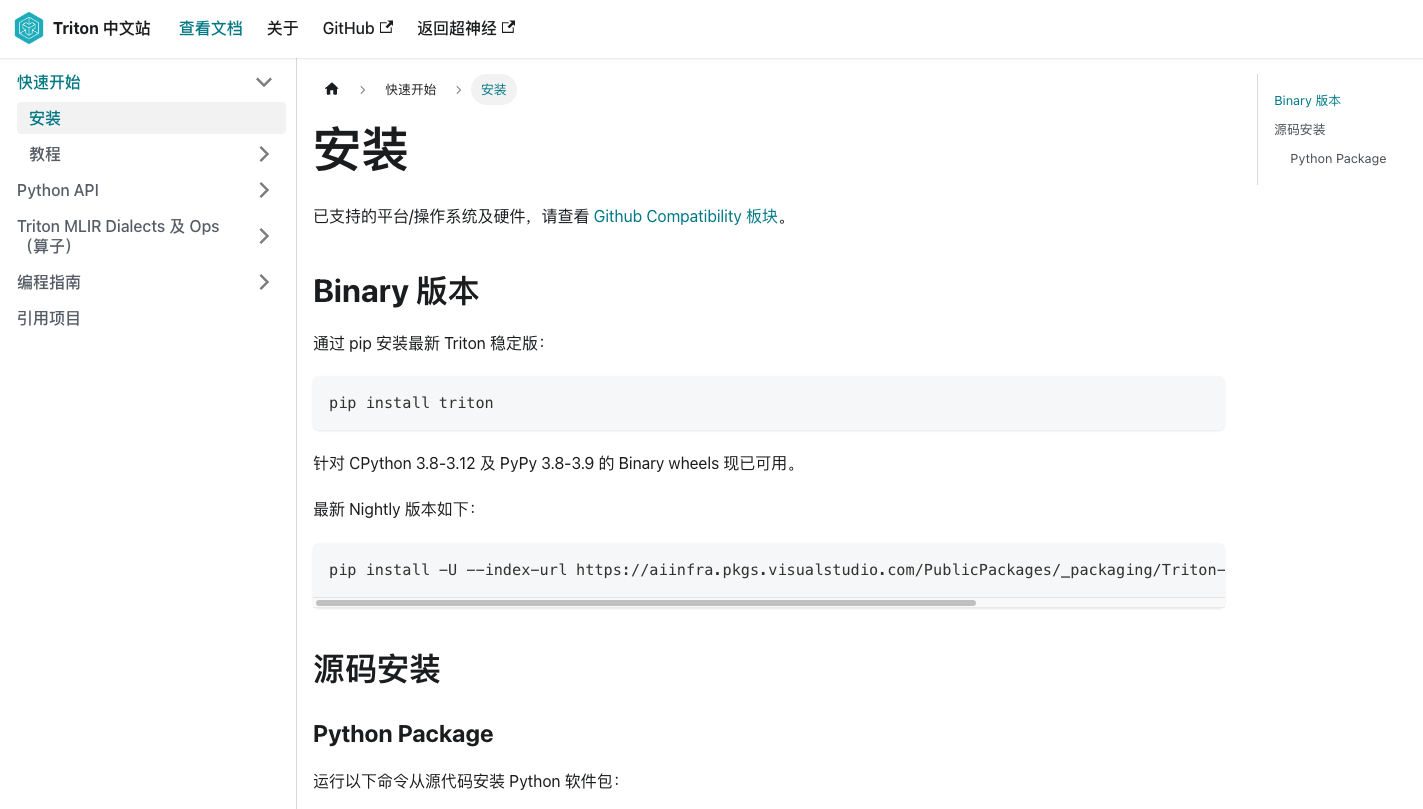

The Triton Chinese documentation is based on the English documentation, with its structure adjusted for localization. It is more in line with the reading habits of Chinese developers and provides a series of detailed and practical guides, from Triton installation to application development.

With the Triton Chinese documentation, you can:

* Get started with Triton from scratch and easily understand its core concepts and applications

* Gain in-depth understanding of Triton's advanced features and explore innovative paths

* Quickly identify practical problems and obtain solutions to improve efficiency

* Track Triton's cutting-edge technology trends and keep up with technology updates

* Participate in community discussions on Chinese documents and collaborate to create a technical ecosystem

Installation via pip and from source code

The "Reference Projects" section of the documentation collects notable open source and closed source projects that use Triton. Through these real cases, developers can gain a deeper understanding of how Triton is implemented in actual projects and master its optimization and integration techniques.

From TVM to Triton: Building a diverse and inclusive AI compiler community

In 2022, HyperAI launched the first Apache TVM Chinese documentation in China (see the original announcement: "TVM Chinese website is officially launched! The most comprehensive machine learning model deployment 'reference book' is here"). As domestic chips advance rapidly, it provides domestic compiler engineers with the infrastructure to understand and learn TVM. At the same time, we joined hands with Apache TVM PMC member Dr. Feng Siyuan and others to form the most active TVM Chinese community in China. Through online and offline activities, we have attracted the participation and support of mainstream domestic chip manufacturers, reaching more than a thousand chip developers and compiler engineers.

TVM Chinese Documentation Address:

Two years later, we hope to continue expanding the community's technical boundaries and content scope, and to build a more open, diverse, and inclusive AI compiler community. In addition to Apache TVM, we actively embrace established compiler technologies such as LLVM and MLIR, along with their related engineers and projects. The launch of the Triton Chinese website also shows our determination to expand the "circle of friends" of the AI compiler community.

The Chinese documentation of TVM and Triton is still being updated. As infrastructure, Chinese documentation is only the first step in building an ecosystem. Upstream, the ecosystem depends on the development tools and operating systems around the AI compiler. Downstream, it depends on hardware manufacturers and service providers. Only by building an end-to-end partnership that connects each link with the real needs of each partner, lowers barriers, and provides value for them can we create more possibilities for cooperation and progress.

We look forward to more corporate partners joining us to build a more open, diverse, and inclusive AI compiler community!

View the complete Triton Chinese documentation: https://triton.hyper.ai/

Star Triton Chinese on GitHub 🌟: https://github.com/hyperai/triton-cn