Online Tutorial | LivePortrait Achieves Ultra-Realistic Expression Migration, Bringing Virtual Idols to Life!

In the past, creating a dynamic video from a single image required complex animation techniques and a lot of manual work. Controlling details such as the eyes and lips was especially time-consuming, and realistic synchronization was difficult to achieve.

In the latest version, LivePortrait greatly simplifies this process through precise portrait editing and video editing. Creators can control subtle movements in the image and generate high-quality, detailed videos, which gives them more flexibility in creative work and content production.

The HyperAI tutorial section now includes "LivePortrait Kuaishou Open Source Image-Based Video Digital Human Demo". The environment is already set up, so there is no need to enter any commands. Clone the tutorial with one click and start right away!

Tutorial address:

Demo Run

Using LivePortrait to achieve expression migration

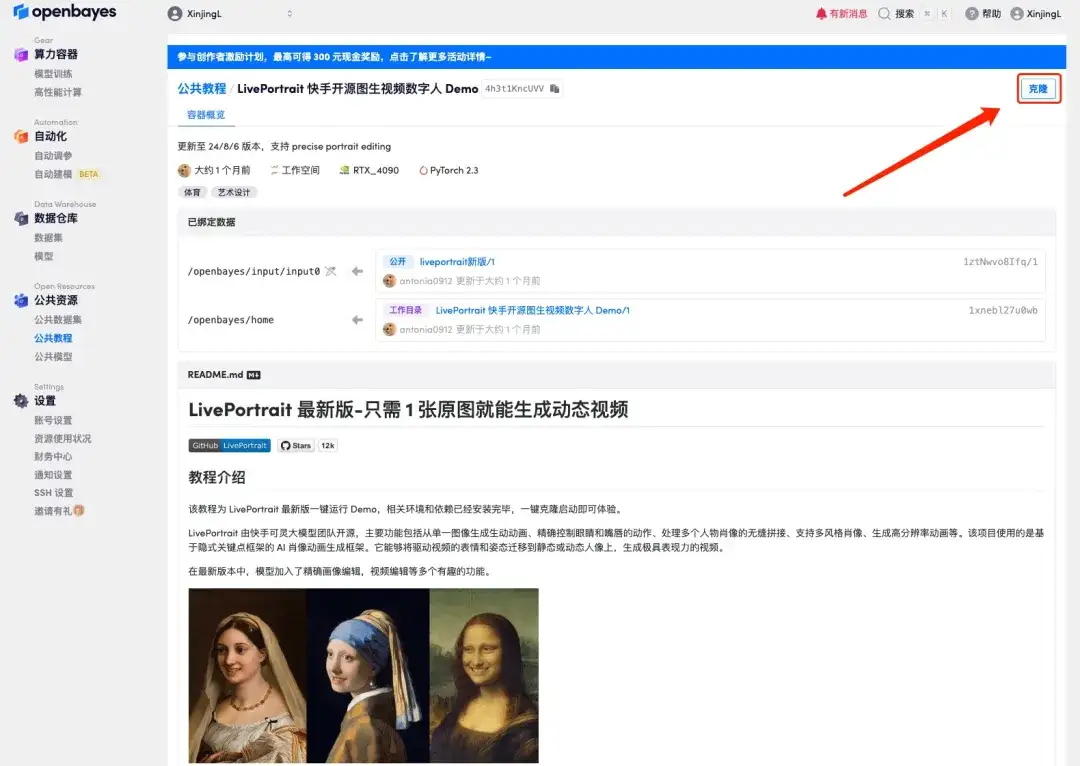

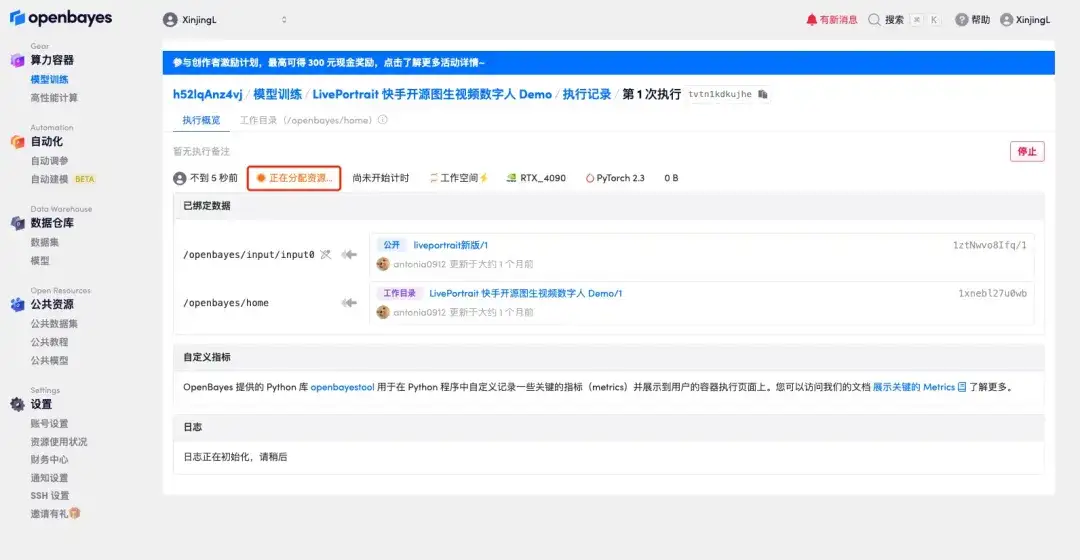

1. Log in to hyper.ai. On the Tutorial page, select "LivePortrait Kuaishou Open Source Image-Based Video Digital Human Demo" and click "Run this tutorial online".

2. After the page redirects, click "Clone" in the upper right corner to clone the tutorial into your own container.

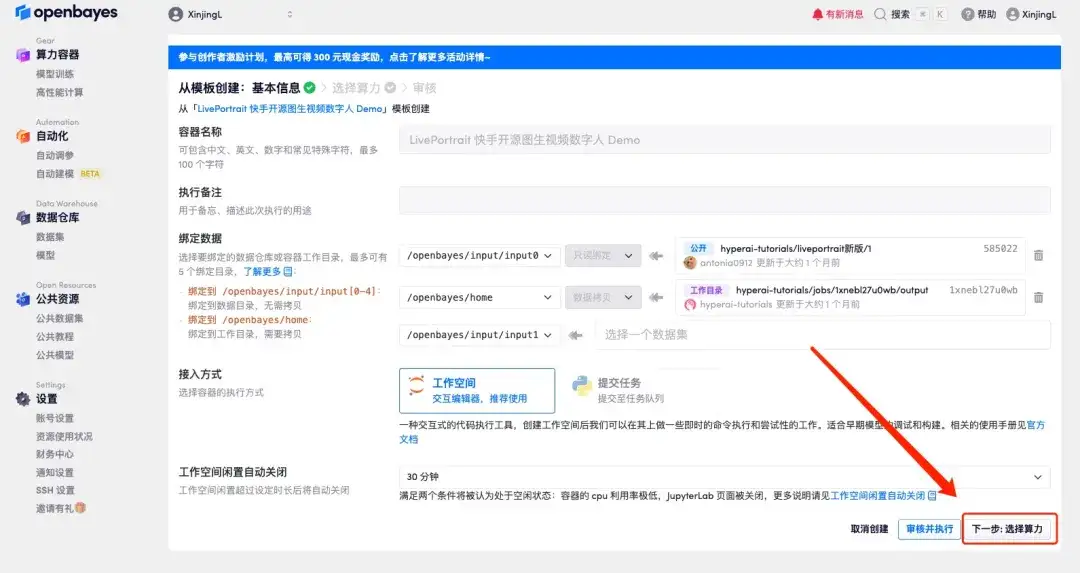

3. Click "Next: Select Hashrate" in the lower right corner.

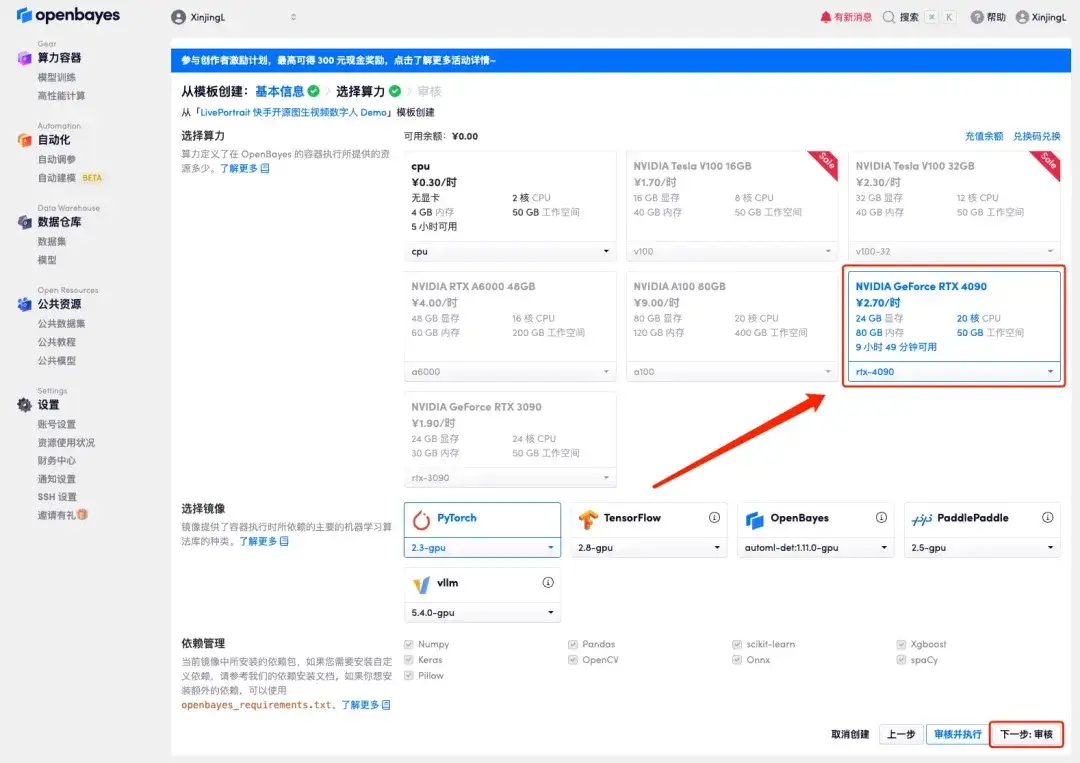

4. After the page redirects, select "NVIDIA RTX 4090" and the "PyTorch" image, then click "Next: Review". New users who register through the invitation link below get 4 hours of RTX 4090 time and 5 hours of CPU time for free!

HyperAI exclusive invitation link (copy and open in browser):

https://openbayes.com/console/signup?r=Ada0322_QZy7

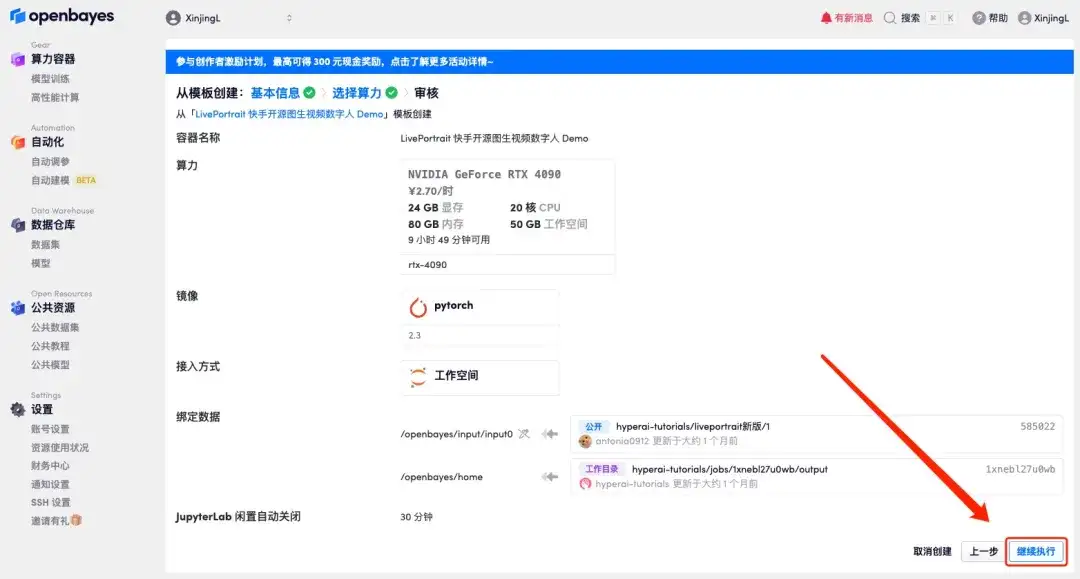

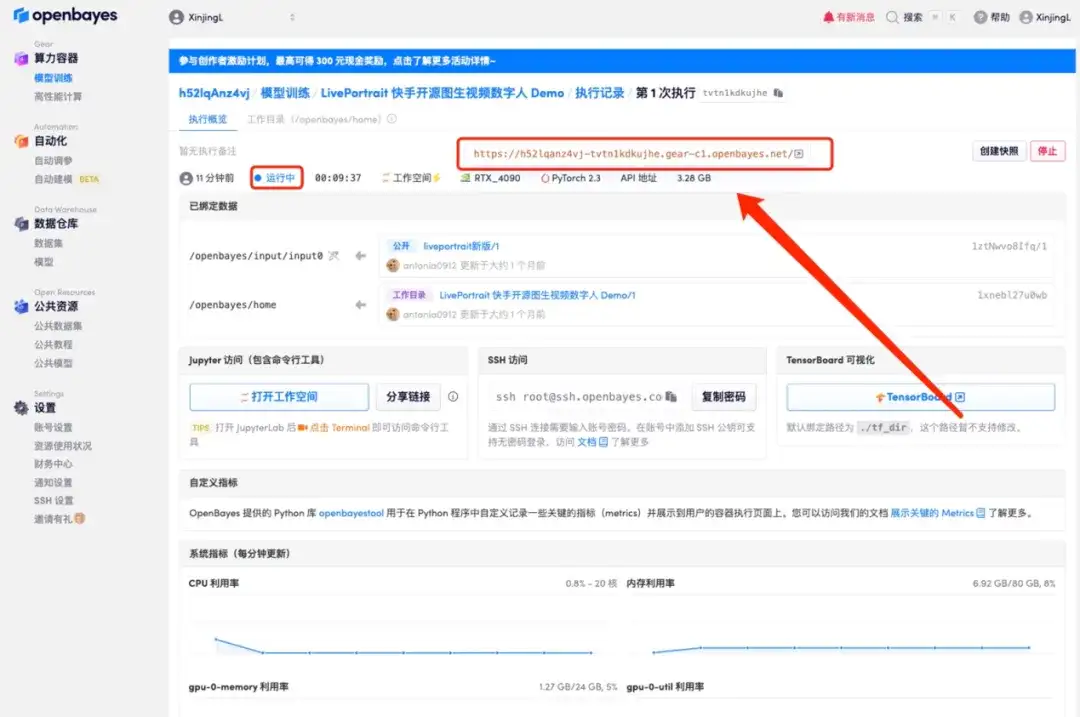

5. After confirming, click "Continue" and wait for resources to be allocated. The first clone takes about 1 minute. When the status changes to "Running", click the arrow next to "API Address" to open the Demo page. Note that users must complete real-name authentication before they can access the API address.

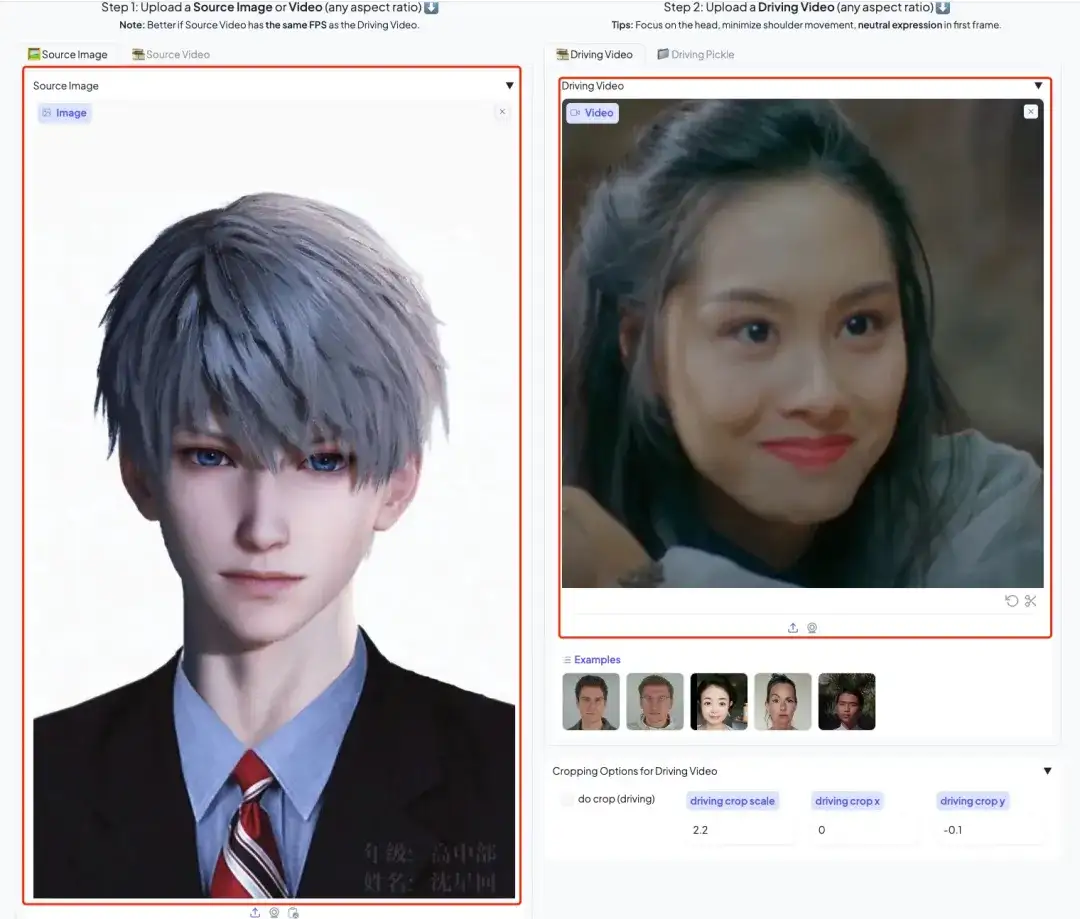

6. After the Demo opens, upload a picture and an expression reference video, click "Animate", and wait a moment for the video result to be generated.

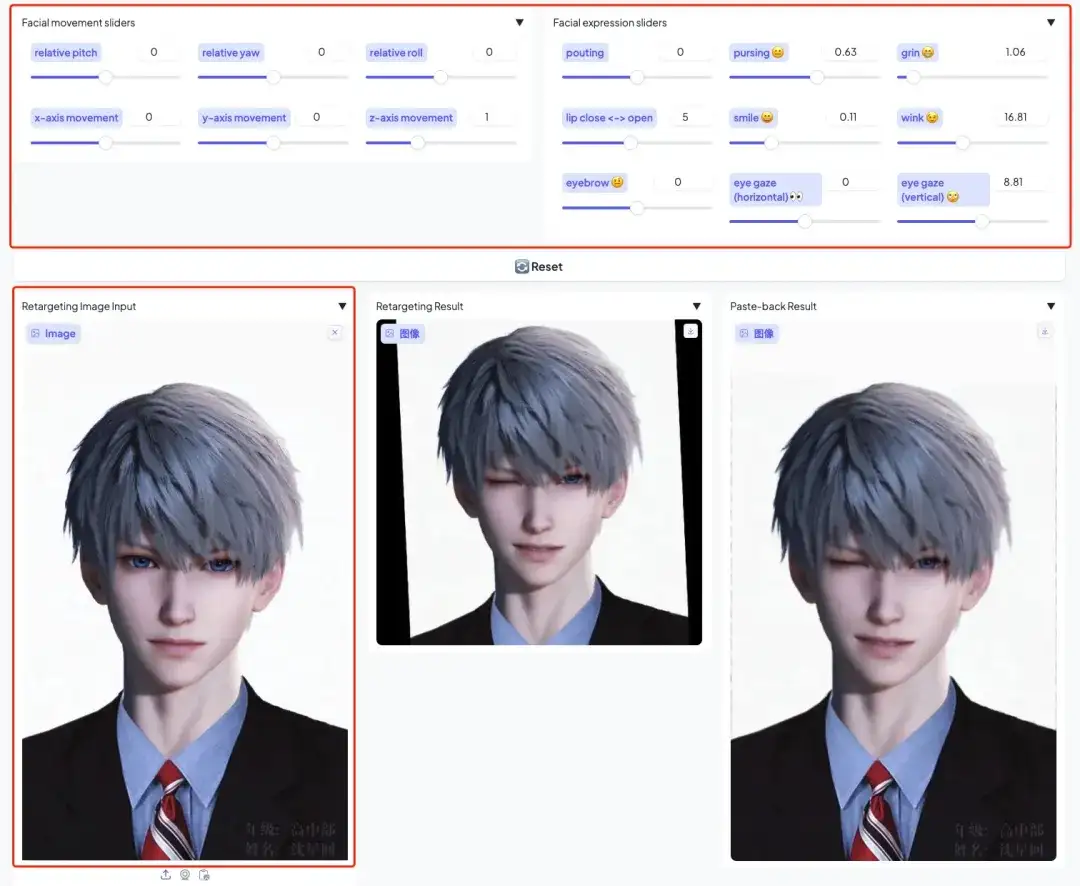
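Before uploading, it can help to confirm that the two files are the right way round: a still image as the source and a video as the expression reference. The snippet below is a minimal sketch of such a check, not part of LivePortrait or the platform. The helper names `media_kind` and `check_demo_inputs` are hypothetical, and the check looks only at the file extension. This tutorial does not state which formats the Demo accepts, so passing this check does not guarantee that an upload will succeed.

```python
from __future__ import annotations

import mimetypes
from pathlib import Path


def media_kind(path: str) -> str:
    """Return 'image', 'video', or 'other', judged by the file extension only."""
    mime, _ = mimetypes.guess_type(Path(path).name)
    if mime is None:
        return "other"
    top_level = mime.split("/", 1)[0]
    return top_level if top_level in ("image", "video") else "other"


def check_demo_inputs(source_path: str, driving_path: str) -> list[str]:
    """Collect readable problems; an empty list means the pair looks plausible."""
    problems = []
    if media_kind(source_path) != "image":
        problems.append(f"source should be an image: {source_path}")
    if media_kind(driving_path) != "video":
        problems.append(f"expression reference should be a video: {driving_path}")
    return problems


if __name__ == "__main__":
    # Hypothetical file names, used only to illustrate the call.
    for problem in check_demo_inputs("portrait.png", "expression.mp4"):
        print(problem)
```

If the two paths are swapped by mistake, the function returns one message per misplaced file, which is easier to act on than a failed upload in the browser.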

Custom expression refinement

1. In Precise Portrait Editing mode, upload a picture and adjust parameters such as eyes, smile, and mouth size to suit the picture you uploaded.

We have set up a "Stable Diffusion Tutorial Exchange Group". You are welcome to join the group to discuss technical issues and share your results.

Scan the QR code below to add HyperaiXingXing on WeChat (WeChat ID: Hyperai01), and include the note "SD Tutorial Exchange Group" to join the group chat.