Llama 3.2 Is Here, Multimodal and Open Source! Jensen Huang Is the First to Try the AR Glasses, and the Quest 3S Headset Is Remarkably Cheap

If OpenAI's ChatGPT kicked off the "war of a hundred models", Meta's Ray-Ban Meta smart glasses were undoubtedly the fuse that set off the "war of a hundred glasses". Since its debut at the Meta Connect 2023 developer conference in September last year, Ray-Ban Meta has sold over one million units in just a few months. Not only did Mark Zuckerberg call it "amazing", it also prompted major companies at home and abroad, such as Google, Samsung, and ByteDance, to enter the market.

A year later, Meta has again launched a new smart glasses product, Orion, at the Connect developer conference. These are the company's first holographic AR glasses. Zuckerberg called them the world's most advanced glasses and said they will change the way people interact with the world.

In addition, Meta usually launches new Quest headsets at the Connect conference, and this year is no exception. Quest 3 users have generally complained that the price is too high. This year, Meta launched a new headset, Quest 3S, which offers performance similar to Quest 3 at a more affordable price. The headset is described as the best mixed reality device on the market today, providing an excellent hyper-reality experience.

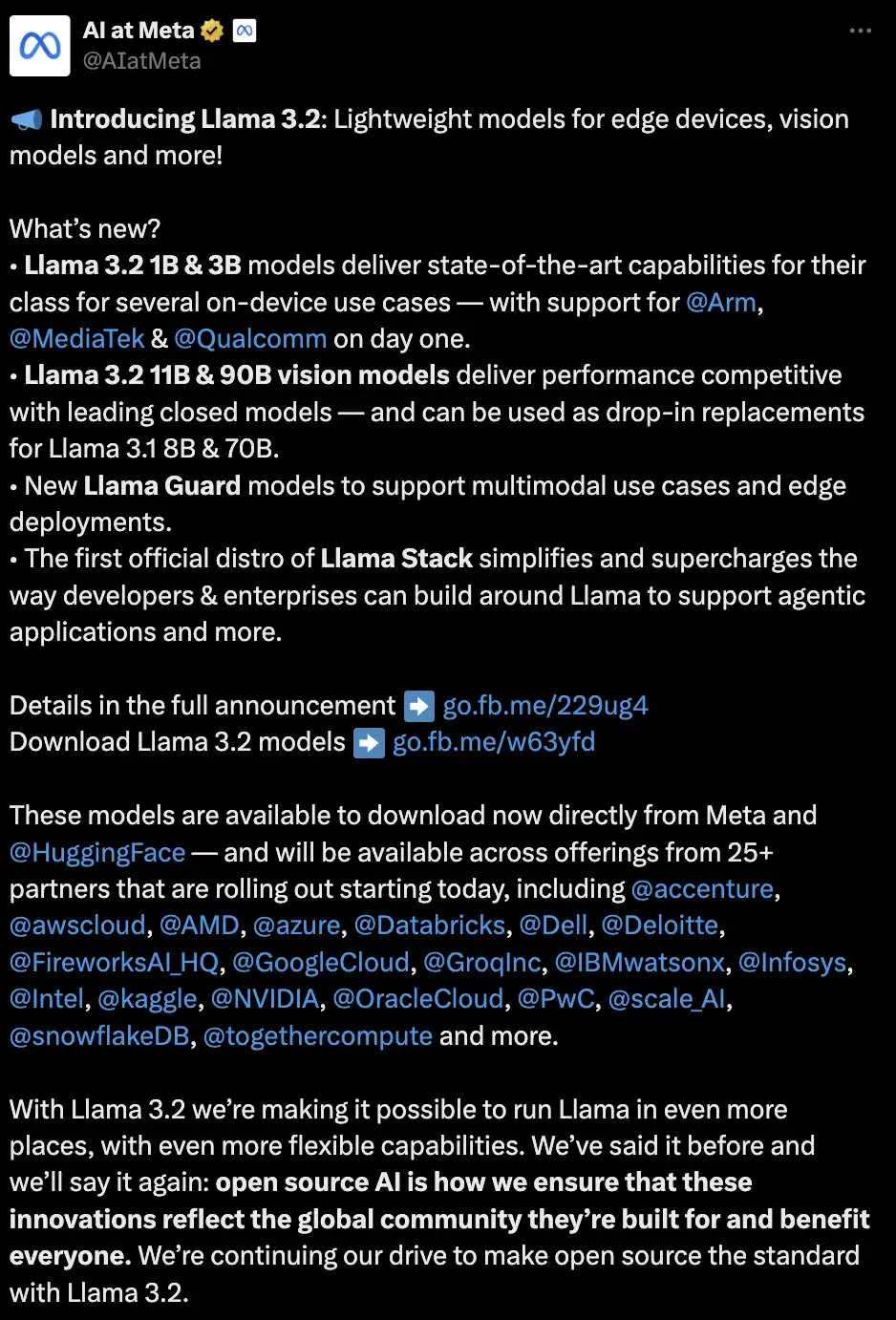

Of course, as one of Meta's most closely watched core technologies, the Llama model also received a major update at this event. The multimodal Llama 3.2 can understand both images and text, and large models can now run on mobile phones, adding further benefits to Meta's open source ecosystem.

Meta's AR dream comes true, Orion glasses open a new era of interaction

In April this year, to celebrate the 10th anniversary of Reality Labs, Meta published an article outlining the development history of the department and previewed its next core product, the first AR glasses. Meta said that Quest 3 allows users to interact immersively with digital content in the physical world, Ray-Ban Meta glasses allow users to enjoy the practicality and entertainment of Meta AI, and the new AR glasses will combine the advantages of the two to achieve the best technology fusion.

As Meta Connect 2024 approached, speculation grew that these AR glasses would be released at the conference. As expected, Meta today unveiled its first AR glasses, Orion.

Zuckerberg said that Orion is meant to change the way people interact with the world. He described it as the most advanced pair of AR glasses ever made, the product of 10 years of development. It combines a cutting-edge AR display, custom silicon, silicon carbide lenses, intricate waveguides, uLED projectors, and more. Together, these technologies let powerful AR experiences run on a pair of glasses that consumes a fraction of the power and weighs a fraction as much as an MR headset.

In simple terms, these AR glasses use a new display architecture. Pico projectors cast light into the waveguides, and holograms of different depths and sizes are then projected into the world in front of the user. The glasses are powered by a battery in the temple. For example, if the user wants to meet up with a friend who is far away, the friend appears in the living room as a hologram, as if really there.

It is worth mentioning that Orion has seven miniature cameras and sensors embedded in the rim of the frame. Combined with voice, eye, and hand tracking and paired with an EMG wristband, they let users swipe, click, and scroll with ease. For example, to take a photo during a morning run, just tap your fingertips together and Orion will capture the moment. You can also summon entertainment such as card games, chess, or holographic table tennis with a tap of your fingers.

Jensen Huang, founder and CEO of NVIDIA, couldn't wait to try it out!

The best mixed reality device, Quest 3S, is great value for money

Following last year's Connect announcement of Meta Quest 3, billed as the world's first mixed reality headset, Meta this year launched a streamlined version: Quest 3S.

Zuckerberg said, "Quest 3S is not only great value for money, it's also the best mixed reality device you can buy on the market!" It shares the core features of Quest 3, namely high-resolution, full-color mixed reality, letting users move seamlessly between the physical and virtual worlds and take part in activities such as entertainment, fitness, games, and social experiences. The difference is that Quest 3S has improved lenses and an optimized technology stack, effective resolution, and latency, and its mixed reality hand tracking software performs better.

The magic of mixed reality is that it brings real-world space into the metaverse, giving users a sense of immersion and letting them switch freely between different experiences. For example, users can choose theater mode to enlarge the screen to the size of a movie theater for the best viewing experience.

In a live demonstration, Zuckerberg showed immersive experiences ranging from 2D mobile apps to a remote desktop PC, where users can open a screen and place it anywhere to form a huge virtual display. He mentioned that Meta has been working with Microsoft to upgrade the Remote Desktop feature, and that it may soon be able to connect to Windows 11 computers.

Meta Quest 3S offers a hyper-realistic experience at a remarkably low price, starting at just $299.99, and is available October 15th. Users who buy Quest 3S this fall will also receive the VR game "Batman: Arkham Shadow".

The first Llama model to support visual tasks, multimodal and open source

In addition to the major hardware releases, Zuckerberg also brought an update to Llama, launching the Llama 3.2 model. As the first Llama model to support vision tasks, Llama 3.2 can understand both images and text. It includes small and medium-sized vision models (11B and 90B) and lightweight text-only models (1B and 3B) suited to edge and mobile devices, all available in pre-trained and instruction-tuned versions. It is worth mentioning that these models of different sizes can be tried out through Meta AI.

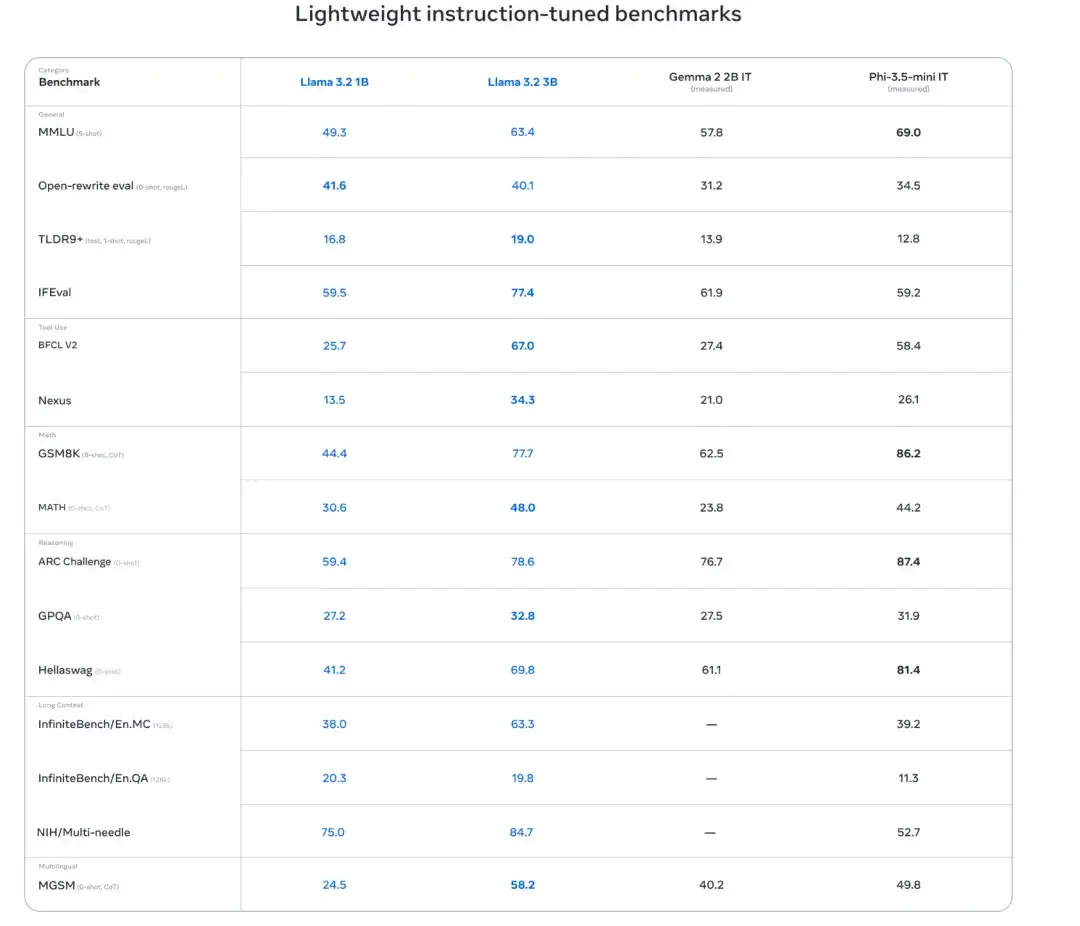

Model performance evaluation

Researchers evaluated model performance on more than 150 benchmark datasets spanning multiple languages. The Llama 3.2 1B and 3B models support a context length of 128K tokens. On tasks such as instruction following, summarization, prompt rewriting, and tool use, the 3B model outperforms the Gemma 2 2.6B and Phi 3.5-mini models, while the 1B model is competitive with the Gemma model.

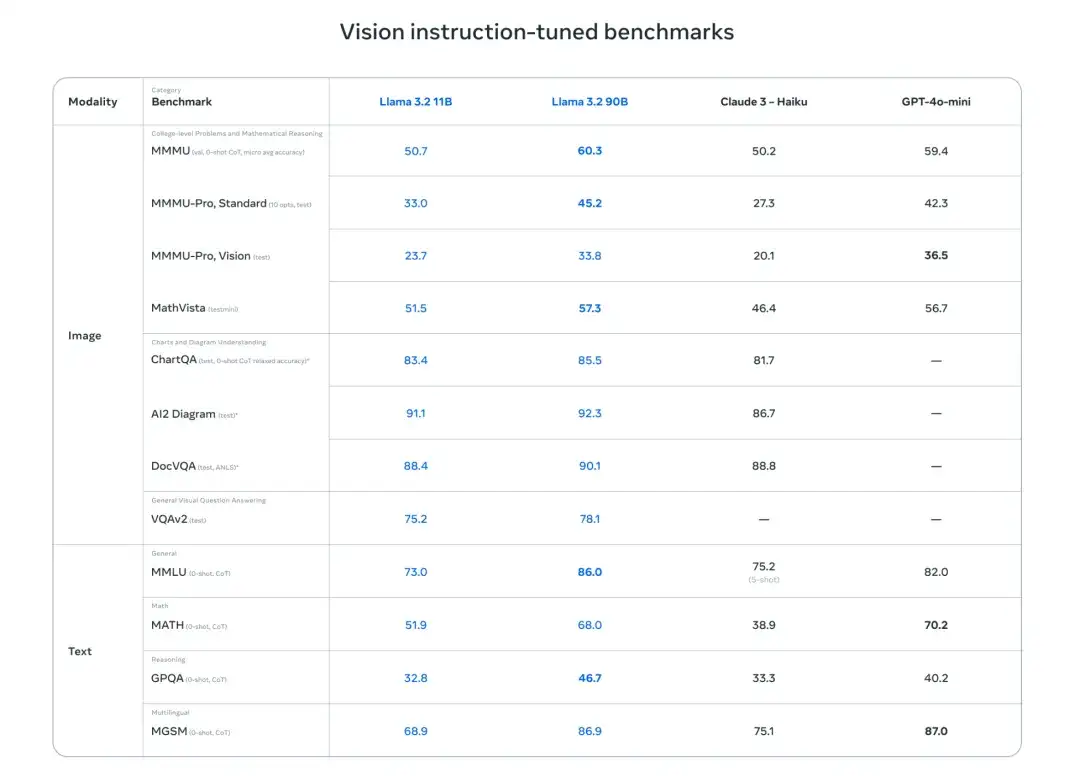

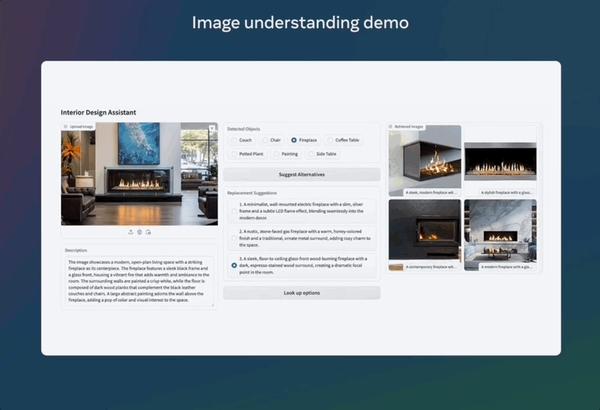

In addition, the researchers evaluated the performance of the model on image understanding and visual reasoning benchmarks. The results showed that the Llama 3.2 11B and 90B vision models can seamlessly replace the corresponding text models, while surpassing closed-source models such as Claude 3 Haiku on image understanding tasks.

Lightweight model training

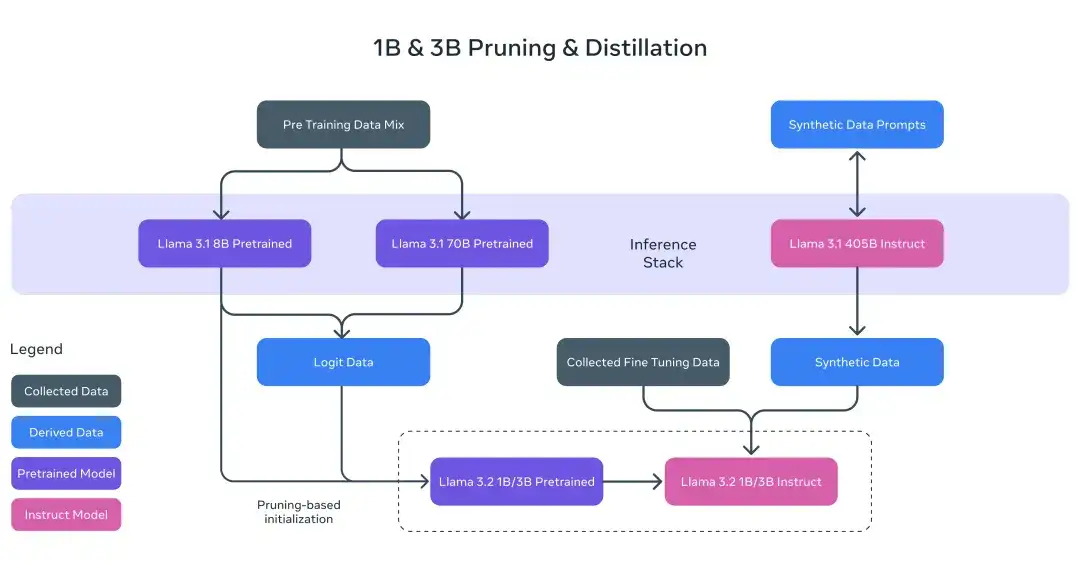

For the Llama 3.2 1B and 3B models, the researchers used pruning and distillation methods to extract efficient 1B/3B models from the 8B/70B models.

Specifically, the researchers incorporated logits from the Llama 3.1 8B and 70B models into the pre-training stage, using the outputs (logits) of these larger models as token-level targets. After structured pruning, knowledge distillation was used to recover the model's performance.
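To make the idea of token-level targets concrete, here is a minimal sketch of a distillation loss in plain Python. It is not Meta's training code: the function names, the temperature value, and the choice of KL divergence as the distance are all illustrative assumptions. It only shows how a smaller student model can be scored against the per-token output distribution of a larger teacher.

```python
import math

def softmax(logits, temperature=1.0):
    """Convert raw logits into a probability distribution."""
    scaled = [x / temperature for x in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def distillation_loss(teacher_logits, student_logits, temperature=2.0):
    """Mean per-token KL(teacher || student) over a sequence.

    Both arguments are lists of per-token logit vectors over the same
    vocabulary. The temperature (an assumed hyperparameter) softens both
    distributions before they are compared.
    """
    total = 0.0
    for t_row, s_row in zip(teacher_logits, student_logits):
        p = softmax(t_row, temperature)  # teacher distribution = target
        q = softmax(s_row, temperature)  # student distribution
        total += sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)
    return total / len(teacher_logits)

# Toy example: two token positions, vocabulary of three tokens.
teacher = [[2.0, 0.5, -1.0], [0.1, 1.2, 0.3]]
student = [[1.0, 1.0, 0.0], [0.0, 0.5, 0.5]]
loss_same = distillation_loss(teacher, teacher)
loss_diff = distillation_loss(teacher, student)
```

The loss is zero when the student reproduces the teacher's distribution exactly and grows as the two diverge, which is what lets the teacher's logits act as a training signal.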

Visual model training

The training of the Llama 3.2 vision models is divided into multiple stages. Starting from the pre-trained Llama 3.1 text models, the researchers first added image adapters and encoders and pre-trained on large-scale noisy (image, text) pair data. They then trained on medium-scale, high-quality in-domain and knowledge-enhanced (image, text) pair data.

In the later stages of training, the researchers took an approach similar to the one used for the text models, running several rounds of alignment with supervised fine-tuning, rejection sampling, and direct preference optimization. They used Llama 3.1 models to generate synthetic data by filtering and augmenting questions and answers for in-domain images, and used a reward model to rank all candidate answers to ensure high-quality fine-tuning data.
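The ranking step can be illustrated with a small sketch. Everything here is hypothetical: `select_best_answers` and `toy_reward` are made-up names, and the word-overlap score merely stands in for a learned reward model. The point is only the shape of the procedure: score every candidate answer, drop the weak ones, and keep the top-ranked ones as fine-tuning data.

```python
def select_best_answers(question, candidates, reward_fn, keep=1, min_score=0.0):
    """Rank candidate answers by reward and keep the best ones.

    reward_fn(question, answer) -> float is an assumed interface for a
    reward model; candidates scoring below min_score are rejected.
    """
    scored = [(reward_fn(question, c), c) for c in candidates]
    scored = [(s, c) for s, c in scored if s >= min_score]
    scored.sort(key=lambda sc: sc[0], reverse=True)
    return [c for _, c in scored[:keep]]

def toy_reward(question, answer):
    """Placeholder reward: fraction of question words echoed in the answer."""
    q_words = set(question.lower().split())
    a_words = set(answer.lower().split())
    return len(q_words & a_words) / max(len(q_words), 1)

question = "what color is the cat"
candidates = ["the cat is black in color", "I do not know", "a dog"]
best = select_best_answers(question, candidates, toy_reward, keep=2, min_score=0.5)
```

In a real pipeline the reward function would be a trained model and the candidates would be sampled from the model being aligned; the filtering logic stays the same.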

In addition, the researchers added safety mitigation data to produce a model that is both highly safe and helpful. The result is the Llama 3.2 model, which can understand both images and text, marking another important step for Llama on the road to richer agentic capabilities.

Locally deployed models are fast and private

The researchers pointed out that running the Llama 3.2 model locally has two major advantages. The first is response speed: since all processing is done locally, prompts and responses can feel nearly instantaneous.

The second is privacy and security: running the model locally means data such as messages and calendar entries is not sent to the cloud, which makes the application more private overall. With local processing, the application can clearly control which queries stay on the device and which need to be handled by a larger model in the cloud.
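A routing policy of this kind might look like the sketch below. The `Query` type, the `route` function, the word-count threshold, and the two destination labels are all illustrative assumptions rather than anything described by Meta. The sketch simply keeps privacy-sensitive or short queries on the device and sends long, non-sensitive ones to a larger cloud model.

```python
from dataclasses import dataclass

@dataclass
class Query:
    text: str
    touches_private_data: bool  # e.g. reads messages or calendar entries

def route(query, max_local_words=200):
    """Decide where a query should run (assumed policy, for illustration).

    Private data never leaves the device; otherwise short prompts stay
    local and long ones go to a larger model in the cloud.
    """
    if query.touches_private_data:
        return "on_device"
    if len(query.text.split()) <= max_local_words:
        return "on_device"
    return "cloud"

private_query = Query("summarize my last five messages", True)
long_query = Query("word " * 500, False)
short_query = Query("translate hello into French", False)
destinations = [route(private_query), route(long_query), route(short_query)]
```

A production router would likely weigh more signals, such as task difficulty or battery level, but an explicit rule like this is what gives the application clear control over which queries remain on the device.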

Staying true to open source: simpler, more efficient model deployment

Meta has always stayed true to its open source commitment. To greatly simplify how developers work with Llama models across different environments (single-node, on-premises, cloud, and on-device), Meta announced that it will share the first official Llama Stack distributions, enabling one-click deployment of retrieval-augmented generation (RAG) and tool-enabled applications with integrated safety features.

According to the official announcement, the Llama 3.2 models will be available for download at http://llama.com and on Hugging Face, and will be available for immediate development on partner platforms including AMD, AWS, Databricks, Dell, Google Cloud, Groq, IBM, Intel, Microsoft Azure, NVIDIA, Oracle Cloud, Snowflake, and others.

As a consistent advocate of open source, Zuckerberg once said in a conversation with Jensen Huang that "Meta has benefited from the open source ecosystem and has saved billions of dollars." Llama is undoubtedly an important tool for building that ecosystem. By extending to vision tasks and becoming multimodal, Llama 3.2 is bound to add further benefits to Meta's open source ecosystem.