Major Breakthrough in Chinese Optical Chips! Tsinghua Team Uses Neural Network to Create the First Fully Forward Intelligent Optical Computing Training Architecture

Since 2012, the computing power required for AI model training has doubled every 3-4 months, which amounts to growth of as much as 10 times each year. This brings a challenge: how can we make AI faster and more efficient? The answer may lie in the world of light.
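The two growth figures quoted above can be checked against each other with a line of arithmetic. The sketch below converts a doubling period in months into a yearly multiplier; the function name `annual_growth_factor` is only illustrative and does not come from the source.

```python
def annual_growth_factor(doubling_months: float) -> float:
    """Yearly multiplier implied by a given doubling period in months."""
    return 2 ** (12 / doubling_months)


# Compare a few doubling periods inside the quoted 3-4 month range.
for months in (3.0, 3.6, 4.0):
    factor = annual_growth_factor(months)
    print(f"doubling every {months} months -> x{factor:.1f} per year")
```

A doubling period of 3 months implies 16 times per year and 4 months implies 8 times per year, so a figure of about 10 times per year sits inside the quoted range.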

Optical computing, a field full of potential, uses the speed and physical properties of light to bring new levels of speed and energy efficiency to machine learning applications. However, to achieve this goal, we must solve a difficult problem: how to train these optical models effectively. In the past, people relied on digital computers to simulate and train optical systems, but this approach was severely limited by the need for precise models of the optical system and for large amounts of training data. Moreover, as system complexity increases, these models become increasingly difficult to build and maintain.

Recently, the research team led by Academician Dai Qionghai and Professor Fang Lu of Tsinghua University exploited the symmetry of photon propagation, making both the forward and the backward propagation of neural network training equivalent to the forward propagation of light, and developed a fully forward mode (FFM) learning method. With FFM learning, researchers can not only train deep optical neural networks (ONNs) with millions of parameters, but also achieve ultra-sensitive perception and efficient all-optical processing, thereby alleviating the limitations that optical system modeling places on AI.

The research, titled "Fully forward mode training for optical neural networks", was published in Nature.

Research highlights:

* Machine learning operations are carried out efficiently in parallel on site, alleviating the limitations of numerical modeling

* The work demonstrates optical systems with state-of-the-art performance for a given network size, and FFM learning shows that training the deepest optical neural networks, with millions of parameters, can achieve accuracy comparable to ideal models

* FFM learning not only makes the learning process orders of magnitude faster, but can also advance the development of deep neural networks, ultra-sensitive perception, and topological photonics

Paper address:

https://doi.org/10.1038/s41586-024-07687-4

The open source project "awesome-ai4s" brings together more than 100 AI4S paper interpretations and provides massive data sets and tools:

https://github.com/hyperai/awesome-ai4s

Seven datasets, with each sample used to create a zero-phase input complex field in the multi-layer classification experiments

A total of 7 datasets were used in this study. In the multi-layer classification experiments, each sample was used to create an input complex field with the phase set to zero:

* MNIST dataset. The dataset is a collection of handwritten digits in 10 categories, consisting of 60,000 training samples and 10,000 test samples.

* Fashion-MNIST dataset. The dataset contains 10 different categories of fashion products and also consists of a training set of 60,000 samples and a test set of 10,000 samples.

* CIFAR-10 dataset. This dataset is a subset of the 80 Million Tiny Images dataset, containing 50,000 training images and 10,000 test images.

* ImageNet dataset. The dataset is an image database organized according to the WordNet hierarchy, in which each node is depicted by hundreds to thousands of images, with about 1.2 million images for training and 50,000 for testing.

* MWD dataset. The dataset contains images of outdoor scenes under 4 different weather conditions (sunrise, sunny, rainy, and cloudy). It contains a total of 1,125 samples, 800 of which are used for training and 325 for testing.

* Iris flower dataset. The dataset consists of 3 Iris species, each with 50 samples. Each original sample contains four entries describing the shape of the Iris flower. In the PIC experiment, each entry is replicated to create four identical data points, resulting in a total of 16 channels of input data.

* Chrome target dataset. The dataset consists of chrome-on-glass plates with different regions (reflective and semi-transparent). The reflective regions represent the physical scene itself (letter targets T, H and U). During training, a single reflective region was used and moved within the same plane to generate 9 different training scenes.
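As a rough illustration of the input encoding described above, the sketch below builds a zero-phase complex field from pixel values and replicates one Iris sample into 16 channels. The function names, the normalization (amplitude = pixel value / 255), and the channel ordering are assumptions made for illustration; the source only states that the phase is set to zero and that each Iris entry is replicated four times.

```python
def to_zero_phase_field(pixels, max_value=255):
    """Map grayscale pixel values to complex amplitudes with zero phase.

    Assumption: amplitude = pixel / max_value. A zero phase means the
    imaginary part of every element is 0.
    """
    return [[complex(p / max_value, 0.0) for p in row] for row in pixels]


def replicate_iris_sample(features, copies=4):
    """Repeat each of the four Iris entries `copies` times (4 x 4 = 16 channels).

    Assumption: the copies of one entry are placed next to each other.
    """
    return [value for value in features for _ in range(copies)]


image = [[0, 128], [255, 64]]                  # toy 2x2 grayscale image
field = to_zero_phase_field(image)
channels = replicate_iris_sample([5.1, 3.5, 1.4, 0.2])
print(field[1][0], len(channels))
```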

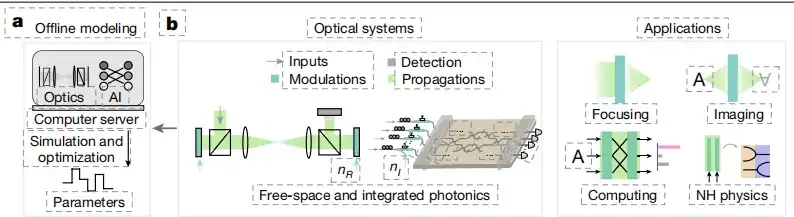

Reparameterize the optical system and build a differentiable embedded photonic neural network FFM

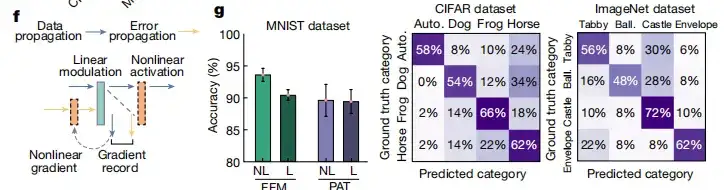

Traditionally, optics-related AI is designed through offline modeling and optimization, as shown in Figure a below, which limits design efficiency and system performance. In addition, to achieve various functions, general optical systems rely on the tunability of the refractive index. As shown in Figure b below, the optical system is divided into two different kinds of regions: modulation regions (dark green) and propagation regions (light green).

The study found that an optical system governed by Maxwell's equations can be reparameterized into a differentiable embedded photonic neural network, which matters because gradient descent training has been a key driver of the development of neural networks. The machine learning principle of FFM in this study is shown in Figure c below. Since parts of the optical system can be mapped onto a neural network and connected to neurons, a differentiable on-site neural network can be constructed between input and output.

Next, the study used spatial symmetry and reciprocity so that data and error calculations share the same forward physical propagation and measurement, and calculated on-site gradients to update the refractive index in the design area (upper right and lower left areas of Figure c). Through on-site gradient descent, the optical system gradually converged (lower right area of Figure c).
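The idea that a symmetric system lets the error travel through the same forward path as the data can be sketched numerically. The toy model below is an assumption for illustration, not the authors' implementation: a phase-only modulation region followed by a fixed propagation region represented by a symmetric complex matrix `W` (so `W` equals its transpose), with a squared-error loss on output intensities. All function names are hypothetical. The gradient is obtained from two forward passes through `W`, one for the data and one for an error field built from the measured output, and is then checked against finite differences.

```python
import cmath
import random


def random_symmetric_matrix(n, rng):
    """Toy propagation region: a complex matrix with W[i][j] == W[j][i]."""
    W = [[0j] * n for _ in range(n)]
    for i in range(n):
        for j in range(i, n):
            W[i][j] = W[j][i] = complex(rng.uniform(-1, 1), rng.uniform(-1, 1))
    return W


def propagate(W, field):
    """Forward propagation through the fixed medium: y = W @ field."""
    return [sum(w * f for w, f in zip(row, field)) for row in W]


def modulate(phases, field):
    """Modulation region: multiply each element by exp(i * phase)."""
    return [cmath.exp(1j * p) * f for p, f in zip(phases, field)]


def loss(W, phases, x, target):
    """Squared error between measured output intensities and the target."""
    y = propagate(W, modulate(phases, x))
    return sum((abs(v) ** 2 - t) ** 2 for v, t in zip(y, target))


def ffm_style_gradient(W, phases, x, target):
    """Gradient of the loss w.r.t. the phases using forward passes only."""
    u = modulate(phases, x)
    y = propagate(W, u)                       # data pass
    # Error field built from the measured output (conjugated, scaled by error).
    e = [2 * (abs(v) ** 2 - t) * v.conjugate() for v, t in zip(y, target)]
    b = propagate(W, e)                       # error pass reuses W since W == W^T
    return [-2 * (uj * bj).imag for uj, bj in zip(u, b)]


def finite_difference_gradient(W, phases, x, target, eps=1e-6):
    """Reference gradient by central differences, for checking only."""
    grad = []
    for j in range(len(phases)):
        up, down = phases[:], phases[:]
        up[j] += eps
        down[j] -= eps
        grad.append((loss(W, up, x, target) - loss(W, down, x, target)) / (2 * eps))
    return grad


rng = random.Random(0)
n = 4
W = random_symmetric_matrix(n, rng)
x = [complex(rng.random(), 0.0) for _ in range(n)]    # zero-phase input field
target = [1.0, 0.0, 0.0, 0.0]
phases = [rng.uniform(-3.0, 3.0) for _ in range(n)]

analytic = ffm_style_gradient(W, phases, x, target)
numeric = finite_difference_gradient(W, phases, x, target)
max_gap = max(abs(a - b) for a, b in zip(analytic, numeric))
print(max_gap)
```

In this sketch the symmetry of `W` is what allows the error pass to use `propagate(W, e)` instead of a transposed operator. The resulting gradient could then drive an ordinary gradient descent update of the phases.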

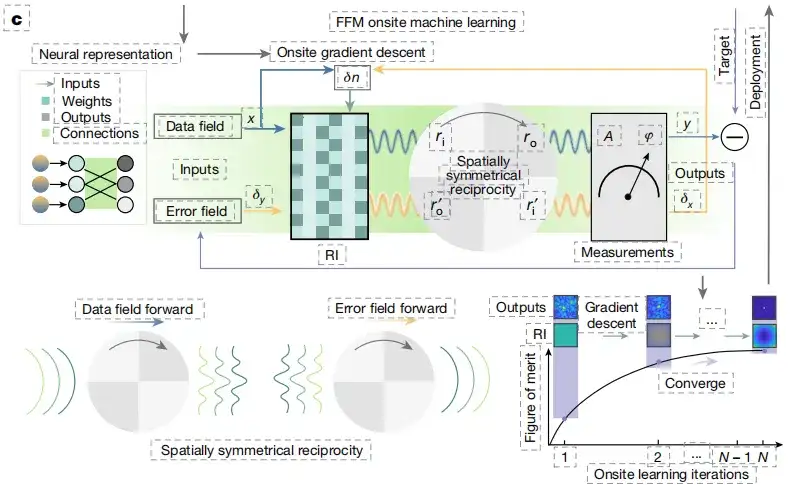

Training a single-layer optical neural network for object classification, FFM learning achieves comparable accuracy to the ideal model

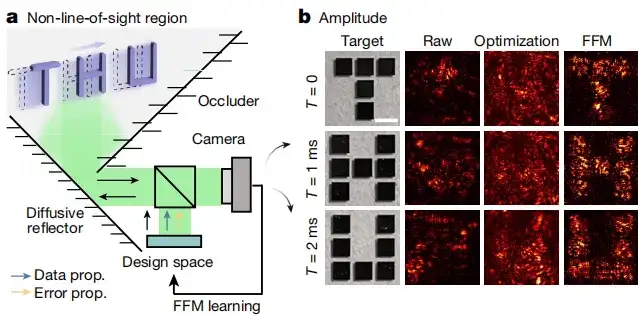

To demonstrate the effectiveness of FFM learning, the study first trained a single-layer optical neural network for object classification on a benchmark dataset. It then showed the self-training process of a deep free-space optical neural network using FFM learning (Figure 1a) and visualized the training results on the MNIST dataset (Figure 1b).

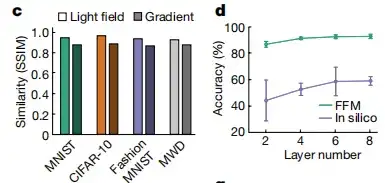

As shown in Figure c below, the structural similarity index (SSIM) between the experimental and theoretical light fields exceeds 0.97, which indicates a high level of similarity. In Figure d below, the study further analyzed multi-layer optical neural networks for classification of the Fashion-MNIST dataset. By gradually increasing the number of layers from 2 to 8, the study found that with FFM learning the accuracy of the neural network can be raised to 86.5%, 91.0%, 92.3% and 92.5%, which is close to the accuracy of theoretical computer simulation.
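For readers unfamiliar with SSIM, the sketch below computes a simplified, single-window version of the index over two intensity arrays. This is an assumption-laden illustration: standard SSIM implementations average the index over local sliding windows, and the source does not state which settings the study used. The constants 0.01 and 0.03 are the conventional defaults; the function name `global_ssim` is hypothetical.

```python
def global_ssim(a, b, dynamic_range=1.0):
    """Single-window SSIM between two equally sized flat lists of intensities."""
    n = len(a)
    mu_a = sum(a) / n
    mu_b = sum(b) / n
    var_a = sum((v - mu_a) ** 2 for v in a) / n
    var_b = sum((v - mu_b) ** 2 for v in b) / n
    cov = sum((p - mu_a) * (q - mu_b) for p, q in zip(a, b)) / n
    c1 = (0.01 * dynamic_range) ** 2      # stabilizes the luminance term
    c2 = (0.03 * dynamic_range) ** 2      # stabilizes the contrast/structure term
    numerator = (2 * mu_a * mu_b + c1) * (2 * cov + c2)
    denominator = (mu_a ** 2 + mu_b ** 2 + c1) * (var_a + var_b + c2)
    return numerator / denominator


theory = [0.1, 0.5, 0.9, 0.3]            # toy "theoretical" light field
experiment = [0.2, 0.4, 0.8, 0.5]        # toy "measured" light field
same = global_ssim(theory, theory)
different = global_ssim(theory, experiment)
print(same, different)
```

Identical fields give an index of 1, and any mismatch lowers it, which is why a value above 0.97 indicates close agreement.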

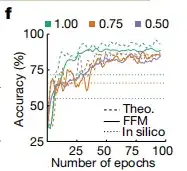

Based on the above results, the study further proposed nonlinear FFM learning, as shown in Figure f below. During data propagation, the output is nonlinearly activated before entering the next layer, so the input to the nonlinear activation can be recorded and the corresponding gradient calculated. Since only forward propagation is required, the nonlinear training paradigm proposed with FFM applies to general measurable nonlinear functions, and therefore to both optoelectronic and all-optical nonlinear optical neural networks. In addition, the classification accuracy of the nonlinear optical neural network increased from 90.4% to 93.0%, as shown in Figure g below.
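The role of the recorded activation inputs can be illustrated with a small chain-rule sketch. It assumes, purely for illustration, that the nonlinearity is a black-box function that can be measured at chosen inputs, so its local slope at each recorded input is estimated by central differences. `math.tanh` is only a stand-in for a physical nonlinearity, and all function names are hypothetical.

```python
import math


def numerical_derivative(f, z, eps=1e-5):
    """Slope of a measurable function f at z, estimated by central differences."""
    return (f(z + eps) - f(z - eps)) / (2 * eps)


def activate_and_record(f, pre_activations):
    """Apply the nonlinearity and keep its inputs for the later gradient step."""
    recorded = list(pre_activations)
    return [f(z) for z in recorded], recorded


def gradient_through_activation(f, recorded, upstream_grad):
    """Chain rule: scale each upstream gradient by the slope at the recorded input."""
    return [g * numerical_derivative(f, z) for g, z in zip(upstream_grad, recorded)]


outputs, recorded = activate_and_record(math.tanh, [-1.0, 0.0, 0.5])
grads = gradient_through_activation(math.tanh, recorded, [1.0, 1.0, 1.0])
print(grads)
```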

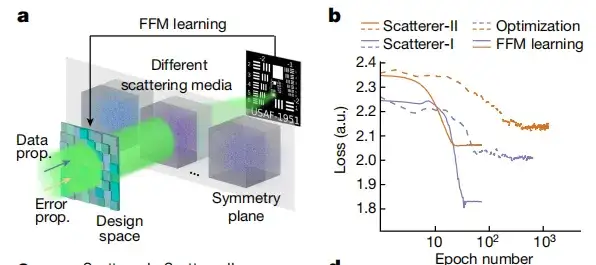

FFM learning simplifies the design of complex photonic systems, enabling all-optical scene reconstruction and analysis of dynamically hidden objects

The study also found that FFM learning overcomes the limitations imposed by offline numerical modeling and simplifies the design of complex photonic systems. For example, the study presents a point-scanning scattering imaging system in Figures a and b below, and compares FFM learning with advanced optimization methods, using particle swarm optimization (PSO) as the reference. The results show that gradient-based FFM learning is more efficient: it converges after 25 design iterations in both experiments, with converged loss values of 1.84 and 2.07 for the two scattering types, respectively. In contrast, the PSO method requires at least 400 design iterations to converge, with final loss values of 2.01 and 2.15.
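For readers unfamiliar with PSO, a minimal textbook-style version is sketched below. It is not the configuration used in the study: the toy loss, the swarm size, and the coefficients (inertia 0.7, acceleration 1.5) are common illustrative defaults chosen here as assumptions. PSO is gradient-free, so every iteration evaluates the loss once per particle.

```python
import random


def toy_loss(x):
    """Hypothetical stand-in for a measured design loss (minimum at all ones)."""
    return sum((v - 1.0) ** 2 for v in x)


def particle_swarm(loss, dim, n_particles=20, iterations=50, seed=0,
                   inertia=0.7, c_personal=1.5, c_global=1.5):
    """Minimize `loss` with a basic particle swarm; returns best point and history."""
    rng = random.Random(seed)
    pos = [[rng.uniform(-5, 5) for _ in range(dim)] for _ in range(n_particles)]
    vel = [[0.0] * dim for _ in range(n_particles)]
    best_pos = [p[:] for p in pos]                 # personal bests
    best_val = [loss(p) for p in pos]
    g = min(range(n_particles), key=best_val.__getitem__)
    g_pos, g_val = best_pos[g][:], best_val[g]     # global best
    history = [g_val]
    for _ in range(iterations):
        for i in range(n_particles):
            for d in range(dim):
                r1, r2 = rng.random(), rng.random()
                # Pull each particle toward its own best and the swarm's best.
                vel[i][d] = (inertia * vel[i][d]
                             + c_personal * r1 * (best_pos[i][d] - pos[i][d])
                             + c_global * r2 * (g_pos[d] - pos[i][d]))
                pos[i][d] += vel[i][d]
            val = loss(pos[i])
            if val < best_val[i]:
                best_pos[i], best_val[i] = pos[i][:], val
                if val < g_val:
                    g_pos, g_val = pos[i][:], val
        history.append(g_val)
    return g_pos, history


best, history = particle_swarm(toy_loss, dim=3)
print(history[0], history[-1])
```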

As shown in Figure c below, the study analyzed the evolution of the FFM self-design, showing that the initially randomly distributed intensity profile gradually converged to a tight point. In Figure d below, the study further compared the full width at half maximum (FWHM) and peak signal-to-noise ratio (PSNR) metrics of the focus optimized using FFM and PSO. In the case of FFM, the average FWHM was 81.2 microns and the average PSNR was 8.46 dB.
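The FWHM metric mentioned above can be computed from a sampled intensity profile as sketched below. This is a generic illustration that assumes a single-peaked 1-D profile and uses linear interpolation at the half-maximum crossings; the function name `fwhm` and the Gaussian test profile are hypothetical and are not data from the study.

```python
import math


def fwhm(positions, profile):
    """Full width at half maximum of a single-peaked 1-D profile."""
    peak = max(profile)
    half = peak / 2
    i_peak = profile.index(peak)

    def crossing(i_inside, i_outside):
        # Interpolate between the last sample >= half and the first one below it.
        x0, y0 = positions[i_inside], profile[i_inside]
        x1, y1 = positions[i_outside], profile[i_outside]
        return x0 + (half - y0) * (x1 - x0) / (y1 - y0)

    left = i_peak
    while left > 0 and profile[left - 1] >= half:
        left -= 1
    right = i_peak
    while right < len(profile) - 1 and profile[right + 1] >= half:
        right += 1
    if left == 0 or right == len(profile) - 1:
        raise ValueError("profile does not fall below half maximum on both sides")
    return crossing(right, right + 1) - crossing(left, left - 1)


# Toy Gaussian focus with sigma = 10 (arbitrary units), sampled every 1 unit.
sigma = 10.0
xs = [float(x) for x in range(-50, 51)]
ys = [math.exp(-(x ** 2) / (2 * sigma ** 2)) for x in xs]
width = fwhm(xs, ys)
print(width)
```

For a Gaussian the exact value is 2·sqrt(2·ln 2)·sigma, so the interpolated result can be compared against that closed form.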

The study also found that in situ FFM learning provides a valuable tool for designing unconventional imaging modalities, especially where accurate modeling is not possible, such as non-line-of-sight (NLOS) scenarios. As shown in Figure a below, FFM learning achieves all-optical scene reconstruction and analysis of dynamic hidden objects. Figure b below shows NLOS imaging: the wavefront designed by FFM recovers the shapes of all 3 letters, and the structural similarity index of each target reaches 1.0.

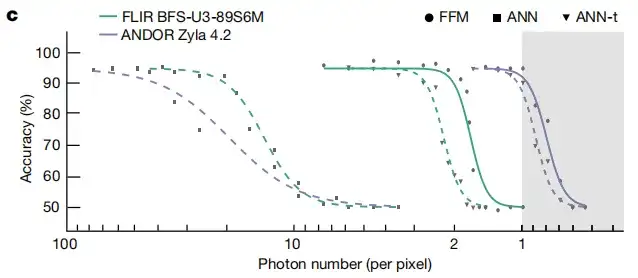

In addition to dynamic imaging, the FFM learning method also allows all-optical classification of hidden objects in NLOS regions. The study compared the classification performance of FFM with that of an artificial neural network (ANN). The results are shown in Figure c below. With sufficient photons, FFM and ANN perform similarly, but FFM requires fewer photons for accurate classification.

FFM learning can be extended to the self-design of integrated photonic systems. The simulation takes about 100 cycles to converge.

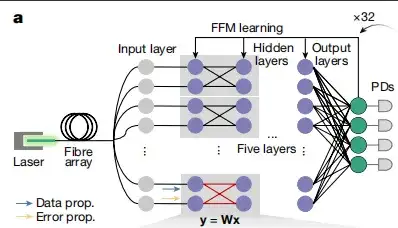

The study found that the FFM learning method can be extended to the self-design of integrated photonic systems. As shown in Figure a below, the study used an integrated neural network composed of symmetric photonic cores connected in series and parallel to implement FFM learning. The results show that the symmetry of the matrix allows the error propagation matrix and the data propagation matrix to be equivalent.

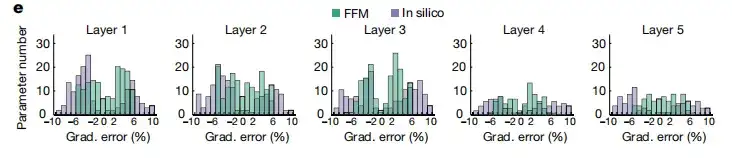

Figure e below visualizes the error of the entire neural network. The results show that at the 80th iteration, FFM learning shows lower gradient errors than computer simulation training.

In the evolution of design accuracy, as shown in Figure f below, both the ideal simulation and the FFM experiment require about 100 cycles to converge, but the FFM method has the best accuracy.

The intelligent optical computing industry chain has gradually matured and may be on the cusp of a new era

It is worth mentioning that, building on the results of this paper, the research team launched the Taichi-II optical training chip. Taichi-II was completed only four months after the previous-generation Taichi, whose results were published in Science. The experimental results of that paper show that Taichi is 1,000 times more energy efficient than Nvidia's H100. This computing capability is based on the distributed, broad-based intelligent optical computing architecture pioneered by the research team.

Paper link:

https://www.science.org/doi/10.1126/science.adl1203

With the launch of the new Taichi series, intelligent optical computing is once again energizing the industry. But from a practical perspective, whether in physical hardware, software development, or applications, intelligent optical computing still needs further optimization and exploration. In fact, the research ecosystem of intelligent optical computing has become increasingly mature, with many institutions and universities such as Peking University, Zhejiang University, and Huazhong University of Science and Technology involved, and related academic exchanges are becoming more frequent. However, in terms of specific application directions, the research teams led by prominent figures at different universities have different focuses. For example:

* Academician Dai Qionghai's team from Tsinghua University: The team authored this paper. The next-generation AI optoelectronic fusion chip they developed has completed experiments in application scenarios such as intelligent image recognition, traffic scenes, and face wake-up. Reportedly, at the same accuracy, the team's results increase computing power by 3,000 times and energy efficiency by 4 million times compared with existing GPUs, and are expected to overturn traditional computing approaches in autonomous driving, robot vision, and mobile devices.

* Dong Jianji's team from Huazhong University of Science and Technology: The optoelectronic hybrid chip developed by the team has been applied to human facial expression recognition.

* Xu Shaofu's team from Shanghai Jiao Tong University: The series of optoelectronic fusion chips developed by the team has been applied in artificial intelligence, signal processing, and other fields, and has completed computational experiments in medical image reconstruction and other areas.

Fortunately, thanks to the unremitting efforts of researchers, China's progress in intelligent optical computing chips is now broadly on par with that of other countries. In the past five years, the number of companies working on optical computing worldwide has grown rapidly from a few to dozens. Outside China, the most representative are Luminous Computing, which is committed to building the world's leading artificial intelligence supercomputer, and Lightmatter, which uses photonic technology to improve the performance and energy efficiency of AI and high-performance computing workloads. Both companies have received more than $100 million in financing.

In China, companies represented by Xizhi Technology and Photonic Arithmetic have also joined the international optical computing competition. These startups all focus on optical computing accelerators based on optical chips, and carry out research and development of software, systems, and proof-of-principle prototypes. In short, China's optical computing industry chain has gradually matured, and the related industry-university-research system is operating effectively. We also expect that in this new era, intelligent optical computing can provide strong momentum for the development of the digital economy and new quality productivity.