23 Institutions Including Stanford and Apple Release the DCLM Benchmark: Can High-Quality Datasets Shake Up the Scaling Laws? The Base Model Performs on Par With Llama3 8B

As AI models continue to draw close attention, the debate over Scaling Laws is becoming increasingly heated.

OpenAI first proposed Scaling Laws in the 2020 paper "Scaling Laws for Neural Language Models," and they have since been regarded as the Moore's Law of large language models. In brief: as model size, dataset size, and the amount of compute used for training (measured in floating-point operations) increase, model performance improves.
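To make "performance improves" concrete: Kaplan et al. (2020) fit test loss as a power law in each factor when the other factors are not the bottleneck, e.g. L(N) = (N_c/N)^α_N for parameters and L(D) = (D_c/D)^α_D for tokens. The sketch below evaluates these two fits using the constants as we recall them from that paper (α_N ≈ 0.076, N_c ≈ 8.8×10^13 non-embedding parameters, α_D ≈ 0.095, D_c ≈ 5.4×10^13 tokens); treat the constants as approximate and check them against the paper before reuse.

```python
# Single-variable power-law fits in the style of Kaplan et al. (2020).
# Constants are approximate values recalled from that paper (assumption: verify before reuse).
ALPHA_N, N_C = 0.076, 8.8e13   # exponent and scale for non-embedding parameters
ALPHA_D, D_C = 0.095, 5.4e13   # exponent and scale for training tokens


def loss_vs_params(n_params: float) -> float:
    """Test loss (nats/token) for a model of n_params trained on ample data."""
    return (N_C / n_params) ** ALPHA_N


def loss_vs_tokens(n_tokens: float) -> float:
    """Test loss (nats/token) for a large model trained on n_tokens with early stopping."""
    return (D_C / n_tokens) ** ALPHA_D


if __name__ == "__main__":
    for n in (1e8, 1e9, 1e10):
        print(f"N={n:.0e}  L(N)={loss_vs_params(n):.3f}")
    for d in (2e12, 15e12):
        print(f"D={d:.0e}  L(D)={loss_vs_tokens(d):.3f}")
```

Because the exponents are small, each 10x increase in parameters multiplies the loss by only about 10^-0.076 ≈ 0.84, which is why steady gains under this picture demand order-of-magnitude increases in scale.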

Under the influence of Scaling Laws, many still believe that "bigger" is the first principle for improving model performance. Large companies with deep pockets, in particular, rely increasingly on large and diverse corpora.

In this regard, Qin Yujia, a Ph.D. from the Department of Computer Science at Tsinghua University, pointed out: "LLaMA 3 tells us a pessimistic reality: the model architecture does not need to change, and increasing the data volume from 2T to 15T tokens can work miracles. On the one hand, this means that base models are, in the long run, an opportunity for large companies; on the other hand, given the diminishing marginal returns of Scaling Laws, if we want the next generation of models to improve as much as GPT-4 did over GPT-3, we may need to clean at least 10 times more data (for example, 150T)."

To address the ever-growing amount of data required for language model training, along with data-quality issues, 23 institutions including the University of Washington, Stanford University, and Apple jointly proposed DataComp for Language Models (DCLM), an experimental testbed. At its core is a new 240T-token candidate pool extracted from Common Crawl. By fixing the training code, DCLM encourages researchers to innovate by proposing new training sets, which is significant for improving the training data of language models.

The research has been published on arXiv under the title "DataComp-LM: In search of the next generation of training sets for language models."

Research highlights

* DCLM benchmark participants can experiment with data curation strategies on models ranging from 412M to 7B parameters

* Model-based filtering is the key to building high-quality training sets. The resulting dataset, DCLM-BASELINE, enables training a 7B-parameter language model from scratch on 2.6T training tokens that reaches 64% 5-shot accuracy on MMLU

* The DCLM base model performs comparably to Mistral-7B-v0.3 and Llama3 8B on MMLU

Paper address:

https://arxiv.org/pdf/2406.11794v3

The open-source project "awesome-ai4s" collects interpretations of more than 100 AI4S papers and provides a large collection of datasets and tools:

https://github.com/hyperai/awesome-ai4s

DCLM benchmark: Multi-scale design from 400M to 7B to meet different computing scale requirements

DCLM is a dataset experimentation platform for improving language models and the first benchmark for language model training data curation.

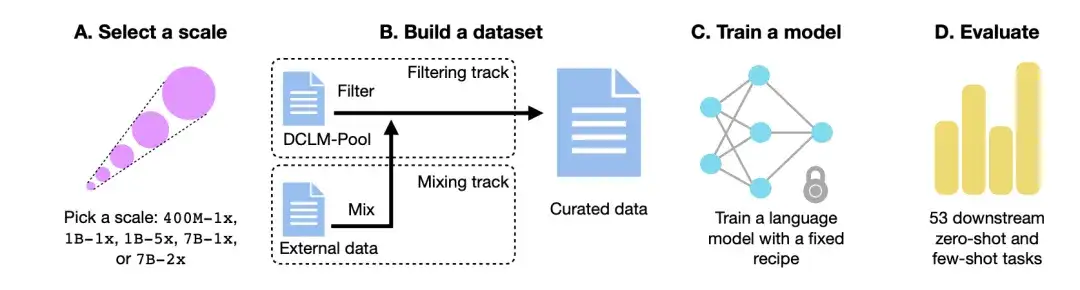

As shown in the figure below, the DCLM workflow consists of four main steps: selecting a scale, building a dataset, training a model, and evaluating the model on 53 downstream tasks.

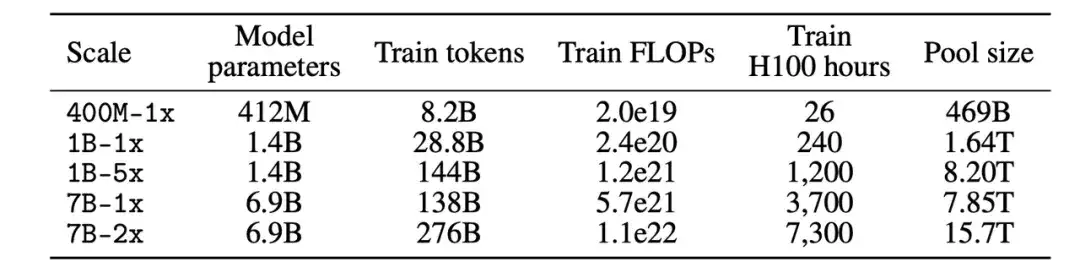

Choosing the compute scale

First, for compute scale, the researchers created five competition levels spanning three orders of magnitude of compute. Each level (400M-1x, 1B-1x, 1B-5x, 7B-1x, and 7B-2x) specifies the number of model parameters (e.g., 7B) and a Chinchilla multiplier (e.g., 1x). The number of training tokens at each level is 20 times the number of parameters, multiplied by the Chinchilla multiplier.
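The token-budget rule above can be tabulated directly. The sketch below uses rounded nominal parameter counts (the actual models differ slightly, e.g. 412M rather than 400M) and adds a compute estimate using the common rule of thumb of about 6 FLOPs per parameter per training token; that estimate is our assumption, not the paper's accounting.

```python
# Nominal DCLM competition levels: (name, parameters, Chinchilla multiplier).
# Parameter counts are rounded nominal sizes; the real models differ slightly.
LEVELS = [
    ("400M-1x", 400e6, 1),
    ("1B-1x", 1e9, 1),
    ("1B-5x", 1e9, 5),
    ("7B-1x", 7e9, 1),
    ("7B-2x", 7e9, 2),
]


def token_budget(n_params: float, multiplier: int) -> float:
    """Training tokens = 20 x parameters x Chinchilla multiplier."""
    return 20 * n_params * multiplier


def approx_train_flops(n_params: float, n_tokens: float) -> float:
    """Rule-of-thumb estimate: ~6 FLOPs per parameter per training token."""
    return 6 * n_params * n_tokens


if __name__ == "__main__":
    for name, n, m in LEVELS:
        d = token_budget(n, m)
        print(f"{name:8s} tokens={d / 1e9:5.0f}B  compute~{approx_train_flops(n, d):.1e} FLOPs")
```

With these nominal sizes the smallest level trains on about 8B tokens and the largest on about 280B, and the estimated compute grows from roughly 1.9e19 to 1.2e22 FLOPs, a spread approaching three orders of magnitude.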

Building a dataset

Second, once the scale is chosen, participants build a dataset either by filtering data or by mixing data from different sources.

In the filtering track, the researchers extracted a standardized corpus of 240T tokens from Common Crawl, an unfiltered web crawl, to build DCLM-Pool, and divided it into five data pools matched to the compute scales. Participants propose algorithms that select training data from the pool.

In the mixing track, participants may freely combine data from multiple sources, for example documents from DCLM-Pool, custom-crawled data, Stack Overflow, and Wikipedia.
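A mixing-track submission ultimately has to decide how much of each source goes into the training set. The sketch below is one simple way to express that, assuming per-source sampling weights; the source names, documents, and weights are hypothetical, and DCLM does not prescribe this procedure.

```python
import random


def mix_sources(sources, weights, n_docs, seed=0):
    """Draw n_docs documents, picking each one's source with probability proportional to its weight.

    sources: dict mapping a source name to a list of documents
    weights: dict mapping a source name to a non-negative mixing weight
    Returns a list of (source_name, document) pairs.
    """
    rng = random.Random(seed)  # seeded so the mixture is reproducible
    names = list(sources)
    w = [weights[name] for name in names]
    picks = rng.choices(names, weights=w, k=n_docs)
    return [(name, rng.choice(sources[name])) for name in picks]


if __name__ == "__main__":
    # Hypothetical sources and weights, for illustration only.
    sources = {
        "dclm_pool": ["web doc 1", "web doc 2"],
        "wikipedia": ["wiki article"],
        "stackoverflow": ["q&a thread"],
    }
    weights = {"dclm_pool": 0.8, "wikipedia": 0.15, "stackoverflow": 0.05}
    for name, doc in mix_sources(sources, weights, n_docs=5):
        print(name, "->", doc)
```

Sampling with replacement keeps the sketch short; a real pipeline would more likely fix a token budget per source and deduplicate across sources.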

Training the model

The researchers train models with OpenLM, a PyTorch-based codebase focused on distributed training with FSDP (Fully Sharded Data Parallel). To isolate the effect of the dataset, they use a fixed training recipe at each scale.

Based on prior ablation studies of model architecture and training, the researchers adopted a decoder-only Transformer architecture similar to GPT-2 and Llama, implemented in OpenLM.
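The point of fixing the training code is that the dataset becomes the only variable between runs. The sketch below illustrates that design with an immutable recipe shared by every run; the field names, values, and file paths are hypothetical placeholders, not OpenLM's configuration format or the paper's hyperparameters.

```python
from dataclasses import dataclass, FrozenInstanceError


@dataclass(frozen=True)
class TrainRecipe:
    """Training settings held fixed for every submission at one scale.

    All values are hypothetical placeholders, not the paper's hyperparameters.
    """
    architecture: str = "decoder-only-transformer"
    n_params: float = 7e9
    chinchilla_multiplier: int = 1
    learning_rate: float = 3e-4
    seed: int = 0


@dataclass(frozen=True)
class Run:
    recipe: TrainRecipe
    dataset_path: str  # the only knob a participant controls


def make_runs(recipe: TrainRecipe, dataset_paths):
    """Pair one shared, immutable recipe with each candidate dataset."""
    return [Run(recipe, path) for path in dataset_paths]


if __name__ == "__main__":
    recipe = TrainRecipe()
    runs = make_runs(recipe, ["pool_a.jsonl", "pool_b.jsonl"])  # hypothetical paths
    try:
        recipe.learning_rate = 1e-3  # tuning the recipe per dataset is rejected
    except FrozenInstanceError:
        print("recipe is frozen; runs differ only in", [r.dataset_path for r in runs])
```

Making the recipe frozen turns "don't tune per dataset" from a convention into something the code enforces.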

Evaluating the model

Finally, the researchers evaluated the models with the LLM-Foundry workflow on 53 downstream tasks suited to base-model evaluation. These tasks include question answering and open-ended generation and cover domains such as coding, textbook knowledge, and commonsense reasoning.

To evaluate data curation algorithms, the researchers focused on three metrics: MMLU 5-shot accuracy, CORE centered accuracy, and EXTENDED centered accuracy.
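As we understand the paper's definition, centered accuracy rescales each task's accuracy so that random guessing maps to 0 and a perfect score maps to 1, which lets tasks with different numbers of answer choices be averaged fairly. The sketch below assumes that definition; the example task scores are made up.

```python
def centered_accuracy(acc: float, random_baseline: float) -> float:
    """Rescale accuracy so random guessing maps to 0 and a perfect score maps to 1."""
    return (acc - random_baseline) / (1.0 - random_baseline)


def aggregate(results):
    """Average centered accuracy over tasks.

    results: list of (accuracy, random_baseline) pairs, one per task.
    """
    scores = [centered_accuracy(a, rb) for a, rb in results]
    return sum(scores) / len(scores)


if __name__ == "__main__":
    # Hypothetical tasks: a 4-way multiple-choice task and a binary task.
    tasks = [(0.625, 0.25), (0.5, 0.5)]
    print(aggregate(tasks))
```

Under this rescaling, 50% accuracy on a binary task counts as no better than chance, while 62.5% on a four-way task is halfway between chance and perfect.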

Dataset: Use DCLM to build high-quality training datasets

How does DCLM construct the high-quality dataset DCLM-BASELINE and quantify the effectiveness of data management methods?

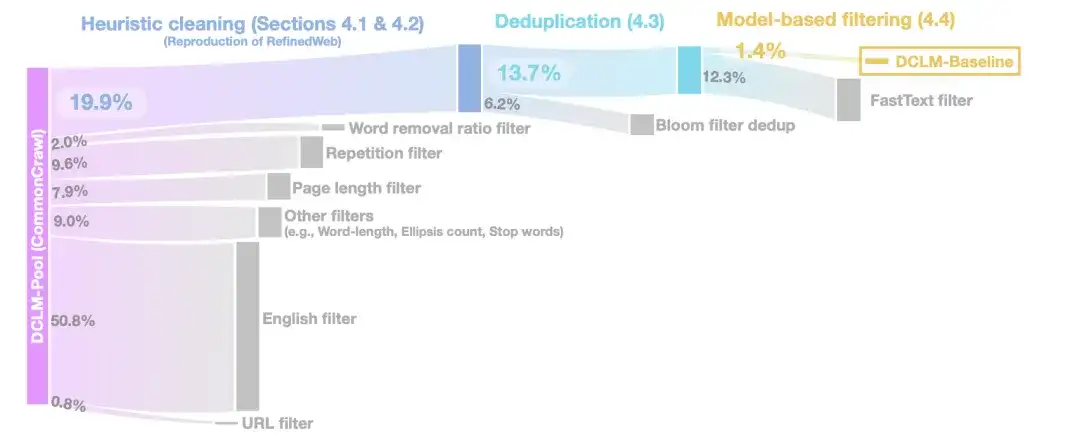

In the heuristic cleaning stage, the researchers followed RefinedWeb's approach, applying a URL filter, an English-language filter, a page-length filter, and a repetition filter.
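The four filter types can be sketched as simple predicates that a document must all pass. The rules and thresholds below are simplified stand-ins chosen for illustration, not RefinedWeb's actual heuristics; in particular, the stopword-ratio check is a crude substitute for a real language-identification model, and the blocked domains are hypothetical.

```python
import re

# Hypothetical blocklist and thresholds, for illustration only.
BLOCKED_DOMAINS = {"spam.example", "adult.example"}
MIN_WORDS, MAX_WORDS = 50, 100_000
MAX_DUP_LINE_FRACTION = 0.3
COMMON_ENGLISH = {"the", "and", "of", "to", "a", "in", "is", "that", "it", "for"}


def url_ok(url: str) -> bool:
    """URL filter: reject documents whose host is on the blocklist."""
    m = re.match(r"^[a-z]+://([^/:]+)", url.lower())
    return m is not None and m.group(1) not in BLOCKED_DOMAINS


def looks_english(text: str, min_ratio: float = 0.05) -> bool:
    """Crude English check: share of very common English words."""
    words = text.lower().split()
    return bool(words) and sum(w in COMMON_ENGLISH for w in words) / len(words) >= min_ratio


def length_ok(text: str) -> bool:
    """Page-length filter on word count."""
    return MIN_WORDS <= len(text.split()) <= MAX_WORDS


def repetition_ok(text: str) -> bool:
    """Repetition filter: reject pages dominated by duplicated lines."""
    lines = [ln.strip() for ln in text.splitlines() if ln.strip()]
    if not lines:
        return False
    dup_fraction = 1 - len(set(lines)) / len(lines)
    return dup_fraction <= MAX_DUP_LINE_FRACTION


def heuristic_filter(url: str, text: str) -> bool:
    """Keep a document only if it passes every heuristic."""
    return url_ok(url) and looks_english(text) and length_ok(text) and repetition_ok(text)
```

Cheap, rule-based checks like these run first so that the more expensive deduplication and model-based stages see less data.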

In the deduplication stage, the researchers deduplicated the extracted text with Bloom filters and found that a modified Bloom filter scaled more easily to datasets of around 10TB.
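A Bloom filter answers "have I seen this before?" in fixed memory, with no false negatives and a tunable false-positive rate, which is what makes it attractive at multi-terabyte scale. The sketch below is a generic Bloom filter applied to exact paragraph-level deduplication; it is not the modified filter used in the paper, and the bit-array size, hash count, and normalization are illustrative assumptions.

```python
import hashlib


class BloomFilter:
    def __init__(self, num_bits: int = 1 << 20, num_hashes: int = 7):
        self.num_bits = num_bits
        self.num_hashes = num_hashes
        self.bits = bytearray(num_bits // 8)

    def _positions(self, item: str):
        # Derive k bit positions from one SHA-256 digest via double hashing.
        digest = hashlib.sha256(item.encode("utf-8")).digest()
        h1 = int.from_bytes(digest[:8], "little")
        h2 = int.from_bytes(digest[8:16], "little") | 1  # odd step
        for i in range(self.num_hashes):
            yield (h1 + i * h2) % self.num_bits

    def add(self, item: str) -> None:
        for p in self._positions(item):
            self.bits[p // 8] |= 1 << (p % 8)

    def __contains__(self, item: str) -> bool:
        return all(self.bits[p // 8] & (1 << (p % 8)) for p in self._positions(item))


def dedup_paragraphs(docs, bloom):
    """Drop paragraphs already seen anywhere earlier; drop documents left empty."""
    out = []
    for doc in docs:
        kept = []
        for para in doc.split("\n"):
            key = " ".join(para.lower().split())  # normalize case and whitespace
            if not key or key in bloom:
                continue
            bloom.add(key)
            kept.append(para)
        if kept:
            out.append("\n".join(kept))
    return out
```

A false positive here means a genuinely new paragraph is occasionally dropped; a larger bit array lowers that rate at the cost of memory.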

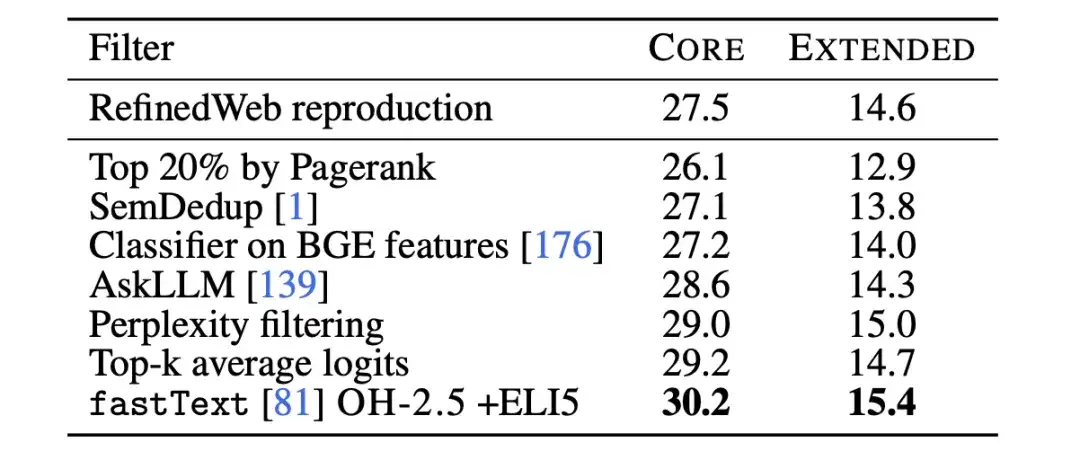

To further improve data quality, in the model-based filtering stage the researchers compared seven model-based filtering methods, including filtering by PageRank score, semantic deduplication (SemDedup), and a fastText binary classifier, and found that fastText-based filtering outperformed all the others.

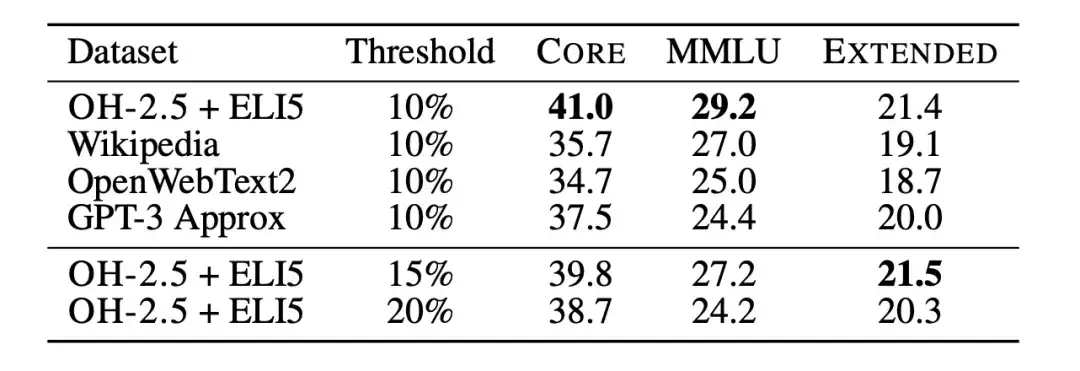

Next, the researchers ran text-classifier ablations to probe the limits of fastText-based filtering. They trained several variants, exploring different choices of reference data, feature space, and filtering threshold, as shown in the figure below. For reference data, they chose the commonly used Wikipedia, OpenWebText2, and RedPajama-books, which reflect the reference data used for GPT-3.

The researchers also made novel use of instruction-formatted data, drawing examples from OpenHermes 2.5 (OH-2.5) and from high-scoring posts in the r/ExplainLikeImFive (ELI5) subreddit. The results show that the OH-2.5 + ELI5 reference data improves CORE by 3.5% compared with the commonly used reference data.
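The filter is a binary classifier: positives come from the reference data (here, OH-2.5 + ELI5) and negatives from ordinary web text (we assume randomly sampled pool documents). fastText itself is a separate library, so to keep the sketch dependency-free we swap in a multinomial naive Bayes classifier over unigram and bigram features. This is a stand-in that illustrates the idea of scoring documents by resemblance to reference data, not fastText's model; the training examples are made up.

```python
import math
from collections import Counter


def features(text: str):
    # Unigrams plus bigrams, loosely mirroring fastText's word n-gram features.
    words = text.lower().split()
    return words + [f"{a} {b}" for a, b in zip(words, words[1:])]


class NaiveBayesQualityScorer:
    """Binary bag-of-n-grams classifier: positives = reference data, negatives = web text."""

    def fit(self, positives, negatives, alpha: float = 1.0):
        self.alpha = alpha  # Laplace smoothing
        self.pos = Counter(f for t in positives for f in features(t))
        self.neg = Counter(f for t in negatives for f in features(t))
        self.vocab = set(self.pos) | set(self.neg)
        self.pos_total = sum(self.pos.values())
        self.neg_total = sum(self.neg.values())
        self.log_prior = math.log(len(positives) / len(negatives))
        return self

    def score(self, text: str) -> float:
        """Log-odds that text resembles the reference class; higher means keep."""
        v = len(self.vocab)
        s = self.log_prior
        for f in features(text):
            p = (self.pos[f] + self.alpha) / (self.pos_total + self.alpha * v)
            q = (self.neg[f] + self.alpha) / (self.neg_total + self.alpha * v)
            s += math.log(p / q)
        return s


if __name__ == "__main__":
    positives = ["explain why the sky is blue in simple terms",
                 "how does photosynthesis work explain like i am five"]
    negatives = ["buy cheap watches click here now",
                 "click here for free casino bonus now"]
    model = NaiveBayesQualityScorer().fit(positives, negatives)
    print(model.score("explain how the sky works"), model.score("click here to buy now"))
```

Changing the positive set (Wikipedia versus OH-2.5 + ELI5) changes what "high quality" means to the classifier, which is exactly the reference-data choice the ablations vary.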

The researchers also found that a strict threshold (keeping only the top 10% of documents) gave better performance. They scored the data with the fastText OH-2.5 + ELI5 classifier and kept the top-scoring 10% of documents to obtain DCLM-BASELINE.
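Given a quality score per document, the top-10% threshold reduces to a ranking step. The sketch below assumes scores are already computed by some classifier and keeps the highest-scoring fraction of documents in their original order; at web scale one would more likely estimate the score cutoff from a sample rather than sort the full pool.

```python
def keep_top_fraction(docs, scores, fraction=0.10):
    """Keep the highest-scoring `fraction` of documents, preserving original order.

    Ties are broken by original position (Python's sort is stable).
    """
    if not 0 < fraction <= 1:
        raise ValueError("fraction must be in (0, 1]")
    k = max(1, round(len(docs) * fraction))  # round() avoids float surprises like 30*0.1
    ranked = sorted(range(len(docs)), key=lambda i: scores[i], reverse=True)
    keep = sorted(ranked[:k])  # restore original document order
    return [docs[i] for i in keep]


if __name__ == "__main__":
    docs = [f"doc{i}" for i in range(20)]
    scores = [float(i) for i in range(20)]  # hypothetical classifier scores
    print(keep_top_fraction(docs, scores))
```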

Research findings: Model-based filtering is key to generating high-quality datasets

First, the researchers analyzed whether contamination of the pre-training data with evaluation data could affect the results.

MMLU is a widely used benchmark that measures large language models' knowledge and problem-solving ability across 57 subjects. The researchers used MMLU as the evaluation set, detected MMLU questions that also appear in DCLM-BASELINE, and removed them. They then trained a 7B-2x model on DCLM-BASELINE with the detected MMLU overlap removed.
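One common way to detect such overlap is to look for long word n-grams shared between evaluation questions and training documents. The sketch below drops training documents that share any n-gram with an evaluation item; the paper's actual detection procedure may differ, and the default n = 13 is an assumption borrowed from earlier decontamination work.

```python
import re


def ngrams(text: str, n: int):
    """Set of lowercase word n-grams; punctuation is ignored."""
    words = re.findall(r"[a-z0-9]+", text.lower())
    return {tuple(words[i:i + n]) for i in range(len(words) - n + 1)}


def decontaminate(train_docs, eval_items, n: int = 13):
    """Return training documents that share no word n-gram with any evaluation item.

    Evaluation items shorter than n words produce no n-grams and so never match.
    """
    eval_grams = set()
    for item in eval_items:
        eval_grams |= ngrams(item, n)
    return [doc for doc in train_docs if not (ngrams(doc, n) & eval_grams)]


if __name__ == "__main__":
    train_docs = [
        "Quiz: what is the capital of France and why is it important? Answer: Paris.",
        "Photosynthesis converts light energy into chemical energy in plants.",
    ]
    eval_items = ["What is the capital of France and why is it important"]
    print(decontaminate(train_docs, eval_items, n=5))
```

Smaller n catches more paraphrased overlap but also flags more innocent coincidences, so the choice of n is a trade-off.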

As shown in the figure below, removing the contaminated samples does not reduce model performance, which indicates that DCLM-BASELINE's gains on MMLU are not due to MMLU data being included in the dataset.

The researchers also applied the same removal strategy to Dolma-V1.7 and FineWeb-Edu to compare contamination across datasets. The contamination statistics of DCLM-BASELINE are roughly similar to those of these other high-performing datasets.

Second, the researchers compared their model with other models at the 7B-8B parameter scale. The model trained on DCLM-BASELINE outperformed models trained on open datasets and was competitive with models trained on closed datasets.

Extensive experimental results show that model-based filtering is key to building high-quality datasets, and that dataset design matters greatly for training language models. DCLM-BASELINE enables training a 7B-parameter language model from scratch on 2.6T training tokens that reaches 64% 5-shot accuracy on MMLU.

Compared with MAP-Neo, the previous state-of-the-art open-data language model, the model trained on DCLM-BASELINE improves MMLU by 6.6 percentage points while using 40% less training compute.

The DCLM base model is comparable to Mistral-7B-v0.3 and Llama3 8B on MMLU (63% and 66%, respectively) and performs similarly across 53 natural language understanding tasks, while requiring 6.6 times less compute to train than Llama3 8B.
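The 6.6x compute figure can be sanity-checked with the common rule of thumb that training costs about 6 FLOPs per parameter per token. This is an approximation, not the paper's accounting; we assume nominal sizes of 7B parameters and 2.6T tokens for the DCLM model, and 8B parameters and 15T tokens (the figure quoted earlier) for Llama3 8B.

```python
def approx_train_flops(n_params: float, n_tokens: float) -> float:
    # Rule of thumb (assumption): about 6 FLOPs per parameter per training token.
    return 6 * n_params * n_tokens


dclm_7b = approx_train_flops(7e9, 2.6e12)   # nominal 7B params, 2.6T tokens
llama3_8b = approx_train_flops(8e9, 15e12)  # nominal 8B params, 15T tokens
ratio = llama3_8b / dclm_7b

if __name__ == "__main__":
    print(f"DCLM 7B ~{dclm_7b:.2e} FLOPs, Llama3 8B ~{llama3_8b:.2e} FLOPs, ratio ~{ratio:.1f}x")
```

The estimate ignores attention FLOPs and uses rounded parameter counts, so it should be read as a rough consistency check: most of the gap comes from Llama3 8B seeing nearly six times as many tokens.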

Scaling Laws: The future direction is unclear, and the search for the next generation of language model training sets continues

In summary, DCLM's core idea is to encourage researchers to build high-quality training sets through model-based filtering and thereby improve model performance. It also offers an alternative to the prevailing "bigger is better" approach to model training.

As Qin Yujia, a Ph.D. in computer science from Tsinghua University, put it, "It's time to scale down the data." After analyzing and summarizing multiple papers, he found that "cleaned data + smaller models can come close to the performance of dirty data + larger models."

In early July, on the latest episode of the Next Big Idea podcast, Bill Gates discussed a paradigm shift in AI technology and said he believes Scaling Laws are coming to an end. In his view, AI's revolution in human-computer interaction has not yet arrived, and real progress lies in achieving metacognitive capabilities closer to those of humans rather than simply scaling up models.

Before this, many Chinese industry leaders had also discussed the future direction of Scaling Laws in depth at the 2024 Beijing Zhiyuan Conference (BAAI Conference).

Kai-Fu Lee, CEO of 01.AI (Zero One Everything), said that the Scaling Law has proven effective and has not yet peaked, but that it cannot justify blindly piling up GPUs: if model gains came only from stacking more computing power, only the companies or countries with enough GPUs would win.

Zhang Yaqin, dean of the Institute for AI Industry Research (AIR) at Tsinghua University, said that the Scaling Law has been realized mainly through massive data and greatly improved computing power, and that it will remain the main direction of industrial development for the next five years.

Yang Zhilin, CEO of Moonshot AI (Dark Side of the Moon), believes there is no fundamental problem with the Scaling Law: as long as there is more computing power and data and model parameters grow, models can keep producing more intelligence. He expects the Scaling Law to keep evolving, although the way it is applied may change significantly along the way.

Wang Xiaochuan, CEO of Baichuan Intelligence, believes that beyond the Scaling Law, we must seek new paradigm shifts in computing power, algorithms, and data, rather than simply reducing everything to knowledge compression. Only by stepping outside this framework, he argues, will we have a chance of moving toward AGI.

The success of large models is largely due to Scaling Laws, which provide valuable guidance for model development, resource allocation, and the selection of appropriate training data. We may not yet know where Scaling Laws end, but the DCLM benchmark offers a new paradigm and new possibilities for improving model performance.

References:

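DataComp-LM: In search of the next generation of training sets for language models. https://arxiv.org/pdf/2406.11794v3

Scaling Laws for Neural Language Models. https://arxiv.org/abs/2001.08361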