
CUHK, Tencent and Others Jointly Release DynamiCrafter: Turn Any Image Into a Dynamic Video in Seconds, Seamless and in Ultra-High Definition!

In 1986, Calabash Brothers aired on CCTV-1 and won widespread acclaim as soon as it was released, becoming a treasured childhood memory for countless people born in the 1980s and 1990s. Yet this classic animation is actually made up of roughly 200,000 images drawn by its staff.

As the plot unfolded, the staff assembled each character's jointed parts into poses and filmed them at 24 frames per second. This creates a visual illusion for the audience: the characters appear to move.

In fact, a dynamic video is simply a sequence of frames played back in rapid succession. At first, people sketched pictures on paper and brought them to life by flipping the pages quickly; later, cameras and computers could record 24 or more frames per second, producing fast, coherent motion. Today, video technology has made great progress in simulating the random dynamics of natural scenes (such as clouds and fluids) and motion in specific domains (such as human body movements).
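The frame-by-frame principle is easy to put into numbers. The minimal sketch below uses plain Python; the helper names are hypothetical and not part of any tool in this tutorial. It computes how long each frame stays on screen at a given frame rate and how many still images a clip of a given length needs.

```python
def frame_interval_ms(fps: float) -> float:
    """Time each frame stays on screen, in milliseconds."""
    if fps <= 0:
        raise ValueError("fps must be positive")
    return 1000.0 / fps


def frames_needed(seconds: float, fps: float) -> int:
    """Still images needed for a clip of the given length, rounded to a whole frame."""
    if seconds < 0 or fps <= 0:
        raise ValueError("seconds must be non-negative and fps positive")
    return round(seconds * fps)


if __name__ == "__main__":
    fps = 24
    print(f"{fps} fps -> {frame_interval_ms(fps):.1f} ms per frame")
    print(f"10 s at {fps} fps -> {frames_needed(10, fps)} frames")
```

At 24 fps each frame is shown for about 41.7 ms, so a 10-second clip already needs 240 separate images.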

However, whether it is a real scene captured on camera or a virtual animation or cartoon, a dynamic video requires a continuous sequence of frames. To show the complete cycle of a flower blooming, for example, someone has to actually wait for the flower to bloom and record it frame by frame.

The emergence of AI breaks through this limitation. DynamiCrafter, jointly launched by CUHK and Tencent AI Lab, uses video diffusion priors to simulate real-world motion patterns and, combined with text prompts, converts still images into dynamic videos. It can process almost every type of static image, including landscapes, people, animals, vehicles, and sculptures. The generated videos are high-definition, very smooth, and seamless, with every detail captured just right!

DynamiCrafter also has a wide range of applications, including but not limited to:

Entertainment media: create dynamic backgrounds and character animations for movies, games, and virtual reality environments.

Cultural education: bring historical photos to life, offering new ways to engage with cultural heritage; animate images in educational videos to explain complex concepts; turn scientific data or models into intuitive animations that lower communication barriers in scientific research.

Social marketing: generate engaging motion graphics and short videos for social media advertising and branding; tell stories through motion graphics and add visual impact to the promotion of books and e-books.

To help everyone try DynamiCrafter more easily, HyperAI has launched the "DynamiCrafter AI Video Generation Tool" tutorial! The environment is already set up for you, so there is no need to wait for the model to download or to configure anything. Just click Clone to start it with one click and produce smooth videos right away!

Tutorial address: https://hyper.ai/tutorials/31974

The tutorial above is based on a WebUI, which is simple and easy to use but offers limited fine-grained local control. ComfyUI uses a node-based interface and workflows, so different effects can be achieved by swapping nodes, giving users more freedom and creative space. HyperAI has also launched the "ComfyUI DynamiCrafter Image-to-Video Workflow" tutorial; for details, see the other article HyperAI published today.

Demo Run

1. Log in to hyper.ai, select "DynamiCrafter AI Video Generation Tool" on the Tutorials page, and click "Run this tutorial online".

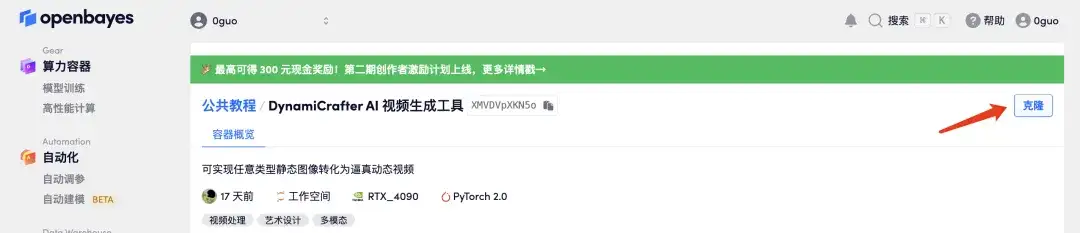

2. After the page jumps, click "Clone" in the upper right corner to clone the tutorial into your own container.

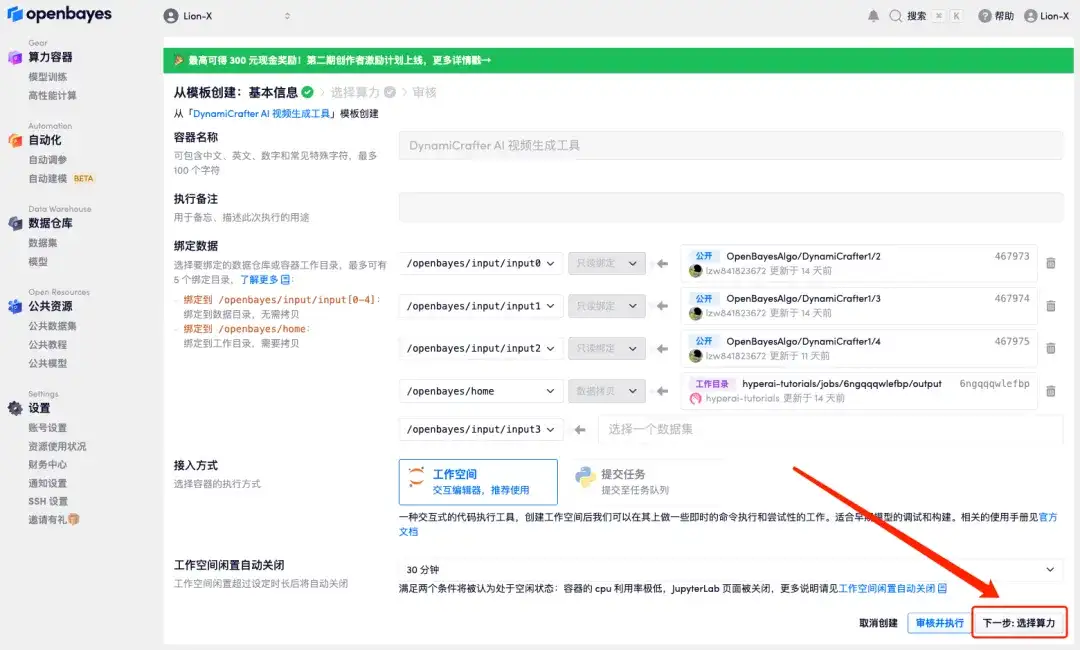

3. Click "Next: Select Hashrate" in the lower right corner.

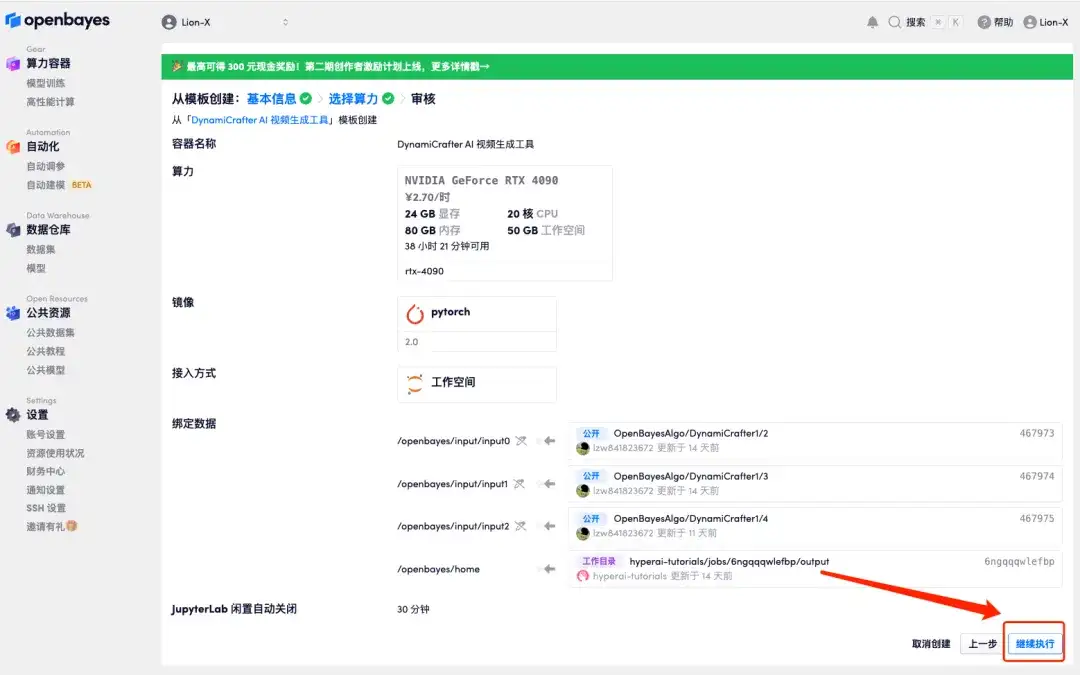

4. On the next page, select "NVIDIA GeForce RTX 4090" and click "Next: Review". New users who register through the invitation link below get 4 hours of free RTX 4090 time plus 5 hours of free CPU time!

HyperAI exclusive invitation link (copy and open in a browser): https://openbayes.com/console/signup?r=6bJ0ljLFsFh_Vvej

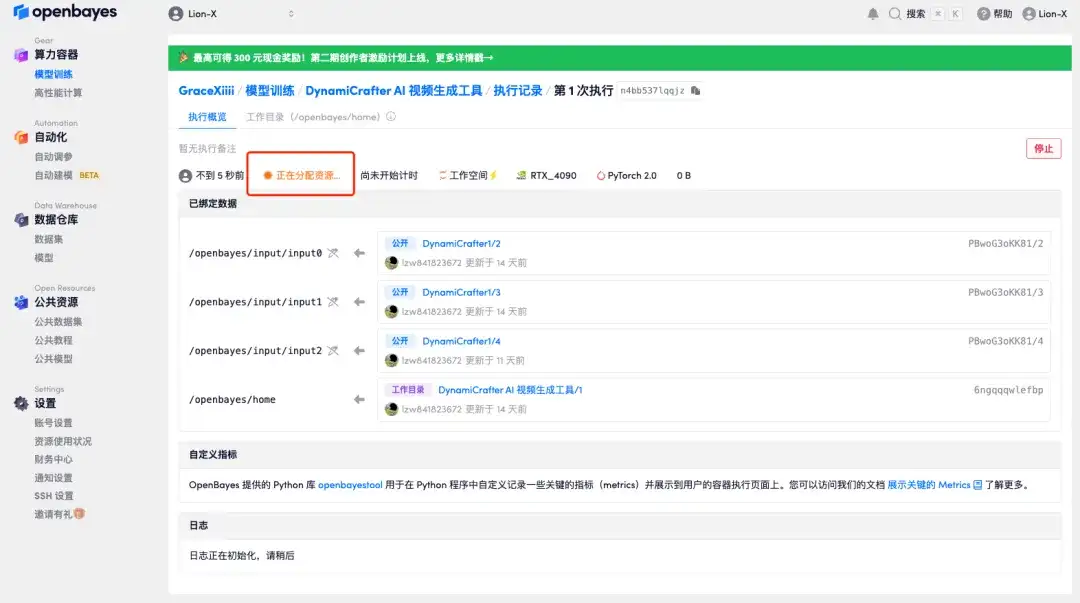

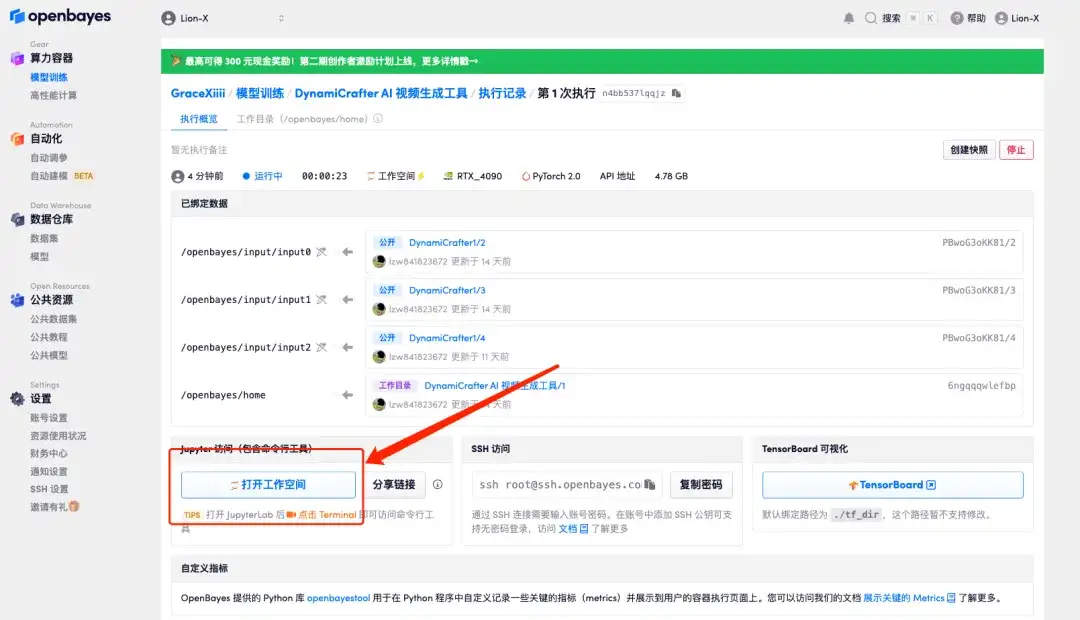

5. Click "Continue" and wait for resources to be allocated. The first cloning process takes about 3-5 minutes. When the status changes to "Running", click "Open Workspace".

If the container stays in the "Allocating resources" state for more than 10 minutes, try stopping and restarting it. If restarting does not resolve the issue, contact customer service through the platform's official website.

6. This tutorial supports the following two modes, each demonstrated below:

Generate videos from images and text prompts;

Generate a video from a start frame, an end frame, and a text prompt.

Generate videos from images and text prompts

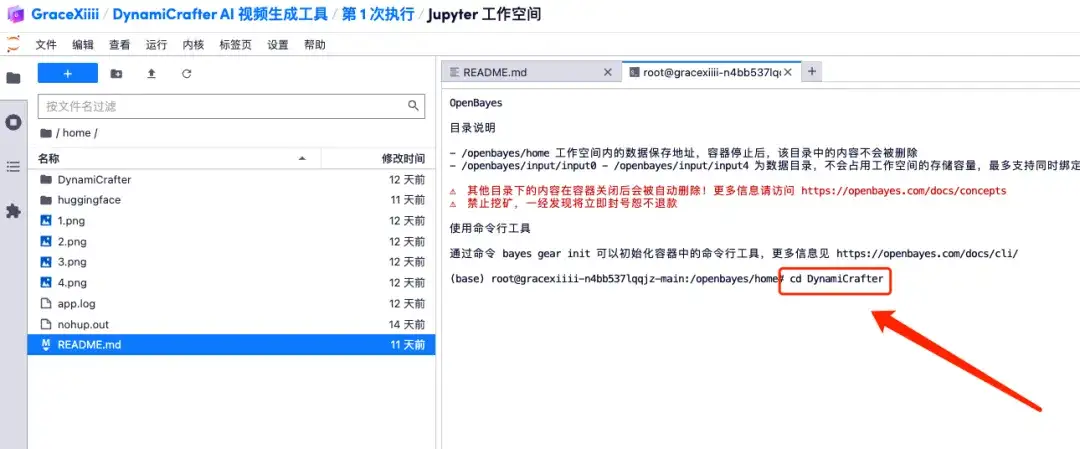

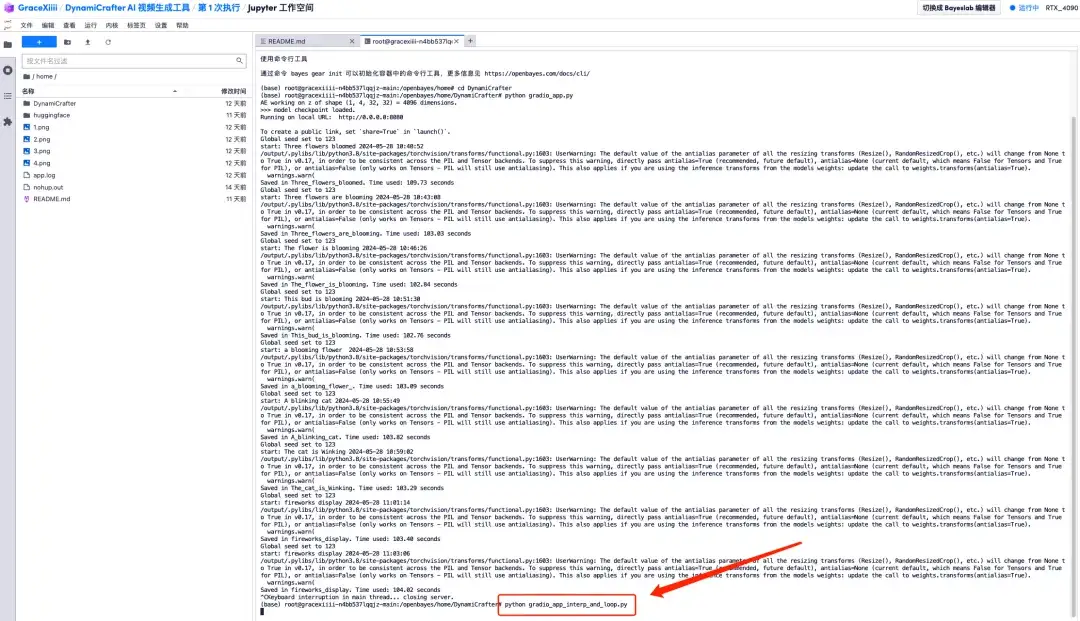

1. Create a new terminal and run "cd DynamiCrafter" to switch to the DynamiCrafter directory.

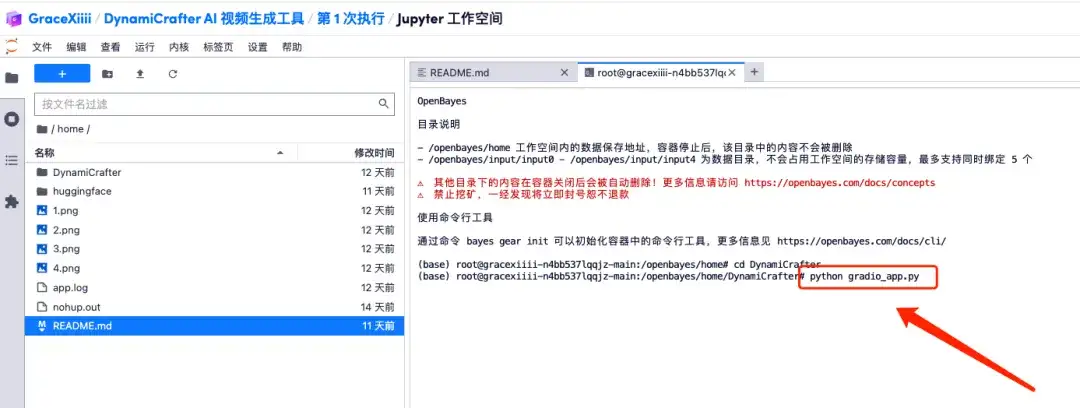

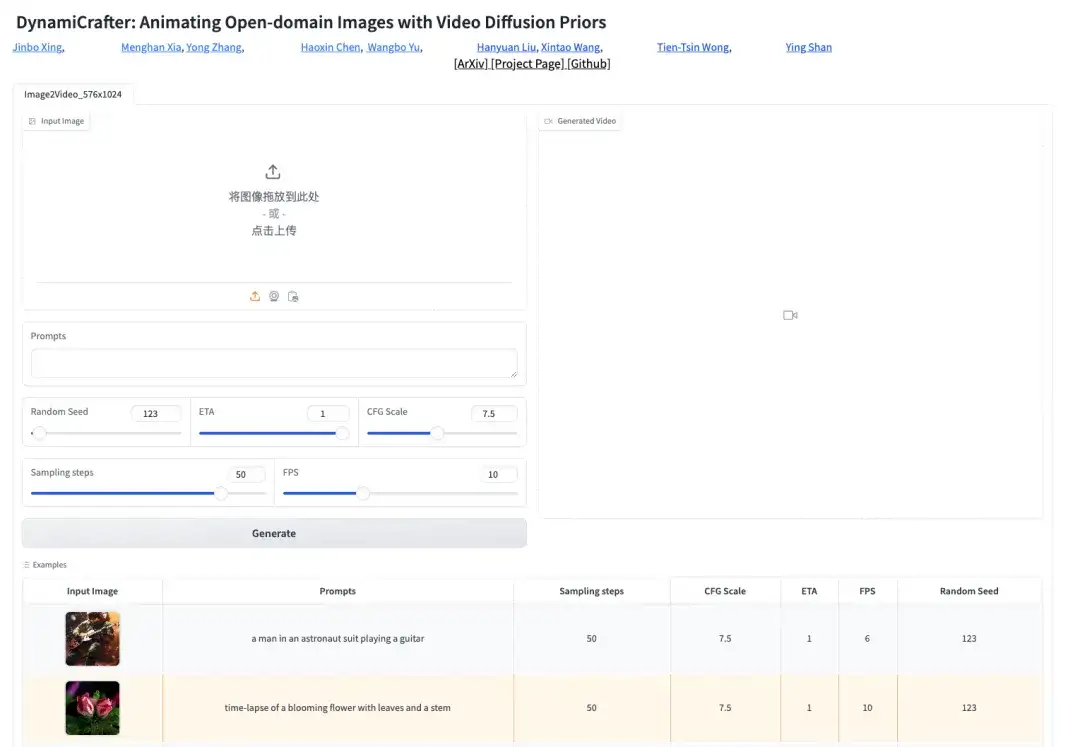

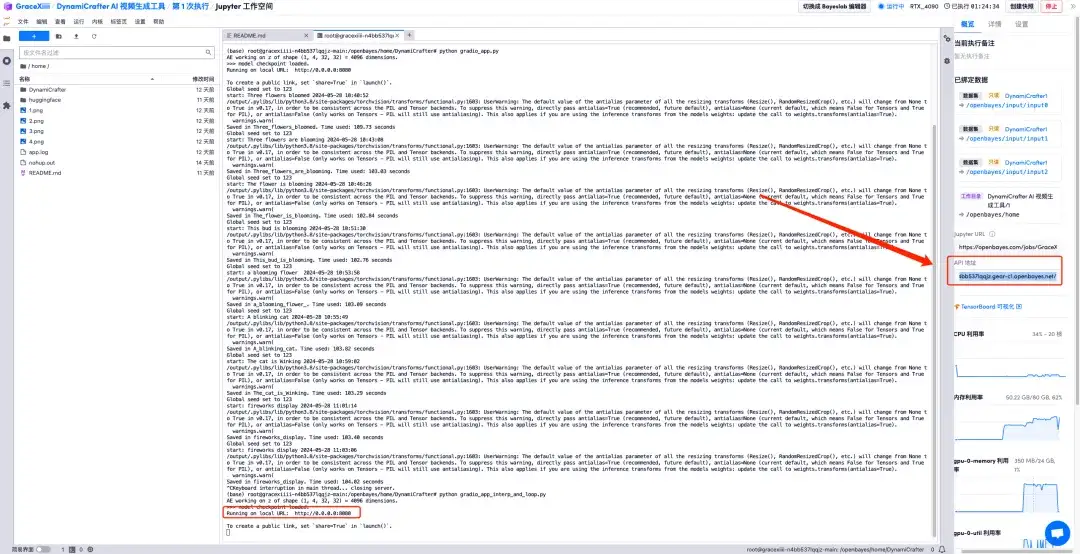

2. Run "python gradio_app.py" and wait a moment. When the terminal prints "https://0.0.0.0:8080", copy the API address shown on the right into your browser's address bar to open the Gradio interface. Note that users must complete real-name verification before they can use the API address access feature.

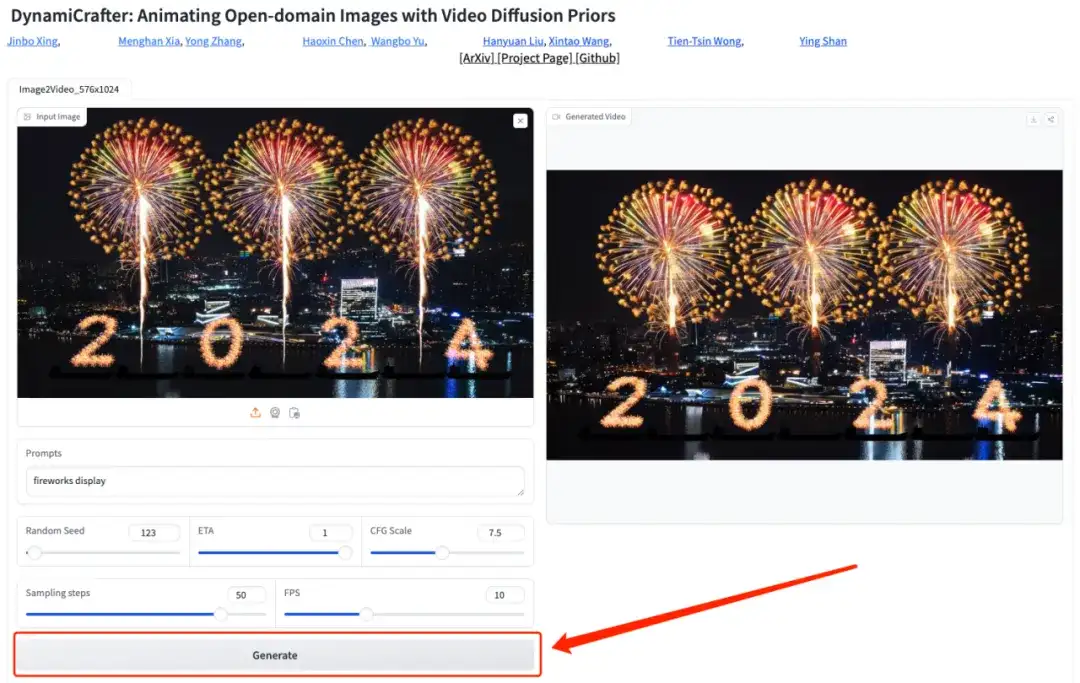

3. After opening the Gradio page, upload a photo and add a text description (for example: fireworks display), click "Generate", and wait a moment to generate the video.
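Step 2 relies on watching the terminal until the server address appears. If you would rather check from a script, the minimal sketch below polls a TCP port until something accepts connections. It is a hypothetical helper, not part of DynamiCrafter, and it assumes you run it inside the same container: the "0.0.0.0" in the log means the app listens on all interfaces, so 127.0.0.1:8080 is reachable locally.

```python
import socket
import time


def wait_for_port(host: str, port: int, timeout: float = 300.0, interval: float = 2.0) -> bool:
    """Poll host:port until a TCP server accepts connections or `timeout` seconds pass."""
    deadline = time.monotonic() + timeout
    while True:
        try:
            # A successful TCP connect means something is listening on the port.
            with socket.create_connection((host, port), timeout=interval):
                return True
        except OSError:
            # Connection refused or timed out: the server is not up yet.
            if time.monotonic() >= deadline:
                return False
            time.sleep(interval)


if __name__ == "__main__":
    # Assumption: gradio_app.py is running in this container on port 8080.
    if wait_for_port("127.0.0.1", 8080):
        print("Gradio server is up; open the API address shown in the workspace.")
    else:
        print("Server did not start in time; check the terminal for errors.")
```

An open port only tells you the web server is accepting connections; the browser page is still the place to confirm that generation works.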

Generate a video from a start frame, an end frame, and a text prompt

1. Return to the terminal, stop the current process with "Ctrl + C", and then run "python gradio_app_interp_and_loop.py". When the terminal prints "https://0.0.0.0:8080", open the API address on the right.

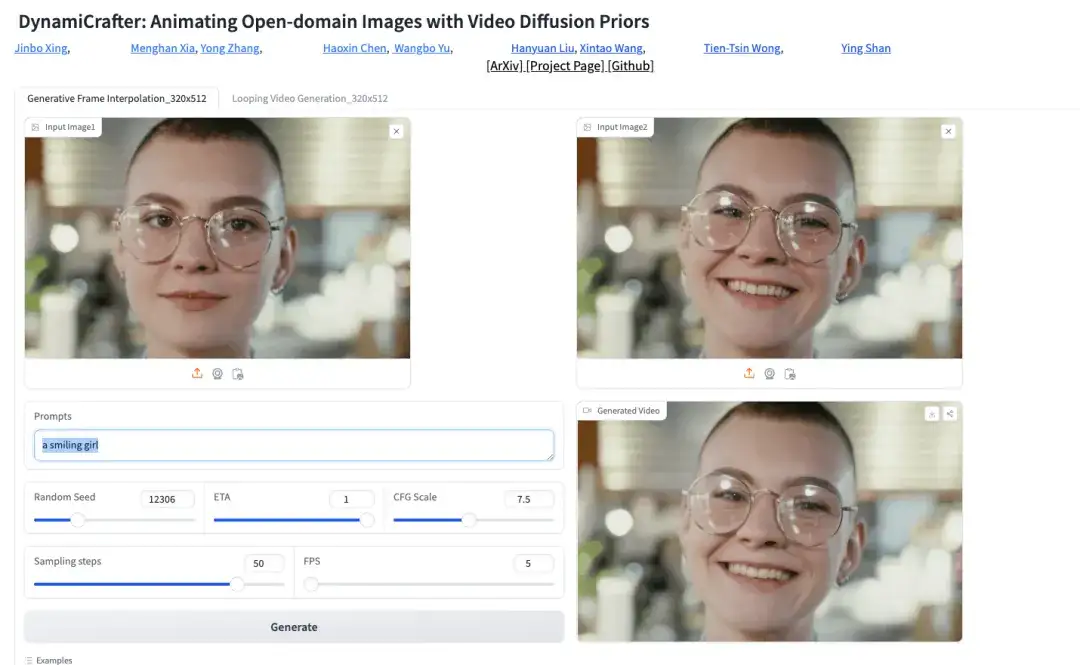

2. On the Gradio page, upload a start frame image, an end frame image, and a text description (for example: a smiling girl), and click "Generate" to generate the video.
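Switching modes means stopping one Gradio app and starting the other. Both print the same 0.0.0.0:8080 address, so the first app must exit before the second can use the port. The minimal sketch below scripts that switch with the standard library. The script names come from this tutorial, while the mode keys and helper functions are hypothetical, and the SIGINT handling assumes a POSIX system such as the Linux container used here.

```python
import signal
import subprocess
import sys
from pathlib import Path

# Script names come from this tutorial; the mode keys are hypothetical labels.
SCRIPTS = {
    "image_text": "gradio_app.py",                   # image + text prompt -> video
    "interp_loop": "gradio_app_interp_and_loop.py",  # start frame + end frame + prompt -> video
}


def launch_command(mode: str) -> list[str]:
    """Build the command for a demo mode, using the current Python interpreter."""
    if mode not in SCRIPTS:
        raise ValueError(f"unknown mode {mode!r}; expected one of {sorted(SCRIPTS)}")
    return [sys.executable, SCRIPTS[mode]]


def launch(mode: str, repo_dir: str = "DynamiCrafter") -> subprocess.Popen:
    """Start the Gradio app for `mode` with the repository as working directory."""
    return subprocess.Popen(launch_command(mode), cwd=Path(repo_dir))


def switch(current: subprocess.Popen, mode: str, repo_dir: str = "DynamiCrafter",
           grace: float = 30.0) -> subprocess.Popen:
    """Stop the running app and start the app for `mode` in its place (POSIX only)."""
    # SIGINT is the signal that Ctrl + C delivers in a terminal.
    current.send_signal(signal.SIGINT)
    try:
        current.wait(timeout=grace)
    except subprocess.TimeoutExpired:
        # Fall back to a hard kill if the app does not exit after SIGINT.
        current.kill()
        current.wait()
    # Start the next app only after the old one has released the port.
    return launch(mode, repo_dir)
```

For example, `app = launch("image_text")` starts the first demo, and `app = switch(app, "interp_loop")` replaces it with the start/end-frame demo.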

HyperAI's official website now offers hundreds of curated machine learning tutorials, organized as Jupyter Notebooks.

Click the link to search for related tutorials and datasets: https://hyper.ai/tutorials

That's all HyperAI Super Neural Network has to share this time. If you come across high-quality projects, leave us a message to recommend them!