GPT-4o Arrives as a Game-Changer! Multimodal, Real-Time Interaction, Free for All, Smooth Voice Interaction: ChatGPT Wins Big

In early May, rumors spread that OpenAI would release a search engine on May 9. On May 11, OpenAI officially announced that it would present ChatGPT and GPT-4 updates at 10 a.m. local time on May 13. Sam Altman then personally dismissed the rumors, saying the update was "not GPT-5, not a search engine," but something new that "feels like magic." Netizens subsequently began piecing together clues about what would be released, and GPT-4.5 and a ChatGPT voice-call feature became the most popular guesses.

At first, some netizens joked that Sam Altman was trying to steal the spotlight from Google I/O. But recalling how Sora overshadowed Gemini 1.5 earlier this year, it seemed likely that unless OpenAI had something substantial to show, Altman would not have risked releasing an update the day before Google's annual conference.

That turned out to be true: OpenAI's seemingly impromptu livestream was in fact well prepared.

Sam Altman did not appear at this OpenAI Spring Update; instead, the company's CTO Mira Murati presented a series of updates, including:

- GPT-4o

- ChatGPT’s voice interaction function

- ChatGPT’s visual capabilities

GPT-4o: Voice-boosted and free for all users

Since its release in March 2023, GPT-4 has topped the leaderboards for a long time, and every new model is benchmarked against it, which speaks to its strength. GPT-4 is also OpenAI's current "cash cow," with great commercial value, and Musk has repeatedly criticized it for not being "open."

More importantly, with Meta's open-source Llama, Google's open-source Gemma, and Musk's open-source Grok on the market, the free version of ChatGPT, based on GPT-3.5, has come under increasing pressure. With GPT-5 yet to appear, many netizens speculated that OpenAI would release a new version of GPT-4 to replace GPT-3.5.

As expected, in today's livestream OpenAI released a new model, GPT-4o, which is free for all users; paid users get up to 5 times the usage limits of free users. The "o" stands for "omni," signaling a move toward more natural human-computer interaction.

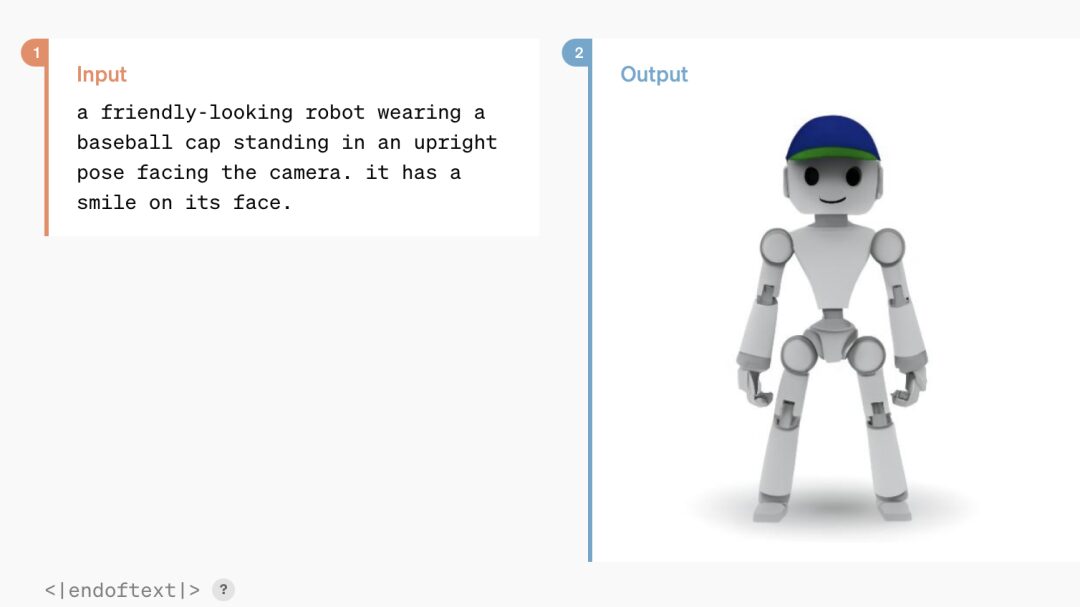

OpenAI CTO Mira Murati said that GPT-4o delivers GPT-4-level intelligence and can reason across speech, text, and vision. It accepts any combination of text, audio, and image inputs and can generate any combination of text, audio, and image outputs.

Click the link to view the GPT-4o demonstration video:

https://www.bilibili.com/video/BV1PH4y137ch

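Before GPT-4o, voice conversations with ChatGPT had an average latency of 2.8 seconds (GPT-3.5) and 5.4 seconds (GPT-4). That Voice Mode chained three separate models: one transcribed audio to text, GPT-3.5 or GPT-4 processed the text, and a third converted the reply back to audio, so information such as tone was lost along the way. To improve the experience, OpenAI trained a single new model end to end across text, vision, and audio, meaning all inputs and outputs are processed by the same neural network, which reduces information loss.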

In terms of performance, GPT-4o can respond to audio input in as little as 232 milliseconds, with an average of 320 milliseconds, which is similar to human response times in conversation. It matches GPT-4 Turbo on English text and code and improves significantly on non-English text, with better quality and speed across 50 different languages. In the API, it is twice as fast as GPT-4 Turbo and 50% cheaper.
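To put the latency figures in perspective, the short Python sketch below divides the old Voice Mode averages quoted above (2.8 s for GPT-3.5, 5.4 s for GPT-4) by GPT-4o's reported 320 ms average. It uses only the numbers in this article; the helper name `speedups` is our own, and the comparison is rough, since real-world latency depends on network and load.

```python
# Average Voice Mode latencies quoted in the article, in seconds.
OLD_VOICE_MODE_LATENCY_S = {"GPT-3.5": 2.8, "GPT-4": 5.4}
# GPT-4o's reported average audio response time (fastest reported: 0.232 s).
GPT4O_AVG_LATENCY_S = 0.320


def speedups(old: dict, new_s: float) -> dict:
    """Return how many times lower new_s is than each old latency."""
    if new_s <= 0:
        raise ValueError("new latency must be positive")
    return {model: old_s / new_s for model, old_s in old.items()}


for model, ratio in speedups(OLD_VOICE_MODE_LATENCY_S, GPT4O_AVG_LATENCY_S).items():
    print(f"{model} Voice Mode -> GPT-4o: roughly {ratio:.1f}x lower average latency")
```

On these figures, GPT-4o's average response is roughly 9 times faster than the old GPT-3.5 Voice Mode and roughly 17 times faster than the GPT-4 one.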

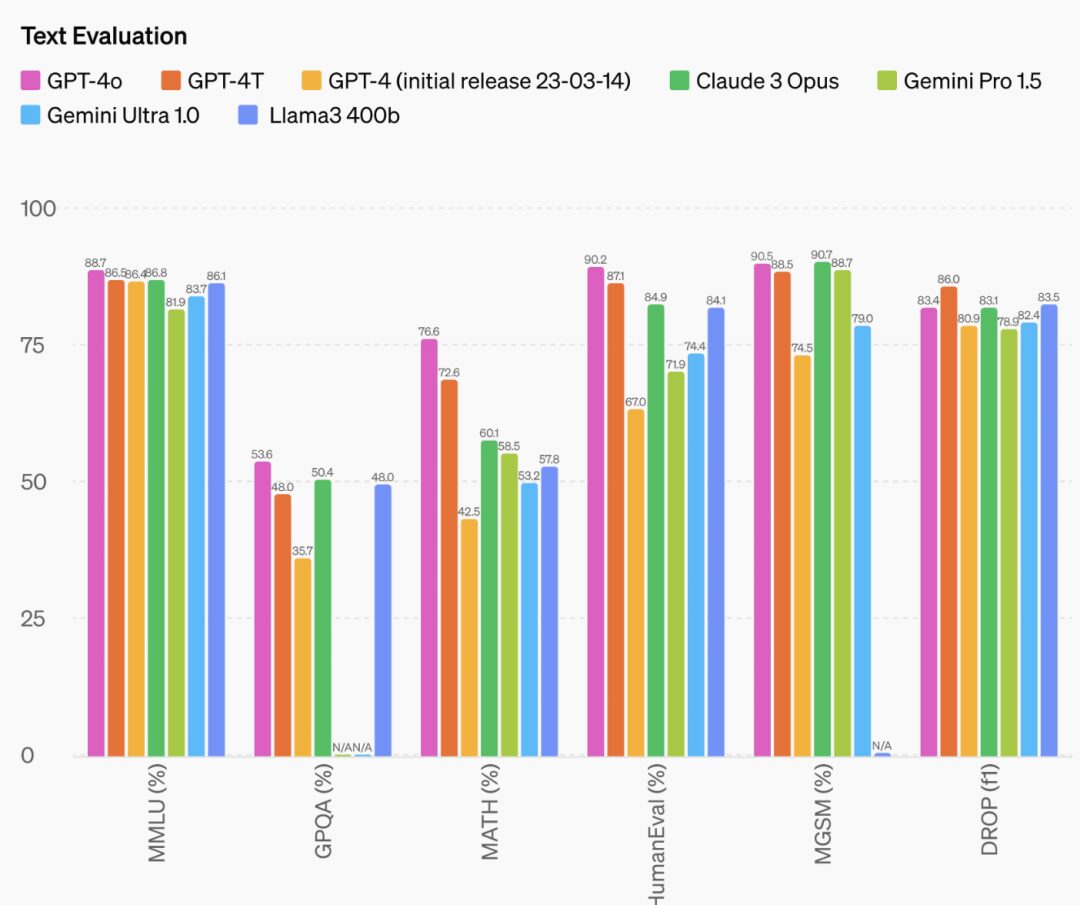

GPT-4o's reasoning ability has also improved significantly. According to official data, GPT-4o set a new high score of 88.7% on 0-shot CoT MMLU (general knowledge questions).

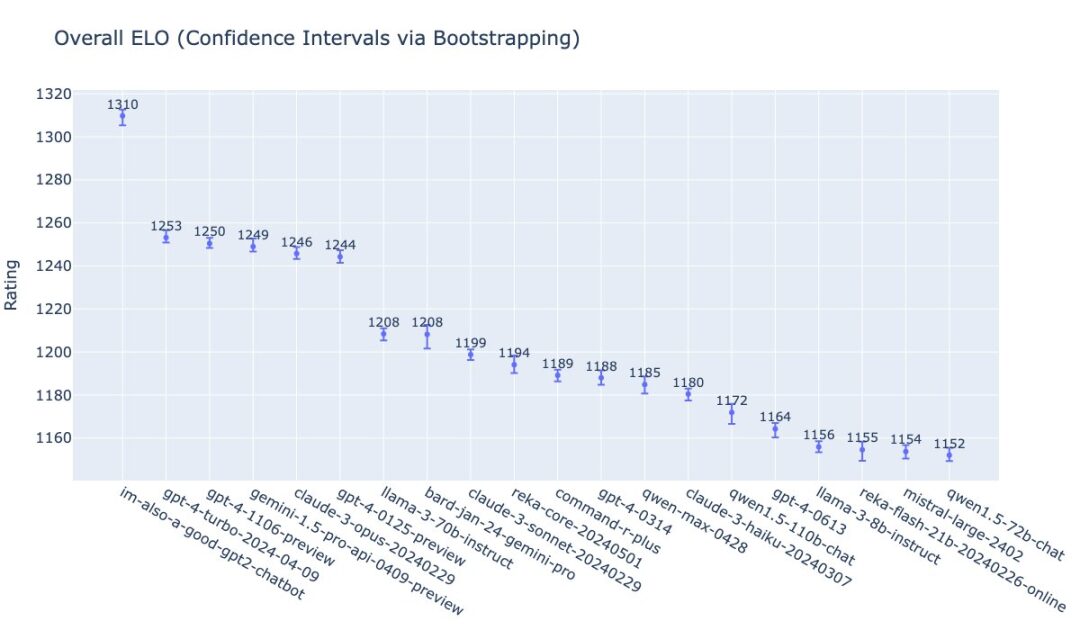

William Fedus of OpenAI posted GPT-4o's ranking on the LMSYS Chatbot Arena leaderboard. The name the team gave GPT-4o during testing is also interesting: im-also-a-good-gpt2-chatbot.

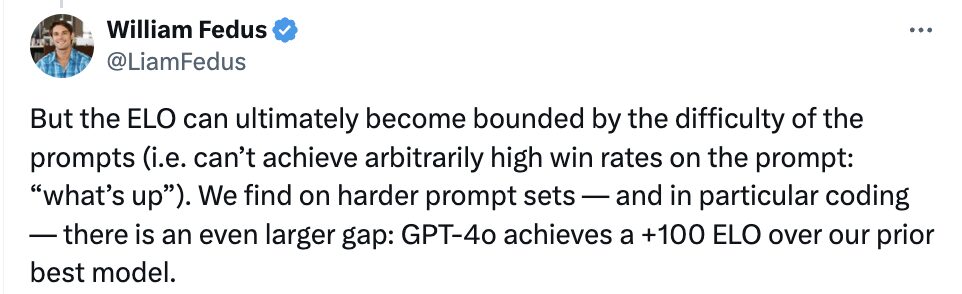

He also noted that on harder prompt sets, especially coding, GPT-4o scores 100 Elo points higher than OpenAI's previous best model.
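For readers unfamiliar with Elo, a rating gap can be translated into an expected head-to-head win rate with the standard Elo formula E = 1 / (1 + 10^(-Δ/400)). The Arena reports scores on this Elo scale, so the Python sketch below is only a rough reading of Fedus's figure, assuming the usual 400-point scale factor and ignoring ties and confidence intervals.

```python
def elo_expected_score(rating_gap: float, scale: float = 400.0) -> float:
    """Expected score (win probability, ignoring ties) of the higher-rated
    side under the standard Elo logistic formula."""
    return 1.0 / (1.0 + 10 ** (-rating_gap / scale))


# A +100 Elo gap, as reported for GPT-4o on harder prompt sets.
p = elo_expected_score(100)
print(f"Expected win rate with a +100 Elo gap: {p:.1%}")  # prints 64.0%
```

In other words, a 100-point lead means GPT-4o would be expected to be preferred in roughly 64% of head-to-head comparisons against the previous model.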

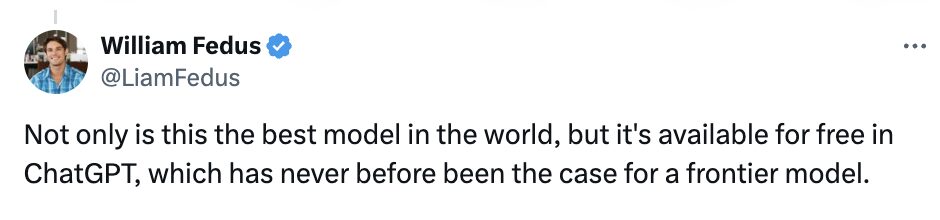

William Fedus stated bluntly that GPT-4o is not only the best model in the world but is also available for free in ChatGPT, which is unprecedented for a frontier model.

To some extent, GPT-4o can be seen as OpenAI's answer to critics of its "openness." Responding to the repeated charge that "OpenAI is not open," Sam Altman wrote on his blog: "A key part of our mission is to put very capable AI tools in the hands of people for free (or at a great price). I am very proud that we've made the best model in the world available for free in ChatGPT, without ads or anything like that."

Sam Altman also praised GPT-4o's performance: "The new voice (and video) mode is the best computer interface I've ever used. It feels like AI from the movies; and it's still a bit surprising to me that it's real. Getting to human-level response times and expressiveness turns out to be a big change."

Later, Altman posted a single word, "her," on his account, hinting that the new model ushers in the era of the movie Her.

It’s worth noting that voice functionality isn’t available for all customers in the GPT-4o API.

OpenAI said GPT-4o has safety built in by design across modalities, through techniques such as filtering training data and refining the model's behavior through post-training. The team also created new safety systems to provide guardrails on voice outputs.

Still, citing the risk of misuse, OpenAI said it plans to roll out GPT-4o's new audio and video capabilities first to "a small group of trusted partners" in the coming weeks.

GPT-4o's text and image capabilities are rolling out in ChatGPT starting today. In the coming weeks, OpenAI will launch an alpha of the new Voice Mode with GPT-4o in ChatGPT Plus. Developers can also now access GPT-4o in the API as a text and vision model.
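For developers, a text-plus-image request to GPT-4o looks roughly like the Python sketch below, which only builds the JSON payload for OpenAI's Chat Completions endpoint using the standard library. The model name `gpt-4o` and the `image_url` content part follow OpenAI's published request format; the prompt, the image URL, and the helper name `build_vision_request` are hypothetical placeholders. Actually sending the request requires an API key and, for example, the official `openai` SDK (`client.chat.completions.create(**payload)`).

```python
import json


def build_vision_request(prompt: str, image_url: str, model: str = "gpt-4o") -> dict:
    """Build a Chat Completions payload mixing a text part and an image part.

    build_vision_request is a hypothetical helper; it does not send anything.
    """
    return {
        "model": model,
        "messages": [
            {
                "role": "user",
                "content": [
                    {"type": "text", "text": prompt},
                    {"type": "image_url", "image_url": {"url": image_url}},
                ],
            }
        ],
    }


# Hypothetical example: ask GPT-4o to solve a photographed math problem.
payload = build_vision_request(
    "Solve the equation in this photo step by step.",
    "https://example.com/math-problem.jpg",  # placeholder URL
)
print(json.dumps(payload, indent=2))
```

Keeping payload construction separate from the network call makes it easy to inspect or log what is sent before involving an API key.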

The Her era is here: ChatGPT's emotional voice interaction

As early as February this year, OpenAI's former head of developer relations said that the ultimate form of ChatGPT is more than just chat.

On May 11, Sam Altman also said in a podcast that OpenAI will keep improving the quality of ChatGPT's voice features, calling voice an important part of how people will interact with computers in the future.

At today's event, OpenAI also showed its progress on ChatGPT's voice experience: voice interaction is now powered by GPT-4o, with greatly improved response speed.

Specifically, the ChatGPT upgrades fall into three areas: real-time interaction, multimodal input and output, and emotion perception.

For real-time interaction, in the live demo ChatGPT responded to the presenters' questions almost instantly, and when interrupted mid-answer, it stopped. Mira Murati also demonstrated GPT-4o's real-time translation at the audience's request.

Multimodal input and output need little elaboration: ChatGPT based on GPT-4o can understand text, voice, and visual information and respond in whatever form is needed.

On emotion perception and expressive feedback, the upgraded ChatGPT can quickly read a person's emotions from a selfie. It can also adjust its tone of voice on request, from dramatic to cold and robotic, and can even sing, showing remarkable versatility.

On this point, Mira Murati said: "We know that these models are getting more and more complex, but we want the interaction experience to become more natural and simple, so that you don't have to pay attention to the user interface at all and can just focus on collaborating with GPT. That is very important."

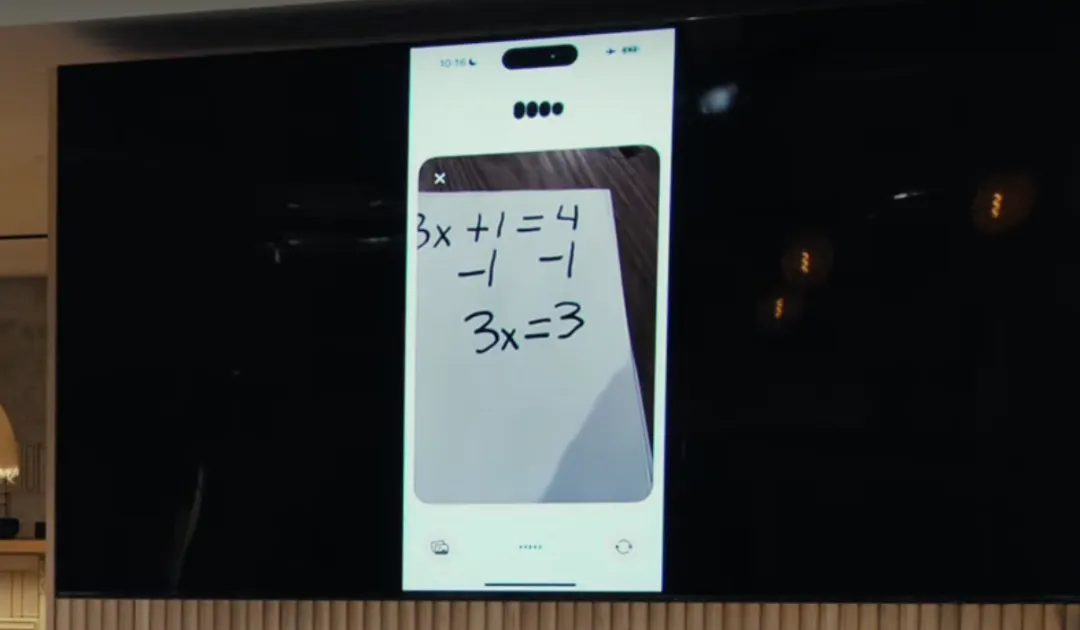

GPT-4o also improves ChatGPT's visual reasoning. Given a photo, ChatGPT can quickly take in its contents and answer related questions, for example solving a math problem from a picture of it.

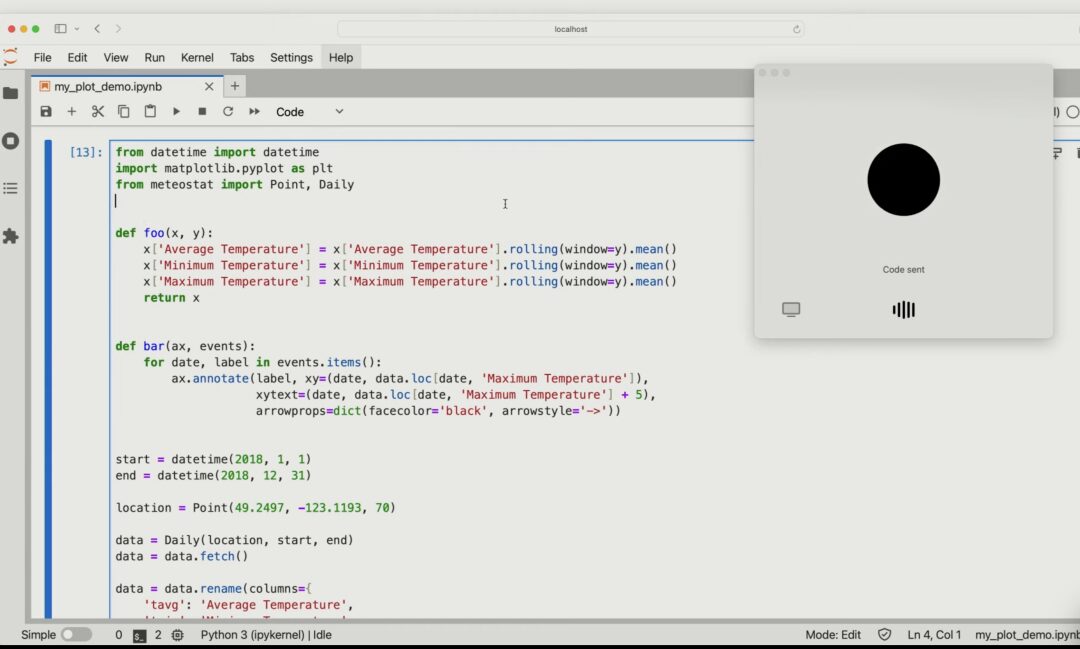

It can also explain what a piece of code does from a shared screenshot and analyze how the result would change if one of the variables in the code were modified.

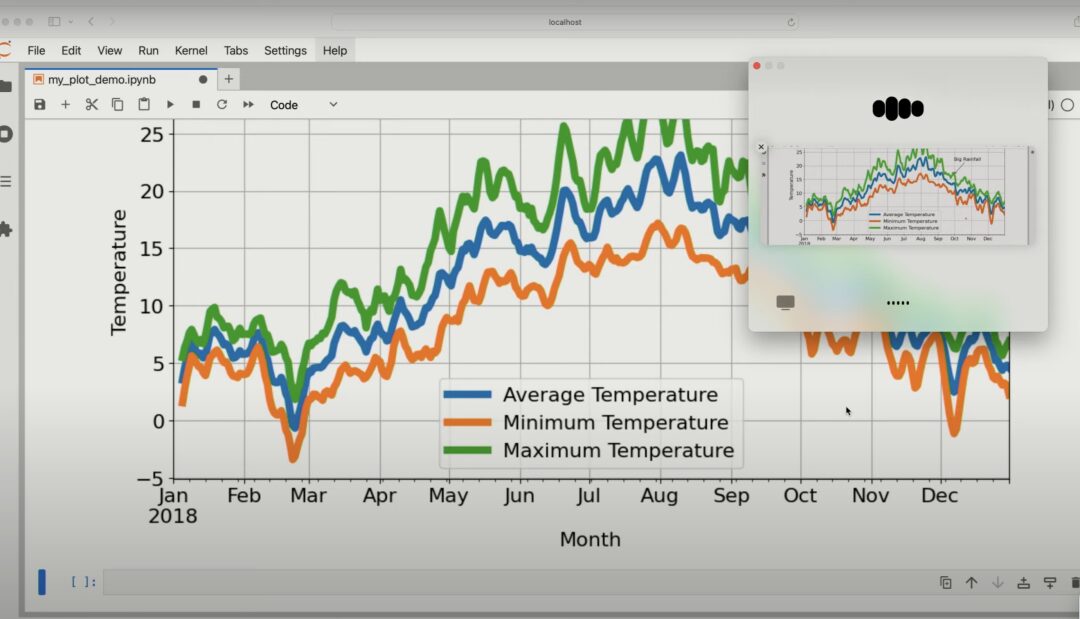

Give GPT-4o a chart and it can analyze the contents of the chart in detail.

This innovative move by OpenAI extends ChatGPT across voice, text, and vision, suggesting that human-computer interaction may become more natural and fluid in the future.

OpenAI has said that AI voice assistants with visual and audio capabilities could be as transformative as the smartphone. In theory, they could do many things current AI assistants cannot, such as tutoring students in essays or math, translating road signs, or helping troubleshoot a car breakdown.

Final Thoughts

Ever since GPT came out, OpenAI has been regarded as the "barometer" of the large-model era.

- Microsoft has a delicate relationship with OpenAI: it is not only a financial investor but has also deployed GPT models in its own products and provides cloud services to OpenAI.

- Apple began stepping up its investment in generative AI this year and has released its own large models, but it started late, and it remains to be seen whether it can shake OpenAI's position. Meanwhile, there are reports that Apple is close to large-model partnerships with both OpenAI and Google.

- As the world's largest cloud infrastructure provider, Amazon has also launched its own large model, Amazon Titan, but it is only one part of its managed service Bedrock. Although Amazon has also invested in Anthropic, it still seems to struggle to compete with OpenAI.

By comparison, Google seems to be the company best positioned to compete with OpenAI. It has deep technical expertise (the Transformer architecture came from Google) and a rich product ecosystem in which large models can show their strength.

However, readers who follow Google may notice that the old giant seems to have a "Wang Feng constitution" in the large-model era (a Chinese internet joke about a singer whose big announcements always get upstaged by bigger news; no offense intended): Bard and PaLM 2 trailed GPT-4 in performance, the groundbreaking Gemini 1.5 was overshadowed by Sora, and now Google I/O, whose date was set back in March, has been upstaged during its prime promotional window by an "impromptu" OpenAI livestream...

Just this morning (May 14, Beijing time), OpenAI launched the "best model in the world." One wonders whether Sundar Pichai will rewrite his script overnight after watching today's event.

The answer will be revealed tomorrow morning. We look forward to a Google comeback, and HyperAI will continue to bring you first-hand coverage!