Competing with Google I/O? OpenAI Will Livestream One Day Earlier, and ChatGPT May Get a Calling Feature

The AI world is set for a very lively week.

On May 13, local time, OpenAI will livestream updates to ChatGPT and GPT-4. The next day, Google I/O arrives as scheduled. Unlike I/O, which is Google's annual event, OpenAI's impromptu launch looks a bit like an attempt to grab attention. How will these long-standing rivals make their moves this time? Let's review the history between the two sides and make some bold guesses!

Round after round of confrontation, with the battle in full swing

Ever since OpenAI made its splash, Google has been labeled "disappointing", "slow off the mark", and "playing catch-up". The label most worth examining is the "Whampoa Military Academy of AI" (a training ground whose graduates go on to lead elsewhere). It sounds like praise, but for Google it is a bitter one.

As is well known, ChatGPT, which laid the foundation for OpenAI's success, is built on the Transformer architecture. Transformer is the milestone architecture that Google proposed in the paper "Attention Is All You Need". In addition, many former Google veterans appeared in the acknowledgments on the ChatGPT release page, and many core Google employees later moved to OpenAI. What's more interesting is that every time Google tries to "fight back", something goes slightly wrong.

In February 2023, Google launched Bard in response to ChatGPT. But soon after the release, factual errors were found in the demonstration.

In response to the question, "What can I tell my 9-year-old about the James Webb Space Telescope (JWST) and what it has discovered?", one of Bard's answers was that JWST took the first photo of an exoplanet. But Grant Tremblay, a researcher at the Harvard-Smithsonian Center for Astrophysics, pointed out that it was the European Southern Observatory's Very Large Telescope (VLT) that took the first photo of an exoplanet, in 2004.

At the I/O conference in May 2023, Google demonstrated Bard's product upgrades. For example, it added support for more languages, image recognition, and connections to Google applications and some external applications. At the same time, Google also released PaLM 2, a model positioned against GPT-4, with improvements in mathematics, coding, reasoning, and natural language generation.

Building on PaLM 2, the Google Health research team also created Med-PaLM 2, which can retrieve medical knowledge and decode medical terminology. Predictably, where the model was benchmarked against GPT, the applications were benchmarked against Microsoft: Google integrated its AI capabilities into Google Workspace for office scenarios such as copywriting and spreadsheet creation.

Afterwards, many users compared PaLM 2 with GPT-4 in various ways, and OpenAI still came out ahead more often than not.

In December 2023, Google released Gemini, its "largest and most powerful" AI model. The demonstration was indeed impressive, and the high-end version could compete with GPT-4 in performance. However, it later emerged that the demonstration video had been post-processed and that the effect was partly exaggerated.

On February 8, 2024, Google announced that Bard would be officially renamed Gemini. Gemini Advanced, the chatbot powered by its most powerful model, Gemini Ultra, also became officially available at a subscription price of $20 per month, the same as ChatGPT, which looks very much like a direct challenge. The more important point of this release is that Google's AI is now unified under Gemini, which is both the model name and the product name.

On February 16, 2024, just a few days after the release of its most powerful model, Gemini 1.0 Ultra, Google followed up with Gemini 1.5. Within that family, Gemini 1.5 Pro supports an ultra-long context of up to 1 million tokens, far exceeding GPT-4 in context length and enabling strong performance on tasks such as audio and video processing. If it weren't for Sora, Gemini 1.5 would probably have remained a hot topic in the AI world for a long time.

Just a few hours after the release of Gemini 1.5, OpenAI released its text-to-video model Sora. With its unprecedented video generation capabilities, it instantly took center stage, and its one-minute demonstration videos stole the spotlight from Gemini.

In this round, the two releases are not directly comparable on technology, but the winner on popularity was clear. With Sora, OpenAI further consolidated its position.

Is OpenAI going to steal the spotlight again?

It is worth noting that on May 1, X user Jimmy Apples broke the news that OpenAI's search engine might be released on May 9. This user had previously predicted the release date of GPT-4 accurately. Later, he said that the release date had been postponed to May 13.

On May 8, Bloomberg reported that OpenAI is developing a new search engine that will offer a new search experience through generative AI question answering. According to Bloomberg, one feature of the search engine is that it can answer questions with both written text and images. Bloomberg also reported that the search product is an extension of OpenAI's flagship product ChatGPT, enabling ChatGPT to pull information directly from the web, including citations. Earlier, The Verge had reported that OpenAI is poaching engineers from Google's search team to speed up the launch of its AI search product.

By targeting the long-established search business, is OpenAI striking straight at Google's stronghold?

However, on May 11, OpenAI officially tweeted that the event on the 13th would only bring updates to ChatGPT and GPT-4, without mentioning a "search engine". But the date of May 13 is quite interesting, because Google had already announced that it would hold the Google I/O conference on May 14.

Then, Sam Altman showed his hand directly: Not GPT-5, not a search engine, but we're always working on something new that we think people will like! It's like magic to me.

After Sam Altman ruled out two wrong answers, users remained enthusiastic about guessing what OpenAI would release, and more clues emerged, including voice interaction.

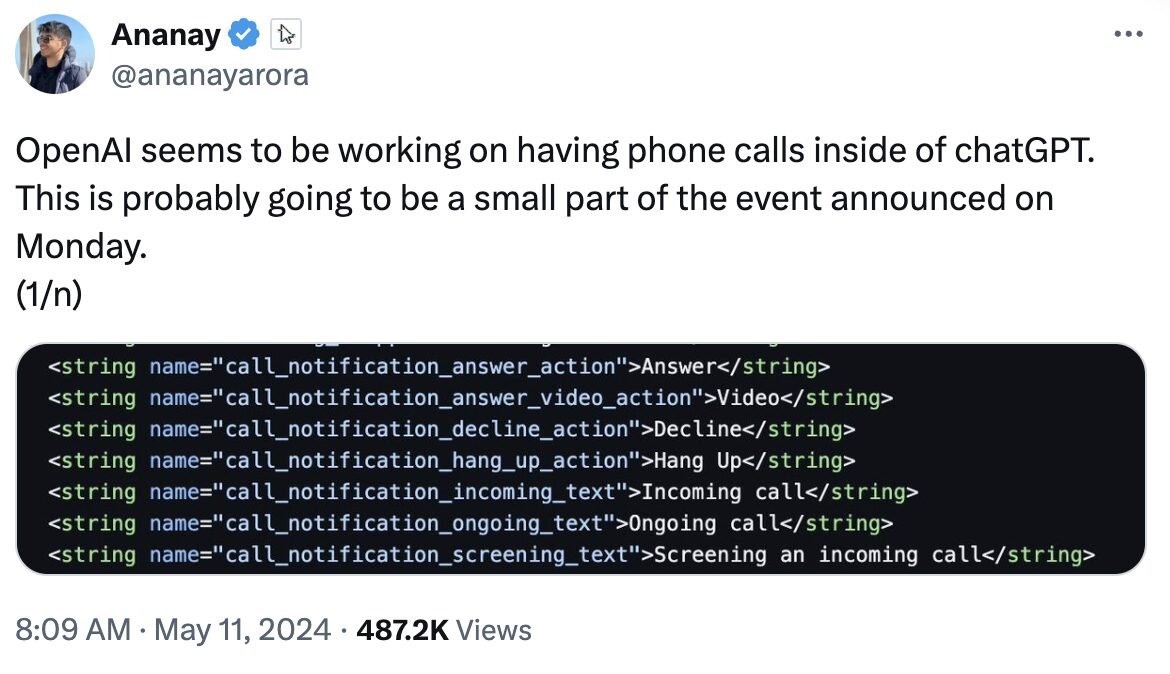

According to The Information, OpenAI has shown some customers a new model that can both talk and recognize objects, offering faster and more accurate image and audio understanding. According to The Verge, developer Ananay Arora said ChatGPT may gain a calling feature. Arora also found evidence that OpenAI has provisioned servers for real-time audio and video communication.

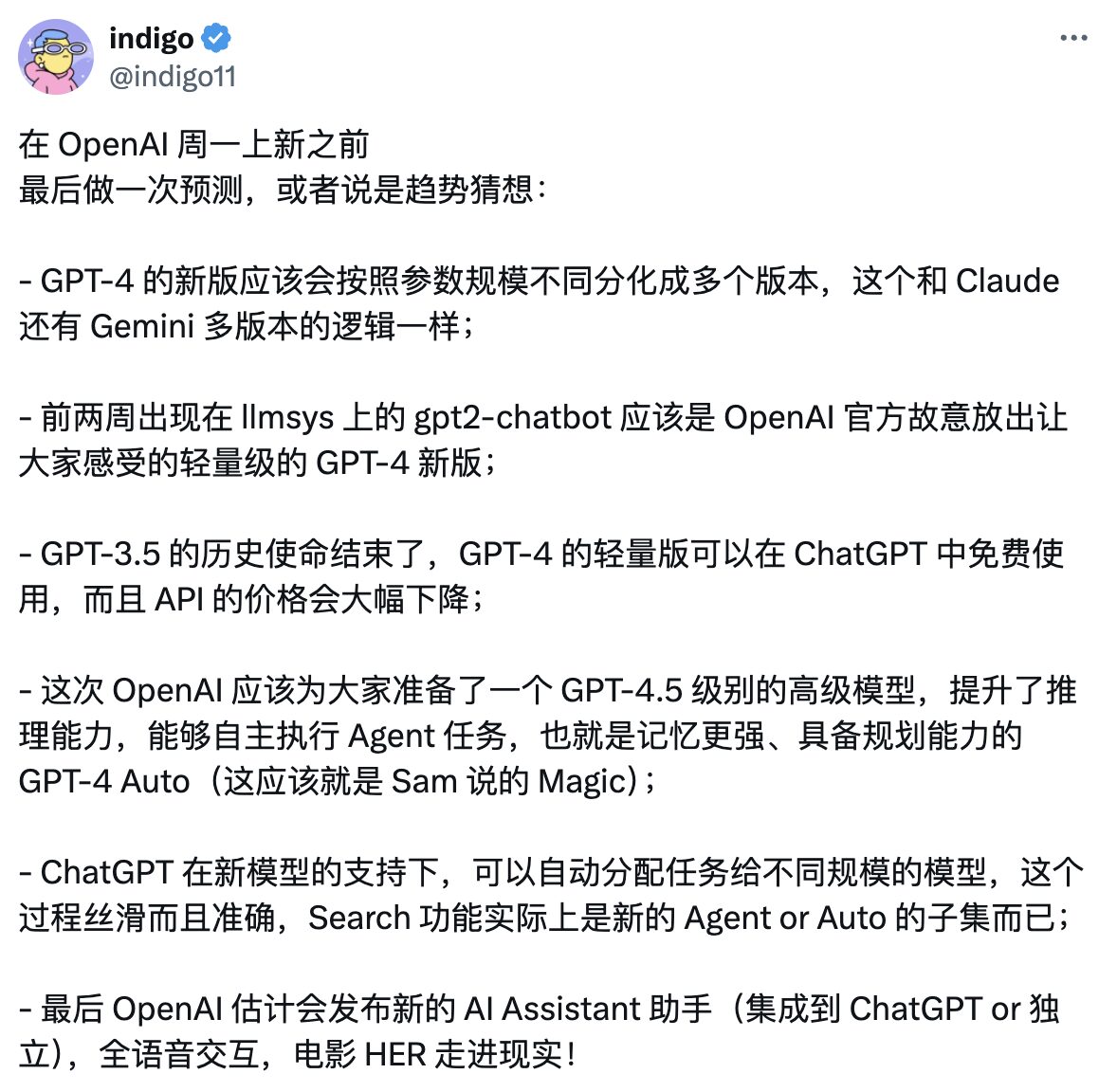

In addition, Halliday co-founder indigo posted a more detailed prediction on his X (Twitter) account, not only mentioning GPT-4.5 but also predicting that OpenAI's new AI assistant will support full voice interaction.

However, seen from another angle, although Sam Altman denied the "search engine", he did not say that ChatGPT would not get a search boost. In fact, users have recently dug up plenty of evidence that OpenAI is moving into search.

First, Lior S, a former Mila researcher and MIT lecturer, revealed that OpenAI's latest SSL certificate logs show that the search.chatgpt.com subdomain has been created.

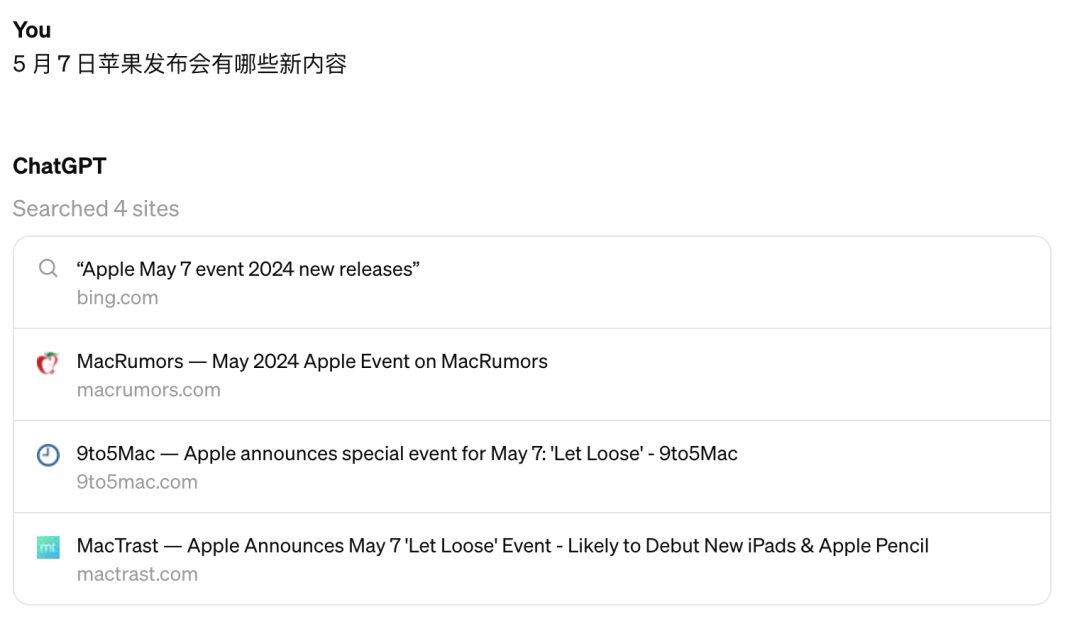

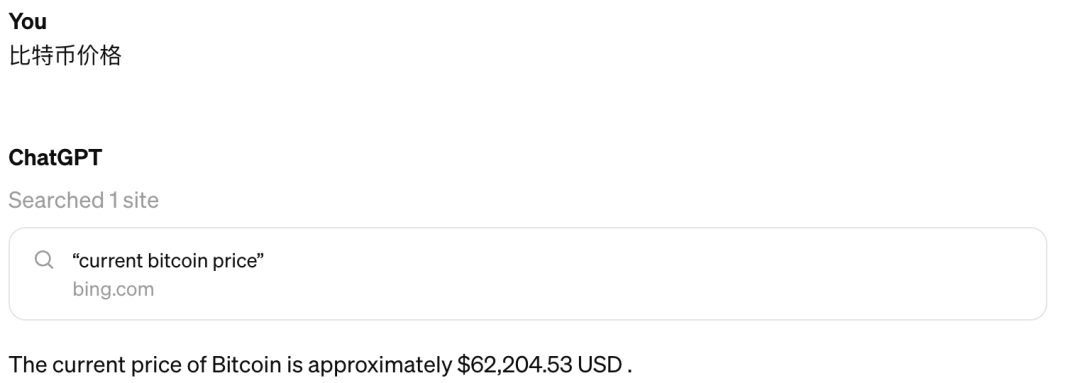

Some users in China were included in the grayscale (limited rollout) test, and "Cyber Zen Heart" published its trial results on its official account:

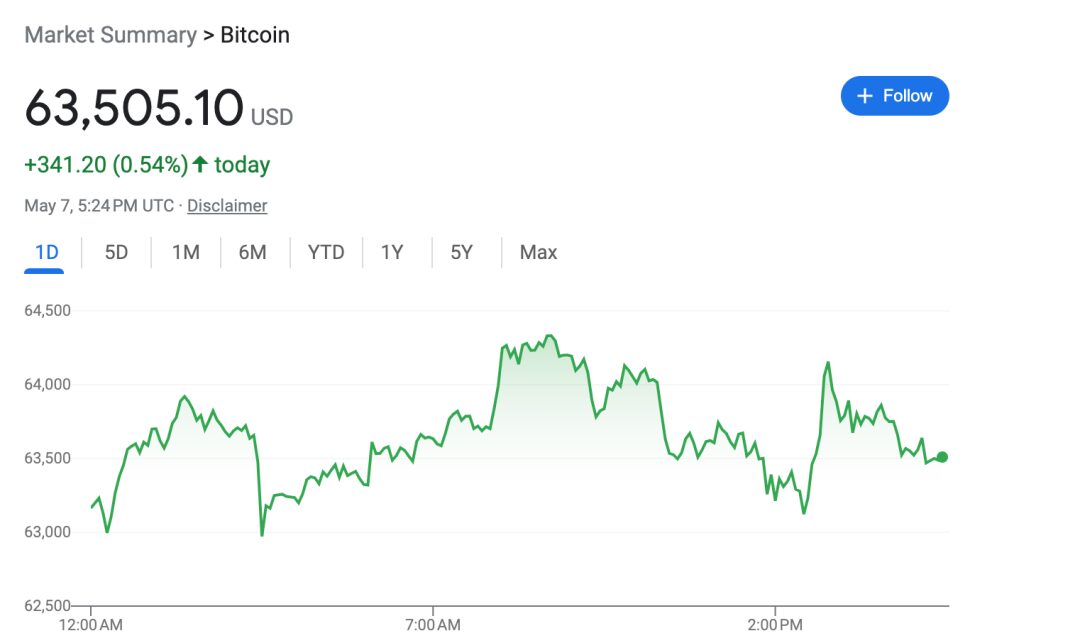

As you can see, ChatGPT's answers are quite accurate, and Cyber Zen Heart says its response speed is also acceptable. Since ChatGPT has not been good at obtaining real-time information, Cyber Zen Heart also searched for Bitcoin prices and compared them with the prices found on Google.

In addition, one user posted a demo on Twitter that was claimed to be OpenAI's official AI search page, but it looks very different from the grayscale test interface.

At present, it is still unknown whether OpenAI's search product will eventually be released in the form seen in the grayscale test. Overall, its competitors include not only Google but also Perplexity AI. In a sense, Perplexity AI is the product that OpenAI's search business competes with most directly.

This AI tool, which bills itself as "the world's first conversational search engine", is currently in the limelight, with backing from Jensen Huang and investment from heavyweights such as Bezos. Its distinguishing feature is that it combines ChatGPT-style question answering with the link list of a traditional search engine.

How will OpenAI compete in the search engine market of the AI era? Let's wait and see whether ChatGPT's search feature is revealed at the event on May 13.

Can Google I/O rely on Gemini alone to carry the show?

It remains to be seen whether OpenAI's event will reveal any major updates, intentionally or otherwise, but Google will surely be watching the livestream on time. If there is a surprise, will Pichai be able to respond quickly and hit back at the Google I/O conference a day later?

By comparison, the annual Google I/O conference holds less mystery. The focus areas listed on its official page are mobile, web, ML/AI, and cloud.

As usual, CEO Sundar Pichai will use his keynote to share Android updates, new-generation hardware products, Google's latest progress and achievements in AI, and the integration of its AI capabilities across Google's entire ecosystem.

* Gemini empowers Google's entire ecosystem

There is no doubt that Gemini will be the highlight of this year's Google I/O conference. Gemini 1.5, released in February this year, has already extended the context length to the million-token level, and its performance can compete with GPT-4. Google's next step is therefore to work out real-world deployment: how to integrate Gemini with its search, photo and video tools, Google Maps, and Workspace tools such as Gmail and Google Docs.

In addition, Google has been gradually bringing its AI capabilities into Google Assistant. Can Gemini's powerful capabilities produce a more advanced, more human-like natural language voice assistant?

It is also worth asking what sparks will fly when Gemini meets Google's own Pixel, given that Google has both advanced large models and a hardware business. Last year, there were reports that an AI assistant named Pixie might debut on the Pixel 9.

The Pixel 8, launched last year, already ships with Google's AI capabilities. It is powered by Google's in-house Tensor G3 chip and offers features such as Audio Magic Eraser, Best Take, and translation and read-aloud for web pages. For example, Best Take can combine multiple group photos, picking each person's best expression from different shots to create a perfect group photo.

One might expect the Pixel 9 to appear at this year's conference, but it has not shown up in the leaks so far. Instead, the Pixel 8a has drawn more attention. As for whether the AI assistant Pixie will make an appearance, we can only wait and see.

In addition, in April this year, foreign media reported that Apple and Google are in talks to integrate Gemini into iOS. Neither company has officially confirmed the news. If it is true, it would amount to a "partnership of the century" between two giants that compete in many businesses. It remains to be seen whether Pichai will announce anything on this at the Google I/O conference.

* Android and AR/XR

As a cornerstone of Google, Android is always an indispensable part of the Google I/O conference. Android 15 has already been unveiled this year, with developer previews and an initial beta released. Pichai is bound to go further into the system's major updates in his keynote. According to previously disclosed information, he will also cover the in-car platform Android Auto and the smartwatch software Wear OS.

In addition, some media reported that Pichai will share news about Google's AR software and introduce the Android XR platform developed for Samsung and other headset manufacturers. According to reports earlier this year, the AR hardware team was the hardest hit by Google's layoffs, so some media speculated that Google has given up developing its own AR hardware and is fully committed to an OEM partnership model. In other words, Google will focus on the software side.

In addition to Pichai's keynote, this year's Google I/O conference also includes multiple themed sessions, such as the latest developments in Google AI, what's new in Android, and ML frameworks for the generative AI era. However, these will not be livestreamed. The videos will be released after the sessions. HyperAI will continue to follow the event and bring in-depth AI coverage. Stay tuned~

Final Thoughts

In the past, industry was an important measure of national strength. Today, technological strength has also reached the negotiating table and even become a bargaining chip in the contest between major powers. With the popularity of large models still running high, every move by the Silicon Valley giants attracts close attention. At the end of 2022, OpenAI, Microsoft, Google, and others kept releasing major updates by surprise, and users exclaimed: "I woke up and the AI world has changed again?"

In 2024, the battle is still heating up. From the pace of technology to the development of application scenarios, and from old powerhouses to new unicorns, the companies that can stay at the top must be those with moats. As for how the giants at the top of the pyramid will fight it out, let's pull up our little stools together and watch the battle of the gods!

References:

1. https://36kr.com/p/2660898993824512

2. https://techcrunch.com/2024/05/09/google-i-o-2024-what-to-expect/

3. https://www.spiceworks.com/tech/tech-general/articles/google-io-2024-expectations

4. https://www.theverge.com/2024/5/11/24154307/openai-multimodal-digital-assistant-chatgpt-phone-calls