
GTC 2023 | Jensen Huang on the Top 5 AI Topics, Including Scientific Computing, Generative AI, and Omniverse

By Super Neuro

Contents at a glance: At 23:00 Beijing time on March 21, NVIDIA founder and CEO Jensen Huang delivered a keynote speech at GTC 2023, introducing the latest developments in generative AI, the metaverse, large language models, cloud computing, and other fields.

Keywords: NVIDIA, Jen-Hsun Huang, GTC 2023

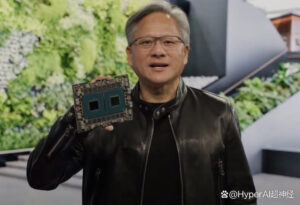

"Don't Miss This Defining Moment in AI." At 23:00 Beijing time on March 21, 2023, Jensen Huang, known to Chinese fans as the "Leather Jacket Swordsman," delivered a keynote on that theme at GTC 2023, saying, "This will be our most important GTC yet." NVIDIA's official Twitter account also released the "AI Wave Top 5" for this year's GTC. What is behind them? Let's take a look at the major announcements from this year's keynote.


AI Wave Top 5

Keyword 1: Generative AI

Generative AI learns the underlying patterns and structure of its training data and uses them to generate new content, such as images, audio, code, text, and 3D models. Professional generative AI tools can boost creators' productivity and help users who are not familiar with the technology. At GTC 2023, NVIDIA announced the NVIDIA AI Foundations cloud services and NVIDIA Picasso.
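To make "learn patterns from training data, then generate new content" concrete, here is a deliberately tiny sketch: a character-level bigram model. It is an illustrative assumption, vastly simpler than the models behind AI Foundations or Picasso, and the names `train_bigram` and `generate` are hypothetical.

```python
import random
from collections import defaultdict


def train_bigram(corpus: str) -> dict[str, list[str]]:
    """'Training': record every character that followed each character in the corpus."""
    transitions: dict[str, list[str]] = defaultdict(list)
    for current, nxt in zip(corpus, corpus[1:]):
        transitions[current].append(nxt)
    return dict(transitions)


def generate(transitions: dict[str, list[str]], start: str, length: int, seed: int = 0) -> str:
    """'Generation': repeatedly sample a successor that was seen during training."""
    rng = random.Random(seed)  # seeded so the output is reproducible
    out = [start]
    for _ in range(length - 1):
        successors = transitions.get(out[-1])
        if not successors:  # this character never had a successor in the corpus
            break
        out.append(rng.choice(successors))
    return "".join(out)


model = train_bigram("generative ai generates new content")
print(generate(model, "g", 20))
```

Every adjacent character pair in the output was observed in the training text, yet the string as a whole is new: the same principle, at toy scale, as the generative models described above.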

AI technology is revolutionizing 3D content creation. At GTC 2023, Jensen Huang announced a new version of Omniverse Audio2Face, a generative AI application.

Omniverse Audio2Face effect


Omniverse Audio2Face applies artificial intelligence to allow 3D artists to quickly create realistic facial animations from audio files, avoiding the typically time-consuming and laborious manual process. Audio2Face now previews Mandarin Chinese language support, along with improved lip sync quality, more robust multi-language support, and new pre-trained models.
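Audio2Face uses trained AI models to drive the face. To illustrate only the shape of the problem (audio samples in, one animation value per video frame out), the sketch below uses a much cruder, non-AI baseline: it maps each frame's loudness to a single jaw-open weight. The function `jaw_open_curve`, the 30 fps default, and the RMS-loudness heuristic are assumptions for illustration, not how Audio2Face works.

```python
import math


def jaw_open_curve(samples: list[float], sample_rate: int, fps: int = 30) -> list[float]:
    """Naive lip-sync baseline: one jaw-open weight in [0, 1] per animation frame.

    Each animation frame covers sample_rate // fps audio samples; its weight is the
    frame's RMS loudness divided by the RMS of the loudest frame.
    """
    hop = max(1, sample_rate // fps)  # audio samples per animation frame
    rms = []
    for start in range(0, len(samples) - hop + 1, hop):  # a trailing partial frame is dropped
        frame = samples[start:start + hop]
        rms.append(math.sqrt(sum(s * s for s in frame) / len(frame)))
    peak = max(rms, default=0.0)
    return [r / peak if peak > 0 else 0.0 for r in rms]


# One second of toy audio at 300 samples/s: silence, then a quiet tone, then a loud one.
audio = [0.0] * 100 + [0.5, -0.5] * 50 + [1.0, -1.0] * 50
print(jaw_open_curve(audio, sample_rate=300, fps=3))  # [0.0, 0.5, 1.0]
```

A real system must also distinguish mouth shapes (an "oo" versus an "ee"), emotion, and the rest of the face, which is why learned models replace hand-written rules like this one.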

World's first generative AI supercomputer for the pharmaceutical industry launched

Japan's Mitsui & Co. announced Tokyo-1, a supercomputing system based on NVIDIA DGX. Tokyo-1 is the world's first generative AI supercomputer for the pharmaceutical industry and will be used for molecular dynamics simulations and generative AI models. The system is expected to go online in the second half of 2023 and will be operated by Xeureka, a subsidiary of Mitsui & Co. Xeureka hopes to use Tokyo-1 to address the Japanese pharmaceutical industry's long-standing lag in drug development.

Tokyo-1 is built on NVIDIA DGX H100. Its first phase includes 16 NVIDIA DGX H100 systems, each equipped with 8 NVIDIA H100 Tensor Core GPUs. Xeureka will continue to scale up the system and offer node access to Japanese industry customers for molecular dynamics simulation, large language model training, quantum chemistry, and AI generation of molecular structures for potential new drugs. Tokyo-1 users will also be able to use NVIDIA BioNeMo drug discovery software and services.
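The GPU count of Tokyo-1's first phase follows directly from the two figures given above; the snippet simply multiplies them out (the variable names are ours).

```python
# Figures from the text: phase one has 16 DGX H100 systems with 8 H100 GPUs each.
DGX_SYSTEMS_PHASE_ONE = 16
GPUS_PER_DGX_H100 = 8

total_gpus = DGX_SYSTEMS_PHASE_ONE * GPUS_PER_DGX_H100
print(f"Tokyo-1 phase one: {total_gpus} H100 GPUs")  # Tokyo-1 phase one: 128 H100 GPUs
```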

Keyword 2: Digital biology

NVIDIA has released a set of generative AI cloud services for customizing AI foundation models to accelerate research in areas such as proteins and therapeutics, genomics, chemistry, biology, and molecular dynamics.

Generative AI models can quickly identify potential drug molecules and, in some cases, design compounds or protein-based therapeutics from scratch. Trained on large datasets of small molecules, proteins, DNA, and RNA sequences, these models can predict the three-dimensional structure of proteins and how well molecules dock with target proteins.


The new BioNeMo™ cloud service provides AI model training and inference to accelerate drug development. It lets researchers fine-tune generative AI applications on their own data and run AI model inference directly in the browser, or integrate it into existing applications through new cloud APIs.

BioNeMo cloud services include pre-trained AI models that help researchers create AI pipelines for drug development and are currently being used for drug design by biopharmaceutical companies such as Evozyne and Insilico Medicine.

New generative AI models connected to BioNeMo services include:

* MegaMolBART generative chemistry model

* ESM1nv protein language model

* OpenFold protein structure prediction model

* AlphaFold2 protein structure prediction model

* DiffDock diffusion generative model for molecular docking

* ESMFold protein structure prediction model

* ESM2 protein language model

* MoFlow generative chemistry model

* ProtGPT-2 language model for generating new protein sequences

Keyword 3: CV

CV-CUDA is an open source GPU acceleration library for cloud-based computer vision, designed to help enterprises build and scale end-to-end, AI-based computer vision and image processing pipelines on GPUs.


Microsoft's Bing visual search engine uses AI and computer vision to search the web for images

(The picture shows a visual search using an image of dog food)

CV-CUDA offloads pre-processing and post-processing steps from the CPU to the GPU, so a single GPU can process four times as many streams for the same workload, at a quarter of the cloud computing cost.
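Why "four times as many streams per GPU" translates into "a quarter of the cost" can be checked with simple arithmetic. The sketch below assumes, hypothetically, that cloud cost scales linearly with the number of GPUs rented; the stream counts and price are made-up illustration values, and only the 4x ratio comes from the text.

```python
import math


def gpus_needed(streams: int, streams_per_gpu: int) -> int:
    """GPUs required to serve a given number of concurrent streams."""
    return math.ceil(streams / streams_per_gpu)


# Hypothetical figures for illustration only.
WORKLOAD_STREAMS = 400
CPU_PREPROCESS_STREAMS_PER_GPU = 10                                   # pre/post-processing on the CPU
GPU_PREPROCESS_STREAMS_PER_GPU = 4 * CPU_PREPROCESS_STREAMS_PER_GPU  # "four times as many streams"
PRICE_PER_GPU_HOUR = 2.0                                              # assumed; cost scales with GPU count

baseline_cost = gpus_needed(WORKLOAD_STREAMS, CPU_PREPROCESS_STREAMS_PER_GPU) * PRICE_PER_GPU_HOUR
offloaded_cost = gpus_needed(WORKLOAD_STREAMS, GPU_PREPROCESS_STREAMS_PER_GPU) * PRICE_PER_GPU_HOUR
print(baseline_cost, offloaded_cost, offloaded_cost / baseline_cost)  # 80.0 20.0 0.25
```

The quarter-cost result holds only under the linear-cost assumption; real bills also include CPUs, networking, and storage.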

The CV-CUDA library provides developers with more than 30 high-performance computer vision algorithms, native Python APIs, and zero-copy integration with machine learning frameworks such as PyTorch, TensorFlow2, ONNX, and TensorRT. The result is higher throughput, lower computing costs, and a smaller carbon footprint for cloud AI businesses.

Since the CV-CUDA alpha release, more than 500 companies have reached out with more than 100 use cases.

Keyword 4: Autonomous Machines

In 2021, NVIDIA introduced cuOpt, real-time route optimization software that lets enterprises adapt to real-time data. cuOpt optimizes delivery routes by analyzing billions of possible moves per second.
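cuOpt's own algorithms are not described in this article. To illustrate what "optimizing a route by evaluating possible moves" means in the simplest setting, the sketch below applies 2-opt, a classic textbook local-search heuristic: it keeps reversing a segment of a closed tour whenever that makes the tour shorter. This is a swapped-in technique on a single tour, not cuOpt's method; the function names and coordinates are hypothetical.

```python
import math

Point = tuple[float, float]


def route_length(route: list[int], pts: list[Point]) -> float:
    """Length of a closed tour that returns from the last stop to the first."""
    return sum(
        math.dist(pts[route[i]], pts[route[(i + 1) % len(route)]])
        for i in range(len(route))
    )


def two_opt(route: list[int], pts: list[Point]) -> list[int]:
    """First-improvement 2-opt: reverse route[i..j] whenever that shortens the tour.

    Position 0 (the depot) is never moved, so the tour keeps its starting point.
    """
    best = route[:]
    improved = True
    while improved:
        improved = False
        for i in range(1, len(best) - 1):
            for j in range(i + 1, len(best)):
                candidate = best[:i] + best[i:j + 1][::-1] + best[j + 1:]
                if route_length(candidate, pts) < route_length(best, pts) - 1e-9:
                    best, improved = candidate, True
    return best


# Four stops on a unit square, initially visited in a crossing order.
stops = [(0.0, 0.0), (1.0, 0.0), (1.0, 1.0), (0.0, 1.0)]
tour = two_opt([0, 2, 1, 3], stops)
print(tour, route_length(tour, stops))  # [0, 1, 2, 3] 4.0
```

Each candidate reversal is one "move"; production solvers evaluate far richer move types across many vehicles at once, which is where GPU parallelism pays off.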

cuOpt is now the center of a thriving partner ecosystem that includes system integrators and service providers, logistics and transportation software vendors, optimization software experts, and location service providers. cuOpt set three world records on the Li & Lim pickup and delivery benchmarks, a collection of benchmark instances proposed by Li and Lim for measuring how efficient routes are.
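The Li & Lim instances are pickup-and-delivery problems: each request must be picked up before it is delivered, by the same vehicle, without exceeding the vehicle's capacity, and the instances additionally impose time windows. The sketch below checks the precedence and capacity rules for a single route. It is our own illustration: the `Stop` type and `is_feasible` are hypothetical, and time windows are deliberately left out.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Stop:
    request: int  # id of the pickup-delivery pair this stop belongs to
    kind: str     # "pickup" or "delivery"
    load: int     # units loaded at the pickup and unloaded at the delivery


def is_feasible(route: list[Stop], capacity: int) -> bool:
    """Check precedence, pairing and capacity for one vehicle route (no time windows)."""
    picked: set[int] = set()
    delivered: set[int] = set()
    on_board = 0
    for stop in route:
        if stop.kind == "pickup":
            if stop.request in picked:
                return False  # the same request picked up twice
            picked.add(stop.request)
            on_board += stop.load
        elif stop.kind == "delivery":
            if stop.request not in picked or stop.request in delivered:
                return False  # delivered before pickup, or delivered twice
            delivered.add(stop.request)
            on_board -= stop.load
        else:
            raise ValueError(f"unknown stop kind: {stop.kind!r}")
        if on_board > capacity:
            return False  # vehicle overloaded at this stop
    return picked == delivered  # every pickup must be delivered on the same route


route = [Stop(1, "pickup", 3), Stop(2, "pickup", 2), Stop(1, "delivery", 3), Stop(2, "delivery", 2)]
print(is_feasible(route, capacity=5), is_feasible(route, capacity=4))  # True False
```

A benchmark solver searches for the shortest set of routes in which every route passes checks like these, which is what makes small gains on long-studied instances hard to find.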


Researchers first charted the best routes for the Li & Lim benchmarks two decades ago and have since invented algorithms that set and reset the world's best-known solutions, with previous record holders mostly making small adjustments to earlier routes. The routes found by cuOpt differ from those of previous record holders. cuOpt's improvement was 7.2 times larger than the previously recorded improvement on the benchmark, and 26.6 times larger than the improvement achieved by the previous record-breaking result.

Keyword 5: Conversational AI

Companies across industries want to leverage interactive avatars to enhance digital experiences. But creating them is a complex and time-consuming process that requires applying advanced artificial intelligence models that can see, hear, understand, and communicate with users.


To simplify this process, NVIDIA provides developers with real-time AI solutions through Omniverse Avatar Cloud Engine (ACE), a cloud-native suite of microservices for end-to-end development of interactive avatars. NVIDIA is continuously improving it to give users the tools they need to easily design and deploy a variety of avatars, from interactive chatbots to intelligent digital humans.
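ACE is described as a suite of microservices. As a rough, hypothetical picture of that architecture (not ACE's actual services or APIs), the sketch below chains four stand-in stages, hearing, understanding, speaking, and animating, each of which would be a separately deployed service in a real system. The stage functions are trivial placeholders we made up.

```python
from typing import Callable

Stage = Callable[[dict], dict]


# Placeholder stages. In a real deployment each would call a separate service
# (speech recognition, a dialogue/language model, text-to-speech, face animation).
def speech_to_text(state: dict) -> dict:
    # Stand-in: the "audio" here is just UTF-8 encoded text.
    return {**state, "transcript": state["audio"].decode("utf-8")}


def dialogue(state: dict) -> dict:
    return {**state, "reply": f"You said: {state['transcript']}"}


def text_to_speech(state: dict) -> dict:
    return {**state, "reply_audio": state["reply"].encode("utf-8")}


def animate_face(state: dict) -> dict:
    # One placeholder mouth shape per spoken word.
    return {**state, "mouth_shapes": ["open"] * len(state["reply"].split())}


def run_pipeline(stages: list[Stage], state: dict) -> dict:
    """Pass the conversation state through each stage in order."""
    for stage in stages:
        state = stage(state)
    return state


turn = run_pipeline([speech_to_text, dialogue, text_to_speech, animate_face], {"audio": b"hello avatar"})
print(turn["reply"], len(turn["mouth_shapes"]))  # You said: hello avatar 4
```

Keeping each capability behind its own interface is what lets a team swap one stage (say, a different language model) without touching the others, which is the main appeal of a microservices design.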

AT&T is planning to use Omniverse ACE and the Tokkio AI avatar workflow to build, customize, and deploy virtual assistants for customer service and employee help desks. Working with Quantiphi, one of NVIDIA's service delivery partners, AT&T is developing interactive avatars that can provide 24/7 support across regions in local languages. This helps the company reduce costs while providing a better experience for its global employees. Beyond customer service, AT&T plans to build digital humans for a variety of other use cases across the company.

In addition to the five keywords above that ran through the keynote, a few other things at GTC 2023 are worth noting, such as the Grace CPU Superchip that Jensen Huang "sold" live on stage.

Grace CPU: Paving the way for energy saving

In actual tests, the Grace CPU Superchip delivers 2 times the performance of x86 processors at the same power envelope across major data center CPU applications, which means a data center can handle 2 times the peak traffic or cut its electricity bill by up to half.
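The two readings of "2 times the performance at the same power" (twice the peak traffic, or half the power) can be worked through with a small sketch. It assumes, hypothetically, that every server draws the same power and that fleet power scales with server count; the capacities and peak load are made-up values, and only the 2x ratio comes from the text.

```python
import math


def servers_for(peak_load: float, capacity_per_server: float) -> int:
    """Servers needed so that the fleet can absorb the peak load."""
    return math.ceil(peak_load / capacity_per_server)


# Hypothetical numbers; only the 2x ratio comes from the keynote claim.
POWER_PER_SERVER_KW = 1.0           # same power envelope for both platforms
X86_CAPACITY = 1_000                # requests/s per x86 server
GRACE_CAPACITY = 2 * X86_CAPACITY   # 2x performance at the same power

PEAK = 100_000  # requests/s

# Reading 1: serve the same peak with fewer servers, hence less power.
x86_power_kw = servers_for(PEAK, X86_CAPACITY) * POWER_PER_SERVER_KW
grace_power_kw = servers_for(PEAK, GRACE_CAPACITY) * POWER_PER_SERVER_KW

# Reading 2: keep the same number of servers and absorb twice the peak.
fleet = servers_for(PEAK, X86_CAPACITY)
grace_peak_same_fleet = fleet * GRACE_CAPACITY

print(x86_power_kw, grace_power_kw, grace_peak_same_fleet)  # 100.0 50.0 200000
```

In practice, cooling, memory, and networking overheads mean the saving is "up to" half rather than exactly half.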

Three major CPU innovations:

* In a single die, the Grace CPU connects 72 Arm Neoverse V2 cores with a fast fabric that delivers 3.2 TB/s of fabric bisection bandwidth, a standard measure of throughput.

* Two of these dies are connected into a superchip package with the NVIDIA NVLink-C2C interconnect, which provides 900 GB/s of bandwidth.

* Grace CPU is the first data center CPU to use server-grade LPDDR5X memory. It delivers up to 50% more memory bandwidth at similar cost but one-eighth the power of typical server memory, and its compact size enables 2 times the density of typical card-based memory designs.


Jensen Huang showing the Grace Superchip live on stage
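Two figures follow directly from the Grace innovations listed above: the core count of a two-die superchip, and what "up to 50% more bandwidth at one-eighth the power" implies for memory bandwidth per watt. The second number is our own derivation, an upper bound relative to "typical server memory," not a figure NVIDIA quoted; exact fractions are used to avoid rounding noise.

```python
from fractions import Fraction

# Figures from the innovations listed above.
CORES_PER_DIE = 72
DIES_PER_SUPERCHIP = 2                  # two dies joined by NVLink-C2C
BANDWIDTH_VS_TYPICAL = Fraction(3, 2)   # up to 50% more bandwidth than typical server memory
POWER_VS_TYPICAL = Fraction(1, 8)       # one-eighth the power of typical server memory

superchip_cores = CORES_PER_DIE * DIES_PER_SUPERCHIP
bandwidth_per_watt_gain = BANDWIDTH_VS_TYPICAL / POWER_VS_TYPICAL  # best-case ratio
print(superchip_cores, bandwidth_per_watt_gain)  # 144 12
```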

Tests found that, compared with leading x86 data center CPUs at the same power consumption, with the Grace CPU:

* Microservices run 2.3 times faster;

* Memory-intensive data processing runs 2 times faster;

* Computational fluid dynamics runs 1.9 times faster.

NVIDIA: The Engine of the AI World

Since the end of last year, ChatGPT has pushed generative AI and large language models to the forefront. In Jensen Huang's view, ChatGPT has opened a new era, AI's "iPhone moment." In the keynote, he also acknowledged that "the impressive capabilities of generative AI have created a sense of urgency for companies to reimagine their products and business models." NVIDIA is clearly pursuing breakthroughs on multiple fronts: from AI training to deployment, from semiconductors to software libraries, and from systems to cloud services.

Today, the global NVIDIA ecosystem includes 4 million developers, 40,000 companies, and 14,000 startups in NVIDIA Inception. As Jensen Huang put it in an interview with CNBC on the eve of GTC 2023, this is the kind of company NVIDIA wants to be:

Because of what we do, we could make what is barely possible possible, or we could make something that is very energy consuming very energy efficient, or we could take something that costs a lot of money and make it more affordable.
