Command Palette

Search for a command to run...

GPT-4 Aims at Multimodality, With Google PaLM-E in the Past, Is the AI Landscape Going to Change?

Contents at a glance:GPT-4 was like a nuclear bomb that detonated the entire technology circle on March 14. In the center of this nuclear explosion, the highly anticipated GPT-4 flexed its muscles, while on the periphery of the nuclear explosion, Google and other gods watched with eager eyes and made constant moves.

March 14th, Eastern Time,OpenAI has launched the large-scale multimodal model GPT-4. GPT-4 is the technology behind ChatGPT and Bing AI chatbots. OpenAI said that GPT-4 can accept image and text input and output text content, although its ability in many real-world scenarios is not as good as that of humans.However, it has achieved human-level performance on a variety of professional and academic benchmarks.

GPT-4 suddenly landed: three features attracted attention

This update of GPT-4 has three major new features that have been greatly improved:The text input limit is improved, and it has the ability to recognize images and stronger reasoning capabilities.

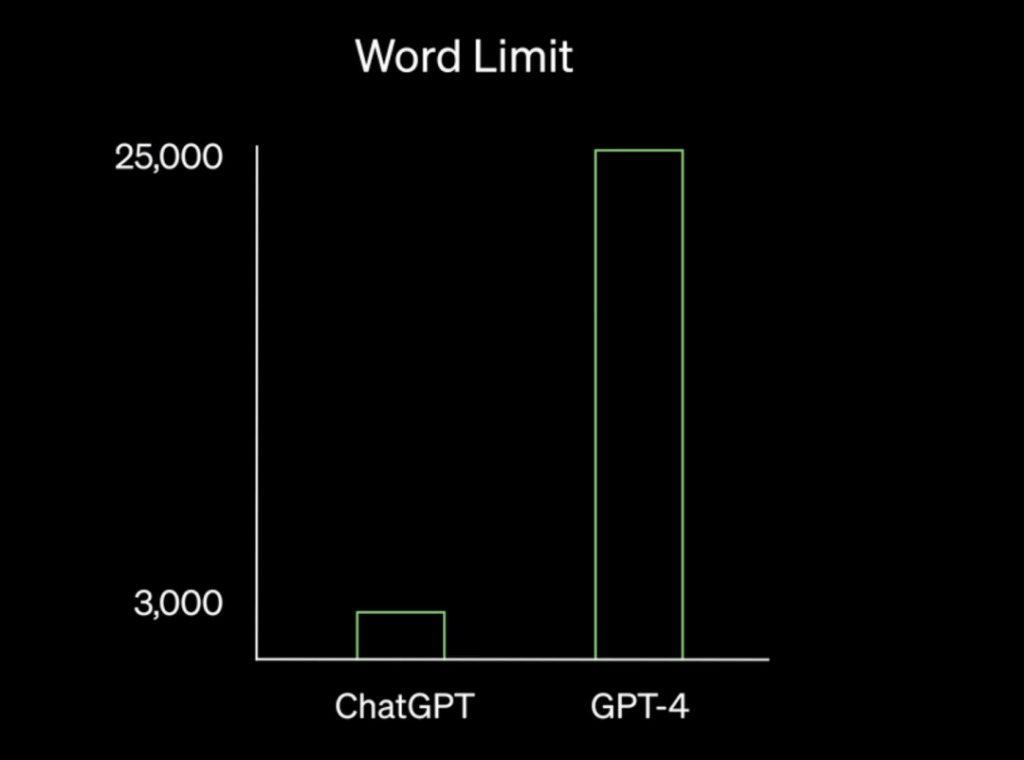

Longer and richer content

According to OpenAI,GPT-4 is capable of processing texts of more than 25,000 words. Medium writer Michael King said this feature allows for the creation of long-form content, such as articles and blogs, which can save time and resources for businesses and individuals.

In addition, GPT-4's processing of large amounts of text makes extended conversations possible, which means that in industries such as the service industry, AI chatbots can provide more detailed and insightful responses to customer inquiries. At the same time, this feature can also perform efficient document search and analysis, making it a beneficial tool for industries such as finance, law, and healthcare.

More reasoning ability

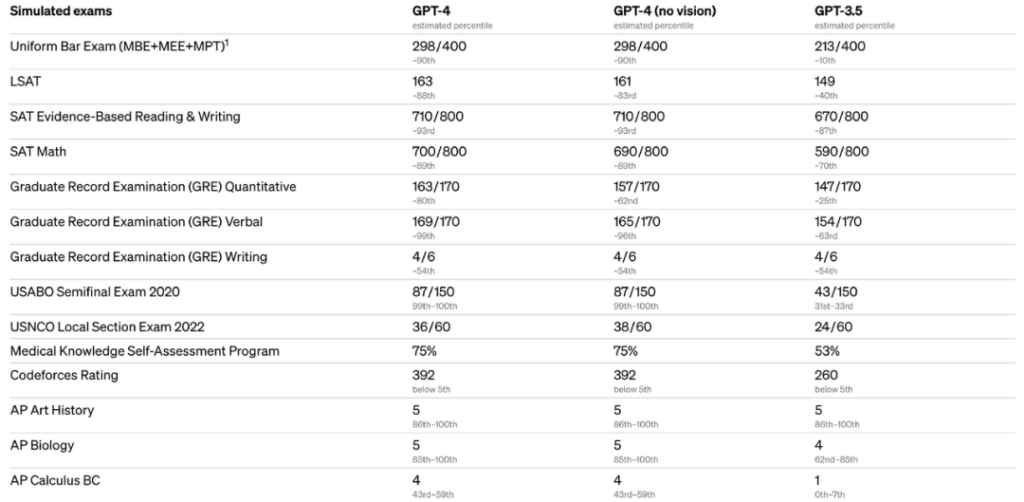

OpenAI said that the answers generated by GPT-4 have fewer errors and are 40% more accurate than GPT-3.5. At the same time, there is not much difference between GPT-3.5 and GPT-4 when chatting, but if the task is complex enough, GPT-4 is more reliable, more creative and can handle more subtle instructions than GPT-3.5. It is reported that GPT-4 has participated in a variety of benchmark tests.Among them, candidates who scored higher than 88% in Uniform Bar Exam, LSAT and other exams.

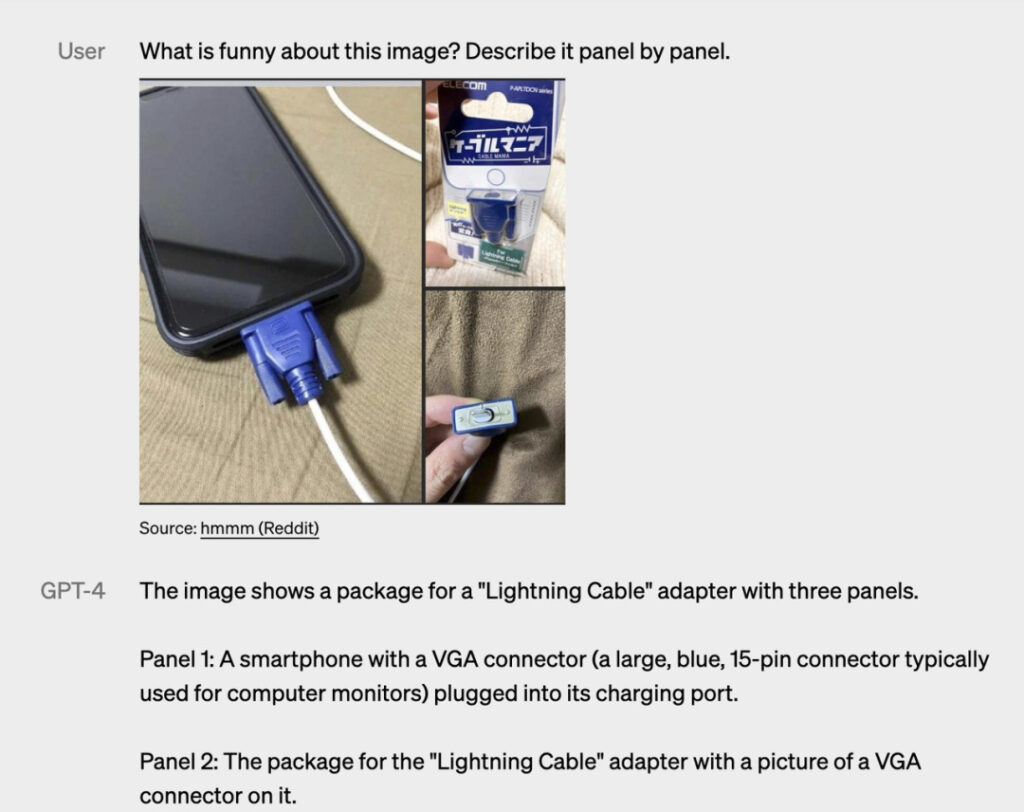

Bright new feature: multi-modality

In addition to better answering skills,Another outstanding feature of GPT-4 is that it can accept input in the form of text and images.Image types can be photos, charts, screenshots, etc. For example:

Question: What is funny about this picture? Describe it point by point.

GPT-4 answer: The image shows a "Lightning Cable" adapter containing three pictures.

Figure 1: A smartphone with a VGA connector (a large, blue, 15-pin connector commonly used on computer monitors) plugged into its charging port.

Figure 2: The packaging for the “Lightning Cable” adapter, with an image of a VGA connector.

Figure 3: A close-up of the VGA connector, with a small Lightning connector at the end (used for charging iPhones and other Apple devices).

The humor in this image comes from the absurdity of plugging a large, outdated VGA connector into a small, modern smartphone charging port.

Please note that this feature is still in the researcher preview version.It is not open to the public.OpenAI has not yet revealed when it will be open.

“It’s not perfect”

While introducing the new features of GPT-4, OpenAI also acknowledged some of its limitations. Like previous versions of GPT,GPT-4 still has problems such as social biases, hallucinations, and adversarial prompts.In other words, GPT-4 is not perfect at the moment. But OpenAI also said that these are the problems they are working hard to solve.

Multimodal models: Google takes the lead in serving appetizers

Although multimodality is a highlight of GPT-4, it must be said thatGPT-4 isn’t the only multimodal model.

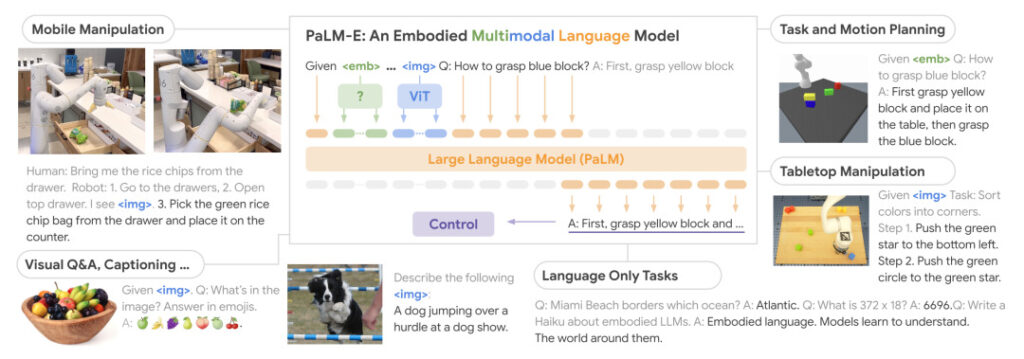

Microsoft AI technical expert Holger Kenn introduced the so-called multimodal model, which can not only translate text into images, but also into audio and video. Earlier this month,Google has released the largest visual language model in history - PaLM-E (Pathways Language Model with Embodied),An embedded multimodal language model for robotics. PalM-E combines the 540B PaLM language model with the 22B ViT vision model, resulting in 562B parameters.

The researchers conducted end-to-end training on multiple tasks, including robot operation rules, visual question answering, and image captioning. The evaluation results showed that the model can effectively solve various reasoning tasks and exhibit "positive transfer" on different observation modalities and multiple entities. In addition to being trained on robot tasks, the model also performs well on visual-language tasks.

In the demonstration example, when a human issues the command "Give me the potato chips in the drawer", PaLM-E can generate an action command for a robot equipped with a robotic arm and execute the action. This is achieved by analyzing the data from the robot's camera without the need to pre-process the scene.

In addition to giving the robot the above capabilities, PaLM-E itself is also a visual language model that can tell stories based on pictures or answer questions based on the content of the pictures.

Is the multimodal model equivalent to the release of the iPhone?

It seems that the entire AI field is now engaged in an arms race. Faced with the explosive popularity of ChatGPT, Google urgently released Bard to press on, and recently fought back again, opening its own large language model API "PaLM API", and also released a tool MakerSuite to help developers quickly build AI programs.

Although OpenAI CEO Sam Altman remained mysterious about the release date of GPT-4 in an interview not long ago, claiming that "we want to make sure it is safe and responsible when it is released", the sudden emergence of GPT-4It is inevitable that people speculate whether it is because of the constant counterattacks and encirclements by giants such as Google that it is forced to accelerate its pace.

It is worth noting that Sam also mentioned a point in the interview.The next evolutionary stage of artificial intelligence is the arrival of multimodal large models.“I think that’s going to be a big trend… more generally, these powerful models are going to be one of the true new technology platforms that we haven’t had since mobile.”

Whether the release of GPT-4 marks the official arrival of the era of multimodal models remains to be seen, but its powerful capabilities have begun to make many developers and even ordinary people worry about whether they will be replaced by it. Perhaps the public remarks made by Microsoft Germany CEO Marianne Janik a few days ago can serve as an answer to this question. She believes that the current development of AI is like the "appearance of the iPhone" in the past. At the same time, she also made it clear thatIt’s not about replacing jobs, it’s about completing repetitive tasks in a different way than before.

「Change will cause traditional working models to change, but we should also see that this change has added many new possibilities, and therefore, exciting new careers will emerge."

Reference Links:

[1]https://openai.com/research/gpt-4

[2]https://venturebeat.com/ai/openai-releases-highly-anticipated-gpt-4-model-in-surprise-announcement/

[3] https://palm-e.github.io/

[4]https://medium.com/@neonforge