
DeepMind Releases DreamerV3, a General Reinforcement Learning Algorithm That Can Teach Itself to Pick up Diamonds

Minecraft is not only played by humans. The well-known AI technology company DeepMind has also developed a dedicated AI to play Minecraft!

Contents at a glance: Reinforcement learning is an interdisciplinary field whose essence is automatic, continuous decision-making. This article introduces DeepMind's latest research result, DreamerV3, a general algorithm that broadens the range of problems reinforcement learning can be applied to.
Keywords: Reinforcement Learning, DeepMind, General Algorithm

On January 12, Beijing time, DeepMind’s official Twitter account announced DreamerV3, the first general algorithm that can collect diamonds in Minecraft from scratch without using human data. This solves another important challenge in the field of AI.

Reinforcement learning has a scalability problem, and its development calls for general algorithms

Reinforcement learning enables computers to solve tasks through interaction, as when AlphaGo defeated humans at Go and OpenAI Five defeated amateur human players at Dota 2.

However, applying these algorithms to new scenarios, such as moving from board games to video games or robotics tasks, requires engineers to keep developing specialized algorithms for challenges such as continuous control, sparse rewards, image inputs, and spatial environments.

Tuning these algorithms takes considerable expertise and computing resources, which greatly hinders scaling them to new problems. Creating a general algorithm that can learn to master new domains without tuning has therefore become an important route to broadening the applications of reinforcement learning and solving decision-making problems.

As a result, DreamerV3, jointly developed by DeepMind and the University of Toronto, came into being.

DreamerV3: A general algorithm based on a world model

DreamerV3 is a general and scalable algorithm based on a world model. With fixed hyperparameters, it can be applied to a wide range of domains and outperforms specialized algorithms.

These domains include continuous and discrete actions, visual and low-dimensional inputs, 2D and 3D worlds, and different data budgets, reward frequencies, and reward scales.

DreamerV3 consists of 3 neural networks trained simultaneously from replayed experience without sharing gradients:

1. World model: predicts the future outcomes of potential actions

2. Critic: judges the value of each situation

3. Actor: learns to reach valuable situations

As shown in the figure above, the world model encodes sensory inputs into discrete representations $z_t$, which are predicted by a sequence model with recurrent state $h_t$ given actions $a_t$. The inputs are reconstructed to provide a learning signal that shapes the representations.
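For reference, the components described above can be written as a recurrent state-space model. The summary below follows the DreamerV3 paper's notation as we understand it, with $x_t$ the observation and $\phi$ the world-model parameters; treat it as a reading aid and check the paper for exact definitions.

```latex
\begin{aligned}
&\text{Sequence model:}     && h_t = f_\phi(h_{t-1}, z_{t-1}, a_{t-1}) \\
&\text{Encoder:}            && z_t \sim q_\phi(z_t \mid h_t, x_t) \\
&\text{Dynamics predictor:} && \hat{z}_t \sim p_\phi(\hat{z}_t \mid h_t) \\
&\text{Reward predictor:}   && \hat{r}_t \sim p_\phi(\hat{r}_t \mid h_t, z_t) \\
&\text{Continue predictor:} && \hat{c}_t \sim p_\phi(\hat{c}_t \mid h_t, z_t) \\
&\text{Decoder:}            && \hat{x}_t \sim p_\phi(\hat{x}_t \mid h_t, z_t)
\end{aligned}
```

The dynamics predictor is what lets the agent "imagine": it forecasts $\hat{z}_t$ from $h_t$ alone, without seeing the next observation.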

The actor and critic learn from trajectories of abstract representations predicted by the world model.
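In Dreamer-style agents, the critic is regressed toward λ-returns computed on these imagined trajectories, and the actor is trained to increase them. Below is a minimal stdlib sketch of the λ-return recurrence. It assumes the world model has already produced predicted rewards and continue flags and the critic has produced value estimates. The function name and the toy numbers are hypothetical, and the default γ and λ are illustrative rather than quoted from the paper.

```python
from typing import List


def lambda_returns(rewards: List[float], continues: List[float], values: List[float],
                   gamma: float = 0.99, lam: float = 0.95) -> List[float]:
    """Return [R_0, ..., R_{T-1}] for an imagined trajectory of length T.

    rewards[t], continues[t]: world-model predictions for the transition after step t.
    values: critic estimates v_0 ... v_T (one more entry than rewards).
    Recurrence: R_t = r_t + gamma * c_t * ((1 - lam) * v_{t+1} + lam * R_{t+1}),
    bootstrapped with R_T = v_T.
    """
    T = len(rewards)
    if len(continues) != T or len(values) != T + 1:
        raise ValueError("need len(continues) == len(rewards) and len(values) == len(rewards) + 1")
    returns = [0.0] * T
    next_return = values[T]  # bootstrap from the critic at the imagination horizon
    for t in reversed(range(T)):
        # Blend the one-step bootstrap (v_{t+1}) with the longer return (R_{t+1});
        # a predicted continue flag of 0 cuts off everything after step t.
        next_return = rewards[t] + gamma * continues[t] * (
            (1.0 - lam) * values[t + 1] + lam * next_return)
        returns[t] = next_return
    return returns


# Hypothetical 3-step imagined trajectory: reward arrives at the last step.
targets = lambda_returns(rewards=[0.0, 0.0, 1.0],
                         continues=[1.0, 1.0, 1.0],
                         values=[0.2, 0.3, 0.5, 0.0])
```

With `lam=0` the targets reduce to one-step TD targets, and with `lam=1` they become Monte Carlo returns bootstrapped only at the horizon. λ trades off between the two.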

To handle tasks across domains, these components need to adapt to different signal magnitudes and robustly balance the terms in their objectives.
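Two techniques from the DreamerV3 paper illustrate what this means in practice. The symlog transform squashes prediction targets of very different magnitudes, and "free bits" clip the world model's KL terms from below so that neither term dominates. The sketch below is our own stdlib illustration, not the paper's code. The weights 0.5 and 0.1 and the 1-nat threshold are the values we understand the paper to use and should be treated as assumptions. The function names are hypothetical.

```python
import math


def symlog(x: float) -> float:
    """Compress large magnitudes symmetrically: sign(x) * ln(|x| + 1)."""
    return math.copysign(math.log1p(abs(x)), x)


def symexp(x: float) -> float:
    """Inverse of symlog: sign(x) * (exp(|x|) - 1)."""
    return math.copysign(math.expm1(abs(x)), x)


def kl_terms_with_free_bits(kl_dyn: float, kl_rep: float,
                            beta_dyn: float = 0.5, beta_rep: float = 0.1,
                            free_nats: float = 1.0) -> float:
    """Weighted KL terms, each clipped from below at `free_nats` (assumed values).

    kl_dyn: KL(stop_grad(posterior) || prior), which trains the dynamics predictor.
    kl_rep: KL(posterior || stop_grad(prior)), which trains the representations.
    Here both are plain numbers; a real agent computes them from the two
    distributions with stop-gradients applied. Below the threshold the clipped
    term is constant, so it stops pushing the representations toward the prior.
    """
    return beta_dyn * max(free_nats, kl_dyn) + beta_rep * max(free_nats, kl_rep)


# A network can regress symlog(target) and decode with symexp, so rewards of
# size 1 and 1000 produce errors on comparable scales.
for raw in (1.0, 1000.0, -50.0):
    squashed = symlog(raw)
    recovered = symexp(squashed)
```

The symlog transform is roughly the identity near zero and logarithmic for large values, which is why it can stand in for manual reward scaling across domains.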

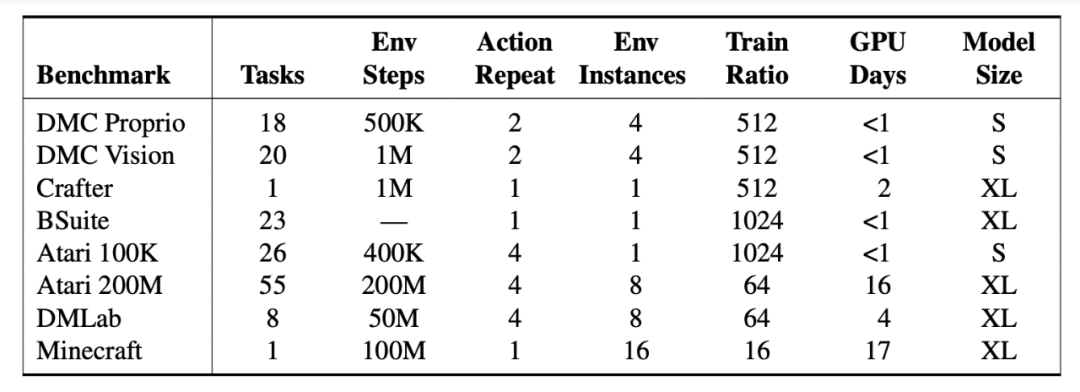

The researchers tested DreamerV3 on more than 150 tasks with fixed hyperparameters and compared it with the best methods reported in the literature. The experiments show that DreamerV3 is highly general and scalable across tasks in different domains.

DreamerV3 achieved strong results on 7 benchmarks and set new state-of-the-art results on continuous control from states and from images, on BSuite, and on Crafter.

However, DreamerV3 still has limitations. For example, within 100 million environment steps it collects diamonds only occasionally, rather than in every episode as human players do.

Standing on the shoulders of giants: a look back at the Dreamer family

First generation: Dreamer

Release time: December 2019

Participating Institutions: University of Toronto, DeepMind, Google Brain

Paper address: https://arxiv.org/pdf/1912.01603.pdf

Algorithm Introduction:

Dreamer is a reinforcement learning agent that can solve long-horizon tasks from images using only latent imagination.

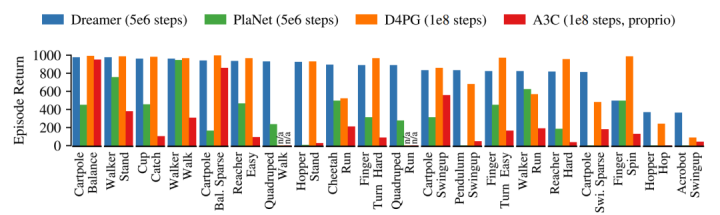

It uses a learned world model to learn behaviors efficiently by backpropagating through the model's predictions. On 20 challenging visual control tasks, Dreamer outperformed the mainstream methods of the time in data efficiency, computation time, and final performance.
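To make "learning behaviors by differentiating through model predictions" concrete, the toy below rolls out a hypothetical 1-D linear "world model" (s' = A·s + B·u) under a linear policy u = k·s. It then improves k by gradient ascent on the imagined return, without touching a real environment. The dynamics, reward, policy, and function names are all assumptions for illustration. For brevity we propagate derivatives forward (forward-mode) instead of using reverse-mode backpropagation; both give the same gradient here. Dreamer itself uses neural networks in a learned latent space plus value bootstrapping.

```python
from typing import Tuple


def imagined_return_and_grad(k: float, s0: float = 1.0, horizon: int = 5,
                             A: float = 1.0, B: float = 1.0) -> Tuple[float, float]:
    """Roll out the toy model under u = k * s and return (G, dG/dk).

    The derivative of each predicted state with respect to k is pushed
    forward through every imagined step alongside the state itself.
    """
    s, ds = s0, 0.0  # state and d(state)/dk; the start state does not depend on k
    G, dG = 0.0, 0.0
    for _ in range(horizon):
        u = k * s
        du = s + k * ds  # product rule for u = k * s
        # Model prediction and its derivative (right side uses the old s, ds).
        s, ds = A * s + B * u, A * ds + B * du
        G += -s * s  # toy reward: keep the state near zero
        dG += -2.0 * s * ds
    return G, dG


def train_policy(k: float = 0.0, lr: float = 0.01, steps: int = 300) -> float:
    """Gradient ascent on the imagined return only."""
    for _ in range(steps):
        _, grad = imagined_return_and_grad(k)
        k += lr * grad
    return k


# With A = B = 1 the state is driven to zero when k = -1, so training should
# move k from 0 toward -1.
learned_k = train_policy()
```

The key property is that the policy gradient flows through the model's own predictions (`ds` depends on every earlier step), which is what allows Dreamer to improve behavior from imagined rollouts.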

Dreamer inherits PlaNet’s data efficiency while surpassing the asymptotic performance of the best model-free agent at the time. After $5 \times 10^6$ environment steps, Dreamer’s average performance across tasks reached 823, compared with only 332 for PlaNet, while the best model-free agent, D4PG, reached 786 after $10^8$ steps.
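Put as ratios, these figures mean that D4PG used 20 times as many environment steps as Dreamer, and that Dreamer scored roughly 2.5 times as high as PlaNet at the same step count:

```latex
\frac{10^8}{5 \times 10^6} = 20, \qquad \frac{823}{332} \approx 2.48
```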

Second generation: DreamerV2

Release time: October 2020

Participating Institutions: Google Research, DeepMind, University of Toronto

Paper address: https://arxiv.org/pdf/2010.02193.pdf

Algorithm Introduction:

DreamerV2 is a reinforcement learning agent that learns behaviors from predictions in a compact latent space of a world model.

Note: The world model uses discrete representations and is trained separately from the policy.
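As we read the DreamerV2 paper, each discrete representation is a vector of several categorical variables (32 variables with 32 classes each), trained with straight-through gradients. The pure-Python sketch below shows only the forward step: sampling one class per variable and concatenating the one-hot vectors. The function name, the logits, and the sizes in the usage lines are hypothetical. The straight-through gradient needs an autodiff framework and is only described in a comment.

```python
import math
import random
from typing import List


def sample_categorical_latent(logits: List[List[float]], rng: random.Random) -> List[float]:
    """Sample one class per categorical variable; return concatenated one-hot vectors.

    logits[i] holds the unnormalized log-probabilities of variable i.
    In training, DreamerV2 passes gradients "straight through" the sample
    (forward: one-hot sample; backward: gradient of the probabilities). That
    requires an autodiff library and is omitted from this sketch.
    """
    latent: List[float] = []
    for group in logits:
        m = max(group)  # subtract the max for numerical stability
        weights = [math.exp(l - m) for l in group]  # unnormalized softmax probabilities
        idx = rng.choices(range(len(group)), weights=weights, k=1)[0]
        one_hot = [0.0] * len(group)
        one_hot[idx] = 1.0
        latent.extend(one_hot)
    return latent


# Hypothetical example: 32 variables x 32 classes with uniform logits,
# giving a 1024-dimensional sparse latent with exactly 32 ones.
rng = random.Random(0)
z = sample_categorical_latent([[0.0] * 32 for _ in range(32)], rng)
```

One appeal of this design is that a sparse, discrete code can represent multi-modal predictions (several distinct possible futures) more naturally than a single Gaussian.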

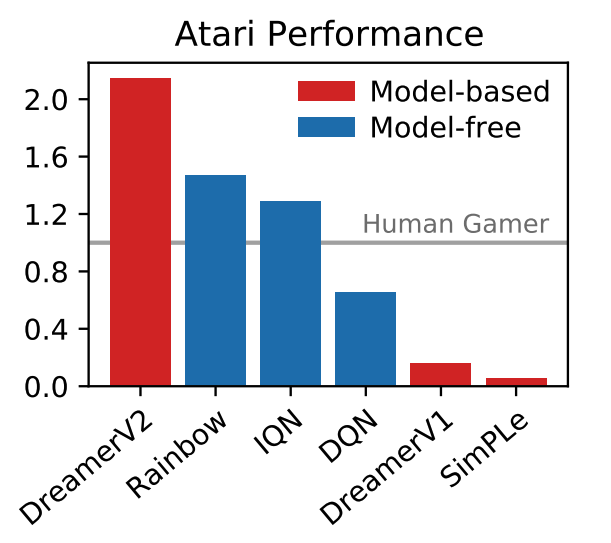

DreamerV2 is the first agent to achieve human-level performance on the Atari benchmark of 55 tasks by learning behaviors inside a separately trained world model. With the same computational budget and wall-clock time, DreamerV2 reaches 200 million frames and surpasses the final performance of the top single-GPU agents IQN and Rainbow.

DreamerV2 also works on tasks with continuous actions. It learns an accurate world model of a complex humanoid robot and solves standing up and walking from pixel inputs alone.

Twitter users fill the comment section with memes

After DreamerV3 was announced, many users joked in the replies to DeepMind’s tweet.

Liberate humanity and never have to play Minecraft again.

Stop playing games and do something real! @DeepMind and CEO Demis Hassabis

The ultimate boss of "Minecraft", the Ender Dragon, is trembling.

In recent years, Minecraft has become a focus of reinforcement learning research, and several international competitions centered on collecting diamonds in Minecraft have been held.

Solving this challenge without human data is widely considered a milestone for artificial intelligence. Because this procedurally generated open-world environment combines sparse rewards, hard exploration, and long time horizons, previous methods had to rely on human data or tutorials.

DreamerV3 is the first algorithm to teach itself to collect diamonds in Minecraft entirely from scratch, further broadening the applications of reinforcement learning. As the commenters joked, DreamerV3 is already a mature general algorithm. It is time for it to level up, fight monsters on its own, and take on the ultimate boss, the Ender Dragon!

View original link: DeepMind releases DreamerV3, a general reinforcement learning algorithm that can teach itself to pick up diamonds

Follow HyperAI to learn more interesting AI algorithms and applications. We also update tutorials regularly, so let’s learn and improve together!