TorchX and Ax Integration: More Efficient Multi-Objective Neural Architecture Search

The purpose of neural architecture search (NAS) is to discover the best architecture for a neural network. The integration of TorchX and Ax supports multi-objective exploration of neural architectures. This article shows how to run a fully automated neural architecture search using multi-objective Bayesian NAS.

Multi-objective optimization in Ax can effectively explore the tradeoffs that arise in neural architecture search, such as the tradeoff between model performance and model size or latency.

This approach has been successfully applied by Meta in various products such as On-Device AI.

This article provides an end-to-end tutorial that uses TorchX to run the search.

Introduction to Ax

Neural networks continue to grow in both size and complexity. Developing state-of-the-art architectures is often a tedious and time-consuming process that requires domain expertise and extensive engineering effort.

To overcome these challenges, several neural architecture search (NAS) methods have been proposed to automatically design well-performing architectures without a human in the loop (HITL).

Despite their low sample efficiency, naive methods such as random search and grid search remain popular for hyperparameter optimization and NAS. A study of papers at NeurIPS 2019 and ICLR 2020 found that 80% of NeurIPS papers and 88% of ICLR papers tuned their ML model hyperparameters using manual tuning, random search, or grid search.

Since model training is often time-consuming and may require a lot of computing resources, it is very important to minimize the number of configurations evaluated.

Ax is a general tool for black-box optimization that allows users to use state-of-the-art algorithms such as Bayesian optimization in a sample-efficient way while exploring large search spaces.

Meta uses Ax in a variety of areas, including hyperparameter tuning, NAS, determining optimal product settings through large-scale A/B testing, infrastructure optimization, and designing cutting-edge AR/VR hardware.

In many NAS applications, there is a natural tradeoff between multiple metrics of interest. For example, when deploying a model on a device, one would want to maximize model performance (such as accuracy) while minimizing competing metrics such as power consumption, inference latency, or model size to meet deployment constraints.

In many cases, a small decrease in model performance is an acceptable price for a significant reduction in computational requirements or prediction latency (in some cases it is even possible to improve accuracy and reduce latency at the same time).

A key enabler of Sustainable AI is a principled approach to effectively explore the implications of this trade-off.
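To make the notion of a tradeoff concrete, the sketch below filters a handful of candidate models down to the non-dominated ones (the Pareto front) for two objectives: accuracy (higher is better) and latency (lower is better). The candidate names and numbers are made up for illustration, and this is plain Python, not Ax code.

```python
from typing import NamedTuple


class Candidate(NamedTuple):
    name: str
    accuracy: float    # higher is better
    latency_ms: float  # lower is better


def dominates(a: Candidate, b: Candidate) -> bool:
    """True if `a` is no worse than `b` on both metrics and strictly better on one."""
    no_worse = a.accuracy >= b.accuracy and a.latency_ms <= b.latency_ms
    strictly_better = a.accuracy > b.accuracy or a.latency_ms < b.latency_ms
    return no_worse and strictly_better


def pareto_front(candidates: list[Candidate]) -> list[Candidate]:
    """Keep only the candidates that no other candidate dominates."""
    return [c for c in candidates if not any(dominates(o, c) for o in candidates)]


# Made-up numbers, for illustration only.
candidates = [
    Candidate("large", 0.98, 40.0),
    Candidate("medium", 0.97, 15.0),
    Candidate("small", 0.93, 5.0),
    Candidate("wasteful", 0.95, 30.0),  # dominated by "medium"
]
front = pareto_front(candidates)
print([c.name for c in front])
```

Every model on the front is a defensible choice; which one to deploy depends on how much accuracy one is willing to give up for lower latency.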

Meta successfully explores this trade-off using multi-objective Bayesian NAS in Ax, and the method is already routinely used to optimize ML models on AR/VR devices.

In addition to NAS applications, Meta has also developed MORBO, a method for high-dimensional multi-objective optimization of AR optical systems.

Multi-objective Bayesian NAS in Ax:

Using Ax to implement fully automated multi-objective NAS

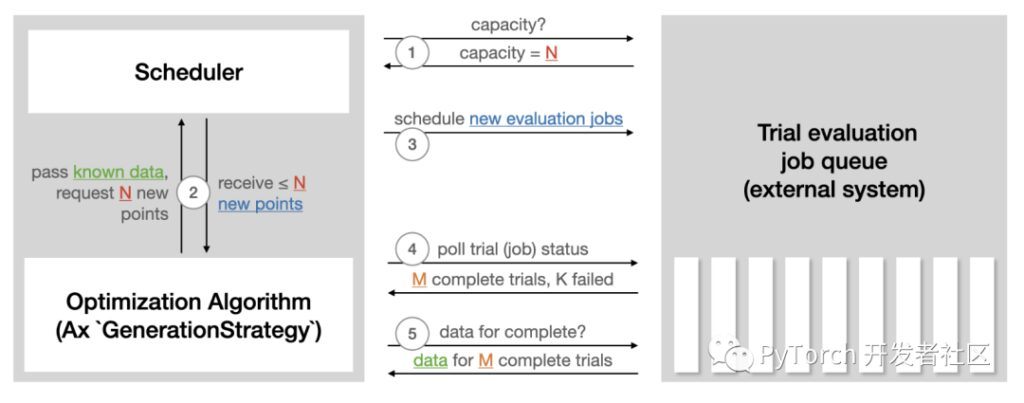

Ax's Scheduler allows running experiments asynchronously in a closed-loop manner. It continuously deploys trials to an external system, polls for results, uses the acquired data to generate more trials, and repeats this process until a stopping condition is met. No human intervention or supervision is required. The features of the Scheduler are:

- Parallelism, fault tolerance, and many other settings can be customized.

- Large selection of state-of-the-art optimization algorithms.

- Saving in-progress experiments (to a SQL database or JSON) and resuming experiments from storage.

- Easily extendable to new backends for running trial evaluations remotely.
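The closed loop described above can be sketched in a few lines of plain Python. This is a toy, not the Ax Scheduler: `ToyJob`, `generate_candidate`, and `evaluate` are hypothetical stand-ins, random search replaces Bayesian optimization, and the objective values are made up.

```python
import random
from dataclasses import dataclass


@dataclass
class ToyJob:
    """Stand-in for a remote training job; it finishes after a few polls."""
    params: dict
    polls_left: int

    def poll(self) -> bool:
        self.polls_left -= 1
        return self.polls_left <= 0


def generate_candidate(rng, history):
    """Placeholder for the optimizer: random search, ignoring the history."""
    return {"hidden_size": rng.choice([16, 32, 64, 128])}


def evaluate(params):
    """Made-up objective values; a real job would train a model."""
    h = params["hidden_size"]
    return {"val_acc": 1.0 - 1.0 / h, "num_params": 784 * h + h + h * 10 + 10}


def run_closed_loop(total_trials=8, max_parallel=3, seed=0):
    rng = random.Random(seed)
    running, history = [], []
    launched = 0
    while len(history) < total_trials:  # stopping condition: trial budget used up
        # 1. Deploy new trials while capacity and budget remain.
        while len(running) < max_parallel and launched < total_trials:
            params = generate_candidate(rng, history)
            running.append(ToyJob(params, polls_left=rng.randint(1, 3)))
            launched += 1
        # 2. Poll running trials and collect results from the finished ones.
        still_running = []
        for job in running:
            if job.poll():
                history.append((job.params, evaluate(job.params)))
            else:
                still_running.append(job)
        running = still_running
    return history


history = run_closed_loop()
print(len(history), "trials completed")
```

In a real setup, the candidate generator would condition on `history`, which is what makes the loop sample-efficient.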

The illustration from the Ax Scheduler tutorial summarizes how the Scheduler interacts with any external system used to run trial evaluations.

Ax Scheduler tutorial:

https://ax.dev/tutorials/scheduler.html

To run automatic NAS using Scheduler, the following preparations are required:

- Define a Runner, which is responsible for sending a model with a specific architecture to be trained on a platform of our choice (such as Kubernetes, or simply a Docker image on a local machine). The following tutorial uses TorchX to handle deployment of the training jobs.

- Define a Metric, which is responsible for fetching the objective metrics (such as accuracy, model size, latency) from the training job. The following tutorial uses TensorBoard to log data, so the TensorBoard metrics bundled with Ax can be used.
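The division of labor between the two pieces can be illustrated with a minimal local sketch. It assumes a hypothetical `LocalRunner` that launches each trial as a subprocess and a hypothetical `JsonFileMetric` that reads results from a JSON file; neither is part of Ax or TorchX, and the "training" script only writes made-up numbers.

```python
import json
import subprocess
import sys
import tempfile
from pathlib import Path

# Stand-in training script: it trains nothing and just writes made-up metrics.
TRAIN_SCRIPT = """
import json, sys
hidden, out_path = int(sys.argv[1]), sys.argv[2]
metrics = {
    "val_acc": 1.0 - 1.0 / hidden,
    "num_params": 784 * hidden + hidden + hidden * 10 + 10,
}
with open(out_path, "w") as f:
    json.dump(metrics, f)
"""


class LocalRunner:
    """Hypothetical runner: launches one local subprocess per trial."""

    def __init__(self, log_dir: Path):
        self.log_dir = log_dir

    def run(self, trial_index: int, params: dict) -> subprocess.Popen:
        out_path = self.log_dir / f"trial_{trial_index}.json"
        cmd = [sys.executable, "-c", TRAIN_SCRIPT,
               str(params["hidden_size"]), str(out_path)]
        return subprocess.Popen(cmd)


class JsonFileMetric:
    """Hypothetical metric: reads one named value from a trial's result file."""

    def __init__(self, name: str, log_dir: Path):
        self.name = name
        self.log_dir = log_dir

    def fetch(self, trial_index: int) -> float:
        with open(self.log_dir / f"trial_{trial_index}.json") as f:
            return json.load(f)[self.name]


log_dir = Path(tempfile.mkdtemp())
runner = LocalRunner(log_dir)
job = runner.run(trial_index=0, params={"hidden_size": 64})
job.wait()  # a scheduler would poll the job instead of blocking

val_acc = JsonFileMetric("val_acc", log_dir).fetch(0)
num_params = JsonFileMetric("num_params", log_dir).fetch(0)
print(val_acc, num_params)
```

The runner knows how to start a job but nothing about results, and the metric knows where results live but nothing about deployment, so either side can be swapped independently.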

Tutorial

The tutorial shows how to use Ax to run multi-objective NAS for a simple neural network model on the popular MNIST dataset.

While the basic methodology can be used for more complex models and larger datasets, this example was chosen because it can be run on a laptop and completed end-to-end in under an hour.

In this example, we tune the widths of the two hidden layers, the learning rate, the dropout probability, the batch size, and the number of training epochs. The goal is to use multi-objective Bayesian optimization to trade off performance (accuracy on the validation set) against model size (number of model parameters).
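As a rough sketch of what such a search space looks like, the snippet below describes the six tunable parameters as a plain dictionary, samples one random configuration, and computes the model-size objective. The ranges are illustrative assumptions rather than the tutorial's exact bounds, the dictionary format is not Ax's API, and the parameter count assumes a fully connected network on flattened 28x28 images with 10 output classes.

```python
import math
import random

# Illustrative ranges only; the tutorial's actual bounds may differ.
SEARCH_SPACE = {
    "hidden_size_1": ("int_log", 16, 128),
    "hidden_size_2": ("int_log", 16, 128),
    "learning_rate": ("float_log", 1e-4, 1e-2),
    "dropout": ("float", 0.0, 0.5),
    "batch_size": ("choice", [32, 64, 128]),
    "epochs": ("int", 1, 4),
}


def sample(space, rng):
    """Draw one random configuration from the search space."""
    config = {}
    for name, spec in space.items():
        kind = spec[0]
        if kind == "choice":
            config[name] = rng.choice(spec[1])
        elif kind == "int":
            config[name] = rng.randint(spec[1], spec[2])
        elif kind == "float":
            config[name] = rng.uniform(spec[1], spec[2])
        else:  # log-scaled: sample uniformly in log space
            value = math.exp(rng.uniform(math.log(spec[1]), math.log(spec[2])))
            config[name] = round(value) if kind == "int_log" else value
    return config


def count_parameters(hidden_size_1, hidden_size_2, n_inputs=28 * 28, n_classes=10):
    """Weights plus biases of a dense net: inputs -> h1 -> h2 -> classes."""
    return (
        (n_inputs * hidden_size_1 + hidden_size_1)
        + (hidden_size_1 * hidden_size_2 + hidden_size_2)
        + (hidden_size_2 * n_classes + n_classes)
    )


config = sample(SEARCH_SPACE, random.Random(0))
print(config)
print(count_parameters(config["hidden_size_1"], config["hidden_size_2"]))
```

Only the two hidden-layer widths affect the parameter count, whereas validation accuracy depends on all six parameters and on the randomness of training.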

This tutorial uses the following PyTorch libraries:

- PyTorch Lightning (for specifying the model and training loop)

https://github.com/Lightning-AI/lightning

- TorchX (for running training jobs remotely/asynchronously)

https://github.com/pytorch/torchx

- BoTorch (the Bayesian optimization library that powers Ax's algorithms)

https://github.com/pytorch/botorch

For a complete runnable example, see:

https://pytorch.org/tutorials/intermediate/ax_multiobjective_nas_tutorial.html

Results

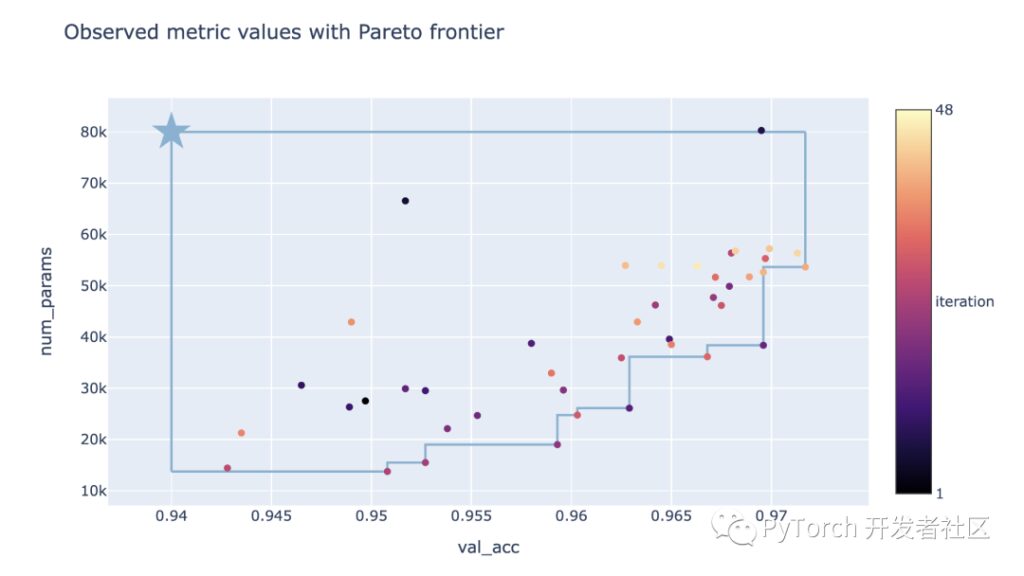

The final result of the NAS optimization performed in the tutorial is shown in the tradeoff plot. Each point corresponds to the result of one trial, the color represents its iteration number, and the star marks the reference point defined by the thresholds imposed on the objectives.

The plot shows that the method successfully explores the tradeoff between validation accuracy and the number of parameters. It finds both large models with high validation accuracy and small models with comparatively lower validation accuracy.

Based on performance requirements and model size constraints, decision makers can now choose which model to use or further analyze.
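One common way to summarize such a tradeoff plot in a single number is the hypervolume: the area dominated by the observed points relative to the reference point. The sketch below computes it for two objectives in plain Python. The trial values and thresholds are made up, and this is a simple sweep for illustration, not the routine Ax or BoTorch use internally.

```python
def hypervolume_2d(points, ref_acc, ref_params):
    """Area dominated by `points` relative to the reference point.

    Each point is (val_acc, num_params); accuracy is maximized, size minimized.
    Points that do not beat the reference on both objectives contribute nothing.
    """
    feasible = [(a, p) for a, p in points if a > ref_acc and p < ref_params]
    # Sweep from the most accurate point downwards. Each point adds a rectangle
    # for the slice of the size axis that more accurate points did not cover.
    feasible.sort(key=lambda ap: (-ap[0], ap[1]))
    area, best_params = 0.0, ref_params
    for acc, params in feasible:
        if params < best_params:
            area += (acc - ref_acc) * (best_params - params)
            best_params = params
    return area


# Made-up trial results: (validation accuracy, number of parameters).
trials = [
    (0.98, 100_000),
    (0.96, 40_000),
    (0.95, 60_000),  # dominated by (0.96, 40_000)
    (0.90, 20_000),
    (0.80, 10_000),  # below the accuracy threshold
]
hv = hypervolume_2d(trials, ref_acc=0.85, ref_params=120_000)
print(hv)
```

A larger hypervolume means the trials cover more of the region that satisfies both thresholds, so it can be tracked over iterations to see whether the search is still improving.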

Visualization

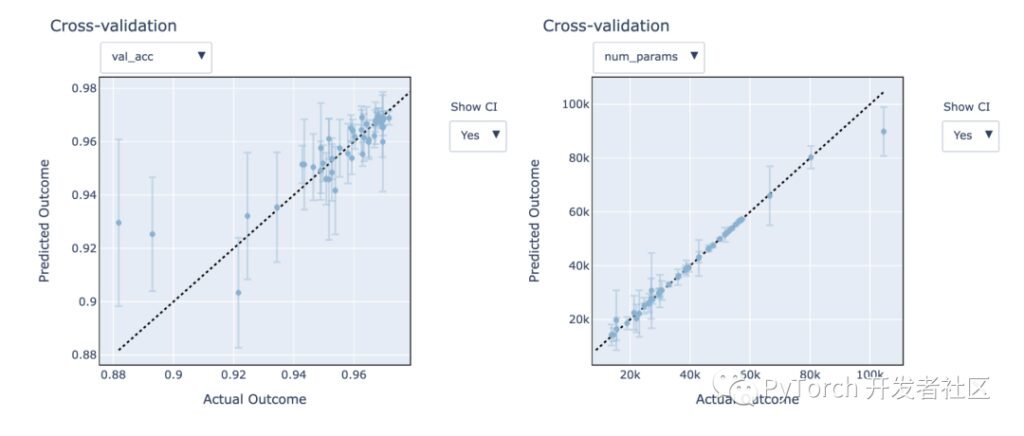

Ax provides several visualization tools to help analyze and understand experimental results. Here we focus on the performance of the Gaussian process models that model the unknown objectives, which can be used to help discover promising configurations more quickly.

Ax helps to better understand the accuracy of these models and their performance on unseen data through leave-one-out cross-validation.

In the figures, we can see that the model fits quite well: the predictions are close to the actual results, and the predicted 95% confidence intervals cover the actual results well.

In addition, it can be seen that the model size metric (num_params) is easier to model than the validation accuracy metric (val_acc).
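The idea behind leave-one-out cross-validation is to predict each observation from a model fitted on all the others, then compare predictions with actual values. The sketch below shows the procedure with ordinary least squares standing in for the Gaussian process, an intentional simplification to keep the example dependency-free. The data is made up: a one-hidden-layer network whose size is exactly linear in its width.

```python
def fit_line(xs, ys):
    """Ordinary least squares for y = slope * x + intercept."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


def leave_one_out_predictions(xs, ys):
    """Predict each point from a model fitted on all the other points."""
    predictions = []
    for i in range(len(xs)):
        train_x = xs[:i] + xs[i + 1:]
        train_y = ys[:i] + ys[i + 1:]
        slope, intercept = fit_line(train_x, train_y)
        predictions.append(slope * xs[i] + intercept)
    return predictions


# Made-up data: model size grows linearly with hidden width in this toy case.
widths = [16, 32, 48, 64, 96, 128]
num_params = [795 * w + 10 for w in widths]
predicted = leave_one_out_predictions(widths, num_params)
for actual, pred in zip(num_params, predicted):
    print(f"actual={actual:>7}  predicted={pred:10.1f}")
```

Because the size metric is a deterministic function of the architecture, held-out predictions match closely; a noisy metric such as validation accuracy would scatter more around the diagonal.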

Summary of Ax

- The tutorial shows how to run a fully automated multi-objective neural architecture search using Ax.

- The Ax Scheduler runs the optimization automatically in a fully asynchronous manner, either locally or by deploying trials remotely to a cluster (just change the TorchX scheduler configuration).

- The state-of-the-art multi-objective Bayesian optimization algorithms provided in Ax help effectively explore the tradeoff between validation accuracy and model size.

Advanced Features

Ax has some other advanced features that are not discussed in the above tutorial. These include the following:

Early Stopping

When evaluating a new candidate configuration, partial learning curves are often available while the NN training job is running.

The information contained in the partial curves can be used to identify poorly performing trials so that they can be stopped early, freeing up computational resources for more promising candidates. Although not demonstrated in the above tutorial, Ax supports Early Stopping out of the box.

https://ax.dev/versions/latest/tutorials/early_stopping/early_stopping.html
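One simple way to act on partial learning curves is a percentile rule: stop a trial if its latest value falls below a chosen percentile of what other trials had achieved at the same step. The sketch below is a minimal plain-Python version of that idea. The function name, its parameters, and the curves are illustrative assumptions, and it is not the implementation Ax ships.

```python
import math


def should_stop_early(curve, other_curves, percentile=50.0, min_progression=2):
    """Decide whether to stop a running trial (higher metric values are better).

    The trial is stopped if its latest value is below the given percentile of
    the values that other trials had reached at the same step.
    """
    step = len(curve) - 1
    if len(curve) < min_progression:
        return False  # too little data to judge
    peers = sorted(c[step] for c in other_curves if len(c) > step)
    if not peers:
        return False  # nothing to compare against
    # Nearest-rank percentile of the peer values at this step.
    rank = max(1, math.ceil(percentile / 100.0 * len(peers)))
    threshold = peers[rank - 1]
    return curve[-1] < threshold


# Made-up validation accuracy curves, one value per epoch.
finished = [
    [0.80, 0.90, 0.95],
    [0.70, 0.85, 0.92],
    [0.60, 0.75, 0.85],
]
print(should_stop_early([0.50, 0.60], finished))  # lagging trial
print(should_stop_early([0.82, 0.91], finished))  # promising trial
```

The `min_progression` guard keeps the rule from stopping trials on their first, often noisy, measurement.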

High-dimensional search space

The tutorial uses Bayesian optimization with a standard Gaussian process to keep the running time low.

However, these models typically only scale to around 10-20 tunable parameters. The new SAASBO method is very sample-efficient and can tune hundreds of parameters. SAASBO can be easily enabled by passing use_saasbo=True to choose_generation_strategy.

This concludes the introduction to the TorchX and Ax integration. The PyTorch developer community will continue to focus on using graph transformations to optimize the performance of production PyTorch models.
