Tutorial Details | Using PaddleOCR to Detect and Identify Container Numbers

Content at a glance: Container number detection based on PaddleOCR shortens the time for recording container numbers and improves port loading and unloading efficiency.

Keywords: PaddleOCR text recognition online tutorial

According to data released in March this year by Alphaliner, an international shipping consulting and analysis agency, Shanghai Port topped the list of the top 30 ports by container throughput in 2021 with a "report card" of 47.025 million TEUs.

The world's top 100 container ports handled a combined container throughput of 676 million TEUs in 2021. Such a large number of containers has increased the pressure on container number identification. The traditional method of reading and recording container numbers by hand is costly, inefficient, and tied to outdated working conditions.

As the economy and society develop, introducing artificial intelligence into port operations has become key to transforming and upgrading traditional ports amid market competition.

This article walks through the process from environment preparation to model training and demonstrates how to use PaddleOCR to detect and recognize container numbers.

Directly view the code tutorial:

https://openbayes.com/console/open-tutorials/containers/XJsxhLTnKNu

Detecting and recognizing container numbers with a small amount of data

A container number is the number of the container used to ship export goods, and it is a required field on the consignment note. Standard container numbers follow the ISO 6346 (1995) standard and consist of 11 characters. Taking the container number CBHU 123456 7 as an example, it consists of 3 parts:

The first part consists of 4 English letters. The first three letters identify the owner and operator of the container, and the fourth letter indicates the container type. Here, CBHU denotes a standard container owned and operated by COSCO.

The second part consists of 6 digits. It is the container registration code, which uniquely identifies the container.

The third part is the check digit, which is calculated from the preceding 4 letters and 6 digits according to the standard's verification rules and is used to detect errors in a recorded number.
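The check digit rule can be sketched in a few lines of Python. This is a minimal illustration of the ISO 6346 calculation as it is commonly described, not code from the tutorial: letters map to values starting at 10 and skipping multiples of 11, each of the first ten characters is weighted by a power of two, and the sum is reduced modulo 11 (a result of 10 is written as 0). The function names and the sample number CSQU3054383, a commonly cited valid example, are assumptions introduced here.

```python
import string


def letter_values():
    """Map A-Z to ISO 6346 values: start at 10 and skip multiples of 11."""
    values, value = {}, 10
    for letter in string.ascii_uppercase:
        if value % 11 == 0:
            value += 1
        values[letter] = value
        value += 1
    return values


LETTER_VALUES = letter_values()


def check_digit(first_ten):
    """Compute the check digit from the 4 letters and 6 digits."""
    total = 0
    for position, char in enumerate(first_ten):
        value = LETTER_VALUES[char] if char.isalpha() else int(char)
        total += value * 2 ** position  # weights 1, 2, 4, ..., 512
    return total % 11 % 10  # a remainder of 10 is written as 0


def is_valid(container_number):
    """Return True if an 11-character container number passes the check."""
    compact = container_number.replace(" ", "").upper()
    if len(compact) != 11 or not compact.isascii():
        return False
    if not compact[:4].isalpha() or not compact[4:].isdigit():
        return False
    return check_digit(compact[:10]) == int(compact[10])


print(is_valid("CSQU3054383"))
```

A check like this could be applied to recognized text as a final sanity filter, since a single misread character usually changes the expected check digit.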

This tutorial uses PaddleOCR for container number detection and recognition. A small amount of data is used to train the detection model and the recognition model separately, and the two are then connected in series to detect and recognize container numbers.

Environment Preparation

- Start a "model training" container in the OpenBayes console. Select PaddlePaddle 2.3 as the environment and RTX 3090 or another GPU type as the resource.

If you do not have a platform account, please visit the following address to register first: https://openbayes.com/console/signup

- Open a Terminal window in Jupyter, then execute the following commands:

cd PaddleOCR-release-2.5  # Enter the PaddleOCR-release-2.5 folder
pip install -r requirements.txt  # Install the dependencies PaddleOCR requires
python setup.py install  # Install PaddleOCR

Dataset Introduction

This tutorial uses the Container Number-OCR Dataset, which contains 3003 container images with a resolution of 1920×1080.

To view the dataset details, please visit:

https://openbayes.com/console/open-tutorials/datasets/BzuGVEOJv2T/3

- Annotations for training the PaddleOCR detection model use the following format, with the two fields separated by "\t":

" image file name json.dumps-encoded image annotation"

ch4_test_images/img_61.jpg [{"transcription": "MASA", "points": [[310, 104], [416, 141], [418, 216], [312, 179]]}, {...}]

Before json.dumps encoding, the image annotation is a list containing multiple dictionaries. In each dictionary, points holds the (x, y) coordinates of the four corners of the text box, listed clockwise starting from the top-left corner.

transcription represents the text in the current text box. When its content is "###", it means that the text box is invalid and will be skipped during training.
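To make the format concrete, the following minimal sketch reads one detection label line back into Python objects and drops the boxes marked "###". The function name parse_det_label and the second box in the sample are hypothetical and only serve the illustration.

```python
import json


def parse_det_label(line):
    """Split one detection label line into (image_path, valid_boxes)."""
    image_path, encoded = line.rstrip("\n").split("\t", 1)
    boxes = json.loads(encoded)
    # Boxes transcribed as "###" are invalid and are skipped during training.
    valid = [box for box in boxes if box["transcription"] != "###"]
    return image_path, valid


# Sample line: the first box comes from the example above, the second is made up.
sample = "ch4_test_images/img_61.jpg\t" + json.dumps([
    {"transcription": "MASA",
     "points": [[310, 104], [416, 141], [418, 216], [312, 179]]},
    {"transcription": "###",
     "points": [[0, 0], [10, 0], [10, 10], [0, 10]]},
])

path, boxes = parse_det_label(sample)
print(path, [box["transcription"] for box in boxes])
```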

- Annotations for training the PaddleOCR recognition model use the following format, with the two fields separated by "\t":

" image file name image annotation "

train_data/rec/train/word_001.jpg 简单可依赖

train_data/rec/train/word_002.jpg 用科技让复杂的世界更简单

## Data collation

3.1 Data preparation required for detection model

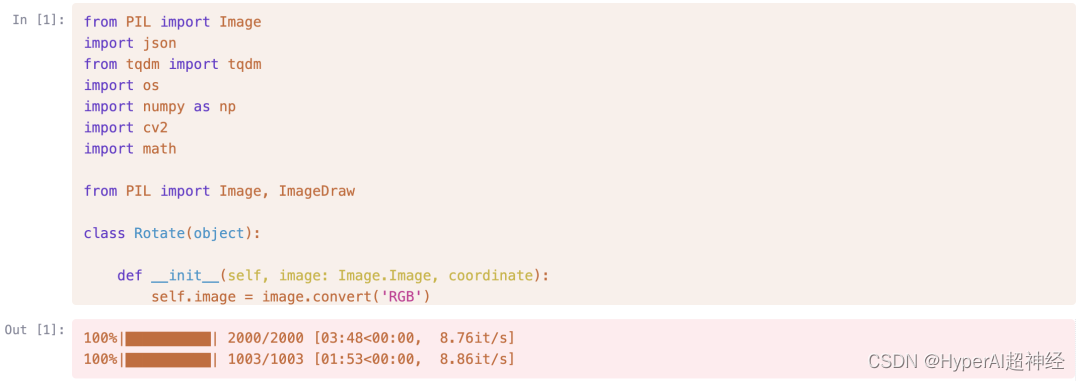

Split the images in the dataset into training and validation sets at a ratio of roughly 2:1 (the first 2,000 label lines go to training and the rest to validation) by running the following code:

from tqdm import tqdm

filename = "all_label.txt"

# Read every annotation line from the full label file.
with open(filename) as f:
    lines = f.readlines()

# The first 2,000 lines become training labels; the rest become validation labels.
with open("det_train_label.txt", "w") as t, open("det_eval_label.txt", "w") as v:
    for count, line in enumerate(tqdm(lines)):
        if count < 2000:
            t.write(line)
        else:
            v.write(line)

3.2 Data preparation required for recognition model

Using the annotations from the detection part, crop each image so that only the text region remains, and use the cropped images as the recognition data.

For the complete code, see: https://openbayes.com/console/open-tutorials/containers/XJsxhLTnKNu
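As a rough sketch of the idea, and not the tutorial's actual code, the cropping step can be reduced to two pieces: turning the four annotated corner points into an axis-aligned box, and pairing each crop with its transcription for the recognition label file. The function names, the crop naming scheme, and the sample line below are assumptions; RecTrainData is the folder name used later in the recognition configuration.

```python
import json


def bounding_box(points):
    """Axis-aligned (left, top, right, bottom) box enclosing the corner points."""
    xs = [x for x, _ in points]
    ys = [y for _, y in points]
    return min(xs), min(ys), max(xs), max(ys)


def rec_samples(det_line, crop_dir="RecTrainData"):
    """Yield (crop_path, box, transcription) for each valid box on one label line."""
    image_path, encoded = det_line.rstrip("\n").split("\t", 1)
    stem = image_path.rsplit("/", 1)[-1].rsplit(".", 1)[0]
    for index, item in enumerate(json.loads(encoded)):
        if item["transcription"] == "###":  # invalid box, nothing to recognize
            continue
        crop_path = f"{crop_dir}/{stem}_{index}.jpg"
        yield crop_path, bounding_box(item["points"]), item["transcription"]


# Hypothetical detection label line for illustration.
line = "images/0001.jpg\t" + json.dumps([
    {"transcription": "CBHU1234567",
     "points": [[100, 50], [400, 60], [398, 120], [98, 110]]},
])

samples = list(rec_samples(line))
for crop_path, box, text in samples:
    print(f"{crop_path}\t{text}", box)
```

With an imaging library such as Pillow installed, each box could be passed to Image.crop, the result saved to crop_path, and the printed "path\ttext" pair written to the recognition label file.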

## Experiment

Because the dataset is relatively small, the PP-OCRv3 model in PaddleOCR is used for both detection and recognition so that the model converges better and faster.

PP-OCRv3 builds on PP-OCRv2. Compared with PP-OCRv2, its end-to-end Hmean in Chinese scenarios is improved by 5%, and the end-to-end performance of the English and digit model is improved by 11%.

Please refer to the PP-OCRv3 technical report for details of the optimizations.
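For readers unfamiliar with the metric, Hmean is the harmonic mean of precision and recall. The short sketch below computes it; the two input values are the precision and recall that appear in the detection training log later in this article, and the function name is chosen here for illustration.

```python
def hmean(precision, recall):
    """Harmonic mean of precision and recall (0.0 when both are zero)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# Precision and recall taken from the detection training log in this article.
precision = 0.9577836411609498
recall = 0.981611681990265

score = hmean(precision, recall)
print(f"hmean = {score:.4f}")
```

Because it is a harmonic mean, the score stays low unless both precision and recall are high, which is why it is a convenient single number for comparing detection models.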

4.1 Detection Model

4.1.1 Detection model configuration

PaddleOCR provides many detection models. The models and their configuration files can be found under PaddleOCR-release-2.5/configs/det.

If we choose the model ch_PP-OCRv3_det_student, its configuration file path is: PaddleOCR-release-2.5/configs/det/ch_PP-OCRv3/ch_PP-OCRv3_det_student.yml

Before use, a few settings need to be adjusted, such as the training parameters and dataset paths. Some key configurations are shown below:

# Key training parameters
use_gpu: true  # Whether to use the GPU
epoch_num: 1200  # Number of training epochs
save_model_dir: ./output/ch_PP-OCR_V3_det/  # Model save path
save_epoch_step: 200  # Save the model every 200 epochs
eval_batch_step: [0, 100]  # Run validation every 100 training iterations
pretrained_model: ./PaddleOCR-release-2.5/pretrain_models/ch_PP-OCR_V3_det/best_accuracy.pdparams  # Pretrained model path

# Training set paths
Train:
  dataset:
    name: SimpleDataSet
    data_dir: /input0/images  # Image folder path
    label_file_list:
      - ./det_train_label.txt  # Label file path

4.1.2 Model Fine-tuning

Run the following command in the notebook to fine-tune the model, where -c passes in the configured model file path:

%run PaddleOCR-release-2.5/tools/train.py \
    -c PaddleOCR-release-2.5/configs/det/ch_PP-OCRv3/ch_PP-OCRv3_det_student.yml

With the default hyperparameters, the ch_PP-OCRv3_det_student model reached an hmean of 96.96% on the validation set after 385 epochs of training on the training set, with no significant improvement thereafter:

[2022/10/11 06:36:09] ppocr INFO: best metric, hmean: 0.969551282051282, precision: 0.9577836411609498,
recall: 0.981611681990265, fps: 20.347745459258228, best_epoch: 385

4.2 Recognition Model

4.2.1 Recognition model configuration

PaddleOCR provides many recognition models. The models and their configuration files can be found under PaddleOCR-release-2.5/configs/rec.

If we choose the model ch_PP-OCRv3_rec_distillation, its configuration file path is: PaddleOCR-release-2.5/configs/rec/PP-OCRv3/ch_PP-OCRv3_rec_distillation.yml

Before use, a few settings need to be adjusted, such as the training parameters and dataset paths. Some key configurations are shown below:

# Key training parameters
use_gpu: true  # Whether to use the GPU
epoch_num: 1200  # Number of training epochs
save_model_dir: ./output/rec_ppocr_v3_distillation  # Model save path
save_epoch_step: 200  # Save the model every 200 epochs
eval_batch_step: [0, 100]  # Run validation every 100 training iterations
pretrained_model: ./PaddleOCR-release-2.5/pretrain_models/PPOCRv3/best_accuracy.pdparams  # Pretrained model path

# Training set paths
Train:
  dataset:
    name: SimpleDataSet
    data_dir: ./RecTrainData/  # Image folder path
    label_file_list:
      - ./rec_train_label.txt  # Label file path

4.2.2 Model Fine-tuning

Run the following command in the notebook to fine-tune the model, where -c passes in the configured model file path:

%run PaddleOCR-release-2.5/tools/train.py \
    -c PaddleOCR-release-2.5/configs/rec/PP-OCRv3/ch_PP-OCRv3_rec_distillation.yml

With the default hyperparameters, the ch_PP-OCRv3_rec_distillation model reached an accuracy of 96.11% on the validation set after 136 epochs of training on the training set, with no significant improvement thereafter:

[2022/10/11 20:04:28] ppocr INFO: best metric, acc: 0.9610600272522444, norm_edit_dis: 0.9927426548965615,
Teacher_acc: 0.9540291998159589, Teacher_norm_edit_dis: 0.9905629345025616, fps: 246.029195787707, best_epoch: 136

Results

5.1 Detection Model Inference

Run the following command in the notebook to use the fine-tuned model to detect text in the test image:

- Global.infer_img is the image path or image folder path

- Global.pretrained_model is the fine-tuned model

- Global.save_res_path is the path to save the inference results

%run PaddleOCR-release-2.5/tools/infer_det.py \
    -c PaddleOCR-release-2.5/configs/det/ch_PP-OCRv3/ch_PP-OCRv3_det_student.yml \
    -o Global.infer_img="/input0/images" Global.pretrained_model="./output/ch_PP-OCR_V3_det/best_accuracy" Global.save_res_path="./output/det_infer_res/predicts.txt"

5.2 Recognition Model Inference

Run the following command in the notebook to use the fine-tuned model to recognize text in the test images:

- Global.infer_img is the image path or image folder path

- Global.pretrained_model is the fine-tuned model

- Global.save_res_path is the path to save the inference results

%run PaddleOCR-release-2.5/tools/infer_rec.py \
    -c PaddleOCR-release-2.5/configs/rec/PP-OCRv3/ch_PP-OCRv3_rec_distillation.yml \
    -o Global.infer_img="./RecEvalData/" Global.pretrained_model="./output/rec_ppocr_v3_distillation/best_accuracy" Global.save_res_path="./output/rec_infer_res/predicts.txt"

5.3 Detection and Recognition Model Serial Inference

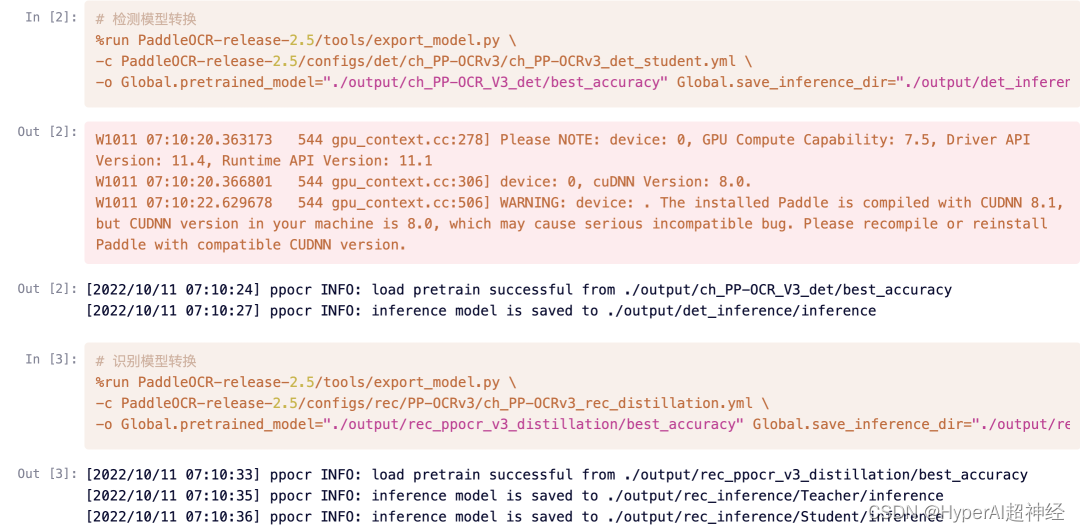

5.3.1 Model Conversion

Before running the two models in series, the trained and saved detection and recognition models must each be converted into an inference model. The conversion command takes the following arguments:

- -c is the path to the configuration file of the model to be converted

- -o Global.pretrained_model is the model file to be converted

- Global.save_inference_dir is the directory where the converted inference model is stored
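A hedged sketch of what the conversion step might look like: PaddleOCR ships an export script at tools/export_model.py that accepts these arguments. The Python snippet below only assembles the two command lines and prints them. The helper name export_command is hypothetical, and the output directories are assumptions inferred from the det_model_dir and rec_model_dir paths used in the serial inference command later in this section.

```python
import shlex


def export_command(config, pretrained_model, save_inference_dir,
                   script="PaddleOCR-release-2.5/tools/export_model.py"):
    """Build the argument list for converting one trained model."""
    return [
        "python", script,
        "-c", config,
        "-o",
        f"Global.pretrained_model={pretrained_model}",
        f"Global.save_inference_dir={save_inference_dir}",
    ]


det_cmd = export_command(
    "PaddleOCR-release-2.5/configs/det/ch_PP-OCRv3/ch_PP-OCRv3_det_student.yml",
    "./output/ch_PP-OCR_V3_det/best_accuracy",
    "./output/det_inference/",  # assumed output directory
)
rec_cmd = export_command(
    "PaddleOCR-release-2.5/configs/rec/PP-OCRv3/ch_PP-OCRv3_rec_distillation.yml",
    "./output/rec_ppocr_v3_distillation/best_accuracy",
    "./output/rec_inference/",  # assumed output directory
)

for cmd in (det_cmd, rec_cmd):
    print(shlex.join(cmd))
```

Each list can be passed to subprocess.run(cmd, check=True), or the printed line can be pasted into a terminal. Building the command as a list avoids quoting problems if a path ever contains spaces.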

5.3.2 Model Cascading Inference

Once the conversion is complete, PaddleOCR's detection and recognition model concatenation tool can connect any trained detection model with any trained recognition model to form a two-stage text recognition system.

The input image goes through four main stages: text detection, detection frame correction, text recognition, and score filtering to output the text location and recognition results.
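The last of these stages, score filtering, is simple enough to sketch. The snippet below is an illustration under stated assumptions, not PaddleOCR's implementation: each result is modeled as a (box, text, score) tuple, the 0.5 threshold is an assumed value, and the sample results are made up.

```python
def filter_by_score(results, drop_score=0.5):
    """Keep (box, text, score) results whose score is at least drop_score."""
    return [result for result in results if result[2] >= drop_score]


# Hypothetical recognition results: one confident read and one spurious one.
results = [
    ([[98, 50], [400, 50], [400, 120], [98, 120]], "CBHU1234567", 0.97),
    ([[500, 300], [560, 300], [560, 330], [500, 330]], "I1", 0.31),
]

kept = filter_by_score(results)
for box, text, score in kept:
    print(text, score, box)
```

Filtering on the recognition score removes low-confidence reads, such as scratches or partial markings picked up by the detector, before the text location and recognition results are output.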

The command is shown below, where:

- image_dir is the path to a single image or a folder of images

- det_model_dir is the path to the detection inference model

- rec_model_dir is the path to the recognition inference model

The visualized recognition results are saved in the ./inference_results folder by default.

%run PaddleOCR-release-2.5/tools/infer/predict_system.py \
    --image_dir="OCRTest" \
    --det_model_dir="./output/det_inference/" \
    --rec_model_dir="./output/rec_inference/Student/"

To view the full tutorial, visit:

https://openbayes.com/console/open-tutorials/containers/XJsxhLTnKNu

About PaddleOCR and OpenBayes

PaddleOCR is an OCR tool library based on Baidu PaddlePaddle. It includes an ultra-lightweight Chinese OCR with a total model size of only 8.6M, and it supports training multiple text detection and text recognition algorithms as well as service deployment and on-device deployment.

For more information, please visit:

https://github.com/PaddlePaddle/PaddleOCR

Understanding OpenBayes

OpenBayes is a leading machine intelligence research institution in China. It provides a number of basic services related to AI development, including computing power containers, automatic modeling, and automatic parameter tuning.

OpenBayes has also launched many mainstream public resources, such as datasets, tutorials, and models, so that developers can quickly learn and build the machine learning models they need.

Visit openbayes.com and register now to enjoy 600 minutes/week of RTX 3090 and 300 minutes/week of free CPU computing time.

Note: The free weekly resources are credited every Monday afternoon.

