Young people with basic scientific literacy can tell that these headlines are rumors or exaggerations without even clicking on them, yet such articles circulate widely every day in the WeChat groups and Moments feeds of our parents' generation.

Online, it is hard to tell where a piece of news came from and whether it is true, and this is especially hard for our parents. When an article invokes complex science or obscure research institutions, or adds a dose of patriotic or emotional appeal, they are more likely to be taken in by the rumor and may even help spread it.

Many platforms are trying every means to build rumor-debunking mechanisms. So far these have relied mainly on user reports plus manual review, but manual review alone is a drop in the bucket.

The same world, the same rumors

The same problem exists in the United States. The Chinese term for these stories is usually translated into English as "rumor", but the English word actually just means hearsay, which may or may not be true. More rigorous news organizations may write "false rumor" to make clear that the hearsay is untrue.

Interestingly, when we compared which kinds of rumors spread most widely, we found that rumor-mongers around the world share a favorite: news about celebrity deaths.

Using AI to clean up the news

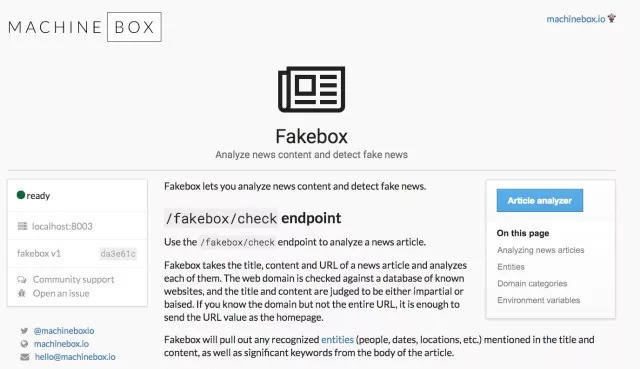

In the United States, an engineer named Aaron Edell has used AI to build a fake news detector called Fakebox. Its accuracy is fairly high, but it works on English text and does not carry over to Chinese-language content. It would also need considerable improvement before commercial use. Even so, his development process offers valuable lessons for anyone working on similar problems.

Building Fakebox was not all smooth sailing. The decisive turning point came when he stopped trying to detect fake news and started detecting real news instead: the truth tends to look alike, while falsehoods come in endless varieties.

Below is his own account of how he designed Fakebox:

First question: how do you define fake news?

The first difficulty I ran into was unexpected. After studying a number of fake news stories, I found that fake news is not entirely false. Some of it is exaggerated, and some of it is simply unverified. In fact, fake news falls into several types: outright false, half true and half false, pure pseudoscience, commentary passed off as news, and so on.

That meant each type of fake news would have to be identified and filtered out one by one.

First experiment: Solving the problem with a sentiment analysis model

At first I built a small tool that used a crawler to grab each article's title, description, author and body text and send them to a sentiment analysis model. I used Textbox, which returns results quickly and is very convenient. Textbox returns a score for each article: 5 or above counts as positive, below 5 as negative. I also wrote a small algorithm that scored the title, body, author and other fields separately and added the scores together, so that the final score reflected the article as a whole.
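The field-by-field scoring described above can be sketched roughly as follows. This is a minimal illustration, not Aaron Edell's actual tool. `score_fn` is a hypothetical stand-in for the Textbox call. Averaging the summed field scores before applying the 5-point midpoint is our own assumption, because the text does not say how the total was thresholded.

```python
from typing import Callable, Dict

# Fields the crawler pulls from each article, as described above.
FIELDS = ("title", "description", "author", "content")

# Midpoint from the text: 5 or above is positive, below 5 is negative.
POSITIVE_THRESHOLD = 5.0


def combined_sentiment(article: Dict[str, str],
                       score_fn: Callable[[str], float]) -> Dict[str, object]:
    """Score each field separately and add the scores together.

    score_fn is a hypothetical stand-in for the sentiment service
    (e.g. a request to Textbox), assumed to return a number on the
    same scale as the 5-point midpoint.
    """
    per_field = {field: score_fn(article.get(field, "")) for field in FIELDS}
    total = sum(per_field.values())
    # Assumption: compare the per-field average with the midpoint, so the
    # threshold does not change with the number of fields scored.
    average = total / len(FIELDS)
    return {"per_field": per_field, "total": total,
            "positive": average >= POSITIVE_THRESHOLD}


if __name__ == "__main__":
    # Toy scores standing in for real sentiment results.
    toy_scores = {"Title": 2.0, "Desc": 4.0, "Jane Doe": 6.0, "Body": 3.0}
    article = {"title": "Title", "description": "Desc",
               "author": "Jane Doe", "content": "Body"}
    result = combined_sentiment(article, lambda text: toy_scores.get(text, 5.0))
    print(result["total"], result["positive"])  # 15.0 False
```

Passing the scorer in as a function keeps the aggregation logic testable without a running sentiment service.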

It seemed to work at first, but by the seventh or eighth article I tested it was no longer giving correct results. Even so, it was a fairly close prototype of what I had imagined a rumor detection system would look like.

In the end, though, the experiment failed.

Second experiment: Solving the problem with an NLP model

My friend David Hernandez suggested training a model on the text itself. I looked at characteristics associated with fake news, such as the source website and the author's name, to see whether I could quickly assemble a dataset to train a model.

We spent a few days collecting many different datasets that looked useful for training. We thought we had enough data, but the labels were wrong from the start. Some websites marked as "fake" or "misleading" sometimes publish real articles or simply republish content from other sites, so the results were poor.
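The labeling problem is easier to see with a toy sketch. In this example, every article inherits its website's reputation, and the code measures how often that label disagrees with a manual check. The domain list and the sample data are hypothetical and do not come from the original project.

```python
from typing import List, Tuple

# Hypothetical domains flagged as "fake" or "misleading".
FLAGGED_DOMAINS = {"example-fake.test", "misleading-news.test"}


def label_by_source(domain: str) -> str:
    """Weak label: every article inherits its website's reputation."""
    return "fake" if domain in FLAGGED_DOMAINS else "real"


def label_disagreement(articles: List[Tuple[str, str]]) -> float:
    """Fraction of articles whose source-based label differs from a
    manually assigned one. Each item is (domain, manual_label)."""
    if not articles:
        return 0.0
    wrong = sum(label_by_source(domain) != manual for domain, manual in articles)
    return wrong / len(articles)


if __name__ == "__main__":
    sample = [
        ("example-fake.test", "fake"),
        ("example-fake.test", "real"),  # real story republished on a flagged site
        ("reliable.test", "real"),
        ("misleading-news.test", "fake"),
    ]
    print(label_disagreement(sample))  # 0.25
```

A model trained on such labels learns partly from the site's reputation instead of the article's content. The manual review described next is aimed at this problem.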

So I started reading every article myself and spent a long time cleaning the data. It was grueling. Seeing so much false, malicious and even violent news day after day made me question the culture the Internet has fostered, but it also strengthened my hope that better tools could help more people avoid being misled by rumors. Once I had manually reviewed the dataset, the model reached about 70% accuracy in testing.

However, this approach had a fatal flaw: when we spot-checked articles from outside the dataset, the model still could not reliably tell whether they were true.
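The spot check can be made concrete by measuring accuracy on the held-out test split and on articles from outside the dataset, then comparing the two. In this sketch, the keyword model and all four example headlines are toys invented for illustration. They only show how a model can score well on familiar data and poorly on new data.

```python
from typing import Callable, Sequence, Tuple

Example = Tuple[str, str]  # (article text, true label)


def accuracy(model: Callable[[str], str], examples: Sequence[Example]) -> float:
    """Share of examples where the model's label matches the true label."""
    if not examples:
        raise ValueError("need at least one example")
    correct = sum(model(text) == label for text, label in examples)
    return correct / len(examples)


def generalization_gap(model: Callable[[str], str],
                       test_split: Sequence[Example],
                       spot_check: Sequence[Example]) -> float:
    """Accuracy on the held-out split minus accuracy on outside articles."""
    return accuracy(model, test_split) - accuracy(model, spot_check)


if __name__ == "__main__":
    # Toy model: has only learned that "shocking" headlines are fake.
    def toy_model(text: str) -> str:
        return "fake" if "shocking" in text.lower() else "real"

    test_split = [("Shocking cure found", "fake"), ("Council approves budget", "real")]
    spot_check = [("Celebrity secretly died, insiders say", "fake"), ("Rates unchanged", "real")]
    print(accuracy(toy_model, test_split), accuracy(toy_model, spot_check))  # 1.0 0.5
    print(generalization_gap(toy_model, test_split, spot_check))  # 0.5
```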

So this attempt failed too.

Third experiment: Build the dataset around real news, not fake news

The breakthrough came from a suggestion by David that opened my eyes: the key to better accuracy might be to simplify the problem. Perhaps we should detect real news rather than fake news. Real news is easier to classify. It sticks to facts and key points, with almost no extra interpretation, and there are plenty of resources for verifying it. So I started collecting data again.

This time I sorted the news into just two categories: real and notreal. Notreal covers satire, opinion pieces, fake news and any other article that is not written in a purely factual way.
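The two-category scheme above can be sketched as a relabeling step. Only purely factual reporting becomes `real`, and everything else, including categories the annotators never anticipated, falls into `notreal`. The `factual` tag and the other category names are hypothetical annotation labels.

```python
from collections import Counter
from enum import Enum
from typing import Iterable


class Label(str, Enum):
    REAL = "real"
    NOTREAL = "notreal"


# Hypothetical annotation tag for purely factual reporting.
FACTUAL = "factual"


def to_binary(category: str) -> Label:
    """Collapse a fine-grained category into real/notreal.

    Only purely factual reporting is real. Satire, opinion, fake news,
    half-truths, pseudoscience and any unseen category are notreal,
    so the model only has to learn one well-defined class.
    """
    return Label.REAL if category == FACTUAL else Label.NOTREAL


def label_counts(categories: Iterable[str]) -> Counter:
    """Count how many articles end up in each binary class."""
    return Counter(to_binary(c) for c in categories)


if __name__ == "__main__":
    print(label_counts(["factual", "satire", "opinion", "factual", "pseudoscience"]))
```

This design choice reflects the idea behind the third experiment. The model does not need to list every kind of falsehood, because it only has to recognize what factual reporting looks like.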

This time it worked: the model reached an accuracy of over 95%.

The resulting model is called Fakebox. It gives each article a score, and a very low score suggests the article may be fake news, commentary, satire or some other kind of non-factual writing. Fakebox also provides a REST API, so it can be integrated into almost any environment, and it can be deployed with Docker.
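Calling a REST scoring service like this from Python might look roughly like the sketch below. It uses only the standard library. The base URL, the `/fakebox/check` path, the JSON field names, the 0-1 score range and the 0.3 cut-off are all assumptions for illustration, so check them against the documentation of your own deployment. The shape of the reply is also service-specific, so `send` returns the parsed JSON without interpreting it.

```python
import json
import urllib.request
from typing import Any, Dict

# Hypothetical settings: check them against your own Fakebox deployment.
BASE_URL = "http://localhost:8080"
CHECK_PATH = "/fakebox/check"  # assumed endpoint path
LOW_SCORE = 0.3                # assumed cut-off on an assumed 0-1 scale


def build_check_request(title: str, content: str, url: str = "") -> urllib.request.Request:
    """Build (but do not send) a JSON POST request for one article."""
    body = json.dumps({"title": title, "content": content, "url": url}).encode("utf-8")
    return urllib.request.Request(
        BASE_URL + CHECK_PATH,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def send(request: urllib.request.Request, timeout: float = 10.0) -> Dict[str, Any]:
    """Send the request and parse the JSON reply; its shape depends on the service."""
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.load(response)


def interpret(score: float) -> str:
    """Map a score to a coarse verdict: low scores suggest notreal content."""
    if not 0.0 <= score <= 1.0:
        raise ValueError("score outside the assumed 0-1 range")
    return "notreal" if score < LOW_SCORE else "real"
```

Building the request separately from sending it lets you inspect or log the payload without a running server.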

It still has a weakness: if an article is very short, or consists mostly of other people's opinions or quotations, Fakebox may struggle to judge whether it is true.
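A caller could guard against this weakness by flagging such articles for human review before trusting a score. This sketch is not part of Fakebox. The 150-word minimum and the 50% quotation threshold are hypothetical values chosen for illustration, and quotations are detected only by straight or curly double quotes.

```python
import re

MIN_WORDS = 150        # hypothetical minimum article length
MAX_QUOTE_RATIO = 0.5  # hypothetical maximum share of quoted text

# Text inside straight "..." or curly “...” double quotes.
QUOTE_RE = re.compile(r'"[^"]*"|“[^”]*”')


def needs_human_review(text: str) -> bool:
    """True if the article is too short or made up mostly of quotations."""
    if len(text.split()) < MIN_WORDS:
        return True
    quoted_chars = sum(len(m.group(0)) for m in QUOTE_RE.finditer(text))
    return quoted_chars / len(text) > MAX_QUOTE_RATIO


if __name__ == "__main__":
    print(needs_human_review("Breaking: a short claim."))  # True (too short)
```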

So Fakebox is not the final answer, but Aaron Edell hopes it will help with articles whose truthfulness needs to be checked.