Let me start with a digression and grumble about last night's Apple event. The launch of the education iPad barely made a ripple, hardly living up to Apple's reputation as the "Technology Spring Festival Gala". Instead, it was the "All in Blockchain" crowd who made the waves, anxiously following Nvidia's GTC 2018.

"Where is the mining card?"

Indeed, the two rumored new product lines for gaming and computing, “Turing” and “Ampere,” did not appear, and Huang Renxun, who has always been dismissive of “digital miners,” has no intention of offering any dedicated mining products.

The most credible explanations for the absence of new products are either that the GDDR6 memory intended for them could not be delivered on time because of a power outage at a Samsung wafer fab in Q1, or that a "2×2" calculation-error bug surfaced in the Titan V on the eve of the launch. That bug would also affect new product lines built on the same architecture, and its impact extends beyond the molecular dynamics program Amber.

Managing to disappoint gamers, AI practitioners, and digital miners all at once may be a kind of strength in itself. On top of that, Nvidia announced it was suspending its autonomous-driving road tests. By the end of the keynote, Nvidia's stock had fallen 9.64%.

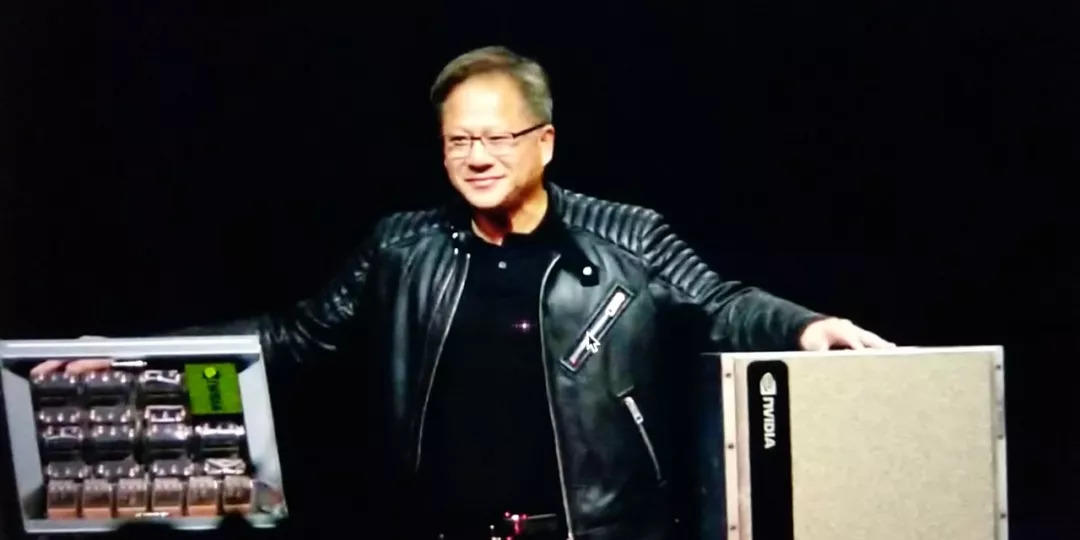

Huang Renxun appeared unfazed by the stock drop. When he unveiled the new DGX-2 supercomputer wearing his signature jacket, the official subtitles even pointed out that the jacket was brand new ^_^.

The DGX-2, which Huang called the "world's largest GPU," lives up to the "2" in its name: its performance, GPU count, computing power, and price are all double those of the DGX-1V. However, because NVIDIA also doubled the memory of a single V100, the DGX-2's total GPU memory is four times that of the DGX-1V.
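A quick worked check of the 4× figure, assuming the commonly published configurations (these numbers are not stated in the text above): the DGX-1V with 8 V100s at 16 GB each, and the DGX-2 with 16 V100s at 32 GB each. Doubling both the GPU count and the per-GPU memory multiplies the total by four:

$$
M_{\text{DGX-1V}} = 8 \times 16\ \text{GB} = 128\ \text{GB}, \qquad
M_{\text{DGX-2}} = 16 \times 32\ \text{GB} = (2 \times 8) \times (2 \times 16\ \text{GB}) = 512\ \text{GB} = 4 \times M_{\text{DGX-1V}}
$$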

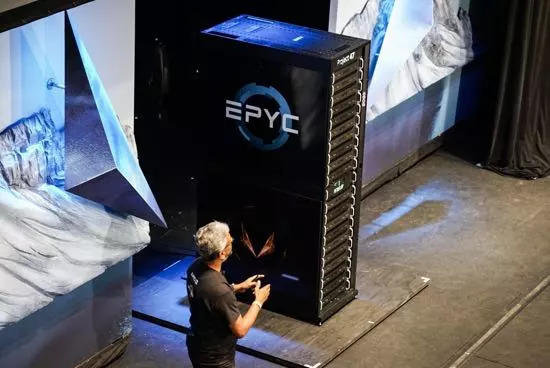

So overall, Nvidia seemed to be treading water at this conference. Still, thanks to the lead of the V100 and NVLink, even if the DGX-2 is just two of the DGX-1Vs from the first half of last year bundled together, it remains far ahead of the competition. For example, AMD's supercomputer "Project 47," unveiled at the end of last year, delivers 1 PFLOPS (one quadrillion, or 10^15, floating-point operations per second), and it looks like this:

Doesn't it look like a refrigerator? This thing draws 80-100 A of current, enough power to drive port machinery hoisting dozens of tons.

By comparison, a DGX system consumes only about as much power as a household non-inverter air conditioner. No wonder Huang Renxun boasted that "the more you buy, the more you save."

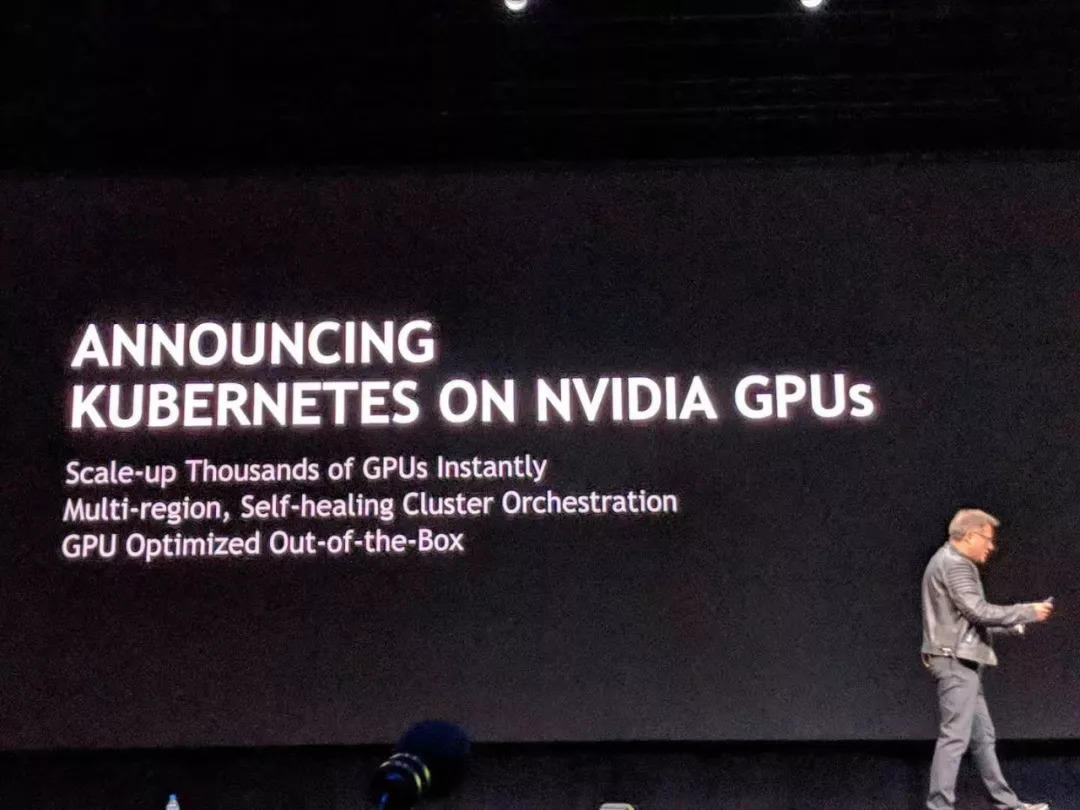

On the software side, NVIDIA introduced a new TensorRT. In addition, the official "Kubernetes on NVIDIA GPUs" package lets developers more easily manage jobs and schedule compute across GPU clusters. As NVIDIA's software ecosystem grows richer, developers' choices are effectively locked in.

Moreover, NVIDIA still holds a uniquely dominant position thanks to CUDA and cuDNN, which have hitched the entire industry to its chariot. Unless new entrants can deliver products several times faster than the Tensor Cores, commercial companies have little incentive to defect from NVIDIA.

What, you mean TPU? Where can I buy it?