Anime Style Transfer with AnimeGANv2: Online Demo Released

AnimeGANv2 recently received an update developed by community contributors: an online demo, built with Gradio and published on Hugging Face.

Visit https://huggingface.co/spaces/akhaliq/AnimeGANv2

There you can try AnimeGANv2 directly in the browser (static images only).

AnimeGAN: Turning Real-World Photos into Anime

AnimeGAN builds on CartoonGAN and proposes a more lightweight generator architecture. When it was first open-sourced in 2019, its striking results sparked widespread discussion.

The paper published with the initial release, "AnimeGAN: a novel lightweight GAN for photo animation", also proposes three new loss functions to improve the visual quality of the anime stylization.

The three loss functions are: grayscale style loss, grayscale adversarial loss, and color reconstruction loss.
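To make two of these losses concrete, here is a minimal NumPy sketch based on our reading of the paper, not on the project's code. Color reconstruction loss compares the photo and the generated image in YUV space, with L1 on the Y channel and Huber loss on U and V. Grayscale style loss compares Gram matrices of feature maps from the generated image and from a grayscale version of an anime image. The feature maps are assumed to come from a pretrained VGG network and are passed in precomputed; the function names and the Gram-matrix normalization are our own choices. The grayscale adversarial loss is omitted because it requires a trained discriminator.

```python
import numpy as np

# BT.601 RGB -> YUV matrix; rows give the Y, U and V coefficients.
RGB_TO_YUV = np.array([
    [0.299, 0.587, 0.114],
    [-0.14713, -0.28886, 0.436],
    [0.615, -0.51499, -0.10001],
])


def rgb_to_yuv(img):
    """img: float array of shape (H, W, 3), RGB channels last."""
    return img @ RGB_TO_YUV.T


def huber(x, delta=1.0):
    """Elementwise Huber penalty: quadratic near zero, linear beyond delta."""
    a = np.abs(x)
    return np.where(a <= delta, 0.5 * x ** 2, delta * (a - 0.5 * delta))


def color_reconstruction_loss(photo, generated):
    """L1 on Y plus Huber on U and on V, each averaged over pixels."""
    p, g = rgb_to_yuv(photo), rgb_to_yuv(generated)
    loss_y = np.mean(np.abs(g[..., 0] - p[..., 0]))
    loss_u = np.mean(huber(g[..., 1] - p[..., 1]))
    loss_v = np.mean(huber(g[..., 2] - p[..., 2]))
    return loss_y + loss_u + loss_v


def gram(features):
    """(H, W, C) feature map -> (C, C) Gram matrix, normalized by H*W*C."""
    h, w, c = features.shape
    f = features.reshape(h * w, c)
    return f.T @ f / (h * w * c)


def grayscale_style_loss(feat_generated, feat_gray_anime):
    """Mean absolute difference between the two Gram matrices."""
    return np.mean(np.abs(gram(feat_generated) - gram(feat_gray_anime)))


def to_grayscale_rgb(img):
    """Luma of an RGB image, repeated to 3 channels so a VGG-style net can take it."""
    y = img @ RGB_TO_YUV[0]
    return np.repeat(y[..., None], 3, axis=-1)
```

Working in YUV separates brightness (Y) from color (U, V), which is what lets the loss treat luminance and chroma differently.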

AnimeGANv2, released last September, optimizes the model and fixes several problems in the original AnimeGAN.

v2 also adds new training datasets in the styles of three anime directors: Makoto Shinkai, Hayao Miyazaki, and Satoshi Kon.

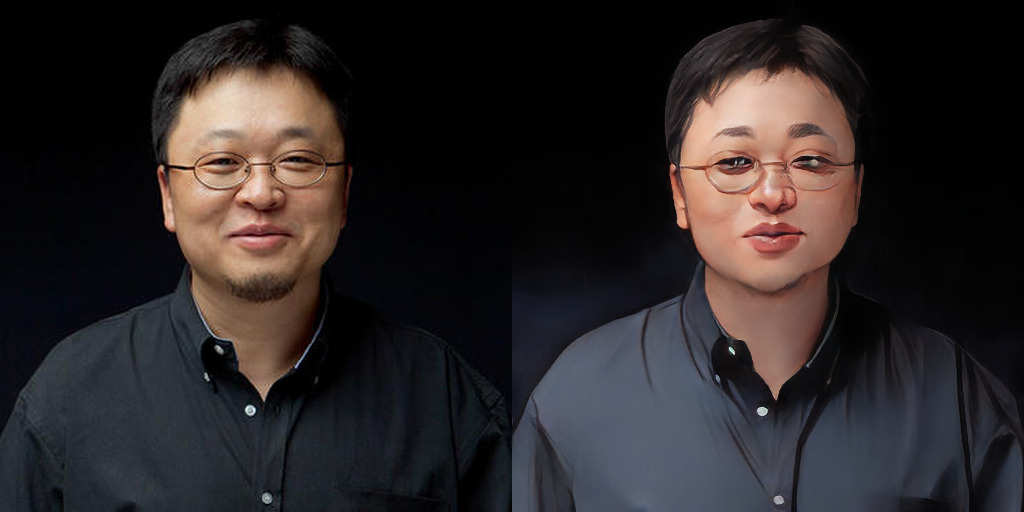

Take Elon Musk as an example. The first-generation AnimeGAN already produced impressive results, but the face came out overly pale, delicate, and sickly, like a member of a Korean boy band. By comparison, v2 looks more natural and truer to his real-life appearance.

AnimeGANv2 update highlights:

– High-frequency artifacts in the generated images have been eliminated;

– v2 is easier to train and reproduces the results reported in the paper directly;

– The generator has even fewer parameters (generator size: 8.17 MB);

– More high-quality training images have been added.
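To put the generator size quoted above (8.17, read here as megabytes) in perspective, the arithmetic below converts a checkpoint size into an approximate parameter count. It assumes every parameter is stored uncompressed as a 32-bit float and ignores file metadata; the helper name is our own. Under those assumptions the generator holds roughly 2.0 to 2.1 million parameters, depending on whether a megabyte means 10^6 or 2^20 bytes.

```python
def approx_param_count(size_mb, bytes_per_param=4, bytes_per_mb=2**20):
    """Estimate the number of parameters in a checkpoint of the given size.

    Assumes each parameter is an uncompressed float32 (4 bytes) and that
    the file contains nothing but weights.
    """
    return size_mb * bytes_per_mb / bytes_per_param


# MB read as 2**20 bytes, then as 10**6 bytes.
print(f"{approx_param_count(8.17):,.0f}")
print(f"{approx_param_count(8.17, bytes_per_mb=10**6):,.0f}")
```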

Project Information

Environment requirements (TensorFlow version)

- Python 3.6

- tensorflow-gpu, in either of these configurations:

  - 1.8.0 (Ubuntu, GPU: 1080Ti or Titan Xp, CUDA 9.0, cuDNN 7.1.3)

  - 1.15.0 (Ubuntu, GPU: 2080Ti, CUDA 10.0.130, cuDNN 7.6.0)

- opencv

- tqdm

- numpy

- glob

- argparse
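Before running the TensorFlow code, a quick script like the one below can confirm that the listed packages are importable. It is a sketch of our own, not part of the project: it maps each listed package to its import name (OpenCV is imported as `cv2`, and `tensorflow-gpu` 1.x as `tensorflow`), and it only checks that each module can be found, not that its version matches the configurations above. Note that `glob` and `argparse` ship with the Python standard library.

```python
import importlib.util
import sys

# Import name -> package name as listed in the requirements.
REQUIRED = {
    "tensorflow": "tensorflow-gpu",
    "cv2": "opencv",
    "tqdm": "tqdm",
    "numpy": "numpy",
    "glob": "glob (standard library)",
    "argparse": "argparse (standard library)",
}


def check_environment(import_names=REQUIRED):
    """Return {import_name: True/False} depending on whether each module can be found."""
    return {name: importlib.util.find_spec(name) is not None for name in import_names}


if __name__ == "__main__":
    print("Python", sys.version.split()[0], "(the project lists Python 3.6)")
    for name, found in check_environment().items():
        status = "found" if found else "MISSING"
        print(f"{REQUIRED[name]:30s} {status}")
```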

PyTorch Implementation

Weight conversion

git clone https://github.com/TachibanaYoshino/AnimeGANv2

python convert_weights.py

Inference

python test.py --input_dir [image_folder_path] --device [cpu/cuda]
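The `convert_weights.py` step ports the original TensorFlow checkpoint to PyTorch. We have not reproduced that script; as a sketch of the bookkeeping such a port involves, the hypothetical NumPy helpers below rearrange convolution kernels from TensorFlow's layout to the one `torch.nn.Conv2d` expects. Depthwise kernels get their own helper because lightweight generators commonly use depthwise convolutions; whether and how the actual script handles them is an assumption on our part.

```python
import numpy as np


def tf_conv_kernel_to_torch(kernel_hwio):
    """Regular convolution: TensorFlow stores kernels as
    (height, width, in_channels, out_channels); torch.nn.Conv2d expects
    (out_channels, in_channels, height, width)."""
    return np.ascontiguousarray(np.transpose(kernel_hwio, (3, 2, 0, 1)))


def tf_depthwise_kernel_to_torch(kernel_hwcm):
    """Depthwise convolution: TensorFlow stores kernels as
    (height, width, in_channels, channel_multiplier); a torch.nn.Conv2d with
    groups=in_channels expects (in_channels * channel_multiplier, 1, height, width).
    Output channel k * channel_multiplier + q comes from input channel k in both layouts."""
    h, w, c, m = kernel_hwcm.shape
    return np.transpose(kernel_hwcm, (2, 3, 0, 1)).reshape(c * m, 1, h, w)


# Example with random kernels (shapes only; no real checkpoint is loaded).
rng = np.random.default_rng(0)
conv = rng.standard_normal((3, 3, 32, 64))
dw = rng.standard_normal((3, 3, 64, 1))
print(tf_conv_kernel_to_torch(conv).shape)      # (64, 32, 3, 3)
print(tf_depthwise_kernel_to_torch(dw).shape)   # (64, 1, 3, 3)
```

In a real port, each converted array would then be copied into the matching PyTorch parameter (for example via `torch.from_numpy`); biases and normalization parameters are 1-D and need no transpose.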

Project GitHub

https://github.com/TachibanaYoshino/AnimeGANv2

Online Demo

https://huggingface.co/spaces/akhaliq/AnimeGANv2

Colab alternative accessible from mainland China (OpenBayes)

https://openbayes.com/console/open-tutorials/containers/pROHrRgKItf